International Journal of Web Engineering

2016; 5(1): 1-9

doi:10.5923/j.web.20160501.01

Touch Gesture and Pupil Reaction on Mobile Terminal to Find Occurrences of Interested Items in Web Browsing

Shohe Ito1, Yusuke Kajiwara1, Fumiko Harada2, Hiromitsu Shimakawa1

1Ritsumeikan University Graduate School of Information Science and Engineering, Kusatsu City, Japan

2Connect Dot Ltd., Kyoto City, Japan

Correspondence to: Shohe Ito, Ritsumeikan University Graduate School of Information Science and Engineering, Kusatsu City, Japan.


Copyright © 2016 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Mobile users often browse web pages on mobile terminals, and they frequently come across new items of interest while doing so. However, because existing methods identify users' interests from the history of past searches and recommend services on that basis, they have difficulty estimating new interests and pinpointing the specific items that interest a user. This paper proposes a method that pinpoints these hidden interested items from the user's touch operations and pupil reactions. The part of a web page the user is looking at is regarded as an interested item when both the touch operations and the pupil reactions show a response associated with interest. The method can follow users' current interests, because touch operations and pupil reactions reflect interest as it arises. Moreover, combining touch operations and pupil reactions improves the precision of the estimation, because each signal compensates for the noise in the other. Once interested items have been estimated, users can enjoy services tailored to their pinpoint, current interests. We estimated interested items with the proposed method and calculated the precision, the recall, and the F-measure for every subject. The mean precision, recall, and F-measure are 0.850, 0.534, and 0.603, respectively. In addition, we discuss how to improve the proposed method from the aspects of touch gestures and pupil reactions.

Keywords: Recommendation, Interest, Mobile Terminal, Touch Gesture, Pupil Movement

Cite this paper: Shohe Ito, Yusuke Kajiwara, Fumiko Harada, Hiromitsu Shimakawa, Touch Gesture and Pupil Reaction on Mobile Terminal to Find Occurrences of Interested Items in Web Browsing, International Journal of Web Engineering, Vol. 5 No. 1, 2016, pp. 1-9. doi: 10.5923/j.web.20160501.01.

1. Introduction

- More and more people use mobile terminals such as smartphones and tablets [1]. Besides making calls and sending mail, these users routinely browse web pages [2]. Many web pages, however, carry a large amount of information [3]; examples include e-commerce sites [4], news sites [5], restaurant guides [6], and picture sharing sites [7].

This paper aims to provide mobile users with services based on their interests while they browse such large web pages. For example, suppose a mobile user browses a web page about the London 2012 Olympics, "London 2012: Revisiting BBC Sport's most-viewed videos" [8]. This page shows 50 impressive scenes from various sports at the 2012 Olympics. Such a page contains many attractive items that interest many users. Suppose a particular user notices the title "The perils of courtside commentating" among the many paragraphs on the page, and it sparks a new interest. Through this process, "The perils of courtside commentating" turns from an attractive item into an interested item for this user. He may want more information about the interested item described in the paragraph. If interested items can be identified, we can provide users with services based on their interests, such as recommending other articles related to the interested items.

Many methods estimate the interests of users browsing web pages, but they base the estimation on records of the web pages the users visit [9-11]. These existing methods cannot pinpoint interested items within a web page, because they regard the whole web page as the user's interest. Under these methods, the user in the previous example is assumed to be interested in all 50 scenes. The paragraphs outside his interest prevent the methods from identifying his interest accurately.

Furthermore, these existing methods cannot handle new interests that arise suddenly, because they infer the user's interests from records of visited web pages. Suppose the user tends to browse web pages about track and field events because he likes them. Methods based on browsing logs infer from these records that he prefers track and field. The paragraph in the example, however, is about basketball. It is difficult for the existing methods to recognize the new interest that suddenly arises for this basketball paragraph.

This paper proposes a method to estimate new items that interest the user from touch operations and pupil reactions. When users encounter interested items during web browsing, they perform specific touch operations to examine the items carefully. In addition, human pupils dilate when people see objects that interest them. We identify the touch operations and pupil reactions associated with the occurrence of users' interests. Identifying the moments when users encounter interested items allows us to pinpoint their interests. Since touch operations and pupil reactions reveal interest immediately, the method can handle interests as they arise. Furthermore, combining touch operations and pupil reactions lets each signal compensate for the noise in the other, which improves the precision of the estimation. Once the pinpoint, current interested items have been estimated, services such as recommending relevant information can be provided. This paper shows the results of estimation based on data of users' web browsing.

2. Related Works

- Typical methods of interest estimation are surveyed in [12], including collaborative, content-based, and demographic-based recommendation; [12] also proposes combining these methods. Recommendation systems for mobile services are presented in [13-17]. The systems in [13-16] recommend mobile services using users' contexts recorded by mobile terminals, such as the day of the week, time, behavior, battery level, location, and users' interests. These contexts are collected by sensors on the mobile terminals. Some of these works use records of other mobile users as training data for recommending services. Reference [17] proposes a movie recommendation method for mobile terminals based on genre correlation; it uses GPS to recommend nearby movie theaters and thus also uses part of the users' context. However, none of these methods can pinpoint interested items within web pages. Additionally, these methods have difficulty handling new, sudden interests because they rely on records of the web pages users have browsed.

3. Interested Item Estimation Based on Pupil Reaction and Touch Gesture

3.1. Combination of Pupil Reaction and Touch Gesture

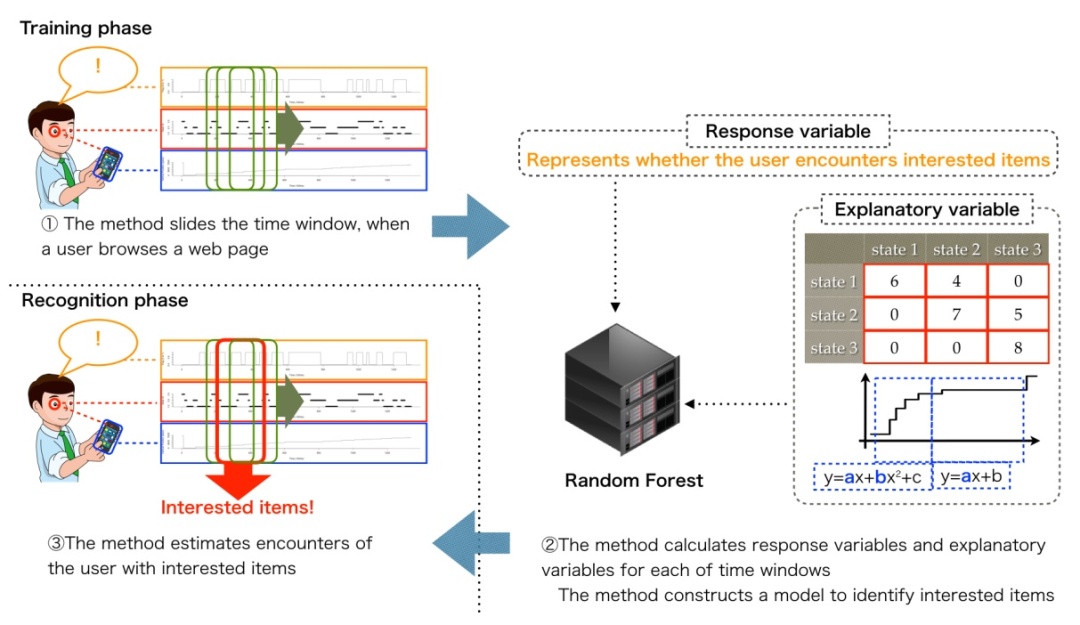

- We propose a method that estimates the interested items users encounter, based on touch gestures and pupil reactions. In designing the method, we consider not only how users browse web pages on mobile terminals but also the process by which users encounter interested items. We therefore selected touch gestures and pupil reactions as signals correlated with users' interests, in order to estimate the exact moment at which users encounter interested items. Here, touch gestures means touch operations on the screen of a mobile terminal. Touch gestures and pupil reactions can detect the exact moment at which users encounter specific, pinpoint items of interest. When the proposed method estimates that a user has encountered an interested item, it identifies the part of the web page shown on the screen of the mobile terminal at that moment, and we regard this part as the interested item. Once an interested item is identified, services based on it can be provided. Such services might include recommending web pages related to the interested item, showing the meanings of topical words in the interested item, displaying advertisements based on it, and so on. Estimating interest from touch gestures alone or from pupil reactions alone yields lower precision because of the noise present in each signal. Estimation using both can compensate for each signal's noise. We therefore expect the precision of the proposed method to be higher than that of a method using only touch gestures or only pupil reactions.

Figure 1 shows the outline of the proposed method, which consists of a phase that learns models and a phase that identifies interested items. First, the method records encounters with interested items, pupil reactions, and touch gestures while a user browses a web page. A hidden Markov model divides the pupil reactions into states. A time window slides over the records of encountered items, the hidden Markov model states, and the touch gestures, and the records are collected for each time window. Second, response variables and explanatory variables are extracted from each time window. The method applies the random forest algorithm to the data composed of these variables to build a model that estimates interested items. Finally, this model is used in the phase that identifies interested items.

| Figure 1. Outline of Proposed Method |
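The windowing step in the outline can be sketched as follows. This is a minimal illustration, assuming that the touch positions, the hidden Markov model pupil states, and the pedal flags have already been synchronized into one record per 100 ms sample; the record layout, the window width, and the step are hypothetical, since they are not given here.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def sliding_windows(samples: Sequence[T], width: int, step: int) -> List[List[T]]:
    """Cut a time series sampled every 100 ms into overlapping time windows.

    `width` and `step` are counted in samples; both values are hypothetical.
    Trailing samples that do not fill a whole window are dropped.
    """
    if width <= 0 or step <= 0:
        raise ValueError("width and step must be positive")
    return [list(samples[start:start + width])
            for start in range(0, len(samples) - width + 1, step)]


# Hypothetical synchronized record: (scroll position in px, HMM pupil state, pedal pressed)
Record = Tuple[int, int, bool]

records: List[Record] = [
    (0, 0, False), (40, 0, False), (80, 1, True), (90, 1, True), (92, 0, True),
]
windows = sliding_windows(records, width=3, step=1)
# windows[0] covers samples 0-2, windows[1] samples 1-3, windows[2] samples 2-4
```

Each window would then yield one row of explanatory variables and one response variable for the classifier.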

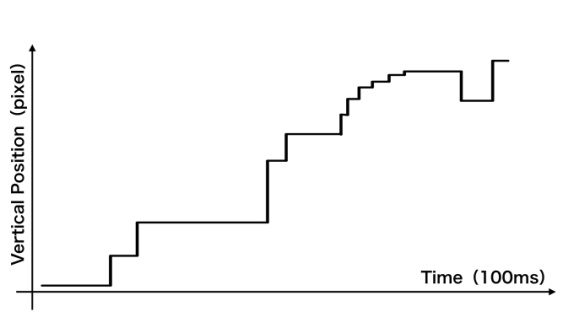

3.2. Interested Item Estimation by Touch Gestures

- A method that estimates interested items using touch gestures alone was developed in [18], which showed that touch gestures with user-specific patterns appear when users encounter interested items while browsing a web page. While browsing, the user changes the area of the web page displayed on the mobile terminal screen with touch gestures. The history of touch gestures can therefore be expressed as a graph of the displayed area over time. We refer to this graph as a gesture trail. Figure 2 shows a gesture trail. The horizontal axis of the graph shows time. The vertical axis is the vertical position in the web page the user is currently looking at, defined as the number of pixels from the top of the page to the top of the area displayed on the screen. Gesture trails such as the one in Figure 2 record the upward and downward swipes a user makes while reading through a web page. The position of the displayed area increases as the user reads further down the page, so the gesture trail slopes upward to the right. Conversely, the position decreases when the user goes back to a part of the page he has already seen, so the gesture trail slopes downward to the right.

| Figure 2. Gesture Trail |

| Figure 3. Gesture Patterns Proposed in [18] |
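The slopes of a gesture trail described above can be read off with finite differences. The sketch below is only an illustration: it assumes positions sampled every 100 ms (as in the browser of Section 4.2), and the "still" threshold `eps` is a hypothetical value, not one taken from [18].

```python
from typing import List

SAMPLE_PERIOD_MS = 100  # the browser logs the displayed position every 100 ms


def trail_velocity(positions: List[int]) -> List[float]:
    """Scroll velocity in px/s between consecutive samples of a gesture trail."""
    return [(b - a) * 1000 / SAMPLE_PERIOD_MS for a, b in zip(positions, positions[1:])]


def trail_directions(positions: List[int], eps: float = 1.0) -> List[str]:
    """Label each step of the trail.

    'forward': position grows, the user reads further down (right-upward slope)
    'back':    position shrinks, the user returns to an earlier part (right-downward slope)
    'still':   |velocity| <= eps px/s (eps is a hypothetical threshold)
    """
    labels = []
    for v in trail_velocity(positions):
        if v > eps:
            labels.append("forward")
        elif v < -eps:
            labels.append("back")
        else:
            labels.append("still")
    return labels


trail = [0, 50, 100, 100, 60]   # top of the displayed area in px, one sample per 100 ms
print(trail_directions(trail))  # ['forward', 'forward', 'still', 'back']
```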

3.3. Noise Reduction

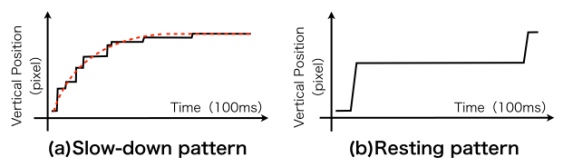

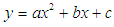

- The interested item estimation method of [18] does not deal with gesture trails that resemble its gesture patterns for interested items but occur while the user is reading a part outside his interest. Such gesture trails degrade the precision of interested item estimation. They appear when users look away while browsing a web page; personal habits and operation errors on mobile terminals may also cause them. This research uses both touch gestures and pupil reactions to prevent this degradation of precision. Pupil reactions related to users' interests let us determine whether a part of a gesture trail is a gesture pattern associated with an interested item.

In addition, this research introduces a combined gesture pattern, the sequence of a Slow-Down pattern followed by a Resting pattern, to detect encounters of users with interested items. We call this combined pattern the Slow-Down-to-Resting pattern; Figure 4 shows it. We regard a user as having encountered an interested item during a time duration if the Slow-Down-to-Resting pattern is detected in the gesture trail during that duration. The Slow-Down-to-Resting pattern reflects the following series of behaviors. First, a user who is reading on through a web page slows down his scrolling when he encounters an interested item. Second, the user reads the interested item carefully. When this series occurs, the area the user encountered is highly likely to be an interested item. Using the Slow-Down-to-Resting pattern therefore lets us identify interested items more accurately. Slow-Down patterns and Resting patterns are approximated with the following functions.

| (1) |

| (2) |

| Figure 4. Slow-Down-to-Resting Pattern |
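Because the exact forms of Eqs. (1) and (2) are not recoverable from this text, the following sketch assumes only what Section 5.1 states: the Slow-Down part is approximated by a quadratic function and the Resting part by a linear one. It fits both by least squares with `numpy.polyfit`, takes the vertex time of the parabola as a hypothetical "position" parameter, and flags the pattern with a hypothetical rule (a concave, decelerating Slow-Down part followed by a nearly flat Resting part). The split index and the slope threshold are assumptions, not values from the paper.

```python
import numpy as np


def fit_slow_down_to_resting(t, y, split, rest_slope_max=20.0):
    """Fit a quadratic to t[:split] (Slow-Down) and a line to t[split:] (Resting).

    t: sample times in s, y: displayed-area position in px.
    rest_slope_max (px/s) is a hypothetical threshold for "resting".
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    a, b, _c = np.polyfit(t[:split], y[:split], 2)            # y ~ a t^2 + b t + c
    slope, _intercept = np.polyfit(t[split:], y[split:], 1)   # y ~ slope t + intercept
    vertex_t = -b / (2 * a) if a != 0 else float("nan")       # time at which scrolling stops
    # Decelerating forward scroll (concave parabola) followed by an almost flat line
    is_pattern = bool(a < 0 and abs(slope) <= rest_slope_max)
    return {"a": float(a), "vertex_t": float(vertex_t),
            "rest_slope": float(slope), "is_pattern": is_pattern}


t = np.arange(11) * 0.1                          # 0.0 .. 1.0 s in 100 ms steps
slow = 1000 * t[:6] - 1000 * t[:6] ** 2          # decelerates to a stop at t = 0.5 s
rest = np.full(5, 250.0)                         # then stays at 250 px
feat = fit_slow_down_to_resting(t, np.concatenate([slow, rest]), split=6)
```

A trail that keeps scrolling at a constant speed gives a Resting slope far above the threshold and is not flagged.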

3.4. Pupil Reaction in Reading Interested Items

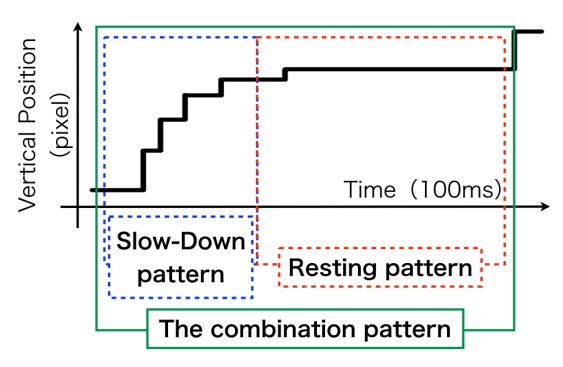

- This research considers not only touch gestures but also pupil reactions to estimate interested items on mobile terminals. Human pupils inherently dilate when people encounter objects that interest them. Identifying pupil reactions related to users' interests therefore leads to the estimation of interested items in web pages. Mobile terminals have an in-camera on the screen side, which could capture pupil reactions without burdening users. However, current in-cameras do not perform well enough to capture pupil reactions. There are several approaches to capturing pupil reactions [20], and improvements in in-cameras are expected to allow the proposed method to be implemented with them.

Figure 5 (a) shows the time zones in which a user encounters interested items. The horizontal axis represents time in steps of 100 ms. The vertical axis shows whether the subject encountered an interested item: a value of one means the user is pressing a foot pedal to indicate an encounter with an interested item. The graph is generated from actual data obtained in the experiment explained in the following section, in which a user presses a foot pedal when he encounters interested items while browsing a web page. Figure 5 (b) shows the pupil reactions; its vertical axis is the pupil radius in pixels. The two graphs show that the user's pupil dilates before he encounters an attractive area. After dilating, the pupil constricts within a short period, and it then appears to return to its former stable condition.

| Figure 5. Pupil Reactions with Interested Items |
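The method divides pupil reactions into states with a hidden Markov model. The sketch below shows only the decoding step: a two-state Gaussian hidden Markov model decoded with the Viterbi algorithm in log space. The state meanings ("stable" and "dilated"), means, standard deviations, and transition probabilities are hypothetical, hand-set values; in practice they would be estimated from each subject's radius series (for example with the Baum-Welch algorithm), and the paper does not state how its model is trained.

```python
import math
from typing import List, Sequence


def gaussian_logpdf(x: float, mean: float, sd: float) -> float:
    """Log density of N(mean, sd^2) at x."""
    return -0.5 * math.log(2 * math.pi * sd * sd) - (x - mean) ** 2 / (2 * sd * sd)


def viterbi_gaussian(obs: Sequence[float], start: Sequence[float],
                     trans: Sequence[Sequence[float]],
                     means: Sequence[float], sds: Sequence[float]) -> List[int]:
    """Most likely hidden-state sequence of a Gaussian-emission HMM (log-space Viterbi)."""
    n = len(means)
    log_v = [math.log(start[s]) + gaussian_logpdf(obs[0], means[s], sds[s]) for s in range(n)]
    back: List[List[int]] = []
    for x in obs[1:]:
        ptrs, new_v = [], []
        for s in range(n):
            # best predecessor state for reaching state s at this sample
            prev = max(range(n), key=lambda p: log_v[p] + math.log(trans[p][s]))
            ptrs.append(prev)
            new_v.append(log_v[prev] + math.log(trans[prev][s])
                         + gaussian_logpdf(x, means[s], sds[s]))
        back.append(ptrs)
        log_v = new_v
    state = max(range(n), key=lambda s: log_v[s])
    path = [state]
    for ptrs in reversed(back):  # follow the back-pointers from the last sample
        state = ptrs[state]
        path.append(state)
    return path[::-1]


# Hypothetical parameters: state 0 = stable pupil (~20 px), state 1 = dilated (~24 px)
radii = [20.0, 20.2, 19.9, 24.1, 23.8, 24.0, 20.1, 20.0]  # pupil radius every 100 ms
path = viterbi_gaussian(radii, start=[0.5, 0.5],
                        trans=[[0.9, 0.1], [0.1, 0.9]],
                        means=[20.0, 24.0], sds=[1.0, 1.0])
print(path)  # [0, 0, 0, 1, 1, 1, 0, 0]
```

Using more states, as in Section 5.2, only changes the lengths of `start`, `trans`, `means`, and `sds`.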

4. Experiment to Evaluate the Proposed Method

4.1. Outline of Experiment and Evaluation

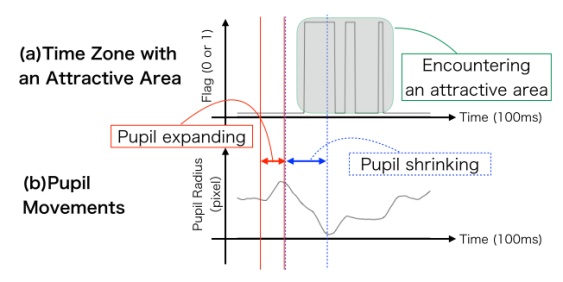

- We conducted an experiment in which we recorded touch gestures, videos of the pupils, and encounters with interested items while subjects browsed web pages. Using these records, we evaluated how accurately the proposed method estimates interested items. Four subjects took part in the experiment as follows.

1. We explained the outline of the experiment and gave each subject a task: to summarize in a document how to grow certain farm products.
2. The subject browsed a selected web page describing how to grow the farm products. While the subject browsed the page, we recorded touch gestures and a video of his pupil.
3. The subject indicated the time zones in which he encountered interested items by stepping on a foot pedal. He stepped on the pedal at the moment he encountered an interested item and kept it pressed while reading the item. The times at which the pedal was pressed were recorded automatically.

After the experiment, we measured the time series of the subjects' pupil radii from the videos. We used leave-one-out cross-validation to evaluate the proposed method. Figure 6 shows how each subject's records are divided into test data and training data. A time window slides over the records collected while a subject browses a web page, and the record of one time window is taken as the test data. The time window likewise slides over the records outside the test data, and all of these records serve as training data. The proposed method classifies whether each test record shows an encounter with an interested item, and from these results we calculate the precision, the recall, and the F-measure.
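The leave-one-out procedure over time windows can be sketched generically. In this minimal sketch the classifier is pluggable; a 1-nearest-neighbour rule stands in for the random forest only to keep the example dependency-free (a library model such as scikit-learn's `RandomForestClassifier` could be plugged in instead). The feature values and labels are hypothetical.

```python
from typing import Callable, Iterator, List, Sequence, Tuple, TypeVar

X = TypeVar("X")
Y = TypeVar("Y")
M = TypeVar("M")


def leave_one_out(n: int) -> Iterator[Tuple[int, List[int]]]:
    """Yield (test index, training indices), holding out each window once."""
    for test in range(n):
        yield test, [i for i in range(n) if i != test]


def loo_predict(xs: Sequence[X], ys: Sequence[Y],
                fit: Callable[[List[X], List[Y]], M],
                predict: Callable[[M, X], Y]) -> List[Y]:
    """Predict every window with a model trained on all the other windows."""
    preds = []
    for test, train in leave_one_out(len(xs)):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        preds.append(predict(model, xs[test]))
    return preds


# Hypothetical stand-in classifier (1-nearest neighbour) in place of the random forest
def fit_1nn(xs, ys):
    return list(zip(xs, ys))


def predict_1nn(model, x):
    return min(model, key=lambda pair: sum((a - b) ** 2 for a, b in zip(pair[0], x)))[1]


features = [[0.0], [0.1], [5.0], [5.2]]  # one hypothetical feature vector per time window
labels = [False, False, True, True]      # pedal-derived response variable
print(loo_predict(features, labels, fit_1nn, predict_1nn))  # [False, False, True, True]
```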

| Figure 6. Division into Test Data and Training Data |

4.2. Recording Touch Gesture

- We used the tablet terminal "ASUS MeMO Pad 8 ME581C" in the experiment. It runs Android 4.4.2 and has an 8-inch screen. We attached an anti-glare film to the screen to reduce its brightness, so that screen brightness would not affect pupil reactions. We implemented a web browser application to record touch gestures. When a subject launches the application, it shows the web page we selected. While the subject browses the page, the application records the position on the page the subject is viewing every 100 ms.

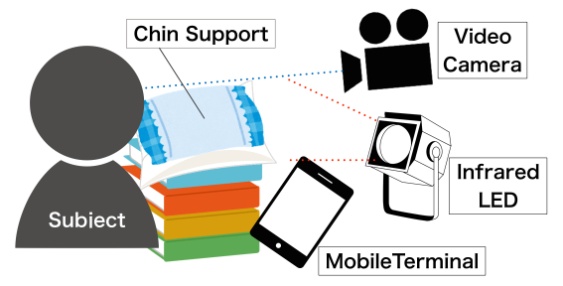

4.3. Recording Video of Pupil

- Figure 7 shows the setup for recording videos of pupils. A subject rests his chin on a chin support while browsing a web page. The chin support keeps the subject's face from moving, which stabilizes the video of the pupil. In addition, people using mobile terminals usually lean forward because of lighting conditions or their eyesight. The chin support lets the subject reproduce the posture he takes while browsing web pages, and the weight of his head on the support keeps his face still. Because it is difficult to film pupils with the in-cameras of current mobile terminals, we filmed the pupils under infrared light, which makes them easy to identify [21], [22], using a Canon iVIS FS10 camera. An infrared filter on the camera blocks visible light; the filter we used is "FUJIFILM IR-76 7.5x7.5". With the filter attached, we illuminated the area around the subject's eyes with infrared light from 56 infrared LEDs.

| Figure 7. Facility to Record Pupil Videos |

4.4. Recording when Subjects Encountered Interested Items

- In the experiment, subjects use a foot pedal to indicate the time zones in which they encounter interested items. Because the pedal is operated by foot, subjects can indicate these time zones without interfering with their touch gestures. Subjects step on the pedal when an interested item appears and keep it pressed while they read the item. The time zones during which the pedal is pressed are recorded automatically. The proposed method obtains the pedal records for every time window. The response variable of the method is whether the records of a time window show that the subject encountered an interested item. The method finds the maximum count of pedal presses recorded in any time window. If the count in a time window exceeds half of this maximum, we regard the time window as one in which the subject encountered an interested item.
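The labeling rule above can be written in a few lines. This sketch assumes that each 100 ms sample with the pedal pressed counts once and that the maximum is taken over all time windows of one subject; both are interpretations, since the text does not spell them out.

```python
from typing import List, Sequence


def label_windows(pedal_windows: Sequence[Sequence[bool]]) -> List[bool]:
    """Response variable per window: True if its pressed count exceeds half the maximum."""
    counts = [sum(1 for pressed in window if pressed) for window in pedal_windows]
    peak = max(counts, default=0)  # assumed: maximum over all windows of one subject
    return [count > peak / 2 for count in counts]


windows = [
    [False, False, False],
    [True, False, False],
    [True, True, False],
    [True, True, True],
]
print(label_windows(windows))  # [False, False, True, True]
```

With counts 0, 1, 2, 3 the threshold is 1.5, so only the last two windows are labeled as encounters.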

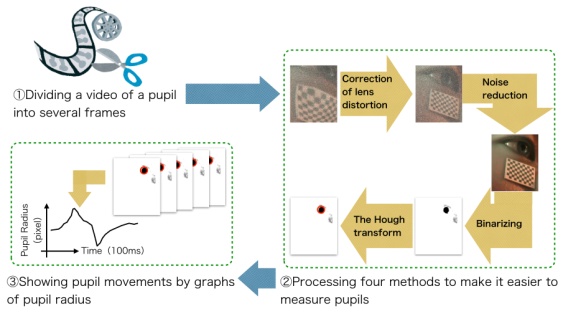

4.5. Measurement of Pupil Radius

- After the experiment, we generated time series of pupil radii from the videos. Figure 8 shows how the time series of pupil radii is measured from a video of a pupil. First, the video is split into frames. Second, each frame is processed so that the pupil radius is easy to measure. Each frame contains the lens distortion of the camera, which we correct using Zhang's method [23]. The frames also contain noise caused by the infrared light, which we remove with a bilateral filter. We then binarize the frame and extract the pupil region, in which the edge of the pupil is visible. Finally, we apply the Hough transform to the frame and take the radius of the circle it extracts as the pupil radius. The graph of pupil radii over time shows the pupil reactions, and we use it to analyze them.

| Figure 8. How to Measure Pupil Radius |
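The final measurement step can be illustrated with a small circular Hough transform. In practice OpenCV offers the whole chain (`cv2.calibrateCamera` for calibration in the style of Zhang's method, `cv2.undistort`, `cv2.bilateralFilter`, `cv2.HoughCircles`); the NumPy sketch below instead spells out the voting so the mechanism is visible. It assumes the frame is already undistorted and denoised, uses the boundary of the binarized pupil as the edge map, and runs on a synthetic disc; the threshold, radius range, and angle count are hypothetical settings.

```python
import numpy as np


def pupil_circle_hough(gray, threshold, r_min, r_max, n_angles=120):
    """Return (center_y, center_x, radius) of the pupil in a grayscale frame.

    Assumes distortion correction and denoising were already applied.
    `threshold`, the radius range and `n_angles` are hypothetical settings.
    """
    mask = gray < threshold  # under infrared light the pupil is the darkest region
    # Boundary pixels: inside the mask with at least one 4-neighbour outside it
    p = np.pad(mask, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    ys, xs = np.nonzero(mask & ~interior)

    h, w = gray.shape
    radii = np.arange(r_min, r_max + 1)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    acc = np.zeros((len(radii), h, w), dtype=np.int64)
    for k, r in enumerate(radii):
        # every edge pixel votes for all candidate centres lying r pixels away from it
        cy = np.rint(ys[:, None] - r * np.sin(thetas)[None, :]).astype(int).ravel()
        cx = np.rint(xs[:, None] - r * np.cos(thetas)[None, :]).astype(int).ravel()
        inside = (cy >= 0) & (cy < h) & (cx >= 0) & (cx < w)
        np.add.at(acc[k], (cy[inside], cx[inside]), 1)
    k, cy0, cx0 = np.unravel_index(np.argmax(acc), acc.shape)
    return int(cy0), int(cx0), int(radii[k])


# Synthetic frame: bright background with a dark disc of radius 12 px centred at (30, 30)
yy, xx = np.mgrid[:60, :60]
frame = np.full((60, 60), 200, dtype=np.uint8)
frame[(yy - 30) ** 2 + (xx - 30) ** 2 <= 12 ** 2] = 30
cy, cx, r = pupil_circle_hough(frame, threshold=100, r_min=5, r_max=20)
```

Because boundary pixels of a discrete disc lie slightly inside the ideal circle, the detected radius can be off by about one pixel.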

4.6. Result of Evaluation

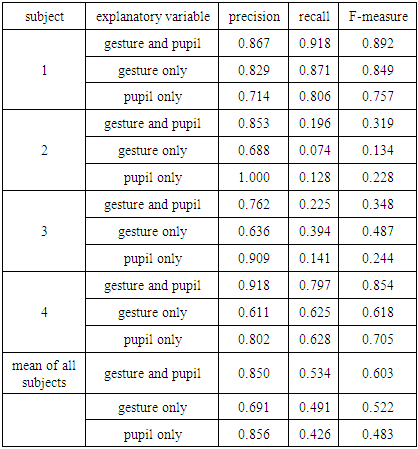

- We used the proposed method to estimate when subjects encountered interested items. Table 1 shows the resulting precision, recall, and F-measure. In this evaluation, we estimated interested items with three sets of explanatory variables: those of both touch gestures and pupil reactions, those of touch gestures only, and those of pupil reactions only. The proposed method corresponds to the set using the explanatory variables of both touch gestures and pupil reactions.
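For reference, the three measures can be computed from window-level predictions as follows, treating "encountered an interested item" as the positive class. This is a generic sketch of the standard definitions; the labels below are toy values, not data from the experiment.

```python
from typing import Sequence, Tuple


def precision_recall_f(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Tuple[float, float, float]:
    """Precision, recall and F-measure for the positive class (an encounter)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


y_true = [True, True, True, False, False]
y_pred = [True, True, False, True, False]
p, r, f = precision_recall_f(y_true, y_pred)  # TP = 2, FP = 1, FN = 1
```

As a consistency check on the reported means: the F-measure of the mean precision and recall would be 2 × 0.850 × 0.534 / (0.850 + 0.534) ≈ 0.656, whereas the reported mean F-measure is 0.603. This is consistent with the F-measure being computed per subject and then averaged, since the mean of per-subject F-measures cannot exceed the F-measure of the mean precision and recall.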


5. Discussion for Improvement

- According to Table 1, for several subjects the precision, the recall, and the F-measure are lower when the method uses the explanatory variables of both touch gestures and pupil reactions than when it uses only one of them. In the following sections, we discuss how to improve these values from the aspects of touch gestures and pupil reactions.

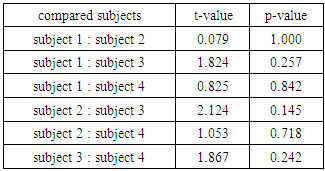

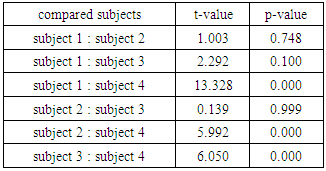

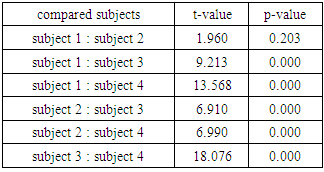

5.1. Parameter of Slow-Down-to-Resting Pattern

- According to Table 1, for some subjects the precision with touch gestures only is lower than the precision with pupil reactions only, and the precision with both touch gestures and pupil reactions is lower than the precision with either one alone. Furthermore, for these subjects the precision with touch gestures only is extremely low, and this low precision drags down their F-measure. Subjects 2 and 3 are such subjects. We therefore compared the distributions of the touch gesture parameters of subjects 1, 2, 3, and 4. We use three parameters as the explanatory variables of touch gestures, and Tables 2-4 show the comparisons of the distribution of each parameter among the subjects, made with the Steel-Dwass test. Using Tables 2-4, we compare subjects 2 and 3, whose evaluation results are worse, with subjects 1 and 4. Tables 3 and 4 show significant differences between distributions. Table 3 compares the distributions of the parameter of the Slow-Down part of the Slow-Down-to-Resting pattern, which represents the position of the quadratic function approximating the Slow-Down pattern in a time window. Table 4 compares the distributions of the parameter of the Resting part, which represents the slope of the linear function approximating the Resting pattern. Both tables show that the distributions of these two parameters for subject 2 differ from those for subject 4. Likewise, the distributions for subject 3 differ from those for subject 4. Consequently, the touch gestures of subjects 2 and 3 may differ from those of subject 4. On the other hand, according to Table 3, the distributions of subjects 2 and 3 do not differ from those of subject 1, and according to Table 4, only subject 3 differs from subject 1. Thus, the touch gestures of subjects 2 and 3 are largely similar to those of subject 1.

The evaluations of subjects 2 and 3 are poor even though their touch gestures are similar to those of subject 1, whose evaluation is good. For this reason, we suppose that the Slow-Down-to-Resting pattern correlates only weakly with the interests of subjects 2 and 3, and we suspect that other gesture patterns occur when they encounter interested items. We need to find correlations between user interests and gesture patterns other than the Slow-Down-to-Resting pattern. Such correlations should improve the performance of estimating interested items with touch gestures.
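The Steel-Dwass comparison used for Tables 2-4 can be sketched as follows. For each pair of groups the two samples are ranked together (ties get mid-ranks) and the rank sum is standardized with its tie-corrected variance. This sketch computes only the pairwise statistics; under the usual decision rule a pair differs at level α when |t| exceeds q(α; k, ∞)/√2, where q is the upper quantile of the studentized range distribution for k groups (k = 4 subjects here), available for example as `scipy.stats.studentized_range` in recent SciPy versions. The parameter values below are toy data, not the paper's.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, ties receiving the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def steel_dwass_t(groups: Dict[str, Sequence[float]]) -> Dict[Tuple[str, str], float]:
    """Pairwise Steel-Dwass statistics t_ab (ranks recomputed within each pair)."""
    stats = {}
    for a, b in combinations(sorted(groups), 2):
        xa, xb = list(groups[a]), list(groups[b])
        na, nb = len(xa), len(xb)
        n = na + nb
        r = midranks(xa + xb)
        rank_sum_a = sum(r[:na])
        expected = na * (n + 1) / 2
        # tie-corrected variance of the rank sum of group a
        var = na * nb / (n * (n - 1)) * (sum(v * v for v in r) - n * (n + 1) ** 2 / 4)
        stats[(a, b)] = (rank_sum_a - expected) / math.sqrt(var)
    return stats


params = {"subject1": [1.0, 2.0, 3.0], "subject2": [4.0, 5.0, 6.0]}  # toy parameter values
t = steel_dwass_t(params)[("subject1", "subject2")]  # -4.5 / sqrt(5.25), about -1.96
```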


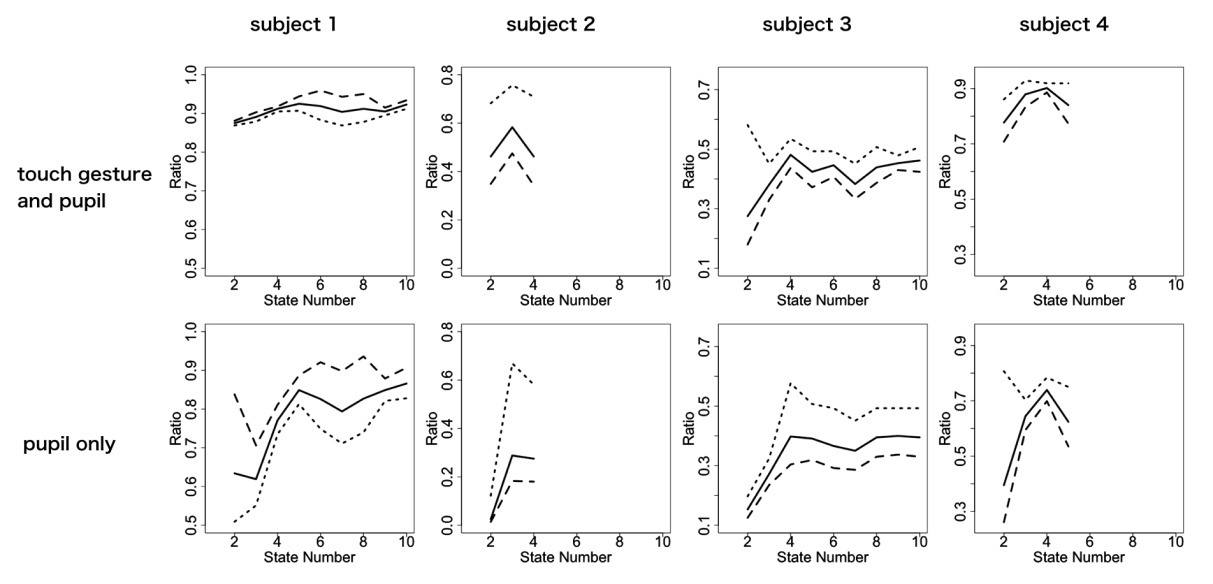

5.2. States of Pupil Reaction

- The precision of subjects 2 and 3 is high when their interested items are estimated with the explanatory variables of pupil reactions only. However, their recall is then extremely low, and so is their F-measure. We examined the graphs of the pupil reactions of subjects 2 and 3 together with the graphs of their hidden Markov model states. Comparing these graphs shows that their pupil reactions are not always classified into the states we assumed, which suggests that the definition and the number of states are inadequate. We therefore increased the number of states of the hidden Markov model from two up to ten and calculated the precision, the recall, and the F-measure using either both touch gestures and pupil reactions or pupil reactions only. Because evaluating the proposed method takes longer as the number of states increases, we reduced the number of samples used to construct the models. The response variable takes two values: one indicates that the subject encountered an interested item, and the other that the subject did not. We undersampled the data so that both values occur equally often. Figure 9 shows the results for two to ten states. The dashed, dotted, and solid lines show the precision, the recall, and the F-measure, respectively. In Figure 9, the graphs of several subjects break off partway, because their pupil reactions cannot be divided into states properly as the number of states increases. Figure 9 also shows that the precision, the recall, and the F-measure of every subject rise or fall as the number of states changes. This implies that performance can be improved by learning an appropriate number of states for each user. On the other hand, as the number of states increases, constructing the estimation model takes longer, which calls for terminals that can learn these models faster.
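The balancing step can be sketched as random undersampling of the majority class. The text does not say how the kept samples were chosen, so random selection with a fixed seed is an assumption here; the window ids and labels are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def undersample(xs: Sequence[T], ys: Sequence[bool], seed: int = 0) -> Tuple[List[T], List[bool]]:
    """Randomly drop samples of the larger class so both response values are equally frequent."""
    rng = random.Random(seed)  # assumed: random selection, seeded for reproducibility
    pos = [i for i, y in enumerate(ys) if y]
    neg = [i for i, y in enumerate(ys) if not y]
    k = min(len(pos), len(neg))
    keep = sorted(rng.sample(pos, k) + rng.sample(neg, k))  # keep the original time order
    return [xs[i] for i in keep], [ys[i] for i in keep]


windows = list(range(10))           # hypothetical window ids
labels = [True, True] + [False] * 8
xs, ys = undersample(windows, labels)
```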

| Figure 9. Evaluation of Ten Pupil States |

6. Conclusions

- This paper proposed a method to estimate a user's interested items in a web page from touch gestures and pupil reactions while the user views the page on a mobile terminal. When the user is attracted by a specific part of the page, the proposed method immediately finds which area currently interests him. Such estimation can trigger services based on these detailed, current interested items. We evaluated the proposed method: when it estimates users' encounters with interested items, the mean precision, recall, and F-measure are 0.850, 0.534, and 0.603, respectively. The evaluation also showed that, for several users, the method performed worse when both touch gestures and pupil reactions were used. To improve the performance for these users, we discussed gesture patterns other than the Slow-Down-to-Resting pattern and a per-user number of pupil reaction states.
