Software Engineering

p-ISSN: 2162-934X e-ISSN: 2162-8408

2018; 7(1): 1-12

doi:10.5923/j.se.20180701.01

An Analogous t-Way Test Generation Strategy for Software Systems | MC-MIPOG

Jalal Mohammed Hachim Altmemi

Department of Computer Engineering, Iraq University College, Iraq

Correspondence to: Jalal Mohammed Hachim Altmemi, Department of Computer Engineering, Iraq University College, Iraq.


Copyright © 2018 The Author(s). Published by Scientific & Academic Publishing.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Combinatorial testing has been an active research area in recent years. One challenge here is dealing with the combinatorial explosion problem, which typically requires a very expensive computational process to find a good test set that covers all the combinations for a given interaction strength (t). Parallelization can be an effective way to manage this computational cost, that is, by taking advantage of recent advances in multicore architectures. In line with such attractive prospects, this paper presents a new deterministic strategy, called multicore modified input parameter order (MC-MIPOG), based on an earlier strategy, input parameter order generalized (IPOG). Unlike its predecessor, MC-MIPOG adopts a novel approach that removes control and data dependencies to permit the harnessing of multicore systems. Experiments are undertaken to demonstrate the speedup gain and to compare the proposed strategy with other strategies, including IPOG. The overall results show that MC-MIPOG outperforms most existing strategies in terms of test size within acceptable execution time. Unlike most strategies, MC-MIPOG is also capable of supporting high interaction strengths of t > 6.

Keywords: Parameter, T-way, Frameworks, T-way Testing, MC-MIPOG

Cite this paper: Jalal Mohammed Hachim Altmemi, An Analogous t-Way Test Generation Strategy for Software Systems | MC-MIPOG, Software Engineering, Vol. 7 No. 1, 2018, pp. 1-12. doi: 10.5923/j.se.20180701.01.


1. Background Information

- Interaction (t-way) testing is a methodology for generating a test suite that detects interaction faults. The generation of a t-way test suite is an NP-hard problem (Zamli, et al., 2013). Many t-way strategies have been presented in the scientific literature. Some early algebraic t-way strategies exploit exact mathematical properties of orthogonal arrays (Zamli, et al., 2013). These t-way strategies are often fast and produce optimal solutions, yet they impose restrictions on the supported configurations and interaction strength. Computational t-way strategies remove such restrictions, supporting arbitrary configurations at the expense of producing non-optimal solutions (Zamli, et al., 2013). Earlier works suggest that pairwise testing, which considers 2-way interaction of variables, can be effective in detecting most faults in a typical software system. While this conclusion may hold for some systems, it cannot be generalized to all software system faults, especially when there are significant interactions between variables (R. C. Bryce et al., 2010). For example, a study by the National Institute of Standards and Technology (NIST) reported that 95% of the actual faults in the tested software involve 4-way interaction, and that most of the faults are detected with 6-way interaction. In general, considering higher interaction strengths can be problematic. Rahman, et al. (2014) state that whenever the parameter interaction coverage t increases beyond 2, the number of t-way tests also increases exponentially [1]. For example, consider a system with 10 parameters, where each parameter has 5 values. There are 1,125 2-way tuples (or pairs), 15,000 3-way tuples, 131,250 4-way tuples, 787,500 5-way tuples, 3,281,250 6-way tuples, 9,375,000 7-way tuples, 17,578,125 8-way tuples, 19,531,250 9-way tuples, and 9,765,625 10-way tuples.
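The tuple counts quoted above can be reproduced with a short calculation. The following is a minimal sketch, assuming a uniform configuration in which every parameter has the same number of values; the function name `count_t_way_tuples` is illustrative and does not come from the paper.

```python
from math import comb


def count_t_way_tuples(num_params: int, num_values: int, t: int) -> int:
    """Number of t-way value combinations for a uniform configuration."""
    # Choose which t parameters interact, then one value for each of them.
    return comb(num_params, t) * num_values ** t


if __name__ == "__main__":
    # 10 parameters with 5 values each, as in the example in the text.
    for t in range(2, 11):
        print(f"{t}-way tuples: {count_t_way_tuples(10, 5, t):,}")
```

The count peaks at t = 9 for this configuration because the binomial factor shrinks faster than the value factor grows once t approaches the number of parameters.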

2. Introduction

- As this example illustrates, for a large system with many parameters, considering a higher-order t-way test set can lead to a combinatorial explosion problem (Torres-jimenez, et al., 2013). This paper therefore also surveys the current state of the art and discusses the similarities and differences among several variants of IPOG in the literature. In addition, several experiments are discussed to demonstrate the speedup gain. Finally, comparisons with other existing strategies, namely TConfig, Jenny, TVG, ITCH, IPOG, IPOG-D, and IPOF, are also presented. For most cases, MC-MIPOG outperforms other existing strategies in terms of test size and supports a high degree of interaction (t) [2]. The rest of this paper is organized as follows. Section II presents a state-of-the-art review of the existing strategies. Section III gives the details of the proposed MIPOG strategy and how it differs from the original IPOG (Lehmann and Wegener, 2000). Section IV gives a detailed description of MC-MIPOG and discusses its implementation. Section V reports the evaluation experiments. Finally, Section VI states our conclusions and suggestions for future work.

3. Related Work

- Zamli, et al. (2011) observe that combinatorial testing strategies can be classified as either computational or algebraic. In general, algebraic approaches compute test sets directly by a mathematical function [3]. Algebraic approaches are often based on extensions of the mathematical methods for constructing orthogonal arrays (OA). Some variations of the algebraic approach also exploit recursion to permit the construction of larger test sets from smaller ones (Klaib, 2009). As a result, the computations involved in algebraic approaches are typically lightweight and not subject to the combinatorial explosion problem. For this reason, strategies based on the algebraic approach are extremely fast. On the other hand, algebraic approaches often impose restrictions on the system configurations to which they can be applied. This significantly limits the applicability of algebraic approaches for software testing (Lei, 2013). Earlier works in combinatorial testing identify two strategies (Klaib, 2009), namely the automatic efficient test generator (AETG) and input parameter order (IPO). AETG builds a test set one test at a time until all the tuples are covered. AETG and its variants were later generalized into a general framework to support multi-way interaction (t ≤ 6). In contrast, IPO covers one parameter at a time. This allows IPO to achieve a lower order of complexity than AETG. IPO is a pairwise strategy (interaction strength t = 2) based on horizontal and vertical extension. First, the IPO strategy generates a pairwise test set for the first two parameters [4]. It then extends the test set to generate a pairwise test set for the first three parameters, and continues to do so for each additional parameter until all the parameters of the system are covered via horizontal extension (Younis, 2010).
If required for interaction coverage, IPO also uses vertical extension to add new tests after the completion of horizontal extension. Later, IPO was generalized into IPOG. Several IPOG variants have been proposed to improve its performance, including IPOG-D, IPOF, and IPOF2. Both IPOG and IPOG-D are deterministic strategies. Unlike IPOG, IPOG-D combines the IPOG strategy with an algebraic recursive construction called D-construction to reduce the number of tuples to be covered. Lei and others reported that when t = 3, IPOG-D degenerates to a D-construction algebraic approach. When t > 3, a minor version of IPOG covers the uncovered tuples that may have been missed during D-construction [5]. As such, IPOG-D tends to be faster than IPOG, but with a larger test set.
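The horizontal and vertical extension steps described above can be sketched for the pairwise case (t = 2). This is an illustrative greedy variant written for this section, not the published IPO algorithm: the function names, the tie-breaking rule (first value with the highest gain), and the use of `None` as a "don't care" marker are all assumptions.

```python
from itertools import combinations, product


def ipo_pairwise(domains):
    """Greedy pairwise test generation in the spirit of IPO.

    domains[i] lists the values of parameter i. Returns a list of tests;
    None marks a "don't care" position.
    """
    # Start with every combination of the first two parameters.
    tests = [list(pair) for pair in product(domains[0], domains[1])]
    for i in range(2, len(domains)):
        # Pairs (j, vj, vi) between each earlier parameter j and parameter i.
        uncovered = {(j, vj, vi)
                     for j in range(i)
                     for vj in domains[j]
                     for vi in domains[i]}
        # Horizontal extension: give each existing test the value of
        # parameter i that covers the most still-uncovered pairs.
        for test in tests:
            def gain(v):
                return sum((j, test[j], v) in uncovered for j in range(i))
            best = max(domains[i], key=gain)
            uncovered -= {(j, test[j], best) for j in range(i)}
            test.append(best)
        # Vertical extension: cover the remaining pairs, reusing
        # "don't care" slots where possible, otherwise adding a new test.
        for j, vj, vi in sorted(uncovered):  # assumes values are sortable
            for test in tests:
                if test[i] == vi and test[j] is None:
                    test[j] = vj
                    break
            else:
                new_test = [None] * (i + 1)
                new_test[j] = vj
                new_test[i] = vi
                tests.append(new_test)
    return tests


def covers_all_pairs(tests, domains):
    """Check that every value pair of every two parameters appears in a test."""
    for a, b in combinations(range(len(domains)), 2):
        seen = {(t[a], t[b]) for t in tests}
        if not set(product(domains[a], domains[b])) <= seen:
            return False
    return True
```

For example, `ipo_pairwise([[0, 1], [0, 1], [0, 1, 2], [0, 1]])` returns a test set for which `covers_all_pairs` holds. The sketch makes no claim about minimality of the resulting test set.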

4. MIPOG Strategy

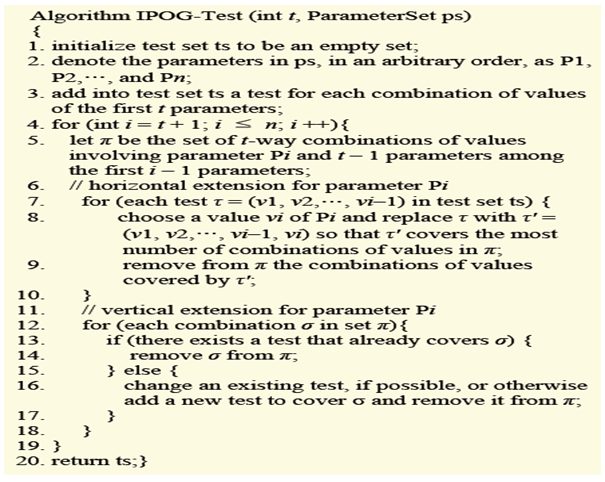

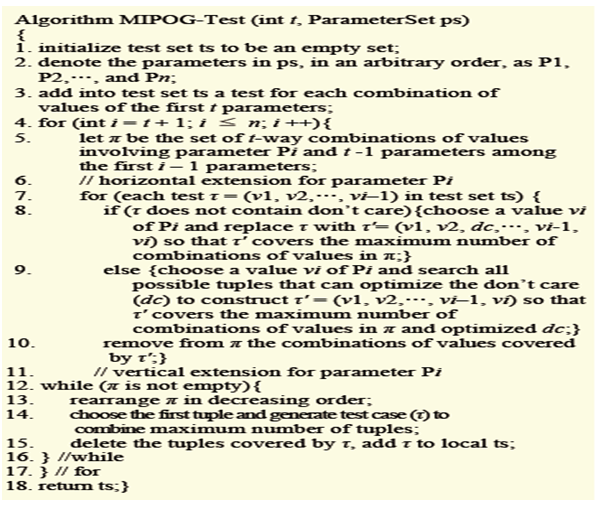

- In this section, we present the MIPOG strategy and show how it can be parallelized into MC-MIPOG. We also highlight the similarities and differences between MIPOG and IPOG (Bryce and Colbourn, 2007). Although IPOG is an effective strategy, we note that the generation of a test set (ts) can be unstable in IPOG (see Fig. 1) because the current test case may change during vertical extension (especially for test cases that include a "don't care" value). This raises the issue of dependency between previously generated test cases and the updated one. To address this dependency issue, we have devised variant algorithms for both horizontal and vertical extension that remove the dependencies (see the MIPOG strategy in Fig. 2).

| Figure 1. IPOG Strategy (Wang, 2003) |

| Figure 2. MIPOG Strategy (Shaiful, 2016) |

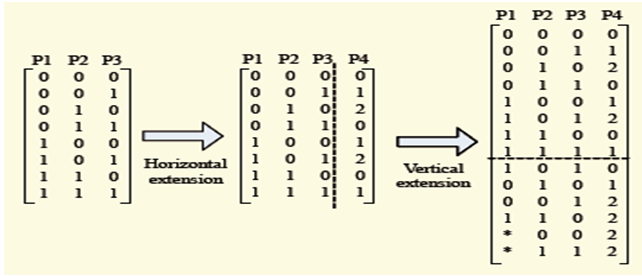

| Figure 3. Generation of test set using IPOG (Shaiful, 2016) |

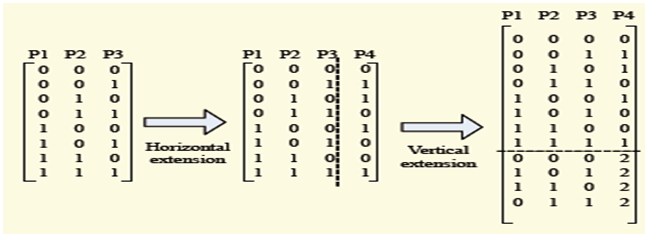

| Figure 4. Generation of test set using MIPOG (Ramli, 2016) |

5. MC-MIPOG Strategy

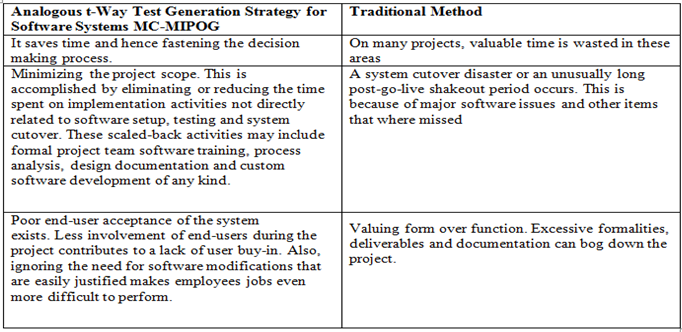

- Built on MIPOG, the MC-MIPOG strategy distributes the computational processes and memory into partitions. In summary, the MC-MIPOG implementation is based on the following design criteria. Memory needs to be distributed in order to hold the tuple set π in relatively independent partitions, called π[Vi]. Here, each π[Vi] needs its own memory to hold the t-way combinations for one unique specific value of the parameter Pi; that is, there are Vi partitions for π. Each partition is generated by a separate thread, called a combinatorial thread. There are Vi separate threads for horizontal extension, called horizontal extension threads. Likewise, there are Vi separate threads for vertical extension, called vertical extension threads. The selected test set is stored in a shared memory controlled by the test generator (master) program, which controls the creation, synchronization, and deletion of all of these threads. Note that recent multicore systems with multitasking operating systems (such as Linux and Windows) manage processor affinity in an optimal way. This enables each software thread to be mapped to the same hardware thread while keeping the data close to the processor, through a process called cache warming [7]. As a result, the actual control of processor and memory affinity is performed automatically by the operating system.
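The partitioning of π by the values of Pi can be sketched as follows. This is a minimal illustration, not the paper's implementation: the function names and the tuple encoding (a tuple of `(parameter index, value)` pairs) are assumptions, and in standard CPython the threads show the structure only, since the global interpreter lock prevents a CPU-bound speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations, product


def build_partition(domains, i, t, v):
    """All t-way tuples in which parameter i is fixed to value v."""
    partition = set()
    # Pick t - 1 earlier parameters to combine with parameter i.
    for others in combinations(range(i), t - 1):
        for values in product(*(domains[j] for j in others)):
            partition.add(tuple(zip(others, values)) + ((i, v),))
    return partition


def build_partitions(domains, i, t):
    """One partition per value of parameter i, each built by its own worker."""
    with ThreadPoolExecutor(max_workers=len(domains[i])) as pool:
        futures = {v: pool.submit(build_partition, domains, i, t, v)
                   for v in domains[i]}
        return {v: future.result() for v, future in futures.items()}
```

Because each worker writes only to its own set, the partitions need no locking while they are built, which is the property the design criteria above rely on.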

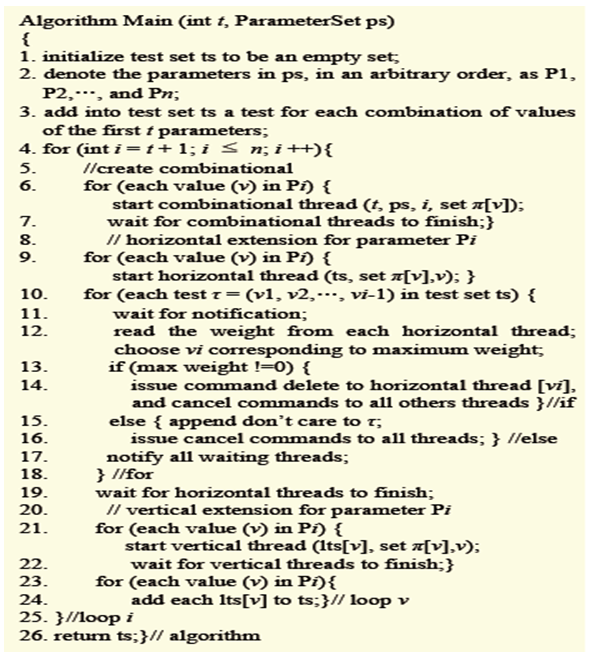

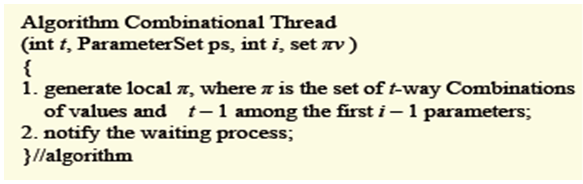

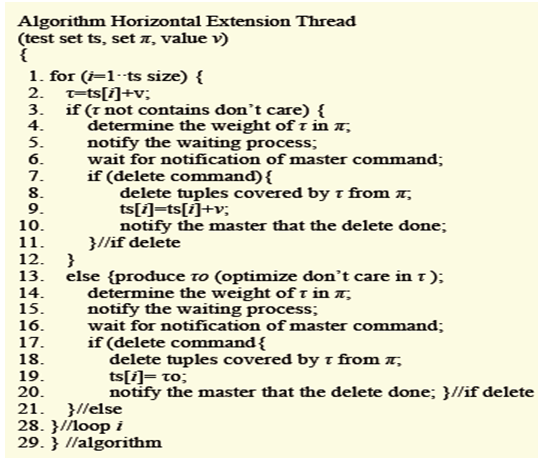

6. Test Generator (Main Program)

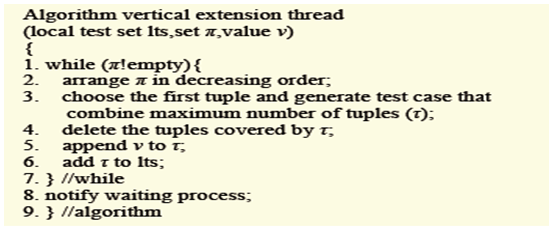

- As mentioned earlier, the main program's roles are to manage the shared memory and to coordinate the threads. Briefly, the main program works as follows:

  1. Start with an empty test set (ts) and generate all tuples for the first t parameters.
  2. Create combinatorial threads (equal to the number of values in Pi), passing them the parameter values (P1 … Pi-1).
  3. Wait for every combinatorial thread to complete its generation, and then read the π[Vi] partitions.
  4. Shut down the combinatorial threads.
  5. Create horizontal extension threads (equal to the number of values in Pi), passing them the π[Vi] partitions and the Vi values for the parameter Pi.
  6. For horizontal extension, for each test case τ in ts:
     a. Wait until all threads have valid results.
     b. Read the weight (that is, the number of tuples covered after adding the assigned value) from each thread.
     c. Choose the value corresponding to the maximum weight to be added to ts; if no tuples match (weight zero), a "don't care" value is added to τ.
     d. Notify the horizontal extension threads that the selection is complete.
     e. According to the selection in c, issue a command to the selected thread to delete the tuples from its own π set (πv).
     f. Enable the selected thread to update τ.
     g. Wait for the selected thread to finish its work.
  7. Shut down the horizontal extension threads.
  8. Create vertical extension threads (equal to the number of values in Pi), passing them the π[Vi] partitions and the Vi values for the parameter Pi.
  9. In vertical extension:
     a. Wait for the threads to finish their partial test sets (tsvth).
     b. Collect the tsvth sets from the threads.
     c. Add each tsvth to ts.
  10. Shut down the vertical extension threads.

For clarity, the complete algorithm for the master program is given in Fig. 5.

| Figure 5. Algorithm for master program (Harman, 2014) |

| Figure 6. Algorithm for combinatorial thread |

| Figure 7. Algorithm for horizontal extension threads (Harman, 2014) |

| Figure 8. Algorithm for vertical extension thread (Nasser, 2015) |
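The horizontal extension loop of the master program (weights reported per value, maximum weight selected, "don't care" on weight zero) can be sketched as below. This is a simplified illustration under stated assumptions: the function names and tuple encoding are hypothetical, ties are broken in favour of the first value, and the master deletes the covered tuples and updates τ itself, whereas the text above delegates those two steps to the selected thread.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations


def tuples_covered(test, i, t, v):
    """t-way tuples covered when `test` is extended with value v for parameter i."""
    return {
        tuple((j, test[j]) for j in others) + ((i, v),)
        for others in combinations(range(i), t - 1)
        if all(test[j] is not None for j in others)
    }


def weight(partition, test, i, t, v):
    """Number of still-uncovered tuples in this value's partition that would be covered."""
    return len(partition & tuples_covered(test, i, t, v))


def horizontal_extension(tests, partitions, i, t):
    """Extend every test with a value for parameter i; partitions maps value -> tuple set."""
    values = list(partitions)
    with ThreadPoolExecutor(max_workers=len(values)) as pool:
        for test in tests:
            # One worker per value reports the weight for its own partition.
            weights = list(pool.map(
                lambda v: weight(partitions[v], test, i, t, v), values))
            best = max(range(len(values)), key=weights.__getitem__)
            if weights[best] == 0:
                test.append(None)  # no tuple matches: "don't care"
            else:
                chosen = values[best]
                # Remove the newly covered tuples from the chosen partition only.
                partitions[chosen] -= tuples_covered(test, i, t, chosen)
                test.append(chosen)
    return tests
```

Only the partition of the chosen value changes for a given test, so the per-value weights can be computed independently of one another.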

7. Evaluation

- Our evaluation has three main goals. First, we compare the behavior of MC-MIPOG with that of IPOG in terms of the test size ratio [8]. Second, we investigate whether there is a speedup gain from parallelizing MIPOG into MC-MIPOG. Finally, we compare the effectiveness of the MC-MIPOG strategy with that of other strategies (including other IPOG variants) in terms of execution time and generated test size.

8. MC-MIPOG Behavior against IPOG

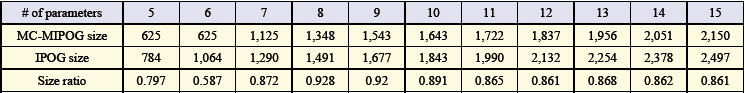

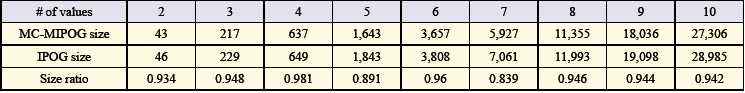

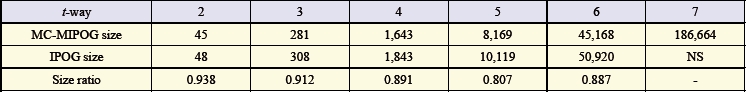

- To compare the behavior of MC-MIPOG and IPOG, we performed a group of experiments adopted from Lei and others. In these experiments, we are interested in comparing the test sizes of MC-MIPOG and IPOG. Note that the IPOG test sizes are taken from previously published results.

  Group 1: The number of parameters (P) and the number of values (V) are constant, but the coverage strength (t) is varied from 2 to 7.
  Group 2: The coverage strength (t) and the number of values (V) are constant at 4 and 5, respectively, but the number of parameters (P) is varied from 5 to 15.
  Group 3: The number of parameters (P) and the coverage strength (t) are constant at 10 and 4, respectively, but the number of values (V) is varied from 2 to 10.

The results of the experiments are shown in Tables 1 to 3 (Group 2 in Table 1, Group 3 in Table 2, and Group 1 in Table 3). Here, we define the size ratio as the ratio of the test set size obtained from MC-MIPOG to the size obtained from IPOG.

| Table 1. Size ratio results for 5 to 15 variables with 5 values in 4-way testing |

| Table 2. Size ratio results for 10 variables with 2 to 10 values in 4-way testing |

| Table 3. Size ratio results for 10 variables with 5 values for t=2 to 7 |

9. Speedup Gain in MC-MIPOG

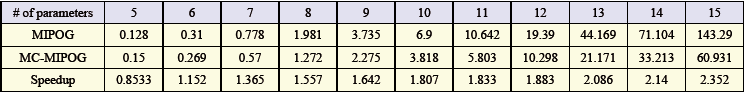

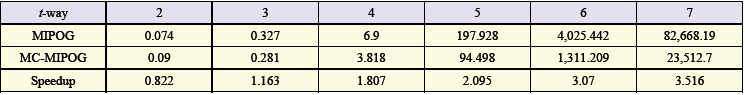

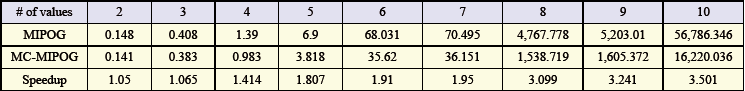

- To measure the speedup gain from parallelizing MIPOG, we subjected both MIPOG and MC-MIPOG to the three test groups described earlier. The results of the experiments are shown in Tables 4, 5, and 6. Here, the speedup is defined as the ratio of the time taken by the sequential MIPOG algorithm to the time taken by the MC-MIPOG algorithm [9]. All the results were obtained using CentOS Linux with a 2.4 GHz Core 2 Quad CPU and 2 GB RAM with JDK 1.5 installed. Note that the execution time is in seconds, and both MIPOG and MC-MIPOG generate the same test set in all cases (Ahmed, et al., 2015).
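Written as a formula, the speedup definition above reads as follows. The symbols are assumed notation introduced here for illustration: the numerator is the execution time of sequential MIPOG and the denominator is the execution time of MC-MIPOG on the same configuration.

```latex
\[
  \text{speedup} = \frac{T_{\text{MIPOG}}}{T_{\text{MC-MIPOG}}}
\]
```

Under this definition, a value above 1 means that MC-MIPOG finished sooner than sequential MIPOG.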

| Table 4. Speedup results for 5 to 15 variables with 5 values in 4-way testing |

| Table 5. Speedup results for 10 variables with 2 to 10 values in 4-way testing |

| Table 6. Speedup results for 10 variables with 5 values for t=2 to 7 |

10. Comparison with other Strategies

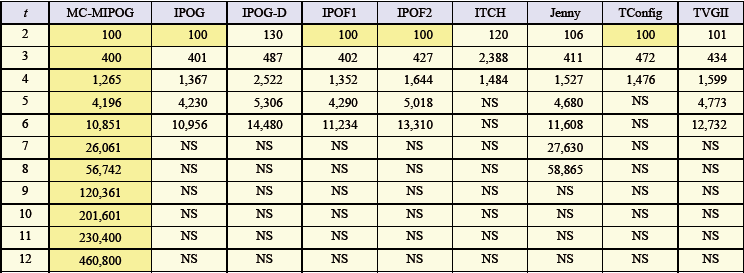

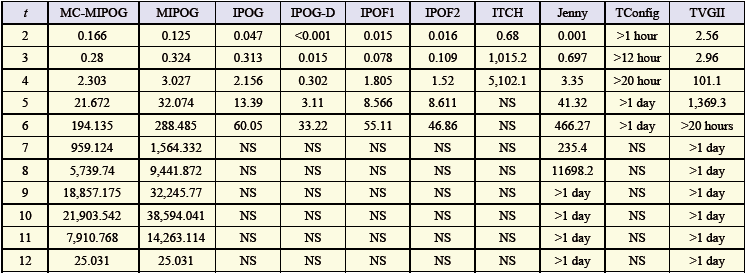

- To investigate the effectiveness of the MC-MIPOG strategy against other strategies, including IPOG and its variants, in terms of test size and the number of generated test sets, we adopt a common configuration system, the TCAS module. The TCAS module is an aircraft collision avoidance system developed by the Federal Aviation Administration, which has been used as a case study in other related works [10]. The TCAS module contains twelve parameters: seven parameters have 2 values, two parameters have 3 values, one parameter has 4 values, and two parameters have 10 values. As highlighted earlier, we chose the TCAS module because the same parameters and values have been used by other researchers. By adopting the same parameters and values, an objective comparison can be made between the various strategy implementations. Because some of the implementations have evolved considerably over the years, we downloaded all the available implementations and ran them within our own environment to ensure that the results are up to date and the comparison is fair. Here, we are also interested in investigating whether each strategy supports high interaction strengths (t > 6). We downloaded ACTS (implementing IPOG, IPOG-D, IPOF1, and IPOF2) from NIST, as well as ITCH, Jenny, TConfig, and TVGII. We were not able to download AETG because the implementation is a commercial product; it was therefore not considered for comparison in our study. To accommodate the fact that Jenny is an MS-DOS-based executable program, we chose a running environment consisting of Windows XP on a 2.0 GHz Intel Core 2 Duo CPU and 1 GB RAM with JDK 1.6 installed. Tables 7 and 8 summarize the complete results. As in Table 3, NS indicates that the parameters and values chosen with the given strength are not supported. In addition, darkened cells indicate the best performance in terms of test size. As seen in Table 7, MC-MIPOG, IPOG, IPOF1, and IPOF2 gave the optimal test size at t = 2.
At t = 3, both MC-MIPOG and IPOG gave the optimal test size. For all other cases, MC-MIPOG consistently outperforms the other strategies. Besides MC-MIPOG, only Jenny can support more than t = 6 for the TCAS module. However, we were not able to run Jenny for t > 8 because the program crashes.
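To give a sense of the workload behind the TCAS configuration described above, the number of t-way tuples for a mixed configuration can be computed as the sum, over every choice of t parameters, of the product of their value counts. This is a minimal sketch; the names `TCAS_VALUE_COUNTS` and `count_mixed_t_way_tuples` are illustrative and not taken from the paper or any tool.

```python
from itertools import combinations
from math import prod

# Seven 2-valued, two 3-valued, one 4-valued, and two 10-valued parameters.
TCAS_VALUE_COUNTS = [2] * 7 + [3] * 2 + [4] + [10] * 2


def count_mixed_t_way_tuples(value_counts, t):
    """Number of t-way tuples when parameters have different numbers of values."""
    # For each set of t parameters, every combination of their values is one tuple.
    return sum(prod(combo) for combo in combinations(value_counts, t))


if __name__ == "__main__":
    for t in range(2, len(TCAS_VALUE_COUNTS) + 1):
        print(f"t = {t}: {count_mixed_t_way_tuples(TCAS_VALUE_COUNTS, t):,} tuples")
```

At t = 12 the count equals the size of the exhaustive test space, since only one set of twelve parameters exists.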

| Table 7. Comparative test size results using the TCAS module for t = 2 to 12 |

| Table 8. Comparative test generation time using the TCAS module for t = 2 to 12 |

11. Conclusions

- As computer manufacturers make multicore CPUs widely available at reasonable prices, harnessing this technology is no longer a luxury but a viable and practical option. In this paper, we investigated and evaluated a parallel strategy called MC-MIPOG for t-way test data generation on multicore architectures. Our results show that MC-MIPOG scales well against existing strategies. As part of our future work, we are currently porting MIPOG and MC-MIPOG to a grid environment. Our initial implementation results have been encouraging. We are also planning to perform more extensive comparisons with Colbourn's best-known results. Among the other IPOG variants (that is, excluding MC-MIPOG), IPOG clearly outperformed IPOG-D, IPOF1, and IPOF2 in terms of test size for the TCAS module. Like other strategies (except MC-MIPOG), this family of strategies cannot produce a test suite for t > 6; that is, no option is provided for t > 6. In terms of execution time, IPOG-D has the fastest overall time for t ≤ 6. For t > 6, MC-MIPOG is the fastest since no other strategy provides t-way test generation support (Jenny supports up to t = 8). From one perspective, MIPOG is similar to IPOG and IPOG-D in the sense that they are all deterministic strategies. IPOF and IPOF2, by contrast, are non-deterministic strategies. The general aim of IPOG-D, IPOF, and IPOF2 is to achieve a faster execution time than that of IPOG. In general, obtaining an optimized test size and a fast execution time are two sides of the same coin [12]. Obtaining an optimized test size requires more processing time for choosing the most optimal tuple. Conversely, obtaining a fast execution time means that little optimization is performed to reach the optimal test size. This is evident as far as the test sizes are concerned for IPOG-D, IPOF, and IPOF2.
MIPOG is a strategy designed to produce a smaller test size than that of IPOG at the cost of more processing time during horizontal extension. As discussed earlier, unlike IPOG, IPOG-D, and IPOF, MIPOG adopts a different kind of vertical extension, which is more heavyweight than that of IPOG (for optimization of vertical extension). As a result, MIPOG's execution time is slower than that of most of the IPOG variants. Nevertheless, the implementation of MC-MIPOG has alleviated this drawback through the adoption of a multicore architecture. In fact, MIPOG is the only strategy within the IPOG family that can be parallelized.

Notes

- 1. Zamli, K.Z., Younis, M.I., Abdullah, S.A.C., Soh, Z.H.C.: Software Testing, 1st edn. Open University, Malaysia KL (2013).
- 2. R. C. Bryce, Y. Lei, D. R. Kuhn, and R. N. Kacker, “Combinatorial Testing,” Handb. Res. Softw. Eng. Product. Technol. Implic. Glob., 196–208 (2010).
- 3. M. Rahman, R. R. Othman, R. B. Ahmad, and M. Rahman, “Event Driven Input Sequence Tway Test Strategy Using Simulated Annealing,” in Fifth Int. Conf. on Intelligent Systems, Modelling and Simulation, 663–667 (2014).
- 4. Lehmann, E., Wegener, J.: Test Case Design By Means Of The CTE-XL. In: Proceedings of the 8th European International Conference on Software Testing, Analysis & Review (EuroSTAR 2000), Copenhagen, Denmark (2000).
- 5. Lei, Y., Kacker, R., Kuhn, R., Okun, V., Lawrence, J.: IPOG/IPOG-D: Efficient Test Generation For Multi-way Combinatorial Testing. Journal of Software Testing, Verification and Reliability 18(3), 125–148 (2013).
- 6. K. Z. Zamli, B. Y. Alkazemi, and G. Kendall, “A Tabu Search hyper-heuristic strategy for t-way test suite generation,” Appl. Soft Comput. J., 44, 57–74 (2016).
- 7. Wang, Z., Xu, B., Nie, C.: Greedy Heuristic Algorithms To Generate Variable Strength Combinatorial Test Suite. In: Proceedings of the 8th International Conference on Quality Software, Oxford, UK, pp. 155–160 (2013).
- 8. Czerwonka, J.: Pairwise Testing In Real World. In: Proceedings of 24th Pacific Northwest Software Quality Conference, Portland, Oregon, USA, pp. 419–430 (2006).
- 9. L. Y. Xiang, A. A. Alsewari, and K. Z. Zamli, “Pairwise Test Suite Generator Tool Based On Harmony Search Algorithm (HS-PTSGT),” NNGT Int. J. Artif. Intell., 2, 62–65 (2015).
- 10. R. R. Othman, N. Khamis, and K. Z. Zamli, “Variable Strength t-way Test Suite Generator with Constraints Support,” Malaysian J. Comput. Sci., 27, 3, 204–217 (2014).
- 11. Zamli, K.Z., Klaib, M.F.J., Younis, M.I., Isa, N.A.M., Abdullah, R.: Design And Implementation Of A T-Way Test Data Generation Strategy With Automated Execution Tool Support. Information Sciences 181(9), 1741–1758 (2011).
- 12. A. B. Nasser, Y. A. Sariera, A. A. Alsewari, and K. Z. Zamli, “Assessing Optimization Based Strategies for t-way Test Suite Generation: The Case for Flower-based Strategy,” in IEEE Int. Conf. on Control System, Computing and Eng, 150–155 (2015).
