
Software Engineering

p-ISSN: 2162-934X e-ISSN: 2162-8408

2012; 2(4): 112-123

doi:10.5923/j.se.20120204.04

Modeling Metrics for Measuring Service Discovery

G. Shanmugasundaram¹, V. Prasanna Venkatesan¹, C. Punitha Devi²

¹Department of Banking Technology, Pondicherry University, Puducherry, 605014, India

²Department of Computer Science & Engineering, Pondicherry University, Pondicherry, 605014, India

Correspondence to: G. Shanmugasundaram, Department of Banking Technology, Pondicherry University, Puducherry, 605014, India.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

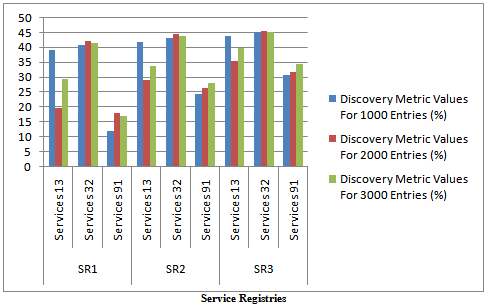

Services are discoverable based on the functional description published in accessible registries. Service discovery, or identification, is a key factor of discoverability in Service Oriented Architecture (SOA) systems. Locating or positioning a service exactly in the registry gives better discovery and makes the service easier to identify. The success of service discovery lies in how appropriately a service is identified, yet recent work on service discovery has discussed this only qualitatively. This paper reviews related and recent work on measures of service discovery, shows how far they have been addressed, and finds that no proper measures exist for service identification/discovery. From the literature we have identified essential features. Taking these features as a focal point, we propose a metric suite with essential measures covering the service contract, the relevance of metadata and the exact positioning of a service. Based on these measures, a measure for service discovery is proposed. To verify these measures, we designed and conducted an experiment with three service registries: Service Registry 1, Service Registry 2 and Service Registry 3. The experimental results show up to an 18 percent increase in service discovery for Service Registry 3 compared with the other two registries. These measurements can help service providers and service brokers fine-tune the service registry, and help consumers design better service queries. The results show that the proposed measures enable effective service discovery.

Keywords: Service Discovery Model, Service Discovery Measures, Discovery Features, SOA Quality Metrics, Discoverability Measures

Cite this paper: G. Shanmugasundaram, V. Prasanna Venkatesan, C. Punitha Devi, Modeling Metrics for Measuring Service Discovery, Software Engineering, Vol. 2 No. 4, 2012, pp. 112-123. doi: 10.5923/j.se.20120204.04.


1. Introduction

- Distributed systems spread their functionality across various applications in heterogeneous environments. Such systems demand a flexible architecture such as SOA. SOA is suitable because of its adaptive nature: it can discover and adopt services and express the needed functionality through service composition. Hence service discovery plays a major role, since it enables distributed functionalities or services to be composed or assembled into new services. Discoverability is one of the quality attributes of SOA; it promotes reuse by allowing a service to be consumed as many times as a particular user requires it[3]. Discoverability comprises two components: service discovery and service interpretation. This paper focuses on service discovery.
Service discovery involves several players: the service consumer, the service provider and the service broker. It depends on sufficient information being provided by service providers and published in the registry[6][8][26], on suitable queries to find the required service, and on the process, maintained by the service broker, that discovers the service in the registry. To enable effective service discovery, it has to be measured and fine-tuned. The relevant measures cover the effect of how service information is represented in the registry, the similarity between the user query and the registry metadata, and the underlying discovery algorithm. Based on these measurements, the service provider, service consumer and service broker can take appropriate steps to achieve effective service discovery. Hence a mechanism to check the appropriateness of service discovery is needed. The objective of this paper is to propose measures that quantitatively estimate the effectiveness of the service discovery process and of information representation. An experiment has been conducted to validate the measures.
The rest of the paper is organized as follows. Section 2 discusses related work. Section 3 describes our proposed measures for service discovery. Section 4 presents the experiment design, the experiments conducted and their results, and Section 5 discusses the results. Finally, Section 6 concludes the paper.

2. Related Works

- Discoverability is the quality attribute of SOA concerned with discovering exactly the web service that the service consumer requires[1]. To achieve complete discoverability, its constituent aspects must be identified. The contributions of different researchers show that discoverability has two constituents: discovery/identifiability and interpretability[2][17][21][29]. Many researchers conflate these two terms (i.e. discoverability and discovery)[4][10][12][40]; their work concentrates on discovering web services rather than on the discoverability of services. The literature makes clear that discovery is the part of discoverability that finds or searches for web services. Our survey therefore covers both the discoverability and the discovery of services. Table 1 lists works that address discoverability. It indicates that most of them concentrate on the functional and non-functional aspects of web services, which are generally used to describe their capabilities and to invoke or interpret them. This review indicates that the constituents of discoverability have not been addressed precisely. In this paper our focus is on the discovery component of discoverability, so we narrowed our review to works specific to the discovery of services. Table 2 shows that most research works focus on functional aspects, a few on categorization or location, and some on semantics and interface definition as factors for discovering the appropriate web services. The measures proposed for service discovery by these contributors are qualitative[16][23][41][44].
The factors identified in this review do not completely cover the discovery part of discoverability, as a study of the factors that support discovery alone makes visible[27][28][40][42]. This research gap motivates us to propose measures for discovery.


3. Proposed Work

- Various studies define discoverability as the process of searching for an individual service based on its service description and then invoking or interpreting that service according to its purpose and capabilities. This definition indicates that two components are involved in service discoverability: discovery and interpretability[17][21][29]. Discovery deals with searching for or finding the service, and interpretability deals with the usage or invocation of that service. We have defined three factors that are essential for discovering services in a service registry, and we propose measures to validate the appropriateness of those factors in the registry. To demonstrate the proposal, an experiment was designed with three different service registries, each tested with 1000, 2000 and 3000 service entries. To validate the discovery process in a service registry, the following factors, which enable better searching of services, must be measured:
• Check for the service contract of the required service in the service registry[40]. The service provider has to register its services with a service contract.
• Find the appropriateness of the metadata associated with the service contract of the required service[28]. The service contract of each registered service has associated metadata describing the service's functionality.
• Check the exact position or location of the required service in the service registry[27][42]. In the registry, services have to be positioned in, or fall under, categories to make searching easier.
Based on these factors, we have designed a service registry that contains a complete list of attributes. The attributes relevant to the discovery of services, together with the value range and units defined for each attribute, are listed in Table 3 and explained in Section 4.
In this paper we concentrate on proposing measures for service discovery. Three measures are proposed to check or validate the factors of the discovery component of discoverability.

Metrics for Discovery

1. Metric for Checking for Service Contract

Checking for Service Contract (CSC) is the ratio of n, the total number of hits needed to obtain the service contract of the required service, to N, the maximum number of hits possible when checking the service contracts of the available services in the service registry:

$$\mathrm{CSC} = \frac{n}{N}$$

where N is at least the largest possible value of n, so that n ≤ N. The value range of CSC is 0...1. A lower CSC value indicates a stronger presence of the service contract, since it is reached in fewer hits; for a large N, the ratio should ideally be low. The value of this metric is zero in case of failure (i.e. the service contract does not exist in the service registry).

2. Metric to Check the Relevance of Meta Data in Service Contract

Relevance of Meta Data in Service Contract (RMD) measures the degree to which the service contract of the required service in the service registry carries relevant metadata, i.e. whether the supplied metadata items are relevant to their corresponding service contract. The Ratio of checking the Relevance of Meta Data in Service Contract (RMD) is defined as follows.

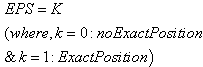

$$\mathrm{RMD} = 1 - \frac{A - a}{A} = \frac{a}{A}, \qquad 1 \le a \le A$$

Here A is the number of attributes giving meta information, and a is the number of those attributes that carry relevant meta information for the required service in the service registry. The constraint requires a to be at least 1. As a increases, the term (A − a) approaches zero, which indicates good relevance, since a grows with the number of attributes (a ∝ A). The RMD metric ranges over 0...1; a value nearer to one indicates better relevance of the meta information.

3. Check for Exact Positioning or Categorization of Service in the Registry (EPS)

There is no graded metric for measuring the exact or appropriate positioning of a service (EPS) in the service registry, so a binary value is used for this factor: EPS is 1 if the service is positioned exactly in the specified location and 0 otherwise.

$$\mathrm{EPS} = \begin{cases} 1 & \text{if the service is exactly positioned in the specified location} \\ 0 & \text{otherwise} \end{cases}$$
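The three factors can be sketched as small functions. This is a minimal illustration under stated assumptions, not the authors' implementation: `count_hits` assumes that a "hit" is one registry entry inspected during a sequential scan (the paper does not say how hits are counted), the registry is modelled as a plain list of hypothetical contract identifiers, and RMD is computed as a/A, which equals 1 − (A − a)/A and reproduces the values reported in Section 4 (7/9 and 14/17 give 0.77 and 0.82 when truncated).

```python
from typing import Optional, Sequence


def count_hits(registry: Sequence[str], required: str) -> Optional[int]:
    """Number of entries inspected until the required contract is found.

    Assumption: a "hit" is one entry examined in a sequential scan.
    Returns None when the contract is not present in the registry.
    """
    for hits, contract_id in enumerate(registry, start=1):
        if contract_id == required:
            return hits
    return None


def csc(n: Optional[int], N: int) -> float:
    """Checking for Service Contract: CSC = n / N, defined as 0 on failure."""
    if N <= 0:
        raise ValueError("N must be positive")
    if n is None:  # the service contract does not exist in the registry
        return 0.0
    if not 1 <= n <= N:
        raise ValueError("expected 1 <= n <= N")
    return n / N


def rmd(a: int, A: int) -> float:
    """Relevance of Meta Data: RMD = 1 - (A - a) / A = a / A, with 1 <= a <= A.

    Reconstructed form; it matches 7/9 -> 0.77 and 14/17 -> 0.82 when truncated.
    """
    if not 1 <= a <= A:
        raise ValueError("expected 1 <= a <= A")
    return a / A


def eps(actual_category: str, expected_category: str) -> int:
    """Exact Positioning of Service: 1 if filed under the expected category, else 0."""
    return 1 if actual_category == expected_category else 0


if __name__ == "__main__":
    registry = ["svc-payments", "svc-loans", "svc-fx", "svc-cards"]  # hypothetical IDs
    n = count_hits(registry, "svc-fx")
    print(csc(n, len(registry)))  # 3 hits out of 4 -> 0.75
    print(rmd(7, 9))
    print(eps("Banking", "Banking"))  # -> 1
```

Here N is taken as the registry size, which is the worst case for a sequential scan. Because a successful lookup always needs at least one hit, CSC is strictly positive on success, so a result of exactly 0 unambiguously signals that the contract was not found.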


The discovery value for services is computed as a weighted combination of these measures, where W1 and W2 are weight factors, each set to 0.5. Since RMD and CSC are associated terms, a single weight factor W1 is used for both. The discovery value ranges from 0 to 1; a higher value indicates better discovery.

4. Experiment Design

- To demonstrate the usability of the proposed metrics, we designed and implemented three different service registries. The service registries and their information are designed based on[9][10][18][23][24][25][28][33][34][35]. For our experiment, each registry is populated with three different data ranges (i.e. 1000, 2000 and 3000 entries). We derived the complete list of attributes and their value ranges (i.e. from the minimum to the maximum for each attribute) for the service registry, as shown in Table 3.


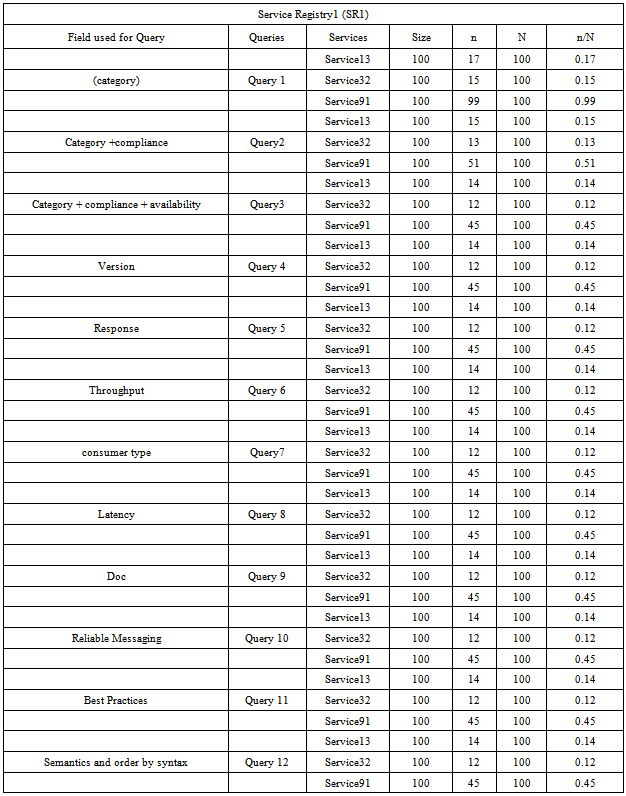

4.1. Experiment Conducted with Service Registry1 (SR1)

- Service Registry 1 (SR1) has fewer attributes than the other registries. It contains basic attributes such as service name, category, service ID, service operation, availability and compliance. We formulated 12 queries for our experiment: Query1 contains Category, Query2 contains Category + Compliance, and each remaining query contains the fields of the previous queries plus a field of its own. Of the 12 queries, SR1 responds only to the first three; for the remaining queries it gives no response and the values of Query3 repeat, because SR1 is a primitive registry that does not contain the additional fields. The three proposed measures, represented as factor 1, factor 2 and factor 3, are validated with this experiment.
a) Measuring the CSC metric from service registry 1
Similarly, we have computed the metrics on the 2000 and 3000 data sets; the results are shown in Tables 8 and 9.
b) Measuring the RMD metric from service registry 1


The value of the RMD metric is 0.66.
c) Measuring the EPS metric from service registry 1
The value of exact positioning is 1 for the three services chosen in SR1. The value is the same for SR1 with the different data ranges (1000, 2000 and 3000).
EPS = 1
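The query behaviour described for SR1 (and, in Section 4.2, for SR2) can be sketched as a prefix check: query k uses the first k fields, so a registry answers exactly as many queries as the longest leading run of fields present in its schema, and later queries repeat the last answered result. The SR1 and SR2 attributes come from the text (the snake_case names are ours); the field order after Category and Compliance, and the `sr3_only_attribute` placeholder, are hypothetical, chosen only so that the counts match the reported three and six queries.

```python
from typing import FrozenSet, Sequence

# Attributes named in Sections 4.1 and 4.2 (identifier spelling is our own).
SR1_SCHEMA: FrozenSet[str] = frozenset({
    "service_name", "category", "service_id",
    "service_operation", "availability", "compliance",
})
SR2_SCHEMA: FrozenSet[str] = SR1_SCHEMA | {
    "version", "interface_name", "response_time", "throughput",
}

# Hypothetical field order: the text only fixes Query1 = category and
# Query2 = category + compliance; each later query adds one more field.
QUERY_FIELDS = [
    "category", "compliance", "availability",
    "version", "interface_name", "response_time",
    "sr3_only_attribute",  # hypothetical placeholder for an SR3-only field
]


def answerable_queries(query_fields: Sequence[str], schema: FrozenSet[str]) -> int:
    """Length of the longest prefix of query_fields fully covered by schema."""
    answered = 0
    for field in query_fields:
        if field not in schema:
            break
        answered += 1
    return answered


def reported_query(k: int, answered: int) -> int:
    """Query whose result is reported for query k (1-based); the last
    answerable query's result repeats once k exceeds it."""
    return min(k, answered)


if __name__ == "__main__":
    for name, schema in (("SR1", SR1_SCHEMA), ("SR2", SR2_SCHEMA)):
        m = answerable_queries(QUERY_FIELDS, schema)
        print(name, m, reported_query(12, m))  # SR1 3 3, then SR2 6 6
```

Modelling the registry schema as a set keeps the membership test for each query field constant-time; the cumulative structure of the queries is what makes a single prefix scan sufficient.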

4.2. Experiment conducted with Service Registry2 (SR2)

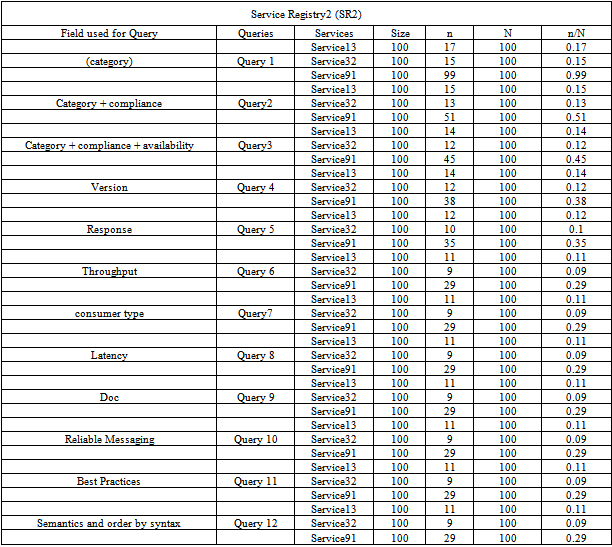

- Service Registry 2 (SR2) is an extended version of SR1 with additional attributes such as version, interface name, response time and throughput. Of the 12 queries, SR2 responds up to the sixth query; for the remaining queries it gives no response and the values of Query6 repeat. SR2 answers more queries than SR1 because it contains additional fields, but it lacks the fields needed by the later queries.
a) Measuring the CSC metric from service registry 2
Similarly, we have computed the metrics on the 2000 and 3000 data sets; the results are shown in Tables 8 and 9.
b) Measuring the RMD metric from service registry 2
In SR2, nine attributes support the metadata, of which seven give relevant metadata. This holds for all three data ranges of SR2. Here A = 9 and a = 7, so the Ratio of checking the Relevance of Meta Data in Service Contract is

$$\mathrm{RMD} = \frac{a}{A} = \frac{7}{9} = 0.777\ldots$$

The value of the RMD metric is 0.77 (7/9 truncated to two decimal places).
c) Measuring the EPS metric from service registry 2
The value of exact positioning of services (EPS) is 1 for the three services chosen in SR2. The value is the same for SR2 with the different data ranges (1000, 2000 and 3000).
EPS = 1



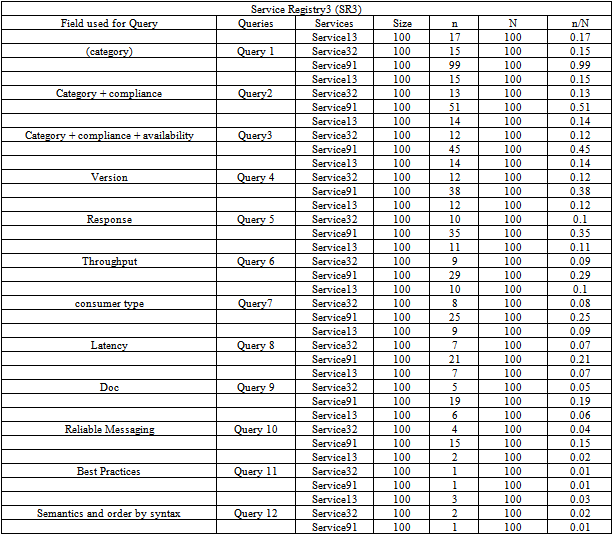

4.3. Experiment conducted with Service Registry3 (SR3)

- Service Registry 3 (SR3) contains all the attributes listed in Table 3, because it is a complete registry, and it gives output for all 12 queries.
a) Measuring the CSC metric from service registry 3
Similarly, we have computed the metrics on the 2000 and 3000 data sets; the results are shown in Tables 8 and 9.
b) Measuring the RMD metric from service registry 3
In SR3, 17 attributes support the metadata, of which 14 give relevant metadata. This holds for all three data sets of SR3. Here A = 17 and a = 14, so the Ratio of checking the Relevance of Meta Data in Service Contract is

$$\mathrm{RMD} = \frac{a}{A} = \frac{14}{17} = 0.823\ldots$$

The value of the RMD metric is 0.82 (14/17 truncated to two decimal places).
c) Measuring the EPS metric from service registry 3
The value of exact positioning of services (EPS) is 1 for the three services chosen in SR3. The value is the same for SR3 with the different data ranges (1000, 2000 and 3000).
EPS = 1

5. Discussion

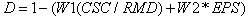

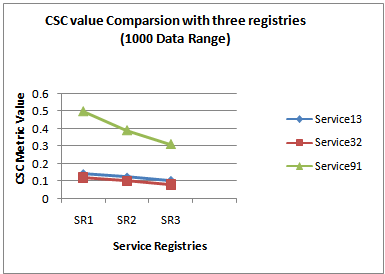

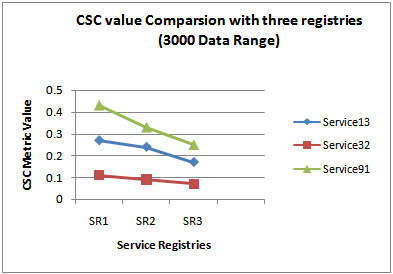

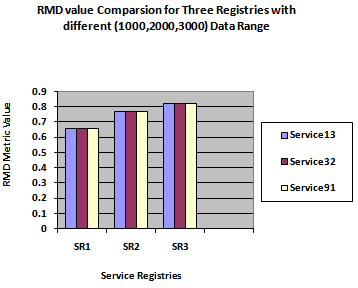

- Our proposed work differs from[9][11][13][15][16][19][20] in the number of attributes used to measure service discovery. For this reason, a qualitative or quantitative comparison with those related works would be inappropriate. Instead, we carried out the study on three different types of service registry, each with different data sets. The output of each case gives the proposed metric values for the three registries with different data sets, as shown in Tables 7, 8 and 9.
Consider first the CSC metric value for the three registries. The CSC value in case 1 is higher than in case 2, and the value in case 2 is higher than in case 3. Case 3 therefore has the lowest CSC value, which indicates the strongest presence of the service contract. Figures 1, 2 and 3 compare the CSC metric values of the three registries on the various data sets.
The RMD metric gives the same value for service registry 1 (SR1) across its data sets, and likewise for SR2 and SR3. The RMD value of SR1 is lower than that of SR2, and the RMD value of SR2 is lower than that of SR3. SR3 therefore has the highest RMD value, indicating better relevance of meta information than the other registries. Figure 4 compares the RMD metric values of the three registries with the three different data sets.
Finally, the exact positioning factor gives a constant value of 1 for all service registries and data sets, because all the searched services are exactly categorized.
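The RMD ordering stated above can be checked directly from the counts reported in Sections 4.2 and 4.3. This short sketch assumes RMD = a/A and that the reported figures are truncated (not rounded) to two decimal places, which is what reproduces 0.77 for 7/9; SR1's attribute counts are not given, so its reported value of 0.66 is used as-is.

```python
import math
from typing import Dict

REPORTED = {"SR1": 0.66}                   # SR1's (a, A) counts are not reported
COUNTS = {"SR2": (7, 9), "SR3": (14, 17)}  # (a, A) from Sections 4.2 and 4.3


def truncate2(x: float) -> float:
    """Truncate to two decimals (assumed reporting rule: 7/9 = 0.777... -> 0.77)."""
    return math.floor(x * 100) / 100


def rmd_by_registry() -> Dict[str, float]:
    """RMD per registry, computed as a / A where counts are available."""
    values = dict(REPORTED)
    for name, (a, A) in COUNTS.items():
        values[name] = truncate2(a / A)
    return values


if __name__ == "__main__":
    values = rmd_by_registry()
    print(values)  # {'SR1': 0.66, 'SR2': 0.77, 'SR3': 0.82}
    print(sorted(values, key=values.get))  # lowest to highest RMD
```

Truncating via `math.floor(x * 100)` works for these inputs, but binary floating point can misfire on values such as 0.29 (0.29 * 100 is slightly below 29); the standard-library `decimal` module avoids that if the helper is reused more generally.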


Figure 1. CSC Metric Value of various services compared with SR1, SR2 and SR3 for 1000 data range

Figure 2. CSC Metric Value of various services compared with SR1, SR2 and SR3 for 2000 data range

Figure 3. CSC Metric Value of various services compared with SR1, SR2 and SR3 for 3000 data range

Figure 4. RMD Metric Value of different services compared with SR1, SR2 and SR3 for 1000, 2000 and 3000 Records

Figure 5. Discovery Metrics values for 1000, 2000 and 3000 Data Entries in percentage

6. Conclusions

- Service discovery is a component of discoverability that helps services registered in a registry to be discovered. In this paper we have identified the factors that constitute the discovery component and proposed three metrics that evaluate those factors and, in turn, measure service discovery. The proposed metrics are validated experimentally using three different service registries. The results of each metric show how the discovery of services improved from SR1 to SR2 and SR3, and indicate that providing more service attributes makes discovery faster and more effective. The metrics identify the essential service attributes that service providers should consider when publishing services in a service registry, so that consumers' requirements are met when they search for relevant services. Our future work is to carry out the same experiment with real-time service registries. We also plan to devise a method for comparing our work with other existing approaches. In addition, we plan to define a metric suite for service discoverability; beyond the metric suite for discovery, this requires measures for interpretability. Future work will therefore focus on proposing features and measures for the interpretability component of discoverability.

References

| [1] | T. Erl, "Service-Oriented Architecture: Concepts, Technology, and Design", The Prentice Hall Service-Oriented Computing Series, 2006 |

| [2] | T. Erl, "SOA Principles of Service Design", The Prentice Hall Service-Oriented Computing Series, 2009 |

| [3] | Liam O’Brien, Paulo Merson, and Len Bass, "Quality Attributes for Service-Oriented Architectures", Proceedings of International Workshop on Systems Development in SOA Environments, IEEE 2007 |

| [4] | Zain Balfagih and Mohd Fadzil Hassan, “Quality Model for Web Services from Multi-Stakeholders' Perspective", Proceedings of International Conference on Information Management and Engineering, IEEE 2009 |

| [5] | Renuka Sindhgatta et al., "Measuring the Quality of Service Oriented Design", ICSOC-ServiceWave, LNCS 5900, pp. 485-499, Springer 2009 |

| [6] | OASIS. “Universal description, discovery and integration”, 2003 available at : https://www.oasis-open.org/ retrieved on January 5 2012 |

| [7] | OASIS, “Introduction to UDDI: important features and functional concepts,” 2004 available at : https://www.oasis-open.org/ retrieved on January 5 2012 |

| [8] | OASIS, “Web Services Dynamic Discovery (WS – Discovery) version 1.1,” 2009 available at : https://www.oasis-open.org/ retrieved on January 5 2012 |

| [9] | Si Won Choi, Jin Sun Her, and Soo Dong Kim, "Modeling QoS Attributes and Metrics for Evaluating Services in SOA Considering Consumers’ Perspective as the First Class Requirement" , Asia-Pacific Services Computing Conference, IEEE 2007 |

| [10] | Si Won Choi, Jin Sun Her, and Soo Dong Kim, "QoS Metrics for Evaluating Services from the Perspective of Service providers" , Proceedings of International conference on e-Business Engineering, IEEE 2007 |

| [11] | Si Won Choi and Soo Dong Kim, "A Quality Model for Evaluating Reusability of Services in SOA”, Proceedings of 10th IEEE Conference on E-Commerce Technology and the Fifth IEEE Conference on Enterprise Computing, E-Commerce and E-Services, 2008 |

| [12] | Chen Zhou, Liang-Tien Chia, and Bu-Sung Lee, "Semantics in Service Discovery and QoS Measurement", IT Professional: Technology Solutions for the Enterprise, IEEE, volume 7, issue 2, 2005 |

| [13] | A. Kozlenkov, V. Fasoulas, F. Sanchez, G. Spanoudakis, A. Zisman, "A Framework for Architecture-driven Service Discovery", Proceedings of IEEE and ACM SIGSOFT 28th International Conference on Software Engineering, 2006 |

| [14] | Andreas Wombacher, "Evaluation of Technical Measures for Workflow Similarity Based on a Pilot Study", R. Meersman, Z. Tari et al. (Eds.): OTM 2006, LNCS 4275, pp. 255–272, Springer 2006 |

| [15] | Ruben Lara, Miguel Angel Corella, Pablo Castells, “A flexible model for service discovery on the Web, Semantic Web Services with WSMO”, Special Issue on Semantic Matchmaking and Resource Retrieval, International Journal of Electronic Commerce, Volume 12, Number 2, Winter 2007-2008, Pages 11-41 |

| [16] | Benjamin Kanagwa and Agnes F. N. Lumaala, "Discovery of Services Based on WSDL Tag Level Combination of Distance Measures", International Journal of Computing and ICT Research, Vol. 5, Special Issue, December 2011 |

| [17] | R. Deepa, S. Swamynathan, "A Service Discovery Model for Mobile Ad hoc Networks", Proceedings of International Conference on Recent Trends in Information, Telecommunication and Computing, IEEE 2010 |

| [18] | Eyhab Al-Masri and Qusay H. Mahmoud, "Discovering the Best Web Service: A Neural Network-based Solution", Proceedings of the International Conference on Systems, Man, and Cybernetics, IEEE 2009 |

| [19] | Bensheng Yun, "A New Framework for Web Service Discovery Based on Behavior", Proceedings of Asia-Pacific Services Computing Conference, IEEE 2010 |

| [20] | Yu-Huai Tsai, San-Yih Hwang, Yung Tang, "A Hybrid Approach to Automatic Web Services Discovery", Proceedings of International Joint Conference on Services Sciences, IEEE 2011 |

| [21] | Hong-Linh Truong et al., "On Identifying and Reducing Irrelevant Information in Service Composition and Execution", WISE, LNCS 6488, pp. 52–66, Springer 2010 |

| [22] | Youngkon Lee, “Web Services Registry implementation for Processing Quality of Service”, Proceedings of International Conference on Advanced Language Processing and Web Information Technology IEEE 2008 |

| [23] | Yannis Makripoulias et al., "Web Service Discovery Based on Quality of Service", Proceedings of International Conference on Computer Systems and Applications, IEEE 2006 |

| [24] | Eyhab Al-Masri and Qusay H. Mahmoud, "Toward Quality Driven Web Service Discovery", IT Professional: Technology Solutions for the Enterprise, IEEE, volume 10, issue 3, 2008 |

| [25] | http://www.infoq.com/articles/rest-discovery-dns - Retrieved on February 2012 |

| [26] | http://support.sas.com/rnd/javadoc/93/Foundation/com/sas/services/discovery/ServiceTemplate.html - Retrieved on February 2012 |

| [27] | http://xmpp.org/extensions/xep-0030.html- Retrieved on February 2012 |

| [28] | http://docs.oracle.com/cd/B31017_01/integrate.1013/b31008/wsil.htm- Retrieved on February 2012 |

| [29] | Kyriakos Kritikos and Dimitris Plexousakis, "Semantic QoS Metric Matching", Proceedings of the European Conference on Web Services (ECOWS'06), IEEE 2006 |

| [30] | Michael Rambold, Holger Kasinger, Florian Lautenbacher and Bernhard Bauer, “Towards Autonomic Service Discovery – A Survey and Comparison”, Proceedings of International Conference on Services Computing, IEEE 2009 |

| [31] | Daniel Schall, Florian Skopik, and Schahram Dustdar, "Expert Discovery and Interactions in Mixed Service-Oriented Systems", IEEE Transactions on Services Computing, 2011 |

| [32] | Elena Meshkova, Janne Riihijärvi, Marina Petrova, Petri Mähönen, "A survey on resource discovery mechanisms, peer-to-peer and service discovery frameworks", Computer Networks 52 (2008) 2097–2128, Elsevier |

| [33] | Ahmed Al-Moayed and Bernhard Hollunder, "Quality of Service Attributes in Web Services", Proceedings of Fifth International Conference on Software Engineering Advances, IEEE 2010 |

| [34] | Ali ShaikhAli, Omer F. Rana, Rashid Al-Ali, David W. Walker, " UDDIe: An Extended Registry for Web Services", Proceedings of the Symposium on Applications and the Internet Workshops, IEEE 2003 |

| [35] | Marc Oriol Hilari, "Quality of Service (QoS) in SOA Systems. A Systematic Review", Master Thesis 2009 available at : http://upcommons.upc.edu/pfc/bitstream/2099.1/7714/1/Master%20thesis%20-%20Marc%20Oriol.pdf- Retrieved on February 2012 |

| [36] | Bill Inmon, "Making the SOA Implementation Successful", published in TDAN.com, 2006 available at : http://www.tdan.com/view-articles/4593 retrieved on February 2012 |

| [37] | Rickland Hollar, "Moving Toward the Zero Latency Enterprise", SOA World Magazine Article, 2003 available at : http://soa.sys-con.com/node/39849 retrieved on February 2012 |

| [38] | SOA and Web Services: The Performance Paradox- white paper, tech web digital library, 2007 retrieved on February 2012 |

| [39] | http://help.eclipse.org/indigo/index.jsp?topic=/org.eclipse.jst.ws.consumption.ui.doc.user/tasks/tuddiexp.html - Retrieved on February 2012 |

| [40] | http://www.membrane-soa.org/soa-registry/ - Retrieved on February 2012 |

| [41] | Stefan Dietze et al., "Exploiting Metrics for Similarity-based Semantic Web Service Discovery", International Conference on Web Services, IEEE 2009 |

| [42] | Tao Gu, Hung Keng Pung, Jian Kang Yao, "Towards a flexible service discovery", Journal of Network and Computer Applications 28 (2005) 233–248, Elsevier |

| [43] | James E. Powell et al., "A Semantic Registry for Digital Library Collections and Services", doi:10.1045/november2011-powell, D-Lib Magazine Article, Retrieved on February 2012 |

| [44] | Minghui Wu, Fanwei Zhu, Jia Lv, Tao Jiang, Jing Ying, "Improve Semantic Web Services Discovery through Similarity Search in Metric", Proceedings of International Symposium on Theoretical Aspects of Software Engineering, IEEE 2009 |
