Science and Technology

p-ISSN: 2163-2669 e-ISSN: 2163-2677

2016; 6(4): 89-92

doi:10.5923/j.scit.20160604.01

Accuracy of Machine Learning Algorithms in Detecting DoS Attacks Types

Noureldien A. Noureldien, Izzedin M. Yousif

Department of Computer Science, University of Science and Technology, Omdurman, Sudan

Correspondence to: Noureldien A. Noureldien, Department of Computer Science, University of Science and Technology, Omdurman, Sudan.


Copyright © 2016 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Attaining high prediction accuracy in detecting anomalies in network traffic is a major goal in designing machine learning algorithms and in building Intrusion Detection Systems. One of the major network attack classes is the Denial of Service (DoS) class, which contains various attack types such as Smurf, Teardrop, Land, Back and Neptune. This paper examines the accuracy of a set of selected machine learning algorithms in detecting different DoS attack types. The algorithms belong to different supervised techniques, namely PART, BayesNet, IBK, Logistic, J48, Random Committee and InputMapped. The experimental work is carried out using the NSL-KDD dataset and WEKA as a data mining tool. The results show that the best algorithm for detecting the Smurf attack is Random Committee, with an accuracy of 98.6161%, and the best algorithm for detecting the Neptune attack is PART, with an accuracy of 98.5539%. On average, PART is the best algorithm for detecting DoS attacks, while InputMapped is the worst.

Keywords: DoS Detection, DoS Attacks, NSL-KDD, Machine Learning Algorithms, WEKA

Cite this paper: Noureldien A. Noureldien, Izzedin M. Yousif, Accuracy of Machine Learning Algorithms in Detecting DoS Attacks Types, Science and Technology, Vol. 6 No. 4, 2016, pp. 89-92. doi: 10.5923/j.scit.20160604.01.


1. Introduction

Intrusion detection and prevention systems are commonly used as a major security tool to detect malicious attacks and protect networks from them. Intrusion detection systems are classified as either misuse or anomaly detection systems [1, 2]. Misuse detection systems, also known as signature-based systems, detect known attack signatures in the monitored resources, while anomaly detection systems identify attacks by detecting changes in the pattern of utilization or behavior of the system.

Anomaly-based intrusion detection systems are categorized into three basic techniques: statistical based, knowledge based and machine learning based [3, 4].

In machine learning based intrusion detection systems, machine learning algorithms are trained to learn system behavior. The learning technique is classified as either supervised or unsupervised. In supervised learning, the data used in training is normally labeled as normal or malicious. During training, the machine learning algorithm attempts to build a model that relates data features to their classes, so that it can predict the classes of new data, usually known as testing data.

Several machine learning based schemes have been applied. Some of the most important are Bayesian networks, Markov models, neural networks, fuzzy logic, genetic algorithms, and clustering and outlier detection [3].

Network attacks, on the other hand, are generally classified into four classes [5]: Probes, which are attacks that target information gathering; Denial of Service (DoS), which are attacks that either deny resource access to legitimate users or render the system unresponsive; Remote to Local (R2L), which are attacks in which an attacker bypasses security controls and executes commands on the system as a legitimate user; and User to Root (U2R), which are attacks in which a legitimate user bypasses security controls to gain root user privileges.

Of these four classes, DoS is known to be the most common and serious network attack. The DoS attack class comprises various attacks such as Smurf, Neptune, Land, Back, Teardrop, and TCP SYN flooding.

Accordingly, building intrusion detection systems for DoS attacks has become an active research area, and many machine learning based anomaly intrusion detection systems have been proposed. To compare the detection accuracy of these systems, researchers compare the detection accuracy of the machine learning algorithms deployed at the heart of these systems.

Most of the research work in the literature is centered on examining the performance of machine learning algorithms in detecting DoS attacks as a class rather than focusing on a specific DoS attack type. The possibility that one machine learning algorithm may outperform other algorithms in detecting a specific DoS attack type is the motivation of this work.

In this paper, we provide a comprehensive set of simulation experiments to evaluate the performance of different machine learning algorithms in detecting different types of DoS attacks.

The rest of this paper is organized as follows. Section 2 presents related work. Section 3 explains the experimental environment. Section 4 shows the experimental results, and Section 5 draws conclusions and outlines future work.
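As a rough illustration of the supervised setting described in this introduction, the sketch below trains nothing more than a nearest-neighbor rule on labeled records and uses it to predict the class of a new record. It is not the WEKA implementation used in the experiments; the function name `nearest_neighbor_predict`, the two-feature records and the labels are hypothetical and chosen only to show the idea of learning from labeled data.

```python
import math


def nearest_neighbor_predict(train, query):
    """Return the label of the training record closest to `query`.

    `train` is a list of (features, label) pairs and distance is Euclidean.
    This is a simplified stand-in for an instance-based learner.
    """
    best_label = None
    best_dist = math.inf
    for features, label in train:
        dist = math.dist(features, query)
        if dist < best_dist:
            best_dist, best_label = dist, label
    return best_label


# Hypothetical labeled training records (not taken from any dataset).
training_data = [
    ((0.1, 0.2), "normal"),
    ((0.2, 0.1), "normal"),
    ((0.9, 0.8), "attack"),
    ((0.8, 0.9), "attack"),
]

# A new, unlabeled record plays the role of testing data.
predicted = nearest_neighbor_predict(training_data, (0.85, 0.75))
```

Here the new record lies closest to the records labeled `attack`, so that label is predicted. Real classifiers such as those evaluated in this paper build far richer models, but the train-then-predict flow is the same.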

2. Related Work

Machine learning based systems use machine learning algorithms, or classifiers, to learn normal system behavior and build models that help in classifying new traffic. Machine learning techniques are based on establishing an explicit or implicit model that enables the analyzed patterns to be categorized.

The search for an optimal machine learning based detection system has directed research toward examining the performance of a single machine learning algorithm, or of multiple algorithms, across all four major attack categories rather than on a single attack category.

G. Meera Gandhi [6] examined the performance of four supervised machine learning algorithms in detecting attacks in the four attack classes: DoS, R2L, Probe, and U2R. The results indicate that the C4.5 decision tree classifier outperforms the other three classifiers (Multilayer Perceptron, Instance Based Learning and Naïve Bayes) in prediction accuracy.

Nguyen and Choi evaluated a comprehensive set of machine learning algorithms on the KDD'99 dataset to detect attacks in the four attack classes [7].

Abdeljalil and Mara [8] compared the performance of three machine learning algorithms: Decision Tree (J48), Neural Network and Support Vector Machine. The algorithms were tested on the detection rate, false alarm rate and accuracy for four categories of attacks. From the experiments they found that the Decision Tree (J48) algorithm outperformed the other two algorithms.

Sabhnani and Serpen assessed the performance of a comprehensive set of machine learning algorithms on the KDD'99 Cup intrusion detection dataset [9]. Their simulation results demonstrated that, for a given attack category, certain classifier algorithms performed better.

Zadsr and Daved [10] compared the performance of two algorithms in detecting the SYN flooding attack: an adaptive threshold algorithm and a particular application of the cumulative sum (CUSUM) algorithm for change point detection.

Yogendra and Upendra [11] evaluated the performance of the J48, BayesNet, OneR, and NB algorithms. They concluded that J48 is the best algorithm, with a high true positive rate (TPR) and a low false positive rate (FPR).

Unlike the above studies, our work concentrates on examining the detection accuracy of machine learning algorithms on different DoS attacks, to determine which algorithm is better for a specific DoS attack.
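Several of the studies above report a true positive rate (detection rate) and a false positive rate (false alarm rate). The sketch below shows how these two rates are commonly computed from actual and predicted labels, assuming a binary labeling in which `"attack"` is the positive class. The function name `detection_rates` and the sample labels are hypothetical and do not come from any of the cited papers.

```python
def detection_rates(actual, predicted, positive="attack"):
    """Return (TPR, FPR) for paired lists of actual and predicted labels."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # attack correctly flagged
        elif a != positive and p == positive:
            fp += 1  # normal traffic flagged as attack (false alarm)
        elif a == positive:
            fn += 1  # attack missed
        else:
            tn += 1  # normal traffic correctly passed
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Hypothetical labels for eight records.
actual = ["attack", "attack", "attack", "normal",
          "normal", "normal", "normal", "attack"]
predicted = ["attack", "attack", "normal", "normal",
             "attack", "normal", "normal", "attack"]

tpr, fpr = detection_rates(actual, predicted)
```

With these sample labels, three of the four attacks are detected and one of the four normal records raises a false alarm, so the TPR is 0.75 and the FPR is 0.25.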

3. Experimental Environment

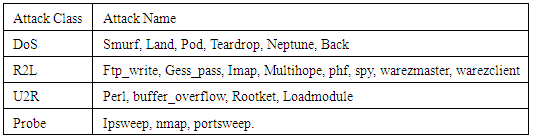

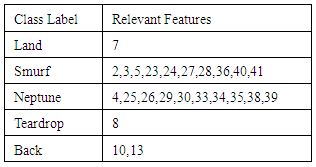

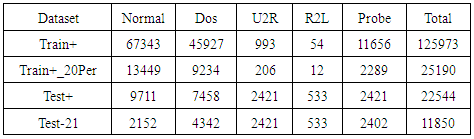

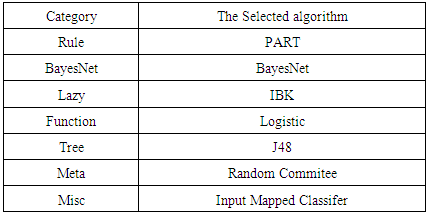

This section discusses the dataset used in the experiments and the measure used to evaluate the algorithms' performance. All experiments were performed on a laptop running the Windows 7 Ultimate operating system, with an Intel® Atom™ CPU N2700 processor and 1.00 GB of RAM.

The KDD'99 Cup dataset has been widely used for the evaluation of anomaly detection methods. KDD'99 was prepared and built from the data captured in the DARPA'98 IDS evaluation program [12, 13]. The KDD dataset is divided into labeled and unlabeled records; labeled records are either normal or an attack. Each labeled record consists of 41 attributes or features [14].

KDD'99 contains different types of attack classes. Each attack type is recognized by a set of features. Table (1) shows the attack classes in the KDD'99 dataset, and Table (2) shows the most relevant features of each DoS attack type in KDD'99 [5].
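To make the record structure concrete, the following sketch parses KDD-style records and keeps only the normal records and those of a single DoS attack type. It rests on several assumptions that the text does not state: that each record is one comma-separated line, that the label follows the 41 features, and that a trailing period on the label (if any) can be stripped. The names `parse_record` and `select_attack_type` and the all-zero sample records are hypothetical, and this is not necessarily how the authors prepared their data.

```python
NUM_FEATURES = 41  # per the text, each labeled record has 41 attributes


def parse_record(line):
    """Split one comma-separated record into (features, label).

    Assumption: the label is the field immediately after the 41 features.
    """
    fields = line.strip().split(",")
    if len(fields) < NUM_FEATURES + 1:
        raise ValueError("record has too few fields")
    features = fields[:NUM_FEATURES]
    label = fields[NUM_FEATURES].rstrip(".").lower()
    return features, label


def select_attack_type(lines, attack_type):
    """Keep records labeled 'normal' or the given attack type."""
    wanted = {"normal", attack_type.lower()}
    return [rec for rec in map(parse_record, lines) if rec[1] in wanted]


# Hypothetical records: 41 placeholder feature values plus a label.
placeholder = ",".join(["0"] * NUM_FEATURES)
sample_lines = [
    placeholder + ",normal",
    placeholder + ",smurf",
    placeholder + ",neptune",
    placeholder + ",smurf.",
]

smurf_subset = select_attack_type(sample_lines, "smurf")
```

In this sample, `smurf_subset` holds the normal record and the two Smurf records, while the Neptune record is dropped. A per-attack subset of this kind is one way to train and test a classifier against a single DoS attack type.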


4. Results

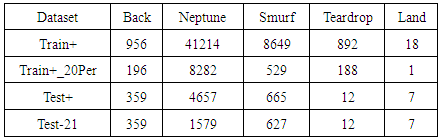

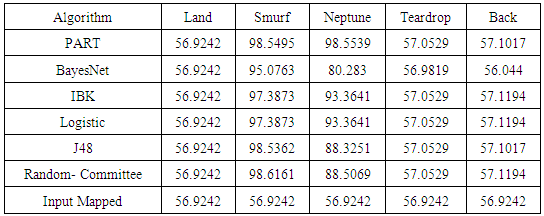

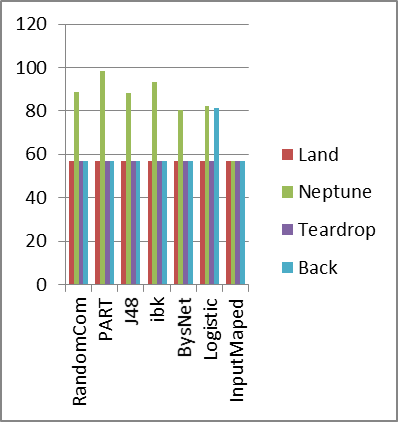

Table (6) and Fig (1) summarize the testing experiments of the algorithms against the different types of attacks. The percentage of correctly classified instances is reported.

From Table (6) we deduce that:

1- All algorithms perform poorly and equally in detecting the Land, Teardrop and Back attacks. This is because these attacks are recognized by only one or two features and have very few records in the training and testing datasets. The small number of features and records in the dataset therefore conceals the individual characteristics of the classification algorithms.

2- Random Committee is the best algorithm for detecting the Smurf attack, with 98.6161% accuracy. Its lead over PART (98.5495%) and J48 (98.5362%) is insignificant, at less than 0.1 percentage points. PART is the best algorithm for detecting the Neptune attack, with 98.5539% accuracy, a substantial margin of roughly 10 percentage points over Random Committee (88.5069%) and J48 (88.3251%).

3- On average, PART is the best algorithm for detecting DoS attacks and InputMapped is the worst.
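The reported measure, the percentage of correctly classified instances per attack type, and the average across attack types can be sketched as follows. The function names `accuracy_by_attack` and `mean_accuracy` and the sample results are hypothetical; the sample values are illustrative only and are not the paper's data.

```python
from collections import defaultdict


def accuracy_by_attack(results):
    """Return {attack_type: percent correctly classified}.

    `results` is an iterable of (attack_type, actual, predicted) triples,
    where attack_type names the experiment the record belongs to.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for attack_type, actual, predicted in results:
        total[attack_type] += 1
        if actual == predicted:
            correct[attack_type] += 1
    return {t: 100.0 * correct[t] / total[t] for t in total}


def mean_accuracy(per_attack):
    """Unweighted average of the per-attack accuracies."""
    return sum(per_attack.values()) / len(per_attack)


# Hypothetical test outcomes for one classifier.
sample_results = [
    ("smurf", "smurf", "smurf"),
    ("smurf", "normal", "normal"),
    ("smurf", "smurf", "normal"),
    ("smurf", "normal", "normal"),
    ("neptune", "neptune", "neptune"),
    ("neptune", "normal", "neptune"),
]

per_attack = accuracy_by_attack(sample_results)
average = mean_accuracy(per_attack)
```

Note that the average here is unweighted, so an attack type with very few records counts as much as one with many. Whether the paper's average is weighted by record count is not stated, so this choice is an assumption.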

Figure (1). Algorithms Detection Performance

5. Conclusions and Recommendations for Future Work

The experimental work in this paper shows that machine learning algorithms perform differently in detecting DoS attacks, and that their performance is directly affected by the number of attack features and records in the testing dataset.

On average, PART is the best algorithm to implement in DoS attack intrusion detection systems, while InputMapped is the worst.

Our future work is to build intrusion detection systems using different machine learning algorithms and to benchmark these systems using various DoS attacks.
