
Science and Technology

p-ISSN: 2163-2669 e-ISSN: 2163-2677

2012; 2(6): 152-155

doi: 10.5923/j.scit.20120206.02

How to Apply Real Bat and Ball into Virtual Baseball Contents

Jong-il Park¹, Soo Hong Kim²

¹Dep’t of Computer and Information Telecommunication Engineering, Sangmyung University, Seoul, 110-743, South Korea

²Dep’t of Computer Software Engineering, Sangmyung University, Cheonan, 330-720, South Korea

Correspondence to: Jong-il Park, Dep’t of Computer and Information Telecommunication Engineering, Sangmyung University, Seoul, 110-743, South Korea.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Using human behaviours and reactions as a way of interacting with a virtual world is a very challenging problem. An indoor baseball simulation system is a good candidate for combining real equipment and actions with virtual content. As an experimental approach to breaking the barrier between the virtual game space and real human behaviour, we designed an experiment on seamless gaming between a 3D virtual game space and the player's real behavioural space, in which real balls and bats are augmented with online baseball content through computer vision and sensor technologies. The Simulation Engine, the key part of our proposal, consists of six components: Pitch Recognizer, Toss Controller, Swing Detector, Vehicle Recognizer, Vehicle Tracker and Trajectory Calculator. This approach, which uses human behaviour as an input event, will open a new field of digital interfaces.

Keywords: Computer Vision, Augmented Reality, Immersion to Game

Cite this paper: Jong-il Park, Soo Hong Kim, "How to Apply Real Bat and Ball into Virtual Baseball Contents", Science and Technology, Vol. 2 No. 6, 2012, pp. 152-155. doi: 10.5923/j.scit.20120206.02.


1. Introduction

We are exploring the development of interfaces that can work with missing intelligence and aesthetics [1,2,3]. To do this, an interface must understand our metaphors, solicit information on its own, acquire experience, improve over time, and be intelligent in context. In a context-sensitive indoor baseball game, the player's interaction with the simulation system must be augmented so that the computer is as seamless as possible, which we pursue by using a real ball to interact with the virtual application on the screen. Such interfaces may augment the actions that users are able to perform in the real world, both in the quantity of tasks performed and in their quality [4]. In particular, an indoor baseball simulation system needs a real ball and bat for interaction with its virtual application, both to reflect the user's natural behaviour and to immerse the user in the game. As an experimental approach to breaking the barrier between the virtual game space and real human behaviour, we designed an experiment on seamless gaming between a 3D virtual game space and the player's real behavioural space, in which real balls and bats are combined with online baseball content through computer vision and sensor technologies.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 introduces our augmented indoor baseball simulation game interface, including the conceptual system structure and the mechanism that synchronizes the ball pitched by the virtual pitcher with the ball tossed by the tossing machine. Finally, Section 4 concludes with a note on the current status of our project and future work.

2. Related Work

A variety of recent research efforts have explored computationally augmented interfaces that emphasize interaction through human behaviour and the human senses.

2.1. Camera-based Approach

The MagicMouse allows the user to operate within both 2D and 3D environments simply by moving and rotating a fist. Position and rotation about the X, Y and Z axes are supported, allowing full six-degree-of-freedom input. This is achieved by having the user wear a glove to which a square marker is attached; the translation and rotation of the hand are tracked by a camera attached to the computer, using the ARToolKit software library [5]. Wagner and Schmalstieg use a PDA camera to recover the position of specific markers placed in the real environment [6]. Rohs uses visual codes for several interaction tasks with camera-equipped cell phones [7]. The IPARLA project designed a new 3-DOF interface adapted to the characteristics of handheld computers. This interface tracks the movement of a target that the user holds behind the screen by analysing the video stream of the handheld computer's camera; the position of the target is inferred directly from the color codes printed on it, using an efficient algorithm [8].

2.2. Sensor-based Approach

The Affective Computing Group at MIT works on sensing, recognizing, understanding, and synthesizing human behaviour patterns. The group has discussed the use of biometric sensors with wearable computers; such sensors allow new interactions between the wearable and the wearer, based on affect detection, prediction, and synthesis [9].

2.3. Object Tracking

Many techniques have been devised to recognize and track an object, including edge-based, optical-flow-based, texture-based and hybrid methods. The method in [9] uses both edges and optical flow without requiring a known motion model, which matters because most AR applications have no such model. Texture-based feature extraction and optical-flow tracking were also combined in a multithreaded manner in [10].

2.4. Sports Simulation

Like our approach, Visual Baseball captures 2000 frames per second and determines the exact trajectory, direction and speed of an object; however, it supports only pitcher mode, not batter mode [11]. Our approach is the world's first attempt at a batting simulation that combines camera and sensor technology and is synchronized with a tossing machine, the virtual content and the batter's swing.

3. Indoor Baseball Simulator

3.1. Indoor Baseball Environment

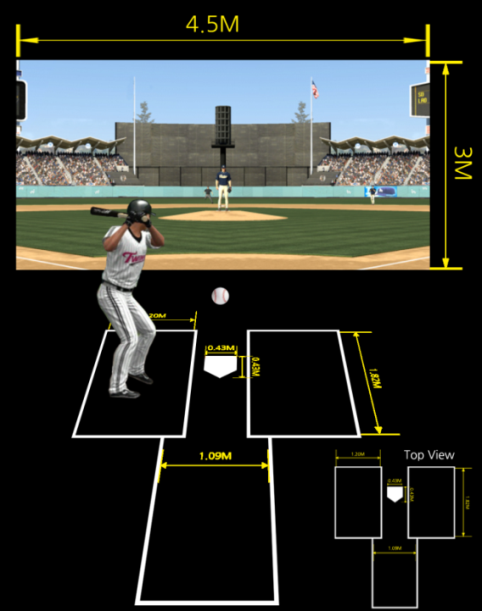

| Figure 1. Indoor baseball environment |

3.2. Simulator System Flow

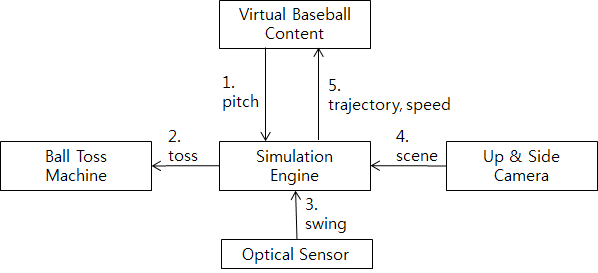

When a batter enters the batter's box, the pitcher in the virtual content pitches a ball. After the Simulation Engine captures the ball's attributes, it sends a 'toss' command to the Toss Machine. As soon as the Optical Sensor detects the batter's swing, the High Speed Camera captures more than 2000 frames per second. After analysing the frames and calculating the result, the Simulation Engine sends the ball's trajectory and speed to the Virtual Baseball Contents. The 3D content then renders the hit ball and the reactions of defenders and spectators, with appropriate sounds. This flow is shown in Figure 2.

| Figure 2. Simulation Flow |
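The flow in Figure 2 is essentially a fixed sequence of stages driven by events. The Python sketch below models it as a small state machine so that out-of-order events (for example, a swing before any toss) are rejected. The stage and event names, and the `no_swing` branch that returns to waiting for the next pitch, are hypothetical labels chosen for illustration, not identifiers from the authors' system.

```python
from enum import Enum, auto
from typing import Dict, Iterable, Tuple


class Stage(Enum):
    WAITING_FOR_BATTER = auto()
    BATTER_READY = auto()      # batter is in the batter's box
    PITCHED = auto()           # virtual pitcher threw; engine captured ball type and speed
    TOSSED = auto()            # 'toss' command sent to the Toss Machine
    SWING_DETECTED = auto()    # Optical Sensor saw a swing; high-speed camera is capturing
    RESULT_SENT = auto()       # trajectory and speed delivered to the virtual contents


# (current stage, event) -> next stage; event names are hypothetical.
TRANSITIONS: Dict[Tuple[Stage, str], Stage] = {
    (Stage.WAITING_FOR_BATTER, "batter_entered"): Stage.BATTER_READY,
    (Stage.BATTER_READY, "virtual_pitch"): Stage.PITCHED,
    (Stage.PITCHED, "toss_sent"): Stage.TOSSED,
    (Stage.TOSSED, "swing"): Stage.SWING_DETECTED,
    (Stage.TOSSED, "no_swing"): Stage.BATTER_READY,   # assumption: wait for the next pitch
    (Stage.SWING_DETECTED, "trajectory_ready"): Stage.RESULT_SENT,
    (Stage.RESULT_SENT, "contents_done"): Stage.BATTER_READY,
}


def step(stage: Stage, event: str) -> Stage:
    """Return the next stage, or raise ValueError for an event that arrives out of order."""
    try:
        return TRANSITIONS[(stage, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not valid in stage {stage.name}") from None


def run(events: Iterable[str], stage: Stage = Stage.WAITING_FOR_BATTER) -> Stage:
    """Feed a sequence of events through the state machine and return the final stage."""
    for event in events:
        stage = step(stage, event)
    return stage
```

A table-driven design keeps the allowed order of pitch, toss, swing and result in one place, which makes it easy to check that, for instance, the camera is only triggered after a toss has actually been commanded.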

3.3. Simulation Engine

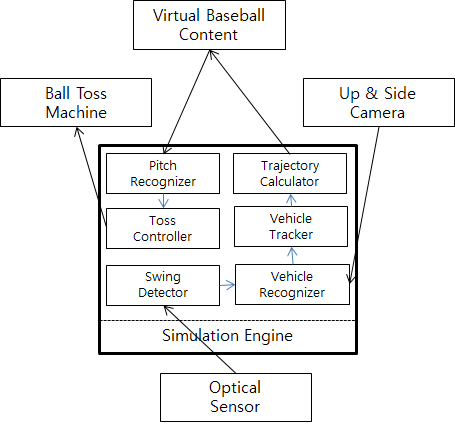

Our Simulation Engine consists of six components, as shown in Figure 3:

1. Pitch Recognizer: receives the type and speed of the ball from the virtual pitcher.
2. Toss Controller: sends the type and speed of the ball to the portable toss machine through infrared communication.
3. Swing Detector: detects the batter's swing through an infrared sensor at one end of home plate.
4. Vehicle Recognizer: analyses the scene from the high-speed camera and finds the hit ball.
5. Vehicle Tracker: tracks the movement of the hit ball and sends its position, in a predefined coordinate system, to the Trajectory Calculator. We use our Color Blob Matching algorithm [12] to track the vehicle (the hit ball).
6. Trajectory Calculator: computes the trajectory and speed of the hit ball with our algorithm and sends them to the virtual contents.

| Figure 3. Simulation Engine |
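The Color Blob Matching algorithm of [12] is not described here, so the following is only a generic stand-in showing what the Vehicle Recognizer and Vehicle Tracker do in principle: threshold each frame for the ball's color, take the centroid of the matching pixels, time-stamp it using the camera frame rate (2000 fps, as stated above), and estimate the image-plane velocity. The color range, the frame representation and all function names are assumptions; a real system would also map pixel positions into the predefined field coordinates through camera calibration, which is not modeled.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B), 0-255
Frame = List[List[Pixel]]             # frame[row][col]
Point = Tuple[float, float, float]    # (time in s, x in px, y in px)


def blob_centroid(frame: Frame, lo: Pixel, hi: Pixel) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of all pixels whose R, G and B lie inside [lo, hi]; None if none match."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if all(l <= c <= h for c, l, h in zip(pixel, lo, hi)):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def track(frames: Sequence[Frame], lo: Pixel, hi: Pixel, fps: float = 2000.0) -> List[Point]:
    """Return (time, x, y) for every frame in which the ball-colored blob is found."""
    points = []
    for i, frame in enumerate(frames):
        centroid = blob_centroid(frame, lo, hi)
        if centroid is not None:
            points.append((i / fps, centroid[0], centroid[1]))
    return points


def image_velocity(points: Sequence[Point]) -> Tuple[float, float]:
    """Average image-plane velocity (px/s) between the first and last detections."""
    if len(points) < 2:
        raise ValueError("need at least two detections")
    (t0, x0, y0), (t1, x1, y1) = points[0], points[-1]
    dt = t1 - t0
    return ((x1 - x0) / dt, (y1 - y0) / dt)
```

At 2000 fps consecutive frames are only 0.5 ms apart, so even a fast hit ball moves a small number of pixels per frame; this is what makes a simple per-frame centroid workable as a tracking baseline.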

3.3.1. Trajectory Calculation

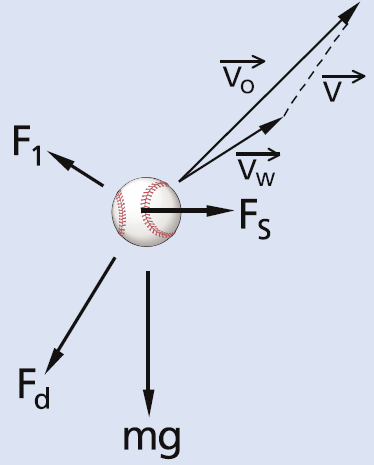

As shown in Figure 4, three components of aerodynamic force act on the baseball: the drag force, $F_D$; the lift force, $F_L$; and the side force, $F_S$, which acts lateral to the ball's flight path. The drag component is the largest of the three. The lift and side forces are caused by asymmetries in the stitch pattern and by the so-called Magnus force, $F_M$, which results from ball spin.

| Figure 4. Aerodynamic Force Acting on the Baseball |
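The Trajectory Calculator's own algorithm is not given, so the sketch below only illustrates how the forces in Figure 4 can be turned into a trajectory: it integrates gravity, drag $F_D = \tfrac{1}{2}\rho A C_D |v|^2$ (opposite the velocity) and a Magnus lift $F_M = \tfrac{1}{2}\rho A C_L |v|^2$ (along spin axis × velocity) with a semi-implicit Euler step. The air density, ball size and mass, and the coefficients `cd` and `cl` are illustrative assumptions, and the stitch-induced side force is ignored.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]

# Illustrative constants (assumptions, not values from the paper).
RHO = 1.2          # air density, kg/m^3
MASS = 0.145       # baseball mass, kg
RADIUS = 0.0366    # baseball radius, m
AREA = math.pi * RADIUS ** 2
G = 9.81           # gravitational acceleration, m/s^2


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def acceleration(v: Vec, spin_axis: Vec, cd: float, cl: float) -> Vec:
    """Gravity + drag (along -v) + Magnus lift (along spin_axis x v).

    spin_axis is a unit vector assumed perpendicular to v, so |spin_axis x v| = |v|
    and the lift magnitude is 0.5 * rho * A * cl * |v|^2.
    """
    speed = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    k = 0.5 * RHO * AREA / MASS
    lift_dir = cross(spin_axis, v)
    return tuple(
        -k * cd * speed * v[i] + k * cl * speed * lift_dir[i] - (G if i == 2 else 0.0)
        for i in range(3)
    )


def simulate(speed: float, launch_deg: float, spin_axis: Vec = (0.0, -1.0, 0.0),
             cd: float = 0.35, cl: float = 0.2, height: float = 1.0,
             dt: float = 1e-3) -> Tuple[float, float]:
    """Integrate until the ball returns to z = 0; return (carry distance in m, flight time in s).

    x points toward the outfield and z up; the default spin axis (0, -1, 0) is backspin.
    """
    theta = math.radians(launch_deg)
    pos = [0.0, 0.0, height]
    vel: Vec = (speed * math.cos(theta), 0.0, speed * math.sin(theta))
    t = 0.0
    while pos[2] >= 0.0 and t < 30.0:   # time cap guards against runaway loops
        a = acceleration(vel, spin_axis, cd, cl)
        vel = tuple(vel[i] + a[i] * dt for i in range(3))   # update velocity first
        pos = [pos[i] + vel[i] * dt for i in range(3)]      # then position (semi-implicit)
        t += dt
    return math.hypot(pos[0], pos[1]), t
```

For example, `simulate(40.0, 30.0)` gives the carry and hang time of a 40 m/s hit launched at 30 degrees under these assumed coefficients; with `cd=0` and `cl=0` the result reduces to the vacuum range $v_0^2 \sin 2\theta / g$, which is a convenient sanity check.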

3.4. Tossing Machine Synchronization

Our tossing machine has the following specifications and functions:
● Lightweight equipment that holds about 20 balls;
● Portable, so the user can place it in an appropriate position;
● Wireless control using infrared communication;
● Synchronization with the speed, direction and type of the virtually pitched ball.
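One part of synchronizing the real toss with the virtual pitch is timing: the real ball should reach the plate when the virtual ball does. The sketch below computes how long the Toss Controller would wait after the virtual release before commanding the toss. It assumes straight-line, constant-speed flight for both balls and uses the 18.44 m (60 ft 6 in) pitching distance as the virtual flight distance; the `TossCommand` fields and function names are hypothetical, and the infrared transport itself is not modeled.

```python
from dataclasses import dataclass

PITCH_DISTANCE_M = 18.44   # assumed virtual flight distance (60 ft 6 in)


@dataclass
class TossCommand:
    """Hypothetical payload the Toss Controller might send over infrared."""
    delay_s: float      # wait after the virtual release before tossing
    speed_kmh: float    # machine toss speed
    pitch_type: str     # e.g. "fastball", copied from the virtual pitch


def toss_delay(virtual_speed_kmh: float, machine_speed_kmh: float,
               machine_distance_m: float,
               pitch_distance_m: float = PITCH_DISTANCE_M) -> float:
    """Seconds to wait so both balls arrive together (constant-speed approximation)."""
    if virtual_speed_kmh <= 0 or machine_speed_kmh <= 0:
        raise ValueError("speeds must be positive")
    virtual_arrival_s = pitch_distance_m / (virtual_speed_kmh / 3.6)   # km/h -> m/s
    machine_flight_s = machine_distance_m / (machine_speed_kmh / 3.6)
    delay = virtual_arrival_s - machine_flight_s
    if delay < 0:
        raise ValueError("machine is too slow or too far away to stay in sync")
    return delay


def make_command(pitch_type: str, virtual_speed_kmh: float,
                 machine_speed_kmh: float, machine_distance_m: float) -> TossCommand:
    delay = toss_delay(virtual_speed_kmh, machine_speed_kmh, machine_distance_m)
    return TossCommand(delay_s=delay, speed_kmh=machine_speed_kmh, pitch_type=pitch_type)
```

For instance, a 144 km/h virtual pitch (40 m/s, about 0.461 s to the plate) combined with a machine 5 m away tossing at 72 km/h (0.25 s of flight) calls for a delay of roughly 0.211 s. A real portable tosser usually lobs the ball on an arc from the side, so the straight-line model would need to be replaced by the machine's measured flight time.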

3.5. Reaction of Virtual Contents

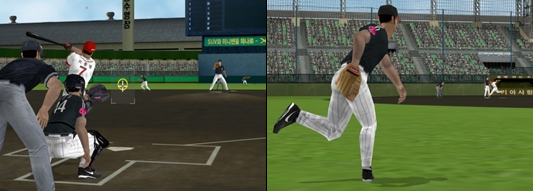

To strengthen the user's sense of response, our virtual baseball content reacts to the batter's swing and hit on the ball as follows, as shown in Figure 5:
● Real-time 3D rendering of the batter's swing and hit;
● Realistic reactions of the pitcher, catcher, umpire, defenders and spectators;
● Accurate rendering of the hit ball's trajectory and the defenders' movement;
● Sound effects tightly synchronized with the batter's swing and hit.

| Figure 5. Virtual Baseball Contents |

4. Conclusions

To break the barrier between the virtual game space and real human behaviour, we carried out an experiment on a camera- and sensor-based user interface for indoor baseball simulation that interacts with virtual baseball content. The experiment targets seamless gaming between a 3D virtual game space and the player's real behavioural space, in which real balls and bats are augmented with online baseball content through computer vision and sensor technologies. Our proposed Simulation Engine consists of six components: Pitch Recognizer, Toss Controller, Swing Detector, Vehicle Recognizer, Vehicle Tracker and Trajectory Calculator. Our approach is the world's first attempt at a batting simulation that combines camera and sensor technology and is synchronized with a tossing machine, the virtual content and the batter's swing. These efforts will make games friendlier and more immersive. We are still working on the following areas:
● Motion or gesture interfaces as new game interfaces;
● Social games through n-screen;
● Interaction mechanisms that make the real and virtual worlds seamless.
