
Science and Technology

p-ISSN: 2163-2669 e-ISSN: 2163-2677

2012; 2(5): 114-121

doi:10.5923/j.scit.20120205.02

Circular Hough Transform for Iris localization

Noureddine Cherabit, Fatma Zohra Chelali, Amar Djeradi

Speech communication and signal processing laboratory, Faculty of Electronic engineering and computer science University of Science and Technology Houari Boumedienne (USTHB), Box n :32 El Alia, 16111, Algiers, Algeria

Correspondence to: Noureddine Cherabit, Speech communication and signal processing laboratory, Faculty of Electronic engineering and computer science University of Science and Technology Houari Boumedienne (USTHB), Box n :32 El Alia, 16111, Algiers, Algeria.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

This article presents a robust method for detecting iris features in frontal face images based on the circular Hough transform. The application detects the circles surrounding the exterior iris pattern in a set of facial images in different color spaces. First, an edge detection technique is used to find the edges in the input image. Then the characteristic points of the circles are determined, after which the pattern of the iris is extracted. Good results are obtained in different color spaces.

Keywords: Hough Transform, Iris Detection, Face Recognition, Face Detection

Cite this paper: Noureddine Cherabit, Fatma Zohra Chelali, Amar Djeradi, Circular Hough Transform for Iris localization, Science and Technology, Vol. 2 No. 5, 2012, pp. 114-121. doi: 10.5923/j.scit.20120205.02.


1. Introduction

Identification of people is becoming increasingly important in today's networked society[1]. Biometrics is the branch of science in which human beings are identified by their behavioral or physical characteristics. Physical characteristics include the face, fingerprint, iris, retina, hand geometry and palm print, whereas behavioral characteristics include signature, gait and voice. Among these characteristics, iris recognition is gaining more attention because the iris of every person is unique and never changes during a person's lifetime[2].

A generalized iris recognition system consists of image acquisition, iris segmentation and localization (preprocessing), feature extraction and feature comparison (matching). Biometric personal identification using the iris requires accurate iris localization from an eye image[3].

Several researchers have implemented various methods for segmenting and localizing the iris. John Daugman[5] proposed one of the most practical and robust methodologies, which constitutes the basis of many functioning systems. He used an integro-differential operator to find both the inner and outer boundaries of the iris. Wildes[4] proposed a gradient-based binary edge map construction followed by a circular Hough transform for iris segmentation[2].

Almost all of these methods assume that the iris (outer boundary) and the pupil (inner boundary) share the same centre and that the iris is perfectly circular; both assumptions are incorrect in practice. Therefore, iris segmentation and localization from an acquired image leads to the loss of texture data near the pupil and/or the outer iris boundary. The effect is more serious when the iris is occluded[2].

In recent years, various methods of iris detection for gaze detection, applied to man-machine interfaces, have been proposed[1, 2, 3, 4]. However, most studies did not report the relationship between the accuracy rate of iris detection and the directions of the face and the eyes. In fact, the shape of facial parts not only differs from person to person, but also changes dynamically with the movement of the head, facial expressions, and so on. Also, when a person faces down, the eye regions tend to be dark, which makes it difficult to detect the iris in the face. Moreover, most studies rely on locating the eye region first: these methods extract the eye regions before detecting the irises.

An authentication system based on iris recognition is reputed to be the most accurate among all biometric methods because of its acceptance, reliability and accuracy. Ophthalmologists originally proposed that the iris of the eye might be used as a kind of optical fingerprint for personal identification. Their proposal was based on clinical results showing that every iris is unique and remains almost unchanged in clinical photographs. The human iris begins to form during the third month of gestation and is complete by the eighth month, though pigmentation continues into the first year after birth. It has been discovered that every iris is unique, since two people (even identical twins) have uncorrelated iris patterns[5], and that the pattern remains stable throughout life. It has been suggested in recent years that human irises might be as distinct as fingerprints for different individuals, leading to the idea that iris patterns may be used as unique identification features[11].

Research in the area of iris recognition has been receiving considerable attention, and a number of techniques and algorithms have been proposed over the last few years. A number of algorithms have been developed for iris localization; one of them is based on the Hough transform. An iris segmentation algorithm based on the circular Hough transform is applied in[6, 7, 8]. First, the Canny edge detection algorithm is applied. The eye image is represented by its edges, using two thresholds to bring out the transitions from pupil to iris and from iris to sclera. Then the circular Hough transform is applied to detect the inner and outer boundaries of the iris, that is, to deduce the radius and centre coordinates of the pupil and iris regions. In this operation, radius intervals are defined for the inner and outer circles. The circular Hough transform is applied starting from the upper left corner of the iris image, separately for the inner and the outer circle. Votes are accumulated in the Hough space for the parameters of circles passing through each edge point. Several candidate circle parameters may be found; the parameters with the maximum number of votes correspond to the centre coordinates[6]. After the centre coordinates are determined, the radius r of the inner circle is determined. The same procedure is applied to the outer circle to determine its centre coordinates and radius. Using the determined inner and outer radii, the iris region is detected. The Hough transform takes a long time to locate the boundaries of the iris[6].

The aim of this work is to realize an application which detects the iris pattern from an eye image. The software is based on detecting the circles surrounding the exterior iris pattern. Figure 1 shows an overview of the proposed method. Our work concerns iris detection by the geometric model "Hough transform" using the facial images of the “Fei-Fei Li” database[9]; 396 images of different subjects, with 24 frames per individual, are used.

The paper is organized as follows: Section 2 presents the state of the art for iris localization and recognition; Section 3 describes the circular Hough transform for known and unknown radius; Section 4 presents the algorithm applied for iris detection; results obtained for facial images are presented in Section 5. Conclusions are drawn in Section 6.

2. State of the art for Iris Localization

Methods such as the integro-differential operator, the Hough transform and active contour models are well-known techniques for iris localisation. These methods are described below, including their strengths and weaknesses[11].

2.1. Daugman's Integro-Differential Operator

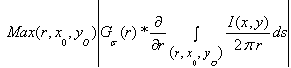

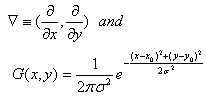

In order to localise an iris, Daugman proposed an integro-differential operator method. It assumes that the pupil and limbus are circular contours and operates as a circular edge detector. Detection of the upper and lower eyelids is also carried out with the integro-differential operator, by changing the contour search from a circular to a designed arcuate shape[11]. The integro-differential operator is defined as follows:

| (1) |

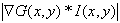

is a smoothing function controlled by σ that smoothes the image intensity for a more precise search[11].This method can result in false detection due to noise such as strong boundaries of upper and lower eyelids since it works only on a local scale.
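To make the operator in (1) more concrete, the following is a minimal discrete sketch, not Daugman's implementation. It assumes a grayscale image stored as a NumPy array, a user-supplied list of candidate centres and radii, and nearest-pixel sampling of the circular contour; the function names `circular_mean` and `integro_differential` are hypothetical.

```python
import numpy as np

def circular_mean(image, x0, y0, r, n_samples=64):
    """Mean intensity along the circle of radius r centred at (x0, y0)."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_samples, endpoint=False)
    xs = np.clip(np.rint(x0 + r * np.cos(theta)).astype(int), 0, image.shape[1] - 1)
    ys = np.clip(np.rint(y0 + r * np.sin(theta)).astype(int), 0, image.shape[0] - 1)
    return image[ys, xs].mean()

def integro_differential(image, centers, radii, sigma=1.0):
    """Return (score, x0, y0, r) maximising |G_sigma(r) * d/dr (circular mean)|."""
    radii = np.asarray(radii, dtype=float)
    # Discrete Gaussian kernel G_sigma, normalised to sum to 1.
    half = max(1, int(3 * sigma))
    k = np.arange(-half, half + 1)
    kernel = np.exp(-k ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    best = (-1.0, None, None, None)
    for x0, y0 in centers:
        # Normalised contour integral of I(x, y) for every candidate radius.
        means = np.array([circular_mean(image, x0, y0, r) for r in radii])
        deriv = np.gradient(means, radii)                 # partial derivative in r
        smooth = np.convolve(deriv, kernel, mode="same")  # smoothing by G_sigma
        i = int(np.argmax(np.abs(smooth)))
        if abs(smooth[i]) > best[0]:
            best = (float(abs(smooth[i])), x0, y0, float(radii[i]))
    return best
```

Because the search is exhaustive over the supplied centres and radii, a coarse grid of candidate centres is a natural way to keep the cost manageable in such a sketch.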

2.2. Hough Transform

The Hough transform is an algorithm presented by Paul Hough in 1962 for the detection of features of a particular shape, such as lines or circles, in digitized images[18]. The classic Hough transform is a standard algorithm for line and circle detection. It can be applied to many computer vision problems, as most images contain feature boundaries which can be described by regular curves. The main advantage of the Hough transform technique is that it is tolerant of gaps in feature boundary descriptions and is relatively unaffected by image noise, unlike edge detectors[17].

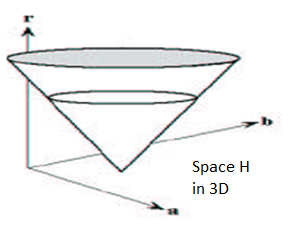

| Figure 1. General overview of Iris localization |

| (2) |

| (3) |

| (4) |

2.3. Discrete Circular Active Contours

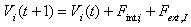

Ritter proposed an active contour model to localise the iris in an image. The model detects the pupil and limbus by activating and controlling the selected active contour using two defined forces: internal and external[11]. The internal forces are designed to expand the contour and keep it circular. This force model assumes that the pupil and limbus are globally circular, rather than locally, to minimise the undesired deformations due to peculiar reflections and dark patches near the pupil boundary. The contour detection process of the model is based on the equilibrium of the defined internal forces with the external forces. The external forces are obtained from the grey-level intensity values of the image and are designed to push the vertices inward. The movement of the contour is based on the composition of the internal and external forces over the contour vertices. Each vertex is moved between time t and (t+1) by

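$$V_i(t+1) = V_i(t) + F_i + G_i \tag{5}$$

where $V_i$ is the position of vertex i, $F_i$ is the internal force and $G_i$ is the external force.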

3. Hough Transform Algorithm

3.1. Principle of Hough Transform

In an image, a curve of any form can be defined by a set of points. There is generally a set of parameters that links these points through their spatial (coordinate) information in the image space. The curve is thus parameterized and can be modeled by a mathematical equation that relates the two sets (for example, the equation of a line or a circle).

Thus, the general principle of the Hough transform is a projection of the N-dimensional image space (denoted by I and indexed by i = 1: N) onto a parameter space of dimension M (denoted by H and indexed by j = 1: M). The two spaces are related by the mathematical model of the shape, so the objective is to find the parameters defining the shape to be detected. For example, a straight line is determined by the equation $y = mx + p$, where m represents the slope and p the intercept. Then the Hough transform is given by the equation $p = -mx + y$.

There are two kinds of Hough transform (depending on the formulation of the mathematical equation linking the two spaces):

-Transformation of 1 to m (one point of I is associated with m points of H).

-Transformation of m to 1 (m points of I are associated with one point of H).

In practice the Hough space H is discretized. The result of the Hough transform is stored in a two-dimensional table (in the case of the space (m, p)) named the Hough accumulator. Thus, each cell position $(m, p)$ has its own accumulator[10].
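The 1-to-m voting in a discretized (m, p) space can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `hough_lines_mp`, the slope grid and the intercept range are hypothetical choices, and the slope-intercept form cannot represent vertical lines.

```python
import numpy as np

def hough_lines_mp(points, m_values, p_min, p_max, n_p):
    """Vote in a discretized (m, p) accumulator for lines y = m*x + p."""
    acc = np.zeros((len(m_values), n_p), dtype=int)
    p_step = (p_max - p_min) / (n_p - 1)
    for x, y in points:
        for i, m in enumerate(m_values):
            p = y - m * x                          # Hough transform of the point (x, y)
            j = int(round((p - p_min) / p_step))   # nearest intercept cell
            if 0 <= j < n_p:
                acc[i, j] += 1
    return acc

# Ten points lying on the line y = 0.5*x + 3
m_values = np.linspace(-2.0, 2.0, 41)
points = [(x, 0.5 * x + 3.0) for x in range(10)]
acc = hough_lines_mp(points, m_values, p_min=-10.0, p_max=10.0, n_p=201)
i, j = np.unravel_index(np.argmax(acc), acc.shape)
m_hat = m_values[i]
p_hat = -10.0 + j * (20.0 / 200)
```

Each image point contributes one vote per slope cell, and collinear points all vote for the same (m, p) cell, which is why the line parameters appear as the accumulator maximum.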

3.2. Hough Transform for Circles Detection

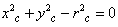

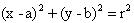

The Hough transform can be applied to detect the presence of a circular shape in a given image. Here it is used to locate the iris in the face[10]. The characteristic equation of a circle of radius r and center (a, b) is given by:

$$(x - a)^2 + (y - b)^2 = r^2 \tag{6}$$

| (7) |

. Two cases may be presented as described in Fig 2.

. Two cases may be presented as described in Fig 2. | Figure 2. Transformation of a point in circle[10] |

, the transformation for each point

, the transformation for each point  in space I yields a circle in space H having a center

in space I yields a circle in space H having a center  and radius R.

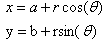

and radius R.  | Figure 3. Representation of HT for several points in circle[10] |

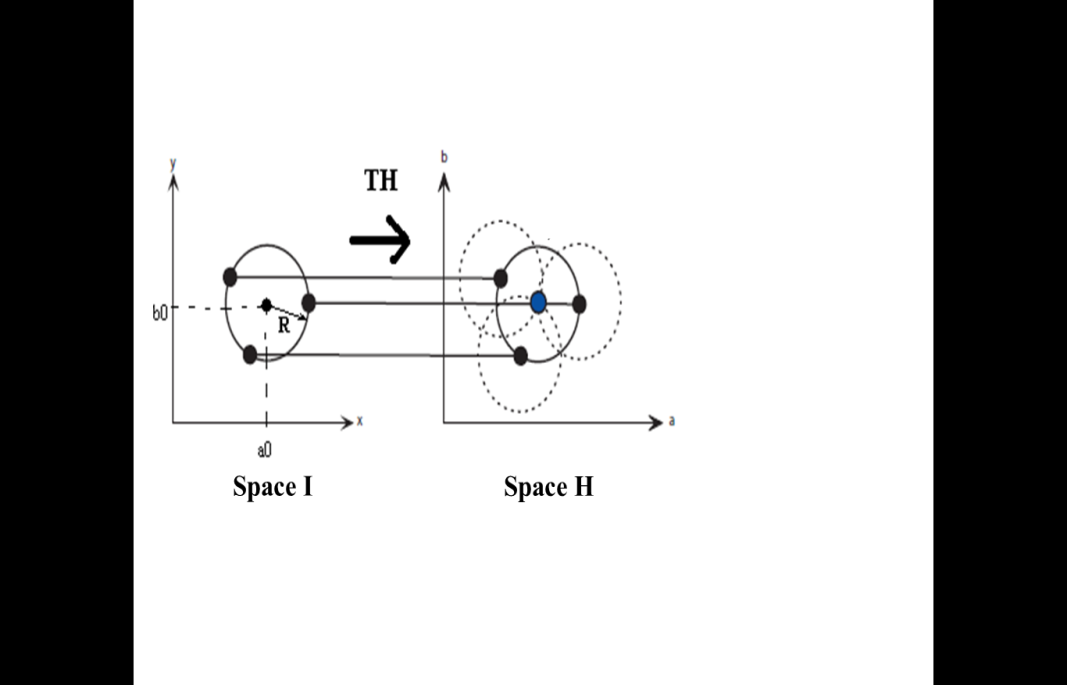

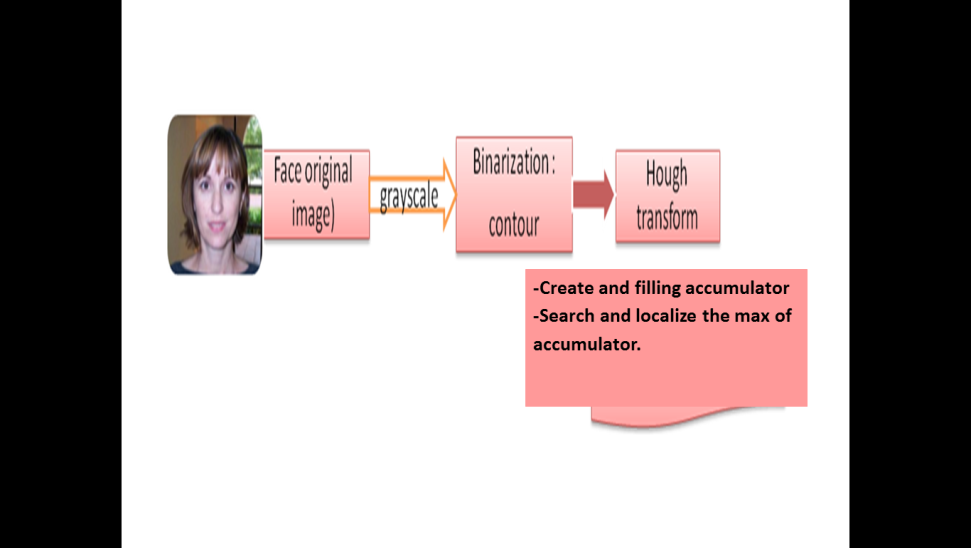

. This point is obtained by searching the maximum of the accumulator.-Case of unknown radius In this case, the work consists to find the triplet parameters

. This point is obtained by searching the maximum of the accumulator.-Case of unknown radius In this case, the work consists to find the triplet parameters  witch define the points of circle to find. The space will be in 3D.For each point

witch define the points of circle to find. The space will be in 3D.For each point  of the space I will match a cone in space H, as the radius r varied from 0 to a given value (figure 4). After transforming of all points of contour in the same way, the intersection will give a spherical surface corresponding to the maxim of accumulator. The area is characterized by a center

of the space I will match a cone in space H, as the radius r varied from 0 to a given value (figure 4). After transforming of all points of contour in the same way, the intersection will give a spherical surface corresponding to the maxim of accumulator. The area is characterized by a center  and the radius

and the radius  searched[10].

searched[10]. | Figure 4. Transformation of a point for an unknown radius[10] |
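The two cases can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' code: it assumes a binary edge map stored as a NumPy array and integer radii, and the helper names (`circle_offsets`, `hough_known_radius`, `hough_unknown_radius`) are hypothetical.

```python
import numpy as np

def circle_offsets(radius, n_angles=360):
    """Unique integer (dx, dy) offsets lying on a circle of the given radius."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    pts = np.stack([np.rint(radius * np.cos(theta)),
                    np.rint(radius * np.sin(theta))], axis=1).astype(int)
    return np.unique(pts, axis=0)

def hough_known_radius(edges, radius):
    """Known radius: each edge point votes for a circle of candidate centers (a, b)."""
    h, w = edges.shape
    acc = np.zeros((h, w), dtype=int)
    offsets = circle_offsets(radius)
    ys, xs = np.nonzero(edges)
    for x, y in zip(xs, ys):
        a = x - offsets[:, 0]
        b = y - offsets[:, 1]
        inside = (a >= 0) & (a < w) & (b >= 0) & (b < h)
        acc[b[inside], a[inside]] += 1   # offsets are unique, so no cell is hit twice
    return acc

def hough_unknown_radius(edges, radii):
    """Unknown radius: stack one 2D accumulator per radius and take the 3D maximum."""
    acc3d = np.stack([hough_known_radius(edges, r) for r in radii])
    k, b, a = np.unravel_index(np.argmax(acc3d), acc3d.shape)
    return int(a), int(b), radii[int(k)]
```

Stacking the per-radius accumulators is the discrete counterpart of the cone described above: a slice of the cone at radius r is exactly the circle of candidate centers voted for in the known-radius case. Note that larger radii collect votes from more perimeter pixels, so normalising by circumference may be advisable when radii differ widely.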

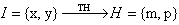

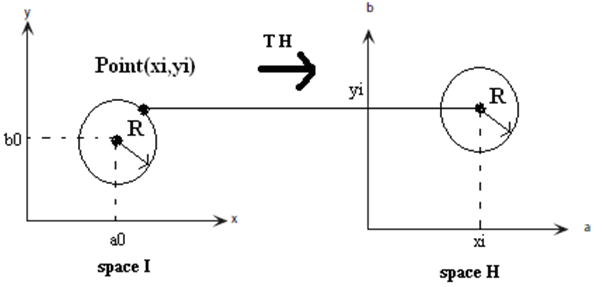

4. Iris Detection System

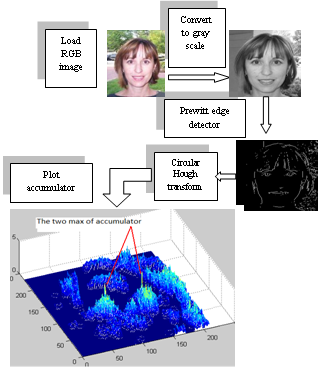

Before the Hough transform is applied to the image, an edge detection technique is used to find the edges in the input image. First, we convert the image to grayscale; then we extract the essential contour information present in the image.

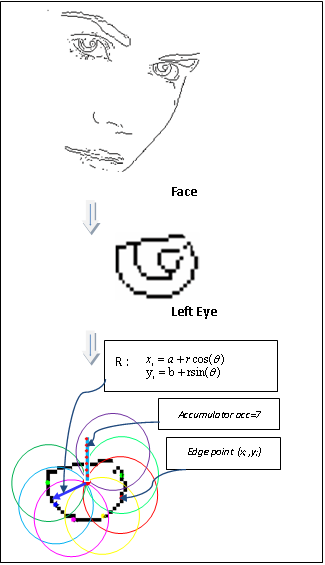

| Figure 5. Different steps of treatment |

, where r is the radius, is created.The second step consists of taking each circle with a radius Ri, we do a scan of image on the contour and placing the circle on each non-zero point of this picture (contour), and increment each position of points (xi, yi) of the accumulator by one for each intersection of all point with any point of contour.In the final step, we locate the position Xi and Yi of N maximum points of accumulator; N represents the number of radius to search.We can summarize this work as follows:-Read RGB Image: Converting the RGB image into grayscale level.- Extract the contour by the Prewitt filter (binary image).- Introduce the variation range of the iris radius (in pixels)- Creation of models and centralize of circles.- Search and locate the position of the maximums in accumulator.- Extract the positions X0i, Y0i for each radius ri.- Display the image and the circles found satisfying equation

, where r is the radius, is created.The second step consists of taking each circle with a radius Ri, we do a scan of image on the contour and placing the circle on each non-zero point of this picture (contour), and increment each position of points (xi, yi) of the accumulator by one for each intersection of all point with any point of contour.In the final step, we locate the position Xi and Yi of N maximum points of accumulator; N represents the number of radius to search.We can summarize this work as follows:-Read RGB Image: Converting the RGB image into grayscale level.- Extract the contour by the Prewitt filter (binary image).- Introduce the variation range of the iris radius (in pixels)- Creation of models and centralize of circles.- Search and locate the position of the maximums in accumulator.- Extract the positions X0i, Y0i for each radius ri.- Display the image and the circles found satisfying equation  The research of parameters that define the circles has been limited with centers

The research of parameters that define the circles has been limited with centers  and radius r. To get a good results, we give each radius corresponds to an interval of iris rays.

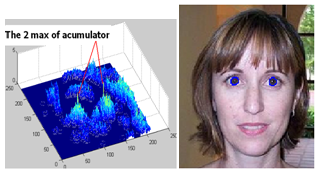

and radius r. To get a good results, we give each radius corresponds to an interval of iris rays. | Figure 6. Accumulator surrounding left iris |
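The steps summarized above can be sketched end to end in Python. This is a hedged illustration, not the MATLAB implementation used in the paper: the luminance weights, the edge threshold and the function names (`prewitt_edges`, `vote`, `detect_circles`) are assumptions, and one maximum is reported per radius as in the final step.

```python
import numpy as np

def prewitt_edges(gray, threshold):
    """Binary contour image from the Prewitt gradient magnitude."""
    g = np.pad(gray.astype(float), 1, mode="edge")
    # Right column minus left column, and bottom row minus top row, of each 3x3 window.
    gx = (g[:-2, 2:] + g[1:-1, 2:] + g[2:, 2:]) - (g[:-2, :-2] + g[1:-1, :-2] + g[2:, :-2])
    gy = (g[2:, :-2] + g[2:, 1:-1] + g[2:, 2:]) - (g[:-2, :-2] + g[:-2, 1:-1] + g[:-2, 2:])
    return np.hypot(gx, gy) > threshold

def circle_offsets(radius, n_angles=360):
    """Circle model: unique integer (dx, dy) offsets on a circle of this radius."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    pts = np.stack([np.rint(radius * np.cos(theta)),
                    np.rint(radius * np.sin(theta))], axis=1).astype(int)
    return np.unique(pts, axis=0)

def vote(edges, radius):
    """Place the circle model on every contour point by shifting the edge map."""
    h, w = edges.shape
    pad = radius
    acc = np.zeros((h + 2 * pad, w + 2 * pad), dtype=int)
    for dx, dy in circle_offsets(radius):
        # An edge pixel at (x, y) votes for the center (x - dx, y - dy).
        acc[pad - dy: pad - dy + h, pad - dx: pad - dx + w] += edges
    return acc[pad: pad + h, pad: pad + w]

def detect_circles(rgb, radii, edge_threshold):
    """Return one (x0, y0, r, votes) tuple per radius in `radii`."""
    # Assumed luminance weights for the RGB-to-grayscale conversion.
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])
    edges = prewitt_edges(gray, edge_threshold)
    results = []
    for r in radii:
        acc = vote(edges, r)
        y0, x0 = np.unravel_index(np.argmax(acc), acc.shape)
        results.append((int(x0), int(y0), r, int(acc[y0, x0])))
    return results
```

A call such as `detect_circles(image, range(6, 12), edge_threshold=400.0)` would scan a radius interval like the one reported in the experiments; the threshold value is purely illustrative and depends on the intensity scale of the image.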

5. Simulation results

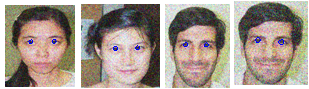

We tested our algorithm on the “Caltech database” available on the Web[9], with 396 individuals and 24 frames per individual for different poses. The images are 24-bit RGB, 576 x 768 pixels, in JPG format. The proposed algorithm is implemented in MATLAB 7.0 on a 2 GHz Intel processor with 712 MB of RAM.

5.1. Results of Iris Detection

The experimental results show that the proposed algorithm gives good results. The algorithm accurately extracted the irises in 379 of the 396 images, a success rate of about 95 % (379/396 ≈ 95.7 %). Fig. 7 shows examples of good iris detection. In our experiments, the radius ranges from 6 to 11 pixels. This interval depends on many parameters, such as the size of the images and the age of the subject.

| Figure 7. Good detection of iris |

| Figure 8. Top left : Accumulator in 3D, top right localization of iris from the two maximums |

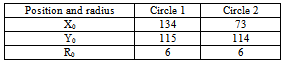


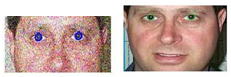

| Figure 9. False iris detection |

5.2. Effect of Noise on the Iris Detection

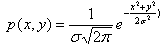

Our algorithm was tested on noisy images by adding Gaussian noise with zero mean (m = 0) and variance $\sigma^2$. The probability density is expressed by the equation:

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x - m)^2}{2\sigma^2}\right) \tag{8}$$
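The noise experiment can be reproduced along the following lines. This is a minimal sketch that assumes an 8-bit input image and that the variance is expressed for intensities scaled to [0, 1]; the function name `add_gaussian_noise` and the `seed` parameter are hypothetical.

```python
import numpy as np

def add_gaussian_noise(image, variance, mean=0.0, seed=None):
    """Add Gaussian noise to an 8-bit image; returns floats clipped to [0, 1]."""
    rng = np.random.default_rng(seed)
    img = image.astype(float) / 255.0              # scale intensities to [0, 1]
    noise = rng.normal(mean, np.sqrt(variance), size=img.shape)
    return np.clip(img + noise, 0.0, 1.0)          # keep a valid intensity range
```

Under this scaling, a variance of 0.1 corresponds to a standard deviation of about 0.32, roughly a third of the full intensity range.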

| Figure 10. Iris detection of noisy images |

| Figure 11. Iris detection for sub images (eyes, face and others) |

5.3. Application of Iris Detection in Different Color Space

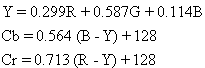

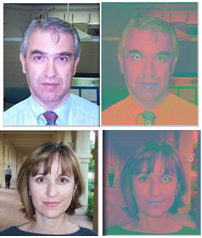

YCbCr Color Space: The YCbCr color space was introduced in image coding for video. For color images, it helps to compensate for errors in the transmission of information. Starting from the original image, we transform from RGB to YCbCr space by applying the following rule[16]:

| (9) |
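As an illustration of such a conversion, the sketch below assumes the full-range ITU-R BT.601 (JPEG/JFIF) coefficients, which may differ from the coefficients of equation (9); for instance, MATLAB's `rgb2ycbcr` uses a studio-range variant. The function name `rgb_to_ycbcr` is hypothetical.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Convert an 8-bit RGB image (H x W x 3) to full-range YCbCr floats."""
    # Assumed BT.601 full-range matrix (JPEG/JFIF convention).
    m = np.array([[0.299, 0.587, 0.114],
                  [-0.168736, -0.331264, 0.5],
                  [0.5, -0.418688, -0.081312]])
    ycc = rgb.astype(float) @ m.T
    ycc[..., 1:] += 128.0          # center the chroma channels Cb and Cr
    return ycc
```

The detection pipeline can then be run on any single channel of the result, for example the luminance `ycc[..., 0]`.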

| Figure 12. Examples of iris detection in YCbCr color |

| Figure 13. Bad iris detection in YCbCr color |

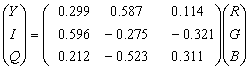

| (10) |
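For the YIQ color space, a comparable sketch is given below. It assumes the commonly quoted NTSC coefficients, rounded to three decimals, which may differ slightly from those of equation (10); the function name `rgb_to_yiq` is hypothetical.

```python
import numpy as np

def rgb_to_yiq(rgb):
    """Convert an 8-bit RGB image (H x W x 3) to YIQ with RGB scaled to [0, 1]."""
    # Assumed NTSC matrix; the I and Q rows sum to zero, so gray pixels have I = Q = 0.
    m = np.array([[0.299, 0.587, 0.114],
                  [0.596, -0.274, -0.322],
                  [0.211, -0.523, 0.312]])
    return (rgb.astype(float) / 255.0) @ m.T
```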

| Figure 14. Examples of iris detection in YIQ color |

| Figure 15. Bad iris detection in YIQ color space |

6. Conclusions

We have presented in this article the circular Hough transform for iris detection. Our method consists of searching for circles in the contour image, so contour detection plays a very important role in the detection of the iris. The algorithm was applied to color images. The results depend on many parameters: the image capture conditions, for example the lighting and the position of the subject (and hence of the iris in the face), are very important factors for good or bad detection. A success rate of about 95 % was obtained. The Hough transform also achieves good results in HSV and YCbCr space. Bad results were obtained for noisy images when the variance of the Gaussian noise exceeds 0.1.

Our method can be tested in several applications such as biometric systems, human-machine interfaces, person identification, etc.
