Andrey L. Gorbunov

Moscow State Technical University of Civil Aviation

Correspondence to: Andrey L. Gorbunov, Moscow State Technical University of Civil Aviation.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Abstract

This paper considers the possibility of creating an aircraft landing system (LS) with an augmented reality (AR) 3D+stereo interface (patent pending). Such an LS can be used as a supplementary navigation resource and has the following advantages over existing solutions: improved spatial navigation, shirt-pocket size, autonomous power supply, minimal training, and low cost. The design of experiments to test the effectiveness of an AR 3D+stereo interface is described.

Keywords:

Instrument Landing, Enhanced Vision System, Augmented Reality

Cite this paper:

Andrey L. Gorbunov, "3D+Stereo Augmented Reality for Landing Systems Feasibility and Experiment Design", Science and Technology, Vol. 2 No. 1, 2012, pp. 21-24. doi: 10.5923/j.scit.20120201.04.

1. Introduction

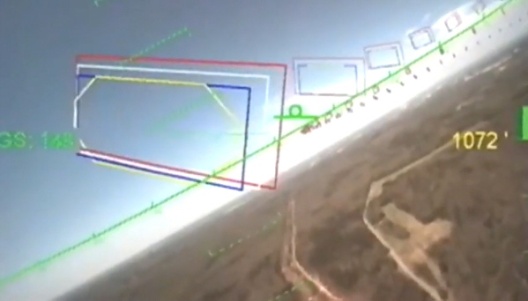

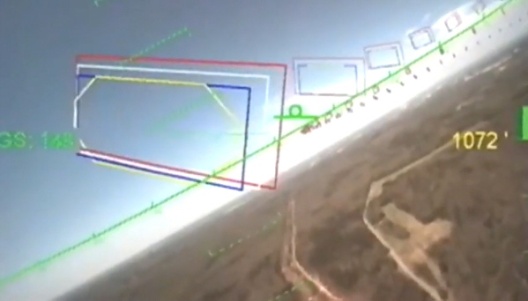

The possibilities provided by AR technology correspond well with the specifics of LS used for landing approaches in limited visibility conditions. This is confirmed by the following examples from 2010:

1. An Enhanced Vision System (EVS, developed by Rockwell Collins)[5] has been certified by the US Federal Aviation Administration for Boeing aircraft. This system includes a head-up display (fig. 1), which is in itself an AR device with 2D virtual objects (speed vector symbol etc.).

| Figure 1. View through the head-up display (AR with 2D virtual objects). Image from http://www.boeing.com |

2. The Rocket Racing League has demonstrated an AR pilot interface with 3D objects, based on the Targo helmet (Elbit Systems). The interface traces the flight trajectory by means of virtual markers (fig. 2) projected on the helmet's visor.

AR interfaces allow pilots to obtain the flight parameters and to orient themselves in space while maintaining a high level of situational awareness. The urgency of such an LS was tragically illustrated by the well-known crash of Polish president Kaczyński's plane in April 2010.

| Figure 2. Virtual flight trajectory in Targo pilot helmet (AR with 3D virtual objects) |

2. Problem

AR in an LS requires a special display device that combines virtual objects with the real world. In the systems mentioned above (as in all others), such displays are cumbersome and very expensive. In addition, these devices offer no path for developing the system in the direction that is now obvious: from 2D and 3D to 3D+stereo.

3. Solution

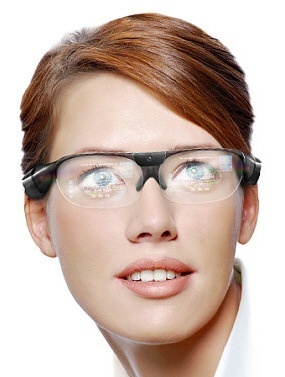

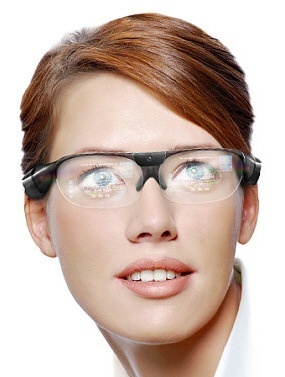

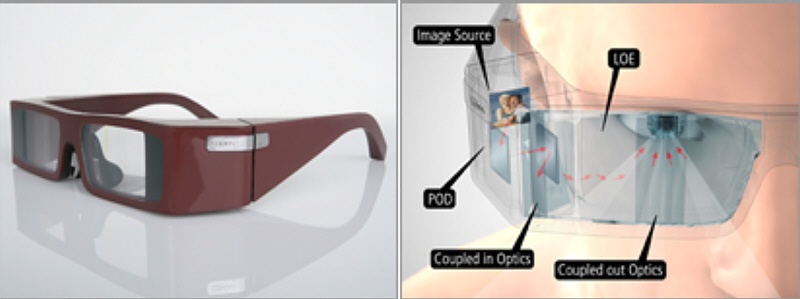

At least two companies, Laster Technologies[3] and Lumus[4], announced last year AR display devices that look like ordinary glasses, with transparent lenses containing special optical elements that show the user a stereo image of virtual objects. Such an approach turns AR stereo displays into small and comparatively cheap glasses (fig. 3 and 4). Both companies promise to make their products commercially available in 2011.

| Figure 3. SmartVision AR glasses developed by Laster Technologies. Image from http://www.laster.fr |

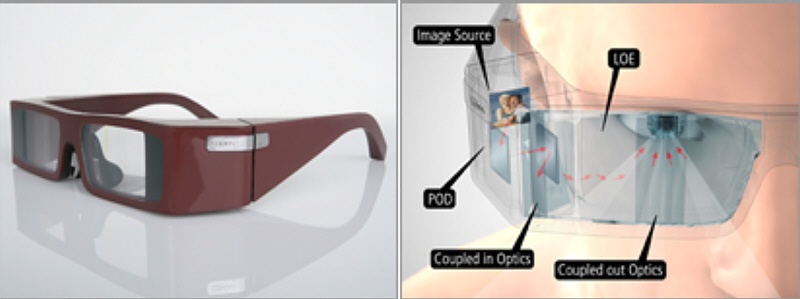

| Figure 4. AR glasses Video Eyeglasses developed by Lumus |

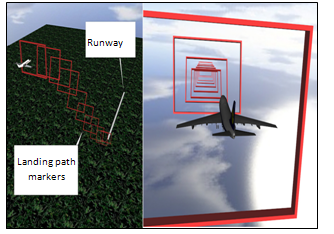

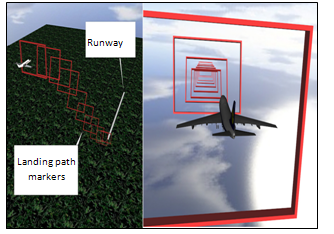

The combination of such AR glasses with a mobile device containing GPS, an accelerometer, a digital compass, a gyroscope and a high-performance processor (e.g. iPhone4) provides everything necessary (6DOF tracking, a processing tool and a stereo AR display) for creating a pocket-size (literally fitting into a shirt pocket), autonomous and low-cost LS which could be used as a back-up or supplementary on-board navigation system.

The need for such a system was demonstrated by the crash of a plane while landing at Moscow Domodedovo airport in December 2010. All of the plane's engines failed; as a result the on-board electrical system also failed, and this in turn caused the failure of the on-board navigation system. Because of very low clouds and poor visibility, the pilots carried out a "blind" landing using only the air traffic controller's instructions, which ended in catastrophe. The value and relevance of such a pocket LS is also obvious for light aircraft.

Besides the above, the suggested LS allows the implementation of a 3D+stereo visual interface, which is the most natural for a human being. This interface not only improves the quality of the pilot's spatial navigation, but also minimizes the training required to use the tool. A pilot will be able to see the descending approach path as a series of markers that can be represented by 3D frames in stereo mode (fig. 5). Flying inside the virtual tunnel marked by the 3D frames increases the probability of an error-free landing in poor or even zero visibility. A tunnel of this kind is already implemented in systems known as synthetic vision systems (SVS), but these systems provide non-stereo 3D virtual reality without visual contact with the real world[6,7]. SmartView SVS developed by Honeywell[2] has an AR display combining infrared camera data with virtual reality, but it also supports only non-stereo 3D and 2D (as does Rockwell Collins's EVS, which can show monochrome 3D terrain models or an infrared camera picture).

The problems of GPS accuracy and accessibility are outside the scope of this paper. Let us point out briefly that the current state of GPS (and differential GPS) and similar systems (GLONASS), and especially the prospect of integrating these systems in the near future, make satellite positioning acceptable for obtaining linear coordinates for an LS of the supplementary type. GPS is used in Honeywell's SmartView for 3D terrain model positioning. By 2009 the US Federal Aviation Administration had registered more GPS landings than landings with "traditional" instrument LS. The iPhone4's built-in gyroscope and accelerometer solve the problem of angular coordinates.
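The series of 3D frames marking the approach path, described above, can be illustrated with a minimal sketch. It works in a hypothetical local runway frame (x along the extended centerline away from the threshold, y lateral, z up). The 3° glide angle, the 15 m threshold crossing height, the 300 m frame spacing and both function names are illustrative assumptions and are not taken from the paper.

```python
import math


def tunnel_frames(count=10, spacing_m=300.0,
                  threshold_height_m=15.0, glide_angle_deg=3.0):
    """Return the centers (x, y, z) of virtual frames along a straight glide path.

    All default values are illustrative assumptions, not values from the paper.
    """
    slope = math.tan(math.radians(glide_angle_deg))
    frames = []
    for k in range(count):
        x = k * spacing_m                      # distance from the threshold
        z = threshold_height_m + x * slope     # height of the ideal path at x
        frames.append((x, 0.0, z))             # y = 0: on the extended centerline
    return frames


def deviation_from_path(x_m, y_m, z_m,
                        threshold_height_m=15.0, glide_angle_deg=3.0):
    """Return (lateral, vertical) deviation of a position from the ideal path."""
    ideal_z = threshold_height_m + x_m * math.tan(math.radians(glide_angle_deg))
    return y_m, z_m - ideal_z


if __name__ == "__main__":
    for frame in tunnel_frames(count=3):
        print(frame)
    print(deviation_from_path(600.0, 4.0, 50.0))
```

In a real system the frame centers would still have to be transformed from this local frame into the display coordinates using the 6DOF tracking data; that step is omitted here.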

4. Experiment Design

Although the high effectiveness of the 3D+stereo interface for LS (in comparison with 2D and 3D) seems intuitively evident, a study is needed to prove its advantages (or their absence). To this end, a series of experiments will be carried out at the Moscow State Technical University of Civil Aviation at the beginning of 2012.

| Figure 5. 3D frames marking the landing path which are seen by a pilot in a stereo mode |

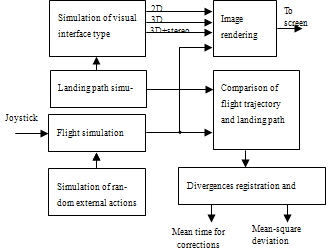

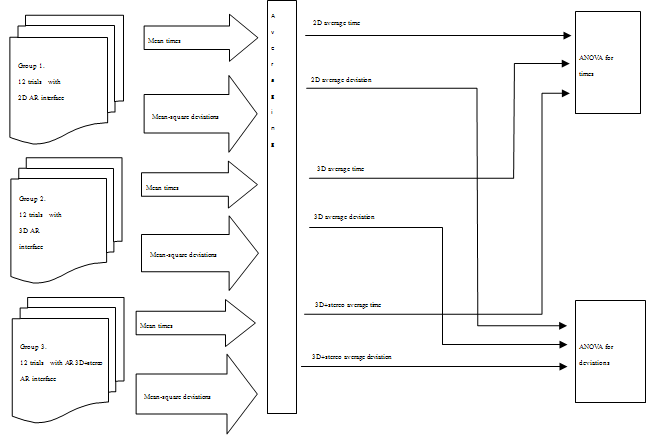

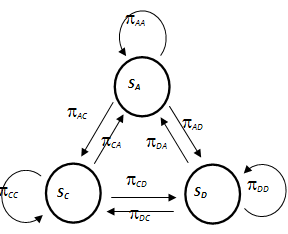

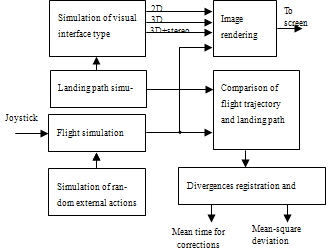

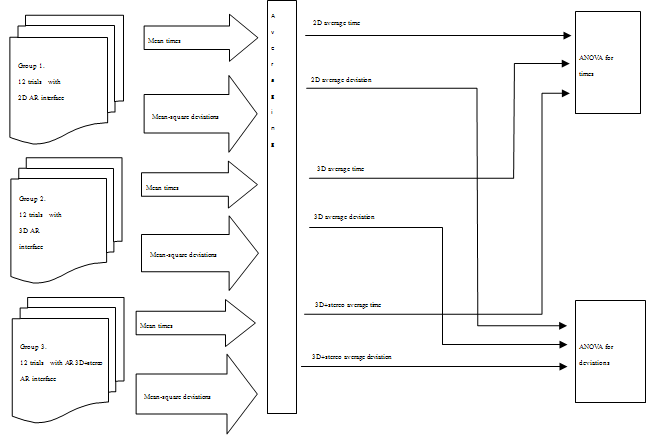

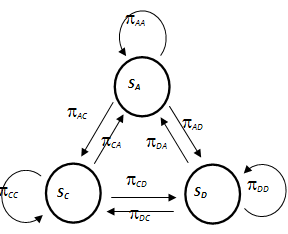

Three groups of subjects will take part in the experiments. Each group consists of 12 students, and the groups are homogeneous by gender, age and education level. Pilots are intentionally excluded from the subject groups, since their professional experience of flying with present instrument technology would be a distorting factor in the comparative experiments.

Three computer flight simulator programs have been developed using the Vizard VR Toolkit [8] to simulate the flight along the same landing path: the flight using an AR LS with a 2D interface (as shown in fig. 1); the flight using an AR LS with a 3D interface (as shown in fig. 2); and the flight using an AR LS with a 3D+stereo interface (as shown in fig. 5). The virtual plane is controlled by means of a joystick. The 2D and 3D simulators work with an ordinary display, while the 3D+stereo simulator works with a stereo display (ViewSonic vx2268vm 3D Ready and NVIDIA 3D Vision Glasses).

The first group of subjects "lands" the virtual plane using the 2D interface, the second group uses the 3D interface, and the third group uses the 3D+stereo interface; thus the independent variable is the type of visual interface. The dependent variables are the mean-square deviation of the virtual plane trajectory from the ideal landing path and the mean time a subject takes to correct deviations from the ideal landing path caused by the modelled external disturbances. The simulator structure is shown in fig. 6, and the scheme for processing the experiment results is shown in fig. 7. The statistical significance of the differences between the experimental results is determined by analysis of variance (ANOVA). All subjects have a short instruction and training session before the trial.

The procedure for processing the trial results is an important part of the experiment design. Mean-square deviations are general, static estimates of the random process of divergence correction.
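The static part of the processing described above can be sketched as follows, assuming that "mean-square deviation" is read as the root-mean-square deviation of one trial and that a one-way ANOVA is applied to the per-subject values of the three groups. The function names and the sample numbers are hypothetical; the sketch uses only the standard library and returns the F statistic with its degrees of freedom, leaving the comparison with a critical value of the F distribution to a statistics table or package.

```python
import math


def rms_deviation(deviations):
    """Root-mean-square deviation of a trajectory from the ideal path."""
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists, one list of per-subject values per interface.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the subjects around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Made-up RMS deviations for three small groups (2D, 3D, 3D+stereo).
    sample = [[5.1, 4.8, 5.5], [4.2, 4.0, 4.6], [3.1, 3.5, 3.0]]
    print(one_way_anova_f(sample))
```

With three groups of 12 subjects, as planned in the experiment, the degrees of freedom would be 2 and 33.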
In order to have the estimate of this process dynamics, it is presented as a Markov chain [1] which includes 3 states (fig. 8):SA – the state when the divergence between the virtual plane trajectory and the ideal landing path ascents;SD – the state when the divergence between the virtual plane trajectory and the ideal landing path descents;SC – the state when the divergence between the virtual plane trajectory and the ideal landing path does not exceeds some small threshold T.Let the divergence at the moment i be Ri. One can describe changing of the states of the correction process by means of a time series consisting of Ri. Then it is possible to interpret the suggested chain as an approximation of a random process with unlimited countable number of states by the first differences  | Figure 6. Structure of the flight simulator used in the experiments |

| Figure 7. Experiment results processing scheme |

| Figure 8. Markov chain for the correction process |

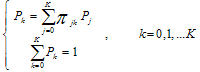

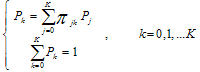

process, which has only three states: SA (Ri-Ri-1 > T), SD (Ri-Ri-1 < -T), and SC (-T < Ri-Ri-1 < T). Markov chain is completely defined if the start distribution {Pi(0)} and the matrix {πij} are known where {Pi(0)} is the probability of the process being in the state with number i at the moment t=0; πij is transition probability, i.e. the probability of an event that the process, being at the moment t=n in the state i, will be in the state j in the moment t=n+1.For Markov chain there are final probabilities {Pk} of being in the states which do not depend on the start distribution {Pi(0)}: | (1) |
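For the three states of the correction process, the system for the final probabilities can be written out explicitly. The form below assumes the standard stationarity condition for a Markov chain with transition probabilities $\pi_{ij}$ (from state i to state j) together with the normalization of the probabilities; the indices A, D and C stand for the states SA, SD and SC.

$$
\begin{aligned}
P_A &= P_A \pi_{AA} + P_D \pi_{DA} + P_C \pi_{CA},\\
P_D &= P_A \pi_{AD} + P_D \pi_{DD} + P_C \pi_{CD},\\
P_C &= P_A \pi_{AC} + P_D \pi_{DC} + P_C \pi_{CC},\\
1 &= P_A + P_D + P_C.
\end{aligned}
$$

Only two of the first three equations are independent, so in practice one of them is replaced by the normalization condition.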

A random process is called a Markov process if its future evolution depends only on the current state and does not depend on the trajectory that has led the process to the current state. The behavior of a subject while correcting the flight trajectory does not depend on his/her previous correction actions, so it is reasonable to consider the correction process as a Markov one.

During the simulation, the numbers of transitions between the states SA, SD and SC are registered as the events of a change in the sign of the difference $R_i - R_{i-1}$. After the statistical estimates for the elements of the matrix $\{\pi_{ij}\}$ have been generated, the equation system (1) is solved to find the final probabilities $P_A$, $P_D$ and $P_C$.

These probabilities are informative parameters of the dynamics of the correction process. The relation between $P_A$, $P_D$ and $P_C$, in conjunction with the mean-square deviation, provides a rich spectrum of useful interpretations of the experimental results. For example, large shares of $P_A$ and $P_D$, even with a small mean-square deviation, indicate impulsive control actions, which in turn indicate that the visual interface is uncomfortable for the subject.

Even more possibilities for analysis may be obtained if the divergence correction is considered as a semi-Markov process with an embedded Markov chain. A semi-Markov process differs from a Markov process in that its future evolution depends not only on the current state, but also on the time spent in this state. These times affect the final probabilities, and this may improve "the analytical resolution".
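The processing chain described above (classifying the first differences, estimating the transition matrix, finding the final probabilities) can be sketched as follows. Several choices here are assumptions made for illustration: every consecutive pair of states is counted as a transition, including self-transitions; a state that is never left gets a uniform row; and the final probabilities are found by repeatedly applying the transition matrix, which converges only if the estimated chain is ergodic. All function names are hypothetical.

```python
STATES = ("A", "D", "C")  # SA: divergence grows, SD: shrinks, SC: roughly constant


def classify(divergences, threshold):
    """Map a series of divergences R_i to states using first differences."""
    states = []
    for prev, cur in zip(divergences, divergences[1:]):
        diff = cur - prev
        if diff > threshold:
            states.append("A")
        elif diff < -threshold:
            states.append("D")
        else:
            states.append("C")
    return states


def transition_matrix(states):
    """Estimate transition probabilities from counts of consecutive states."""
    counts = {s: {t: 0 for t in STATES} for s in STATES}
    for s, t in zip(states, states[1:]):
        counts[s][t] += 1
    matrix = {}
    for s in STATES:
        total = sum(counts[s].values())
        # Assumption: a state with no observed exits gets a uniform row.
        matrix[s] = {t: (counts[s][t] / total if total else 1.0 / len(STATES))
                     for t in STATES}
    return matrix


def final_probabilities(matrix, iterations=1000):
    """Approximate the final probabilities by iterating P <- P * matrix."""
    p = {s: 1.0 / len(STATES) for s in STATES}
    for _ in range(iterations):
        p = {t: sum(p[s] * matrix[s][t] for s in STATES) for t in STATES}
    return p


if __name__ == "__main__":
    # Made-up divergence series (metres) and threshold.
    series = [0.0, 1.2, 2.5, 2.4, 1.0, 0.2, 0.25, 1.5, 0.3, 0.3, 0.9]
    chain = classify(series, threshold=0.2)
    print(chain)
    print(final_probabilities(transition_matrix(chain)))
```

A direct solution of the linear system (1) would give the same result for an ergodic chain; the iterative form is used here only to keep the sketch free of external dependencies.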

5. Conclusions

If the effectiveness of the 3D+stereo interface is confirmed, this opens the prospect of an AR LS with the following advantages:

● Improved spatial navigation.

● Pocket size.

● Autonomous power supply.

● Minimal training.

● Low cost.

It could be a market breakthrough in the field of industrial AR applications. So far, AR technology products have been used extensively in the entertainment sector and for simple navigation using smartphone apps. A pocket-size AR LS with a 3D+stereo interface will inevitably be popular among small/light aircraft pilots, whose number worldwide makes it reasonable to speak of a considerable market for fully utilized AR solutions.

References

| [1] | Bolch G., Greiner S., de Meer H., Trivedi K. Queueing Networks and Markov Chains. NY: John Wiley & Sons, 1998 |

| [2] | Honeywell. SmartView™ Synthetic Vision System: http://www.honeywell.com (last visit 4/15/2011) |

| [3] | Laster Technologies. SmartVision: http://www.laster.fr (last visit 4/15/2011) |

| [4] | Lumus. Video Eyeglasses: http://www.lumus-optical.com (last visit 4/15/2011) |

| [5] | Rockwell Collins. Head-up Guidance System: http://rockwellcollins.com (last visit 4/15/2011) |

| [6] | Sachs, G., Schuck, F. and Holzapfel, F. Low-Cost On-Board Guidance Aid for Landing on Small Airports in Low Visibility and Adverse Weather. Proceedings of the AIAA Guidance, Navigation and Control Conference, August 2008, Honolulu, Hawaii, USA |

| [7] | Sachs, G., Holzapfel, F. Predictor-Tunnel Display and Direct Force Control for Improving Flight Path Control. Proceedings of the AIAA Guidance, Navigation and Control Conference, August 2008, Honolulu, Hawaii, USA |

| [8] | WorldViz. Vizard VR Toolkit: http://www.worldviz.com (last visit 4/15/2011) |
