Journal of Laboratory Chemical Education

p-ISSN: 2331-7450 e-ISSN: 2331-7469

2017; 5(5): 95-107

doi:10.5923/j.jlce.20170505.01

The Effectiveness of General Chemistry Lab Experiments on Student Exam Performance

Junyang Xian, Daniel B. King

Department of Chemistry, Drexel University, Philadelphia, PA, USA

Correspondence to: Daniel B. King, Department of Chemistry, Drexel University, Philadelphia, PA, USA.

Copyright © 2017 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Verification labs are widely used, despite the belief by many that other types of labs are more effective. For those who use verification labs it is important to understand how these experiments contribute to student learning. The main purpose of this study is to determine if there is evidence that completing a verification experiment leads to improved performance on a corresponding exam question related to the lab content. Student performance on exam questions that related to lab content was compared to student performance on exam questions that were not related to lab content in two general chemistry courses. Student performance on quantitative exam questions that related to lab content was also compared to student performance on conceptual exam questions that related to lab content for a few experiments. Overall results were mixed. Students performed better on lab-related questions for some topics, such as kinetics and electrochemistry. The graphing component of the kinetics experiment seemed to benefit science majors and female students more than engineering majors and male students, respectively. In contrast, similar performance was observed on lab-related quantitative and lab-related conceptual questions related to pH titration and electrochemistry.

Keywords: General chemistry, Verification laboratory, Exam performance

Cite this paper: Junyang Xian, Daniel B. King, The Effectiveness of General Chemistry Lab Experiments on Student Exam Performance, Journal of Laboratory Chemical Education, Vol. 5 No. 5, 2017, pp. 95-107. doi: 10.5923/j.jlce.20170505.01.

1. Introduction

Laboratories have been a standard educational technique in science classes at all levels for more than a hundred years [1, 2]. In a sample of 40 public universities in the United States, 58% offer general chemistry laboratories as a required portion of the students’ curriculum [3]. Science laboratory activities provide students a chance to observe and understand the natural world through their interactions with the environment [2]. Students gain more benefit in practical manipulations from the lab than they gain from other types of instruction. In addition to practical skills, some studies concluded that laboratory experiments could improve learners’ attitudes toward science [4, 5]. Labs have been reported to help students develop their creative thinking and problem-solving skills [6]. In addition, labs can help students develop scientific thinking [7, 8], scientific abilities [9], intellectual abilities [10] and conceptual understanding [11, 12]. Students’ interest in specific activities (such as experimenting, dissecting and working with microscopes) is significantly higher if they have lab experience [13]. However, even though laboratories have been viewed as a fundamental part of a science course and they are assumed to be important and necessary, it is sometimes hard to justify the inclusion of lab courses due to the high cost in terms of time, finances and personnel required [14]. Therefore, more study is needed to determine whether or not the high laboratory costs are a necessary component of science education.

The majority of colleges and universities that require lab (70%) have their students complete verification chemistry experiments [15], in which students attempt to reproduce a result or measurement they have already been provided. Verification labs are beneficial when there is a need to minimize resources, including time, space, equipment and personnel [16]. 
Verification labs provide well stated procedures, which the students can easily follow. They are well designed for a large number of students to complete the experiment simultaneously. It has been reported that verification labs can improve students’ lab experiment skills and teach them factual information [1]. Verification experiments are often preferred when lab time is limited, due to the fact that other lab types, e.g., inquiry-based labs, can require that students spend up to four times longer in the lab. The additional lab time associated with the inquiry-based experiments didn’t produce increased content understanding, as there was no significant difference in student exam scores between students enrolled in verification labs and students enrolled in inquiry-based labs [9]. In a review of a group of studies conducted over a 32-year period, it has been found that the test scores on post-tests of students who enrolled in verification labs are about 10 points higher than those of their pre-tests [17]. However, despite their benefits, verification experiments are heavily criticized by some faculty [18]. The first reason is that the outcome of a verification experiment is predetermined, so students are trying to reproduce the expected result. This means that students who complete verification labs spend more time determining if their outcome agrees with the predicted result than they spend thinking about or interpreting the data [19-21]. The second reason is that when the students are doing verification experiments, they often focus more on mathematical skills required to complete the corresponding calculations than on conceptual understanding of the associated chemistry [9]. Students usually don’t understand the purpose of the experiments, because their sole focus is on the procedure and there is no time or effort spent thinking about the experiment. 
Researchers also pointed out that students failed to engage in discussion and analysis of the scientific phenomena in lab courses that used verification experiments [1, 2].

Understanding the benefits that verification labs might contribute to general chemistry teaching is important for those who use these labs. At our institution, all general chemistry labs use verification experiments, and students are required to write individual lab reports in which they summarize their results. The goals of the lab reports are to increase the time that students spend thinking about the lab content, to improve their conceptual understanding of the lab content, to improve their ability to do the experimental calculations, and to improve their ability to graph data and write scientifically. The main purpose of this study is to investigate the relationship between the content in the general chemistry verification experiments and student performance on corresponding content questions on general chemistry exams (these exams are primarily based on the lectures; there are no exams or quizzes given in lab). Student performance on exam questions that related to lab content was compared to student performance on exam questions that were not related to lab content. Student answers on quantitative questions and conceptual questions were analyzed. Student exam performance was also compared as a function of major and gender. In addition, the lab-related exam performance of students with higher average exam scores was compared to the exam performance of students with lower average exam scores.

2. Methodology

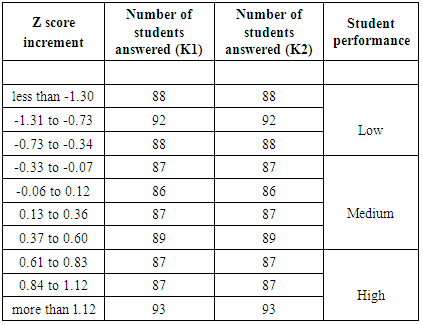

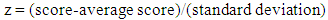

Student performance was evaluated during two terms of a general chemistry sequence: General Chemistry I (CHEM 101) and General Chemistry II (CHEM 102). During each 10-week term students complete four verification labs, three in-term exams, and one (cumulative) final exam, along with other course components. The lab is part of the general chemistry course, so all students are required to take the labs and lecture together. Individual lab reports, which include an introduction, a discussion and data analysis section, and conclusions, are submitted one week after the experiment is completed. Students are provided access to the rubric used to grade the lab reports before they write the report. This study used data collected during academic years 2012-2013 and 2013-2014. In the first year, 1177 students were enrolled in CHEM 101 and 1005 students were enrolled in CHEM 102, 915 of whom were enrolled in both 101 and 102. In the second year, 985 students were enrolled in CHEM 101 and 882 students were enrolled in CHEM 102, with 760 enrolled in both courses. Most of these students are first-year science and engineering majors. Students in the honors section of the course were not included in this study, as their performance is statistically different from that of the rest of the sections.

Student exam performance on individual lab-related questions was analyzed. Student in-class performance and student exam performance on the same lab topic were also compared. In-class performance was determined for a subset of the students who were enrolled in a lecture section where personal response devices (i.e., clickers) were used. To help determine the effectiveness of lab for students as a function of average chemistry ability, students were sorted into different groups by their average exam scores. Each group is assigned to represent a corresponding “ability level”: high-performing, medium-performing, and low-performing. 
The “difficulty level” of a question is determined from the percent correct of all the test takers on that question [22], where a lower percent correct corresponds to higher difficulty. Item characteristic curves have been used as a graphical method to show the range of student performance (percent correct) at different “ability levels”. The same technique was used to analyze student performance on American Chemical Society (ACS) exams [22]. In this study, students were grouped by their average exam scores over the whole term, which was considered as their “ability level” or “student proficiency”. All students who took each exam were evenly divided into ten groups. Each group contains 70-90 students, depending on the number of students who took the exam. Students’ standard z scores are calculated with equation (1).

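$$z = \frac{x - \mu}{\sigma} \qquad (1)$$

where $x$ is a student's score and $\mu$ and $\sigma$ are the mean and standard deviation of the scores.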
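The grouping procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names are hypothetical, the population standard deviation is assumed for the z score (the text does not say which form was used), and the example scores are invented for demonstration only.

```python
from statistics import mean, pstdev


def z_scores(scores):
    """Standard z score per student: (score - mean) / standard deviation."""
    mu = mean(scores)
    sigma = pstdev(scores)  # assumption: population SD; the paper does not specify
    if sigma == 0:
        raise ValueError("all scores are identical; z scores are undefined")
    return [(s - mu) / sigma for s in scores]


def ability_groups(avg_scores, n_groups=10):
    """Rank students by average exam score and split them into n_groups
    near-equal groups. Returns lists of student indices, lowest group first."""
    if len(avg_scores) < n_groups:
        raise ValueError("need at least one student per group")
    order = sorted(range(len(avg_scores)), key=lambda i: avg_scores[i])
    size, extra = divmod(len(order), n_groups)
    groups, start = [], 0
    for g in range(n_groups):
        end = start + size + (1 if g < extra else 0)
        groups.append(order[start:end])
        start = end
    return groups


def difficulty(correct):
    """Percent correct over all test takers; a lower value means a harder item."""
    return 100.0 * sum(correct) / len(correct)


def item_characteristic_curve(avg_scores, correct, n_groups=10):
    """Percent correct on one question within each ability group."""
    return [
        100.0 * sum(correct[i] for i in grp) / len(grp)
        for grp in ability_groups(avg_scores, n_groups)
    ]


# Invented example: six students, three ability groups.
example_scores = [40, 50, 60, 70, 80, 90]
example_correct = [0, 0, 1, 0, 1, 1]  # 1 = answered the question correctly
print(item_characteristic_curve(example_scores, example_correct, n_groups=3))
# -> [0.0, 50.0, 100.0]
```

Plotting the returned list against group number gives an item characteristic curve of the kind used to compare lab-related and non-lab-related questions.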

3. Results and Discussion

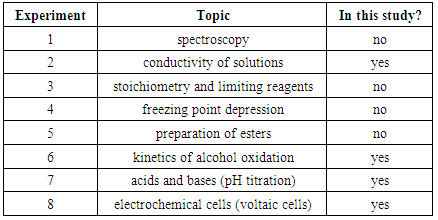

Student performance on exam questions related to several different lab topics has been analyzed. The analysis includes lab-related and non-lab-related questions; lab-related quantitative and lab-related conceptual questions; and the impact of student major and gender on their performance on lab-related and non-lab-related questions. Table 2 shows a list of topics that were covered in CHEM 101 and 102 labs. Some of these topics were not included in this study because there were no questions about these topics on the general chemistry exams or because the exam questions didn’t match the criteria of this study (e.g., exams only included questions that were not lab related).

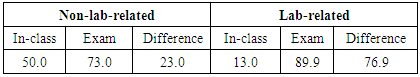

3.1. Lab-related and Non-lab Related Questions

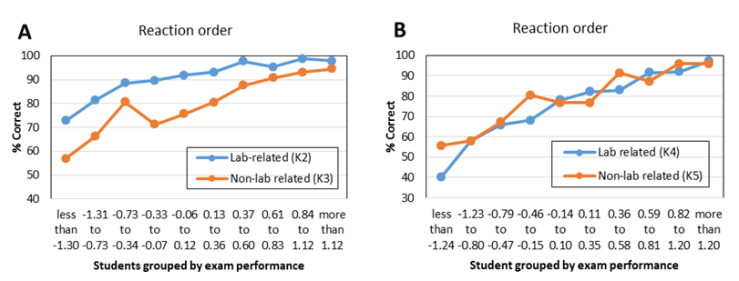

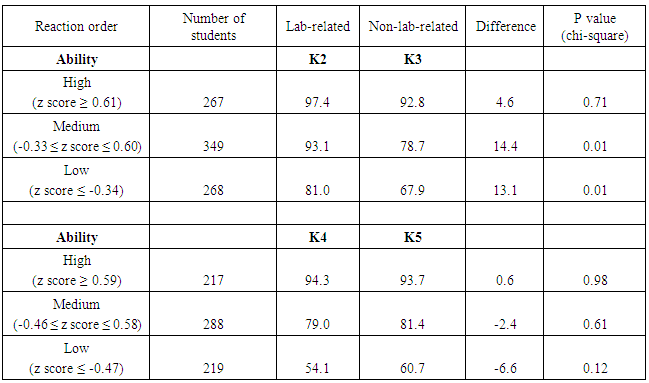

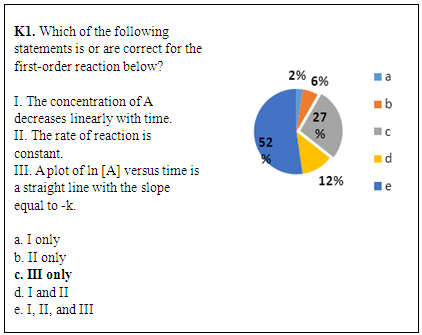

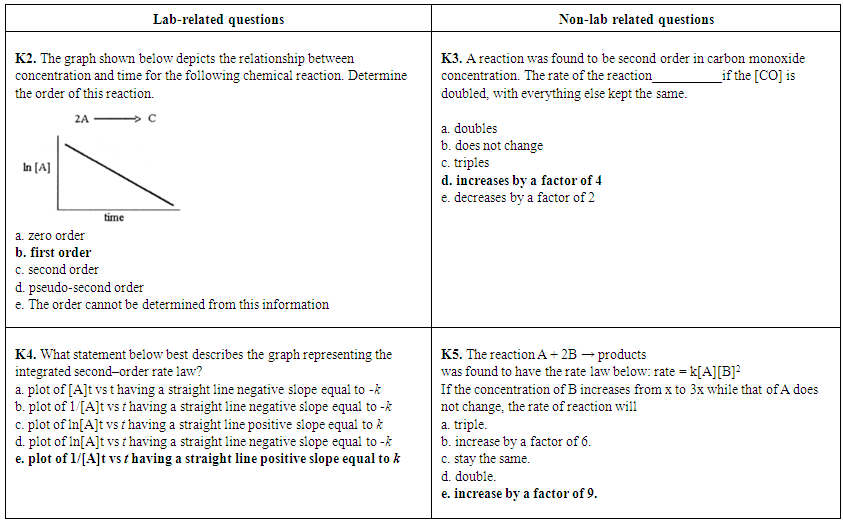

In the kinetics experiment (CHEM 102), students recorded times and calculated the rate of a pseudo-first-order reaction. The data were recorded by computer using the Vernier LoggerPro System (Beaverton, OR). In the lab report, each student plotted three kinetics curves (zero order, first order and second order) and chose the graph that had the best linear relationship to determine the reaction order [23, 24]. Figure 1 shows a lab-related question (K1) from the CHEM 102 final exam (2014) and the corresponding distribution of students’ answers. Statements I and III in the question refer to kinetics curves of zero and first order, respectively, which were directly related to the lab content. Many students could identify that statement III was correct (79% chose c or e), but many of those students failed to identify that statements I and II were incorrect (52% chose e). The students who chose answer e failed to show complete conceptual understanding of first order reactions; they thought the concentration of the reactant should decrease linearly with time and the rate of reaction is constant. This result indicates that this experiment might not help those students improve their conceptual understanding of first order reactions. This may be because students cannot connect the graphs they made in the kinetics lab report to the fundamental concepts associated with this experiment.
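The graphical test that students apply in the lab report can be sketched as follows: the integrated rate laws predict that [A], ln[A], and 1/[A] are linear in time for zero-, first-, and second-order reactions, respectively, so the transform with the highest coefficient of determination indicates the order. This is an illustrative sketch only; the function names are hypothetical, the data are synthetic (generated from an assumed rate constant), and it is not the procedure from the lab manual.

```python
import math


def r_squared(xs, ys):
    """Coefficient of determination for a least-squares line through (xs, ys).
    Raises ZeroDivisionError if xs or ys are constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy * sxy / (sxx * syy)


def best_reaction_order(times, conc):
    """Return (order, fits), where fits maps each candidate order to the r^2
    of its linearized plot and order is the candidate with the highest r^2."""
    transforms = {
        0: list(conc),                    # [A] vs t is linear for zero order
        1: [math.log(c) for c in conc],   # ln[A] vs t is linear for first order
        2: [1.0 / c for c in conc],       # 1/[A] vs t is linear for second order
    }
    fits = {order: r_squared(times, ys) for order, ys in transforms.items()}
    return max(fits, key=fits.get), fits


# Synthetic first-order decay: [A] = 0.5 * exp(-0.2 t), values assumed for illustration.
example_times = list(range(10))
example_conc = [0.5 * math.exp(-0.2 * t) for t in example_times]
order, fits = best_reaction_order(example_times, example_conc)
print(order)  # -> 1
```

For a first-order reaction only the ln[A] plot is a straight line; the [A] plot curves, which is the point that students choosing answer e appear to have missed.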

Figure 1. Student performance on a lab-related question about reaction orders from CHEM 102 final exam (2014, n=754). The correct answer is indicated with bold font.

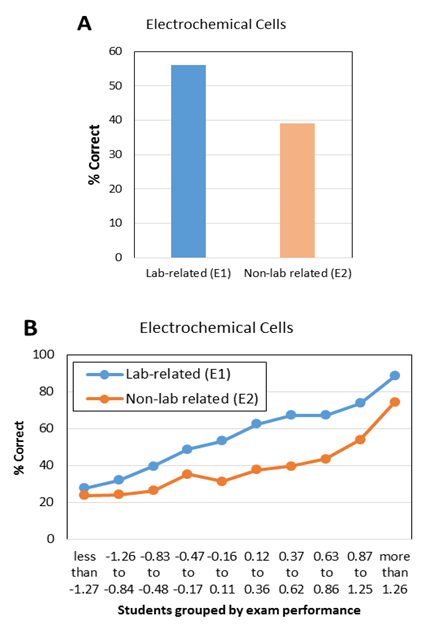

Figure 2. Lab-related questions and non-lab-related questions about reaction orders from CHEM 102 second in-term exams of 2013 (K2, K3) and 2014 (K4, K5). Correct answers are highlighted in bold font.

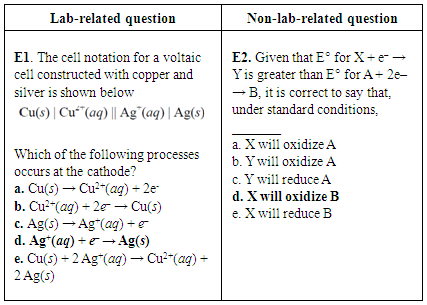

Figure 4. Lab-related question and non-lab-related question about electrochemical cells from CHEM 102 final exam of 2014. Correct answers are highlighted in bold font.

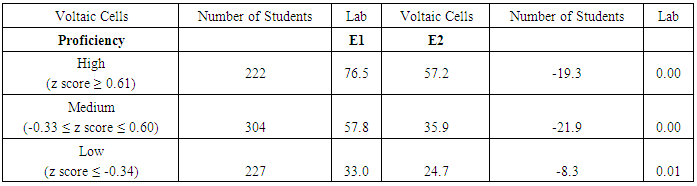

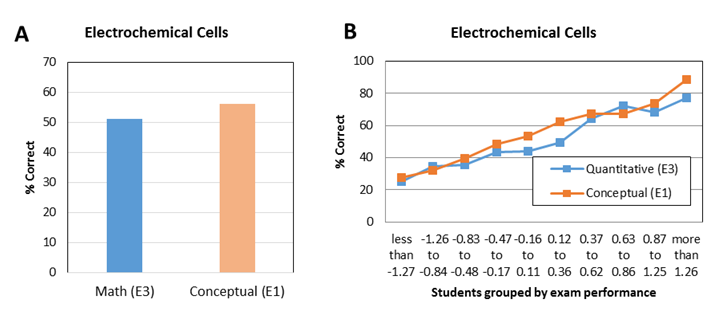

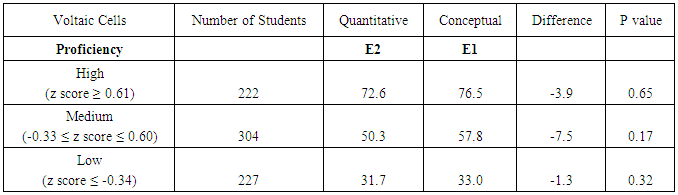

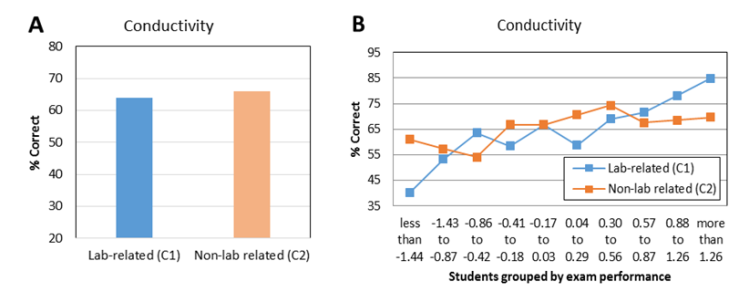

Figure 5. Average student performance (A) and item characteristic curve (B) on lab-related and non-lab-related exam questions about electrochemical cells from CHEM 102 final exam in 2014.

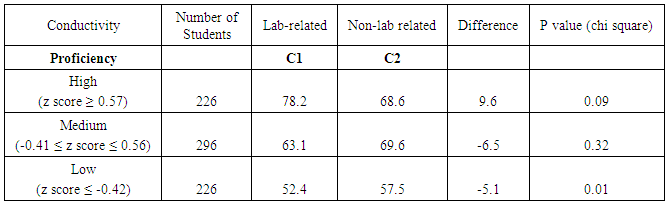

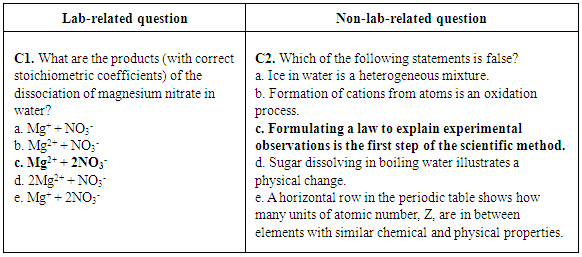

Figure 6. Lab-related question and non-lab-related question about solutions from CHEM 101 first in-term exam of 2013. Correct answers are highlighted in bold font.

Figure 7. Average student performance (A) and item characteristic curves (B) on lab-related and non-lab-related exam questions about solutions from CHEM 101 first in-term exam in 2013.

3.2. Lab-related Quantitative and Lab-related Conceptual Questions

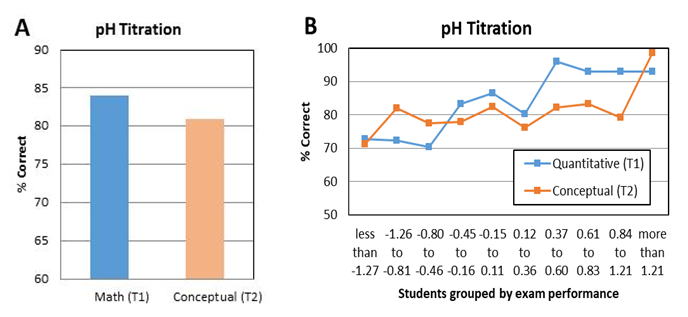

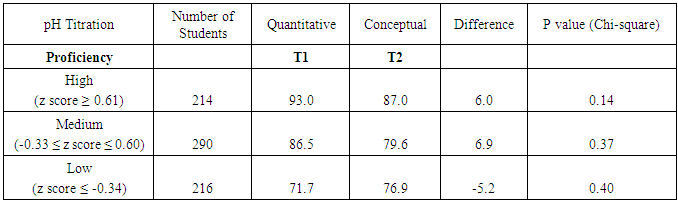

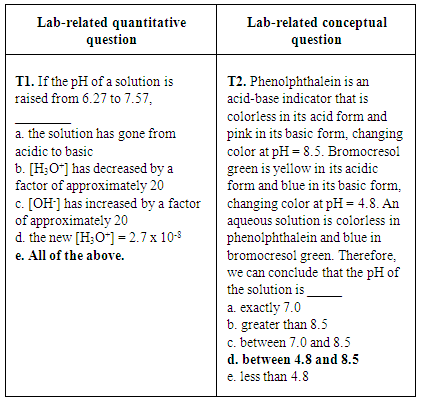

To gain more information about the effectiveness of verification experiments, student performance on quantitative and conceptual questions related to several experiments was investigated. One example of paired lab-related quantitative and lab-related conceptual questions relates to a pH titration experiment (CHEM 102). In this experiment, students performed a titration of a vinegar solution with NaOH standard solution and observed the color change of an indicator at the endpoint. Students used the equivalence point volume to calculate the concentration of acetic acid in vinegar. In the lab report, students used the pH value of the half equivalence point to calculate the pKa value. The conversion between pH values and [H+] was provided in the lab manual [23, 24]. Figure 8 shows two pH titration questions (T1 and T2) from the third in-term exam of CHEM 102 in 2014. To answer T1, students had to convert pH values to [H3O+] and [OH-]. In addition, students had to know the pH ranges that correspond to acidic and basic solutions, which was also related to the lab content. To answer T2, students were asked to identify the pH range of a solution using the behavior of two indicators, which also directly relates to the lab content.
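The calculations involved in the titration experiment and in question T1 can be illustrated with a short sketch. The function names are hypothetical, the ion product of water is taken as 1.0e-14 (its value at 25 °C, an assumption about the conditions), and the 1:1 stoichiometry is that of acetic acid with NaOH. The numbers in the usage lines are invented for illustration and are not data from the study.

```python
KW = 1.0e-14  # ion product of water at 25 °C (assumed temperature)


def hydronium_from_ph(ph):
    """[H3O+] in mol/L from pH, using pH = -log10[H3O+]."""
    return 10.0 ** (-ph)


def hydroxide_from_ph(ph, kw=KW):
    """[OH-] in mol/L from pH, using [H3O+][OH-] = Kw."""
    return kw / hydronium_from_ph(ph)


def classify(ph):
    """Label a solution as acidic, neutral, or basic at 25 °C."""
    if ph < 7.0:
        return "acidic"
    if ph > 7.0:
        return "basic"
    return "neutral"


def acid_concentration(base_molarity, v_equiv_ml, sample_ml):
    """Molarity of a monoprotic acid from the equivalence-point volume of base
    (1:1 stoichiometry, as for acetic acid titrated with NaOH)."""
    return base_molarity * v_equiv_ml / sample_ml


def pka_from_half_equivalence(ph_half):
    """At the half-equivalence point [HA] = [A-], so the
    Henderson-Hasselbalch equation reduces to pKa = pH."""
    return ph_half


# Invented numbers for illustration only.
print(classify(3.0))                          # -> acidic
print(acid_concentration(0.100, 25.0, 10.0))  # approximately 0.25 mol/L
```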

Figure 8. A lab-related quantitative question and a lab-related conceptual question about pH values from CHEM 102 third in-term exam of 2014. Correct answers are highlighted in bold font.

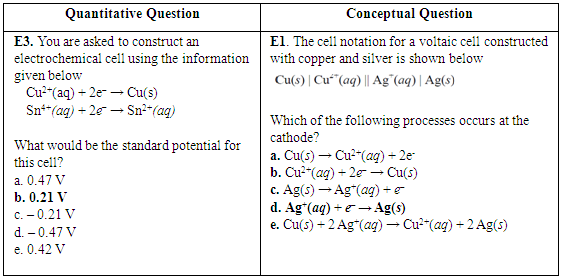

Figure 10. Lab-related quantitative question and lab-related conceptual question about electrochemical cells from CHEM 102 final exam in 2014. Correct answers are highlighted in bold font.

3.3. The Impact of Major and Gender

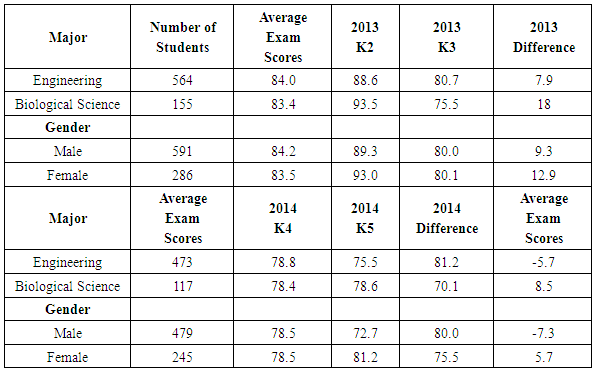

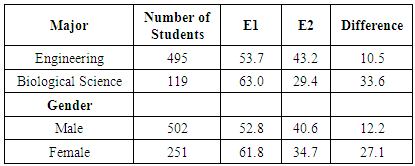

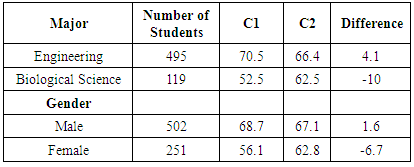

To further investigate potential benefits of verification experiments, results of the lab-related and non-lab-related questions were also analyzed as a function of student major and gender. Since the student population in CHEM 101 and CHEM 102 is primarily composed of engineering majors (about two-thirds of the students) and biology majors (about one-sixth of the students), comparisons by major were made using only these two groups of students. Comparisons by gender, however, used all students enrolled in the courses. The first results presented relate to the kinetics experiment. The average performance of biological science students on the lab-related kinetics questions (K2, 93.5%, and K4, 78.6%) was higher than the performance of engineering students on the same questions (88.6% and 75.5%, respectively, Table 9). The average performance of female students on the lab-related kinetics questions (K2, 93.0%, and K4, 81.2%) was higher than that of male students on the same questions (89.3% and 72.7%, respectively). These differences are not due to an inherent difference in performance between these groups, as demonstrated by the fact that the average exam scores are the same for each group in a given year (Table 9). Since engineering majors are predominantly male and biology majors are more evenly mixed with respect to gender, it is not surprising that similar results (i.e., lower scores) are observed for both engineering majors and male students.

When comparing student performance on lab-related and non-lab-related questions, again, engineering majors and male students performed in a similar way. Engineering students performed 7.9% higher on question K2 (lab-related) than K3 (non-lab-related), whereas biological science majors showed a bigger difference, scoring 18% higher on question K2 than K3, with all groups scoring higher on the lab-related questions during 2013. In 2014, however, some of the groups scored higher on the non-lab-related question. Engineering students performed 5.7% lower on question K4 (lab-related) than K5 (non-lab-related), while biological science majors performed 8.5% higher on question K4 than K5. As was the case in 2013, engineering majors and male students showed similar results relative to biology majors and female students, respectively. It is not known why the results differed between the two years in the response to lab-related and non-lab-related questions.
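Differences between groups such as these are commonly checked with a chi-square test of independence, which the study reports using for the 2013 kinetics questions. The sketch below shows one way to run such a test on a 2x2 table of correct and incorrect counts. It is an assumption-laden illustration: the function name is hypothetical, no continuity correction is applied, and the counts are invented because group sizes for individual questions are not given in the text.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table
    [[a, b], [c, d]] (rows = groups, columns = correct / incorrect).
    Returns (statistic, p_value) for 1 degree of freedom, without
    Yates' continuity correction."""
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Hypothetical counts: group A 90 of 100 correct, group B 75 of 100 correct.
stat, p = chi_square_2x2([[90, 10], [75, 25]])
print(round(stat, 3), p < 0.05)  # -> 7.792 True
```

A statistic above 3.841 corresponds to p < 0.05 at one degree of freedom; with small expected counts, an exact test would be a safer choice.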

4. Summary and Conclusions

Evidence from this study suggests that students tend to perform better on lab-related exam questions associated with some verification experiments, such as kinetics and electrochemical cells. Results for the kinetics experiment were mixed, however. While students appeared to benefit from the practice gained generating graphs, they weren’t always able to translate that learning to different questions. In 2013, an item characteristic curve and chi-square tests showed that low-performing and medium-performing students scored higher on a lab-related kinetics question than a corresponding non-lab-related kinetics question. However, in 2014, there was no difference in student scores at any performance level. Further investigations are needed to determine why the experiments help some students answer some questions better than others. While students at all levels performed better on an electrochemical cell lab-related question than on an electrochemical cell question that wasn’t related to the experiment, results are only available for one year. Therefore, it is unknown if this result is reproducible. It doesn’t appear that the conductivity experiment helped most students to improve their performance on corresponding exam questions, as the results on lab-related and non-lab-related questions were very similar.

When lab-related quantitative questions were compared to lab-related conceptual questions, the results also were variable. For the pH titration experiment, students scored slightly higher on the quantitative question. For the electrochemical cells experiment, students scored slightly higher on the conceptual question. It is likely that the effect of verification experiments is content-specific. Different experiments will provide benefits that correspond to the particular design of that experiment and the skills and/or techniques that are reinforced in that experiment.

Student performance on lab-related and non-lab-related questions does not appear to be a function of student major or gender. As with quantitative and conceptual questions, the results vary by experiment. For the kinetics experiment, biology majors and female students scored higher on the lab-related question. For the conductivity experiment, engineering majors and male students scored higher on the lab-related question. Rather than observing that verification experiments are generally more effective for a particular group of students (e.g., engineering majors), the effectiveness of a particular experiment should be determined for each population.

Student performance on quantitative and conceptual questions also was compared as a function of major. Analysis of two pairs of questions related to pH titration and electrochemical cell experiments indicates that engineering majors were better at answering quantitative questions and biological science majors tended to score better on conceptual questions. These results might be due to their relative prior experience with these types of questions.

The results of this study suggest that there is a relationship between student exam performance and some verification experiments. Future studies should include investigations of experiments related to chemistry topics not included in this study. Additional studies should also include a larger number of paired exam questions. Since this study only focused on the impact of verification experiments on exam performance, there is still a need to investigate the effectiveness of this type of experiment on the development of other skills, such as lab work and scientific writing.

References

[1] Hofstein, A.; Lunetta, V. N., Rev. Educ. Res. 1982, 52 (2), 201-217.

[2] Hofstein, A.; Lunetta, V. N., Science Education 2004, 88 (1), 28-54.

[3] Matz, R. L.; Rothman, E. D.; Krajcik, J. S.; Holl, M. M. B., J. Res. Sci. Teach. 2012, 49 (5), 659-682.

[4] Bybee, R. W., Science Education 1970, 54 (2), 157-161.

[5] Ben-Zvi, R.; Hofstein, A.; Samuel, D.; Kempa, R. F., J. Chem. Educ. 1976, 53 (9), 575-577.

[6] Hill, B. W., J. Res. Sci. Teach. 1976, 13 (1), 71-77.

[7] Wheatley, J. H., J. Res. Sci. Teach. 1975, 12 (2), 101-109.

[8] Raghubir, K. P., J. Res. Sci. Teach. 1979, 16 (1), 13-17.

[9] Etkina, E.; Karelina, A.; Ruibal-Villasenor, M.; Rosengrant, D.; Jordan, R.; Hmelo-Silver, C. E., J. Learn. Sci. 2010, 19 (1), 54-98.

[10] Renner, J. W.; Fix, W. T., J. Chem. Educ. 1979, 56 (11), 737-740.

[11] Kösem, Ş. D.; Özdemir, Ö. F., Science & Education 2014, 23 (4), 865-895.

[12] von Aufschnaiter, C.; von Aufschnaiter, S., Eur. J. Phys. 2007, 28 (3), S51-S60.

[13] Holstermann, N.; Grube, D.; Bögeholz, S., Research in Science Education 2010, 40 (5), 743-757.

[14] John Carnduff, N. R., Enhancing Undergraduate Chemistry Laboratories, Pre-Laboratory and Post-Laboratory Exercises, Examples and Advice. Royal Society of Chemistry: London, 2003; 32 pages.

[15] Bopegedera, A. M. R. P., J. Chem. Educ. 2011, 88 (4), 443-448.

[16] Lagowski, J. J., J. Chem. Educ. 1990, 67 (3), 185.

[17] Abraham, M. R., J. Chem. Educ. 2011, 88 (8), 1020-1025.

[18] Domin, D. S., J. Chem. Educ. 1999, 76 (4), 543-547.

[19] Pickering, M., J. Chem. Educ. 1987, 64 (6), 521-523.

[20] Louis E. Raths, S. W., Arthur Jonas, and Arnold M. Rothstein, Teaching for Thinking: Theory, Strategies, and Activities for the Classroom. Teachers College Press: New York, 1986; 264 pages.

[21] Tobin, K.; Tippins, D. J.; Gallard, A. J., Research on Instructional Strategies for Teaching Science. In Handbook of Research on Science Teaching and Learning, Gabel, D. L., Ed. Macmillan: New York, 1994; pp 45-93.

[22] Holme, T.; Murphy, K., J. Chem. Educ. 2011, 88 (9), 1217-1222.

[23] Thorne, E. J., Laboratory Manual for General Chemistry. John Wiley & Sons, Inc: Hoboken, NJ, 2012; 101 pages.

[24] Thorne, E. J., Laboratory Manual for General Chemistry. John Wiley & Sons, Inc: Hoboken, NJ, 2013; 103 pages.
