International Journal of Probability and Statistics

p-ISSN: 2168-4871 e-ISSN: 2168-4863

2012; 1(4): 80-94

doi: 10.5923/j.ijps.20120104.01

How Quantum is the Classical World

Gary Bruno Schmid 1, Rudolf M. Dünki 2

1Research Group F.X. Vollenweider, Psychiatric University Clinic, University of Zuerich, Lenggstrasse 31, CH-8029 Zürich, Switzerland

2Dept. of Physics, CAP, University of Zuerich, Winterthurerstr. 190, CH-8057 Zürich, Switzerland

Correspondence to: Gary Bruno Schmid, Research Group F.X. Vollenweider, Psychiatric University Clinic, University of Zuerich, Lenggstrasse 31, CH-8029 Zürich, Switzerland.

Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Background: Experiment has confirmed that quantum phenomena can violate the Information Bell Inequalities. A violation of one or the other of these inequalities is equivalent to a violation of local realism, meaning that either objectivity or locality, or both, do not hold for the phenomena under investigation. Main idea: Test suspected classical violations of local realism for statistical significance by determining whether or not a data set – which displays a well-defined, built-in complementarity structure – is “classically random”. Problem: Design an experiment which mocks up ontological complementarity in a set of classical measurements, thus providing epistemologically complementary (pseudocomplementary) data which can be analysed with the same information theoretic algebra normally used to detect an information deficit in quantum physical results. Method & Analysis: Test for violations of local realism in classical measurements in the absence of ontological complementarity using inference statistics to reject stochastic and/or suspected violations of local realism in such data. Results: Local realism can be violated stochastically in the case of classical measurements using an experimental design mocking complementarity. The probability that local realism is violated by chance increases (1) the more outcomes a given experiment encompasses and (2) in accord with the (anti)correlation of results between the outcomes of a given experiment. The degree to which local realism is violated by chance is relatively independent of the number of outcomes and the correlation of results between the outcomes of a given experiment. A measure for the statistical significance of violations of local realism is presented.
Conclusions: Pseudocomplementary data collected from a classical experiment in the way suggested here can be tested for the statistical significance of an initially hypothesized synchronicity between the (dichotomous) values of two physical quantities measured in two isolated, classical systems.

Keywords: Bell’s Inequality, Complementarity, Entanglement, Information Deficit, Local Realism, Nonlocality, Pseudo-Telepathy, Quantum Physics, Synchronicity

Cite this paper: Gary Bruno Schmid, Rudolf M. Dünki, "How Quantum is the Classical World", International Journal of Probability and Statistics, Vol. 1 No. 4, 2012, pp. 80-94. doi: 10.5923/j.ijps.20120104.01.


1. Introduction

The reader may remember asking themselves as a graduate student questions like: “Is a perfect (ultimately thin), macroscopic coin in an unstable balance between two states at once just before collapsing into a stable classical state of Heads or Tails actually in a quantum physical q-bit state, if only for a fraction of a second, before falling flat on a table top? And if so, could two such classical coins somehow become entangled?”

It’s easy to ask this provocative question, but hard to design an experiment to falsify the corresponding Null Hypothesis H0: Local realism obtains macroscopically. (The concept of local realism is discussed below.) Quantum theory does not exclude the possibility that the proposed Null Hypothesis might be correct. Indeed, there is an ongoing scientific endeavor to reconcile the underlying quantum nature of the world with everyday classicality; see, for example, the discussions of quantum Darwinism [1, 2, 3, 4], einselection [5], and the emergence of local realism [6, 7, 8, 9, 10]. Unfortunately, however, the quantum probability field serves as little more than a wonderful black box, like the psychologist’s unconscious and the believer’s netherworld (German: Jenseits), within which rational explanations of all kinds of bizarre speculations can lie hidden unless an experiment can be designed and carried out to test them.

2. Objectives

There are at least two difficulties involved in an experimental test of the above-mentioned Null Hypothesis: (1) In contrast to quantum physics, there is, to our knowledge, no algebra capable of describing the above-mentioned or similar classical situations which can be used to test the Bell Inequalities; (2) the utter absence of complementarity in classical measurements seems to make it impossible to design a classical experiment along the lines of a quantum experimental test of the Bell Inequalities, even if such an algebra were to exist. Overcoming these difficulties is what this paper is all about.

The Bell expressions, be they standard or information-theoretic ones, involve correlations or probabilities which can be tested in the lab and allow for experimental errors. Since they are statistical statements, it is no wonder that, in a finite set of data, they can be violated by chance. If the violation happens rarely, the tested source may just as well be local realistic. Indeed, and in spite of the provocative title, we do not wish to suggest that there has to be something “nonlocally realistic” going on in the classical world just because the violation of local realism can happen by chance using a classical model. Quite to the contrary, in this paper, we provide rigid criteria which would have to be fulfilled if a classical-like model could indeed – as shown here – now and again violate nonlocal realism.

2.1. Perspectives

Bell’s Inequality is a restriction placed by local realistic theories such as classical physics upon observable correlations between different systems in experiments. Bell’s original theorem and stronger versions of this theorem “state that essentially all realistic local theories of natural phenomena may be tested in a single experimental arrangement against quantum mechanics, and that these two alternatives necessarily lead to observably different predictions” [11]. In this work, we study the probability of violating the Bell Inequality using two classical-like numerical models and a finite number of “experimental” runs. In the first model (stochastic case), the results of all local measurements are totally random and independent, also across different runs of the experiment. In the second model (anticorrelated case), the results for the settings chosen by the simulations are always anticorrelated, and all other predetermined results are random and independent. Note that these models are nothing more than numerical simulations of two possible, extreme, experimental outcomes. The results provide a statistical basis which can be used to estimate the significance of outcomes from real experiments such as the quantum coin experiment discussed below. It is only the experiments themselves, and not these numerical simulations, which must of course be expected to avoid certain loopholes which would otherwise allow violations of Bell’s Inequality within the context of a classical experiment. Such loopholes include the locality, the fair-sampling, and the freedom-of-choice loopholes [12]. (See also [13, 14, 15].)

We also emphasize that although our experimental design might aid the explanation of possible observed violations of Bell’s Inequality by local realistic theories, its aim is to place a strong restriction on the acceptance of systematic violations of local realism in a particular set of repeated measurements. In order to do this, however, we have had to figure out a way to gather and analyse the results coming from a classical experiment which, of course and by its very nature, cannot involve ontologically complementary variables (see below).

2.2. Information has its Price

Quantum physics is the physics of the microcosmos, the world of the tiniest things like molecules, atoms, electrons, protons, neutrons, photons etc. The behaviour of things in the microcosmos obeys natural laws which seem to be in defiance of our trusted, everyday experience. This is especially true with regard to our empirically based belief in an objective, local reality. No one in their right mind, thinking in a normal, common sense way, would ever expect, for example, that two separated coins would always be able to display an opposite result (heads vs. tails or tails vs. heads) with 100% reliability without there being some kind of information transfer taking place between them to correlate their outcomes nonlocally. But this is, of course, just the kind of behaviour which “quantum things” can, under particular experimental conditions, be shown to display.

There is still no way to logically get from the empirically established, nonlocal realism of the quantum world to the just-as-empirically-established local realism of the classical world in a self-consistent, complete, analytical way. This unsolved problem is often overlooked when trying to apply the ideas of quantum entanglement and teleportation as metaphors to understanding certain classical observations such as unusual healing methods and psychological phenomena [16]. For example: Although it is not unthinkable that the human mind in an especially prepared mental state of psychological absorption, dream, flow, hypnosis, meditation or trance etc. might somehow be able to biochemically isolate certain macromolecules from their thermodynamic environment within the brain, thus enabling them to partake of nonlocal, quantum phenomena, that is, to inhibit the decoherence of the Psi-function of the entire system [17, 18, 19, 20, 21, 22], this non-impossibility is highly speculative and does not open the door to using quantum physics to simply explain such unphysical things as, say, mental telepathy, as many (enthusiastic) authors might like to believe.

Nature dictates that information has its price. The lowest non-negotiable one is defined by quantum physics: Carrying out a decision or expressing an intention, for example, measuring something, always disturbs something else, even if this disturbance might, as in most cases, go unnoticed. Let’s take, for example, the famous double-slit experiment: Light enters a single, narrow opening and illuminates a screen with two slits, each of which can be independently closed again. If both slits are left open, one observes interference bands on the screen attesting to the wave-like property of light. If one of these slits is closed, these bands disappear and one simply observes a single illuminated stripe, as if light were particle-like. Quantum interference occurs only if absolutely no information is available as to which way these light “particles” might have taken. The point is not whether or not an observer might actually be in possession of this information but, rather, whether or not they could in any possible way, even if only in principle, deduce which way these particles might have taken. In order for interference to be observed, it must be impossible for anyone – no matter where they might be and no matter what kind of sophisticated technology they might have at their disposal – to figure out which of the two possible ways these light particles have taken. In other words, for the wave-like interference to occur, one must be able to completely and totally isolate the system from its (thermodynamic) environment so that no kind of surreptitious information transfer, like, say, the emission of an electromagnetic or some other kind of signal, might, in principle at least, be able to give a clue as to which path the light particles are taking.

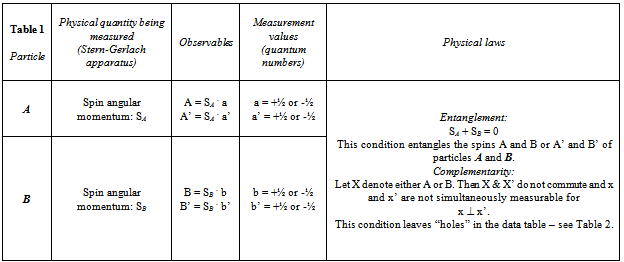

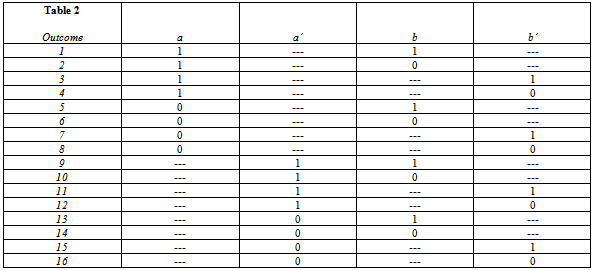

2.3. Braunstein & Caves Experiment

Consider the experiment suggested by Braunstein & Caves (henceforth referred to as B & C) consisting of two counter-propagating spin-½ particles, A and B [23]. These particles are emitted by the decay of a zero angular momentum particle and, accordingly, have spins SA and SB such that SA + SB = 0. Each particle is sent through a Stern-Gerlach apparatus which measures a component of the particle’s spin along one of two possible directions labelled by the unit vectors a and a’ for particle A, and b and b’ for particle B. (The factors involved in the Stern-Gerlach experiment are summarized in Table 1.) Accordingly, for particle A there are two observables A = SA · a and A’ = SA · a’. Similarly, for particle B there are two observables B = SB · b and B’ = SB · b’. For a spin-½ system, the possible values of A and A’, labelled by a and a’, respectively, and denoted by the quantum numbers m = +½ and m = -½, are dichotomous, that is, a = +½ or -½ and a’ = +½ or -½. (States with positive or negative spin values are called spin-up or spin-down states, respectively.) Accordingly, there are 4 observables: A and A’ associated with particle A, and B and B’ associated with particle B.

Entanglement of two systems means that, at any given instant of time after their separation and isolation, the overall, combined system is simultaneously in two states at once, each of which corresponds to two observably different outcomes for the individual systems. The problem of entanglement still remains a theoretical and experimental concern of modern research [24, 25, 26, 27]. To get a clear picture of what entanglement is all about, consider, for example, the overall, combined system of two spinning coins, Coin1 and Coin2, being tossed about in a shaker. After letting them fly separate ways out of the shaker, the combined system could be thought of as being simultaneously in the following four states at once: (Coin1-spin-up and Coin2-spin-down) AND (Coin1-spin-down and Coin2-spin-up) AND (Coin1-spin-up and Coin2-spin-up) AND (Coin1-spin-down and Coin2-spin-down). All four states in parentheses correspond to four observably different outcomes for the individual coins. In the jargon of quantum physics, one says that the state vector of the overall, combined system is a vector of the second type [28].

In the experiment of B & C, we have the following condition of entanglement: SA + SB = 0. This relation entangles the spins A and B of particles A and B into an ambiguously superposed state of the form: (A-spin-up and B-spin-down) AND (A-spin-down and B-spin-up). The same pertains for A’ and B’. Such an ambiguous, superposed state is called a q-bit. Furthermore, in a quantum physical system, the two observables X and X’ associated with each system X do not commute under certain conditions, for example in this case, when the angle between the unit vectors x and x’ is 90 degrees. Under this condition, X and X’ cannot be observed simultaneously, that is, the values of x and x’ cannot be determined simultaneously.

This condition of complementarity (non-commutativity) between X and X’ in each system leaves corresponding “holes”, that is, empty cells, in the data table (see Table 2). It is just this combination of entanglement for a certain preparation of states and complementarity for a certain range of angles between x and x’ which leads to violations of the peculiar statistics underlying Bell’s Inequality. In the given case of a system with spin zero disintegrating spontaneously into two spin-½ particles, that is, into the zero-angular-momentum state of SA and SB, this leads to a ca. 41% degree of violation of Bell’s Inequality for x perpendicular to x’, with an angle of 135° between a and b’, and 45° between both b’ and a’ as well as between a’ and b ([28], p. 15).
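The roughly 41% figure quoted above can be checked with a short numerical sketch. It assumes, as is not stated explicitly in the text, that the inequality meant is the CHSH form with local realistic bound 2 and that the singlet correlation for outcomes scaled to ±1 is E = -cos(angle). The concrete directions 0°, 45°, 90° and 135° and the function names are illustrative choices that satisfy the quoted angles.

```python
import math

def singlet_correlation(angle_deg: float) -> float:
    """Quantum correlation E = -cos(angle) for a spin-1/2 singlet pair,
    with measurement outcomes scaled to +1 / -1."""
    return -math.cos(math.radians(angle_deg))

def chsh_value(a: float, a_prime: float, b: float, b_prime: float) -> float:
    """|E(a,b) - E(a,b') + E(a',b) + E(a',b')| for coplanar directions in degrees."""
    E = singlet_correlation
    return abs(E(b - a) - E(b_prime - a) + E(b - a_prime) + E(b_prime - a_prime))

# Assumed coplanar directions: a perpendicular to a', b perpendicular to b',
# 135 degrees between a and b', 45 degrees between b' and a' and between a' and b.
S = chsh_value(a=0.0, a_prime=90.0, b=45.0, b_prime=135.0)
excess = S / 2.0 - 1.0  # relative excess over the local realistic bound of 2

print(f"CHSH value: {S:.4f}, excess over bound: {excess:.1%}")
```

Under these assumptions the CHSH value equals 2√2, which exceeds the bound of 2 by about 41%.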


3. Methods

3.1. Pseudocomplementary Data: Ontological Versus Epistemological Complementarity

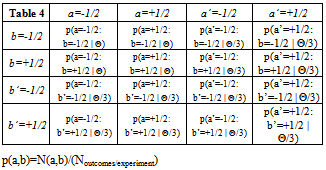

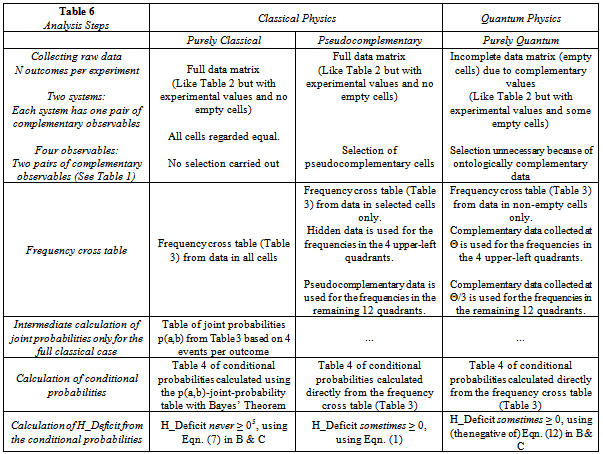

Now what if we don’t know the algebra underlying a phenomenon, but we do have a series of measurements at hand and want to test the original data table for violations of local realism? (This situation was briefly mentioned under Point 1 above.) If the phenomenon involves complementarity, there will be missing data in the cells complementary to the measurable ones. Let us call this the case of strong or ontological complementarity. Strong complementarity obtains in the quantum world. In this case, we would simply proceed as outlined above to calculate the information deficit from only those frequency cells providing data.

But what if complementarity only obtains in a weak or epistemological sense [29]? Could there be a clever way to select one or the other variable, a or a’, from system A, and b or b’ from system B so as to result in a violation of local realism? In other words, what if there were some way to know in each outcome which of the two measurements, a or a’, from system A, and b or b’ from system B, is physically relevant, even though the complementary quantity in each system is also observable (but, presumably, not physically relevant)? This knowledge would take over the role of the complementarity between certain observables which leads to the noncommutativity in the algebra we always have in the quantum physical case. Then the particular set of selected measurements of the one quantity a or a’ in system A and b or b’ in system B from each outcome might reliably result in a violation of local realism, whereas the overall set of all “weakly” complementary quantities from both systems would simply yield random results which may or may not stochastically violate local realism.

Let us assume that the data selected in some clever way as mentioned above is the “real”, physically relevant data (see, for example, Table 5). (Exactly how this data is collected, whether objectively according to some algorithm or device or subjectively according to intuition, need not concern us for the purposes of this paper.) We call such a data set pseudocomplementary data, and the remaining data, which would otherwise be missing because it would not be observable in a real quantum experiment, hidden data. Note that the hidden data are extracted from the basic population in a fashion similar to the extraction of the pseudocomplementary data, but are assumed to involve little to no “entanglement”. Accordingly, the entropies are additionally conditioned on the pseudocomplementary data as explained in Table 6 below in order to use epistemological complementarity to reconstruct or mimic ontological complementarity. This statistical mimicry is the gist of our analysis.

The pseudocomplementary data can be understood to lead to the p(a|b | Θ/3)’s in Table 4.

3.2. How Quantum is the Classical World

A classical phenomenon taken together with an algorithm to reliably carry out a clever choice of pseudocomplementary data could be thought to be quantum physical to the extent to which the data resulting from this algorithm violates local realism. The B & C Inequality involves conditional entropies between measurement results and, as such, is fully operational and can be established in any theory to test violations of local realism. The question is now: How can we discover whether or not local realism is violated in the physically relevant results, that is, in the pseudocomplementary data of a classical phenomenon? In other words, how quantum is the classical world?
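Because the B & C Inequality is built from conditional entropies of measurement results, it can be evaluated directly from tabulated outcomes. The following is a minimal sketch of that idea, assuming a simple plug-in (relative frequency) estimate of H(X|Y) and the standard form of the information-theoretic inequality, H(A|B) ≤ H(A|B’) + H(B’|A’) + H(A’|B). The function names are hypothetical, and the additional conditioning on hidden and pseudocomplementary data used in this paper is not reproduced here.

```python
from collections import Counter
from math import log2

def conditional_entropy(xs, ys):
    """Plug-in estimate of H(X|Y) in bits from paired samples xs[i], ys[i]."""
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("xs and ys must be non-empty and of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))   # counts of (x, y) pairs
    marginal_y = Counter(ys)       # counts of y alone
    h = 0.0
    for (x, y), count in joint.items():
        # -sum over (x, y) of p(x, y) * log2 p(x | y)
        h -= (count / n) * log2(count / marginal_y[y])
    return h

def information_bell_gap(h_ab, h_ab_prime, h_b_prime_a_prime, h_a_prime_b):
    """H(A|B) - [H(A|B') + H(B'|A') + H(A'|B)].
    A positive value means the inequality is violated (sign convention assumed)."""
    return h_ab - (h_ab_prime + h_b_prime_a_prime + h_a_prime_b)

# Perfectly anticorrelated columns carry no conditional uncertainty ...
print(conditional_entropy([0, 1, 0, 1], [1, 0, 1, 0]))
# ... whereas independent, balanced columns carry one full bit.
print(conditional_entropy([0, 0, 1, 1], [0, 1, 0, 1]))
```

With only a handful of outcomes per experiment, such plug-in estimates fluctuate strongly, which is one way to see why chance violations can appear in small data sets.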

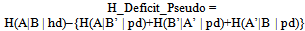

3.3. Quantum Coin Study Design

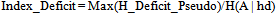

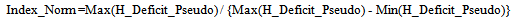

In an attempt to answer the above questions, we carried out the following two extreme quantum coin computer simulations under selection of only one of the two variables, a and a’, from system A, and similarly for system B. (We will return to this quantum coin simulation further below within the context of a corresponding gedankenexperiment.) Accordingly, the counterpart to the quantum physical results schematically shown in Tables 2 and 3 above is obtained by constructing pseudocomplementary data for a new Table 2 in the following ways:

1.) Stochastic Case: For the given outcome, randomly generate, four times, a 0 (“Tails”) or a 1 (“Heads”), and write each of these values, one after the other, into the corresponding column of data: a, a’, b, b’. This procedure will gradually lead to a full data matrix of random results with no empty cells. For each outcome, as mentioned above, select one column from each of the groups (a, a’) and (b, b’), for example, a and b’ (instead of a and b, or a’ and b, or a’ and b’) according to some selection rule, e.g., by means of a random number generator or intentionally by a clever, educated guess. This defines the subset of pseudocomplementary data (in this example, cells a and b’), with the remaining, so-called hidden data located in the remaining, unselected cells (in this example, cells a’ and b). For a given outcome, all results are random: the selection of the values 0 or 1 as well as the choice of complementary observables.

2.) Anticorrelated Case: For each outcome, randomly (or cleverly, see above) define one of the columns a or a’ and, similarly, only one of the columns b or b’ to contain the pseudocomplementary data. (The other cells, by default, contain what we have come to call hidden data values in this outcome.) Now randomly generate each of the pseudocomplementary values a or a’ as a 0 (“Tails”) or a 1 (“Heads”). Then require the value of the corresponding pseudocomplementary cell b or b’, respectively, to be anticorrelated with the pseudocomplementary cell a or a’, and the value of the remaining hidden cell to be random. Accordingly, all selected (pseudocomplementary) cells have perfect anticorrelation between them, and all nonselected (hidden) cells contain random results. Again we have a full data matrix (no empty cells) whereby only half of the data is assumed to be physically relevant, namely, the pseudocomplementary data.

A computer simulation or “experiment”, for short, was defined to consist of 4, 8, 12, or 16 pseudocomplementary numerical outcomes. A set of 10’000 experiments was carried out in each case for each of the two above-mentioned extremes: Stochastic Case and Anticorrelated Case. The information deficit, H_Deficit_Pseudo, was calculated for each computer experiment as suggested in the paper of B & C in the following way (measured in bits):

| (1) |

| (2) |


- is a measure of the extent to which nonlocal realism is violated in a set of experiments. Here, the value of H(A | hd) for the data matrix with maximum H_Deficit_Pseudo is used. Another measure which also proves useful is

| (3) |

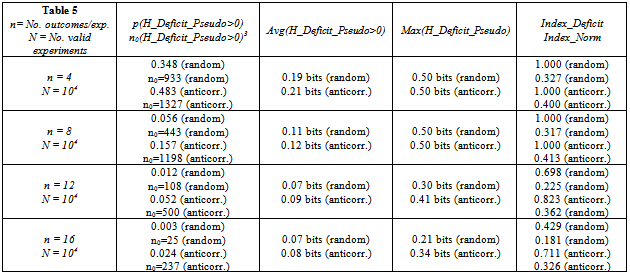
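The two data-generation procedures of Section 3.3 (Stochastic Case and Anticorrelated Case) can be sketched as follows. This is only an illustration under stated assumptions: the selection rule is taken to be a uniform random choice, which is one of the options the text allows, and the function name and the row layout (a dictionary of cell values plus the pair of selected columns) are hypothetical. The entropy analysis itself is not included.

```python
import random

COLUMNS = ("a", "a'", "b", "b'")

def simulate_experiment(n_outcomes, anticorrelated, rng):
    """Generate one 'experiment' of n_outcomes rows.

    Each row holds four dichotomous values (0 = Tails, 1 = Heads) and the
    pair of columns selected as pseudocomplementary; the two unselected
    columns are the hidden data.
    """
    rows = []
    for _ in range(n_outcomes):
        # Full data matrix: every cell gets a random 0 or 1.
        values = {name: rng.randint(0, 1) for name in COLUMNS}
        # Assumed selection rule: uniform random choice within each group.
        selected_a = rng.choice(("a", "a'"))
        selected_b = rng.choice(("b", "b'"))
        if anticorrelated:
            # Force the selected B cell to be opposite to the selected A cell;
            # the hidden cells stay random.
            values[selected_b] = 1 - values[selected_a]
        rows.append({"values": values, "pseudo": (selected_a, selected_b)})
    return rows

rng = random.Random(2012)  # fixed seed only to make the sketch repeatable
stochastic = simulate_experiment(4, anticorrelated=False, rng=rng)
anticorr = simulate_experiment(16, anticorrelated=True, rng=rng)
for row in stochastic:
    print(row["pseudo"], row["values"])
```

Repeating `simulate_experiment` many times for each of n = 4, 8, 12 and 16 would give the kind of sample from which the frequencies of positive information deficits are counted.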

4. Results

We are interested in the two cases mentioned above:

1.) Random selection of pseudocomplementary cells and random results in cells.

2.) Random selection of pseudocomplementary cells and perfect anticorrelation between results within the selected cells.

In both cases, it is helpful to know from a given set of experiments:

a.) the probability that the value of H_Deficit_Pseudo is greater than zero: p(H_Deficit_Pseudo>0)

b.) the frequency of values of H_Deficit_Pseudo greater than zero: No(H_Deficit_Pseudo>0)

c.) the average value of H_Deficit_Pseudo: Avg(H_Deficit_Pseudo)

d.) the maximum value of H_Deficit_Pseudo: Max(H_Deficit_Pseudo)

e.) Index_Deficit

f.) Index_Norm

On the one hand, it is reasonable to assume that especially in the first extreme quantum coin experiment described here, the value of H_Deficit_Pseudo will always be less than zero since, in this stochastic case, there is no essential difference in the statistical nature of the hd and pd data sets. Nevertheless, as shown in Table 7, this condition is, indeed, sometimes, if only rarely, violated by chance. On the other hand, in the second, anticorrelated case, one might expect local realism to be greatly influenced by the significant mathematical differences in the statistical correlations within the hd and pd data sets. However, under this condition too, local realism is only seldom violated.

The results for repeating a set of 10’000 computer experiments with experiments comprising only four, eight, or twelve outcomes per experiment are presented in Table 5.

1.) The smaller the number n of outcomes per experiment, the greater the probability {p(H_Deficit>0) & No(H_Deficit>0)} that this set of outcomes will lead to a positive information deficit.

2.) The smaller the number n of outcomes per experiment, the greater the average value of H_Deficit_Pseudo for a given degree of correlation between the results of the pseudocomplementary cells. Nevertheless, even in the case of perfect anticorrelation for n=4, the average value of H_Deficit_Pseudo is only 0.21 bits.

3.) The smaller the number n of outcomes per experiment, the greater the maximum value of H_Deficit_Pseudo for a given degree of correlation between the results of the pseudocomplementary cells. Nevertheless, even in the case of perfect anticorrelation for n=4, the maximum value of H_Deficit_Pseudo is only 0.50 bits.

4.) For a given number n of outcomes per experiment, the average value of H_Deficit_Pseudo is larger for perfect as opposed to random correlation between results in the pseudocomplementary cells. Similarly, for a given number n of outcomes per experiment, the maximum value of H_Deficit_Pseudo for perfect correlation between results in the pseudocomplementary cells is greater than or equal to that for random correlation.

5.) The smaller the number of outcomes n per experiment, the less the extent (Index_Deficit & Index_Norm) to which nonlocal realism (H_Deficit_Pseudo>0) is violated has to do with the degree of correlation between the pseudocomplementary data.

Our pseudocomplementary classical data mimic a quantum physical spin-½ system. In the quantum physical spin-½ system, the spins are perfectly anticorrelated under entanglement. In Figure 1 of B & C we see that although the value of Max(H_Deficit_Pseudo) for the quantum physical systems under consideration increases with spin number from ca. 0.25 bits for spin ½ to ca. 0.45 bits for spin 25, the physical window (angle between entangled vectors) within which entanglement occurs shrinks to 0 ([23], p. 664). Other authors have shown that although local realism can indeed be violated by noise-resistant systems of arbitrarily high dimensionality d, the quantum state in question vanishes to the extent (probability) to which it is affected by noise ([30], Eqn. 20). Casually summing up “on the back of an envelope”: the larger the spin, the closer the system is to being classical, but the greater is the probability that the system is affected by noise and the smaller is the physical window within which entanglement can be expected to occur. Furthermore, in the quantum physical case under the experimental condition of entanglement, any and every set of outcomes, no matter how large, always leads to a positive information deficit: p(H_Deficit_Pseudo>0) = 1.00. This is the case, for example, for all angles Θ in the range between 0 and the crossing point of the information difference curve with the null information difference axis in Figure 1 of B & C.

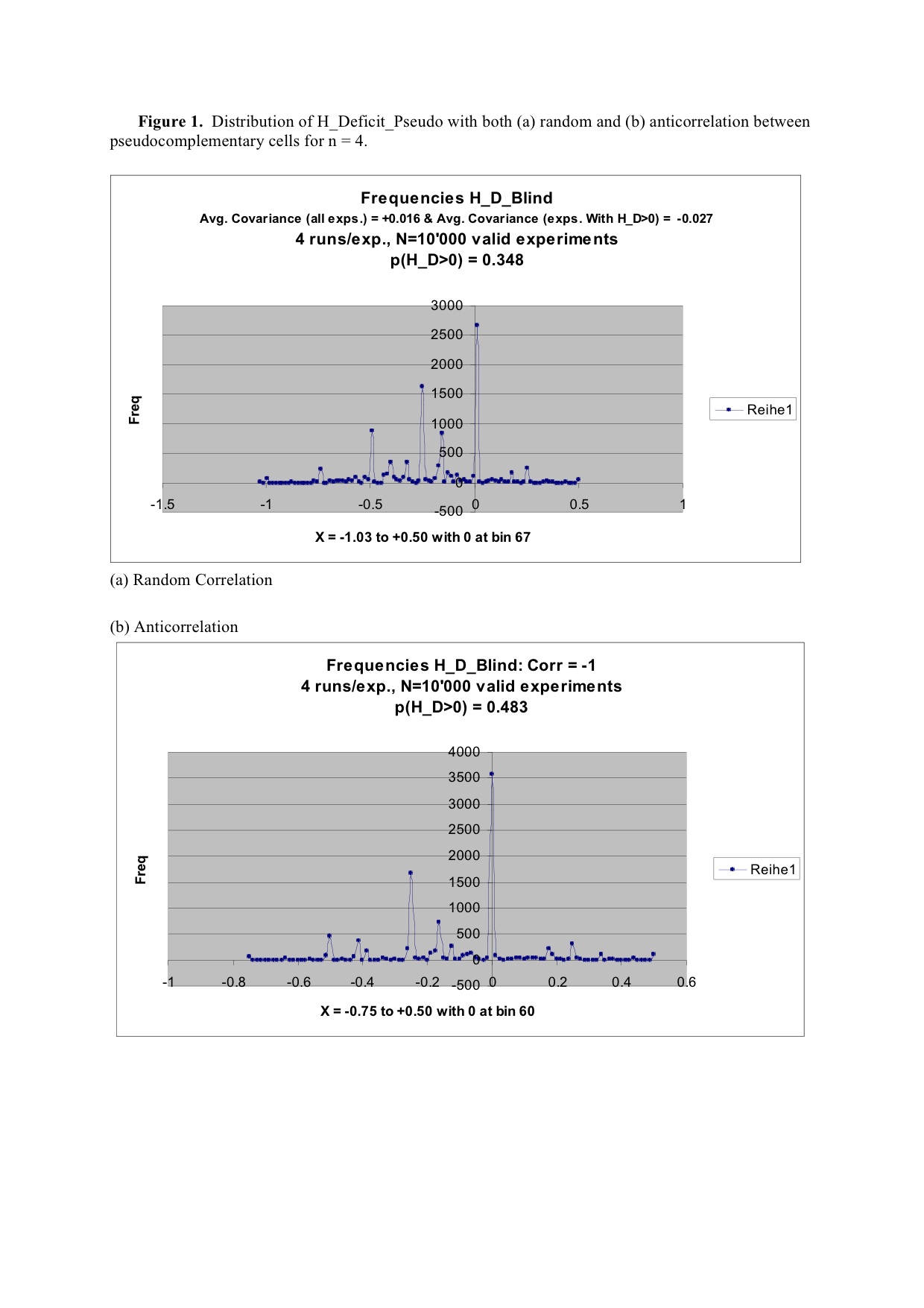

4.1. Distributions of H_Deficit_Pseudo with both Random and Anticorrelation between Pseudocomplementary Cells for n = 4 and 16

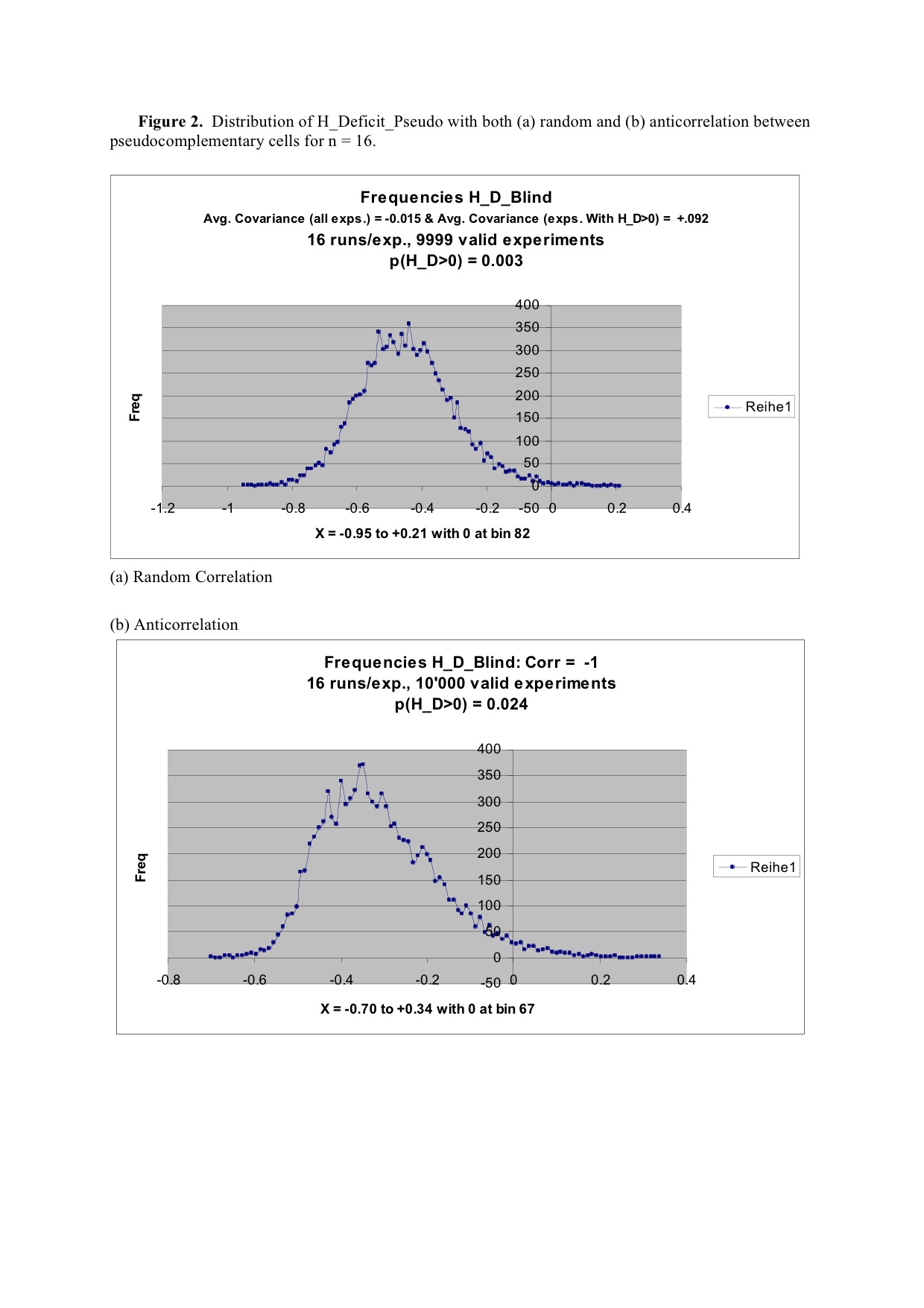

The distributions of H_Deficit_Pseudo from Eqn. (1) are given for n=4 and n=16 in Figures 1 and 2, respectively, and are labelled H_D_Hidden in reference to the first term H(A|B | hd) in this equation. (Distributions for n=8 and n=12 are available from the authors upon request.) The average covariances for all experiments as well as for only those experiments leading to positive values of H_Deficit_Pseudo are shown in the respective figure captions. (Covariances instead of correlations are given for randomly generated data because EXCEL gives singular results for data with correlations of +1.0.)

4.2. Statistical Significance of Violations of Local Realism

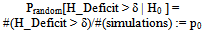

We have demonstrated that local realism can be violated stochastically even in classical experiments when carried out in the above-mentioned way to accommodate pseudocomplementary data. The results of our computer simulations also offer a way to test for a possible violation of local realism by means of an appropriate statistical measure for the goodness of classical violations of local realism.

From the standpoint of inference statistics, it is advisable to deny the violation of local realism a priori and to state this denial as the Null Hypothesis H0: Local realism obtains macroscopically. This means that any violations would be regarded as non-deterministic (stochastic), i.e., as occurring only randomly. The acceptance of a systematic violation in repeated measurements is then the Alternative Hypothesis H1: Local realism does not obtain in a particular set of repeated measurements.

Let us assume the Null Hypothesis. The probability that a spin s system displays a positive H_Deficit may then be read directly from the sample of outcomes of the corresponding simulation: This probability, Prandom[H_Deficit > δ | H0], or p0 for short, is just the number of outcomes displaying H_Deficit > δ (δ ≥ 0), #(H_Deficit > δ), divided by the size, #(simulations), of the whole sample:

| (4a) |

| (4b) |
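The ratio described in the text for p0, the number of simulated experiments with H_Deficit > δ divided by the total number of simulations, can be sketched as below. The function name is hypothetical and the list of deficit values is an illustrative placeholder, not simulation output from this paper.

```python
def exceedance_probability(deficits, delta=0.0):
    """Estimate p0 = #(H_Deficit > delta) / #(simulations) for delta >= 0."""
    if delta < 0:
        raise ValueError("delta must be non-negative")
    if len(deficits) == 0:
        raise ValueError("need at least one simulated experiment")
    hits = sum(1 for h in deficits if h > delta)
    return hits / len(deficits)

# Placeholder H_Deficit values (in bits) from four imagined simulated experiments.
example_deficits = [-0.5, 0.1, 0.3, -0.2]
print(exceedance_probability(example_deficits))             # delta = 0
print(exceedance_probability(example_deficits, delta=0.2))  # stricter threshold
```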

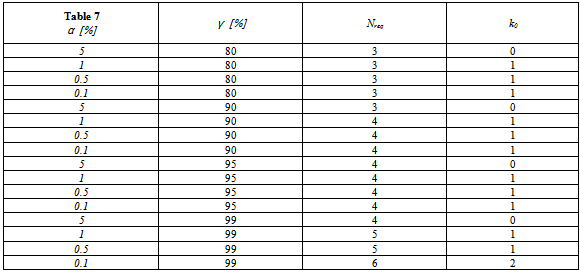

4.3. Number of Required Experiments Nreq

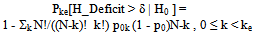

If, as is the case in quantum physical experiments, a quantitatively specified Alternative Hypothesis H1 is formulated (see below), one can determine the number of experiments Nreq which need to be conducted to be able to accept the Null Hypothesis H0 with a significance level of (1-α), or H1 with a significance level of γ (γ ≲ 1.0). However, in the case that the Null Hypothesis H0 is rejected because α is, say, 6%, it would be mistaken to assume that the Alternative Hypothesis H1 can be accepted at the level of 94%. In other words, we still need to know to what extent the rejection of H0 means that the Alternative Hypothesis H1 can be accepted at some level, say, γ ≥ 80%.

On the one hand, we require under the Null Hypothesis, as in the case above, that the probability of a certain (or a more extreme) outcome in violation of the Null Hypothesis should not exceed α. (Usually, we take α ≤ 0.01.) On the other hand, we demand that, under the Alternative Hypothesis, the probability for such or a more extreme outcome is at least γ. (Usually, we take γ ≥ 0.80.) Both these requirements are expected to be fulfilled within the theoretical limit defined by the value k0. In other words, for an arbitrary number N, one has to find some k0 such that, for k outcomes with H_Deficit > 0, the following hold simultaneously:

| (5a) |

| (5b) |

| (6) |
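The search for k0 and Nreq described above can be sketched numerically. The sketch assumes, which the surviving text does not state explicitly, that the number k of experiments with H_Deficit > 0 among N independent experiments is binomially distributed, with success probability p0 under H0 and p1 under H1. The function names and the value p0 = 0.05 are illustrative placeholders; p1 = 1.0 corresponds to the quantum case p(H_Deficit_Pseudo>0) = 1.00 mentioned in Section 4.

```python
from math import comb

def binomial_tail(n, k, p):
    """P(K >= k) for K ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))

def find_threshold(n, p0, p1, alpha=0.01, gamma=0.80):
    """Smallest k0 with P(K >= k0 | H0) <= alpha, provided that also
    P(K >= k0 | H1) >= gamma; otherwise None.

    Both tails shrink as k0 grows, so the smallest k0 meeting the alpha
    condition has the best chance of meeting the gamma condition.
    """
    for k0 in range(n + 1):
        if binomial_tail(n, k0, p0) <= alpha:
            return k0 if binomial_tail(n, k0, p1) >= gamma else None
    return None

def required_experiments(p0, p1, alpha=0.01, gamma=0.80, n_max=1000):
    """Smallest N (up to n_max) for which a valid k0 exists, as (N, k0)."""
    for n in range(1, n_max + 1):
        k0 = find_threshold(n, p0, p1, alpha, gamma)
        if k0 is not None:
            return n, k0
    return None

# Placeholder chance probability p0 = 0.05 against the quantum case p1 = 1.0.
print(required_experiments(p0=0.05, p1=1.0))
```

The closer p1 lies to p0, the more experiments such a search will demand, which is why a quantitatively specified Alternative Hypothesis is needed before Nreq can be determined.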

4.4. Pseudocomplementary Quantum Coin Gedankenexperiment

Consider a physicist having a kind of classical Stern-Gerlach apparatus which may or may not be secretly manipulated. He can adjust this apparatus to measure the information deficit in the experiment discussed by B & C for their Figure 1. His experiment consists of two systems A and B, each of which, after being sent through the detector, evidences one of two orientations along one of two possible directions labelled by the unit vectors a and a’ for system A, and b and b’ for system B. For simplicity, we will call the value of the orientation along a or b: Heads, and the value of the orientation along a’ or b’: Tails. Our experimenter is now faced with a tricky problem in this gedankenexperiment, namely, he can’t be sure whether or not the values he gets have been secretly manipulated: The results he gets might have been generated either by a random number generator (for example, by the toss of a coin) or by the actual physics of a measurement process involving spin-½ particles and their respective complementary observables. This is because the tricky apparatus fills in, with random values, cells which would otherwise remain empty in a fully quantum experiment. (In other words, the cells with “---” in Table 2 would contain randomly selected 0’s and 1’s.)

| Figure 1. Distribution of H_Deficit_Pseudo with both (a) random and (b) anticorrelation between pseudocomplementary cells for n=4 |

| Figure 2. Distribution of H_Deficit_Pseudo with both (a) random and (b) anticorrelation between pseudocomplementary cells for n=16 |

- Accordingly, the experimenter has to decide from the statistics of the experimental outcomes alone whether he is observing the behaviour of a real, quantum physical system or that of a simple random number generator. All our experimenter can do is use his gut feeling and guess which measurements are physical and which can be neglected as artifactual. For reasons which should already be clear from our discussion of the quantum coin computer experiment above, the “complementarity” in this gedankenexperiment arises not only from the ontology underlying the actual measurements but also from the epistemology underlying the mental choices made by the experimenter as to which two of an overall set of four measurements he intuitively decides to define to be the “physical” ones. Our experimenter doesn’t know whether or not he is dealing here with a fully quantum phenomenon and, therefore, doesn’t have any algebra to help him rationally select the angles between the unit vectors a, a’, b and b’. Nevertheless, he has been informed that, if the phenomenon is quantum at all, it involves spin-½ particles. Therefore, he decides for simplicity to also select these angles as discussed above: all four vectors coplanar, with ∠(a, b) = θ, ∠(a, b’) = θ/3, ∠(b’, a’) = θ/3 and ∠(a’, b) = θ/3. Then, he picks the angle θ between the unit vectors a and b at random between 0 and 100 degrees.
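The idea that purely random data can show a positive information deficit by chance can be illustrated with a small simulation. This is a rough sketch and not the authors' procedure. It assumes (1) that the deficit is the difference H(A|B) − [H(A|B’) + H(B’|A’) + H(A’|B)], with a positive value signalling a violation, and (2) that each of the four conditional entropies is estimated from its own, separately sampled set of coin tosses. The names `cond_entropy`, `h_deficit`, `random_pair` and `fraction_positive` are hypothetical.

```python
import random
from collections import Counter
from math import log2


def cond_entropy(xs, ys):
    """Empirical conditional entropy H(X|Y) in bits from paired samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    marg_y = Counter(ys)
    return -sum(c / n * log2(c / marg_y[y]) for (_, y), c in joint.items())


def h_deficit(pair_ab, pair_ab2, pair_b2a2, pair_a2b):
    """Assumed definition: H(A|B) - [H(A|B') + H(B'|A') + H(A'|B)].

    Each argument is a tuple (xs, ys) of equally long 0/1 sequences.
    """
    return cond_entropy(*pair_ab) - (
        cond_entropy(*pair_ab2)
        + cond_entropy(*pair_b2a2)
        + cond_entropy(*pair_a2b)
    )


def random_pair(n, rng):
    """Two columns of n fair coin tosses, coded as 0/1."""
    return ([rng.randint(0, 1) for _ in range(n)],
            [rng.randint(0, 1) for _ in range(n)])


def fraction_positive(n_outcomes, n_experiments, seed=0):
    """Share of simulated experiments whose deficit is positive by chance."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        pairs = [random_pair(n_outcomes, rng) for _ in range(4)]
        if h_deficit(*pairs) > 1e-12:  # small tolerance for rounding
            hits += 1
    return hits / n_experiments
```

Comparing, for example, `fraction_positive(4, 10000)` with `fraction_positive(16, 10000)` gives a rough feel for how the chance rate depends on the number of outcomes per experiment under these assumptions. The separate sampling in assumption (2) matters: if all four columns were taken from one complete table, the empirical frequencies would form a single joint distribution and the deficit could not be positive.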


5. Discussion & Conclusions

- This paper offers a method by which one can determine whether or not a set of seemingly random data – which displays a well-defined, built-in complementarity structure – is “classically random”. This kind of analysis might be especially relevant to the field of quantum cryptography (QCY). QCY is interested in deciding whether or not a message has been eavesdropped by determining whether or not the statistical structure of complementarity inherent to the data set has been disturbed. This can be formulated more concretely as follows: For a given data set, do the statistics of entangled events follow classical statistics, in which case the message has been eavesdropped, or quantum statistics, in which case it has not? The point is that any and every act of eavesdropping destroys the quantum physical correlations between entangled events. Here we do something quite similar although more general. Instead of offering statistical tests for the comparison “classical versus quantum statistics”, we offer a comparison “classical versus nonclassical statistics” WITHOUT the explicit assumption of a quantum physical background. This is certainly of interest because a deviation per se would already be a surprising result, independent of knowing the details of the underlying mechanism.

5.1. Objectivity, Locality and Local Realism

- Objectivity is a kind of reality assumption.6 It means that, at any given instant in time, all physical quantities considered to be state variables have definite values, independent of observation. Specifying values for all state variables (classical physics) or quantum numbers (quantum physics) at any given instant in time uniquely defines the momentary physical state of a system. Thus, both classically and quantum physically, objectivity means that an object is always in a definite physical state independent of observation. Vice versa, at any given instant in time, forcing a system into any one of its possible physical states means uniquely assigning values to all its state variables (classical physics) or quantum numbers (quantum physics). Accordingly, in each outcome of an experiment, all measurable physical quantities have definite values independent of observation. In classical physics, this is always the case for all state variables. In quantum physics, complementary (=mathematically noncommuting) physical quantities cannot be measured simultaneously within a given system. However, and that’s the claim of objectivity, it can be assumed even in the case of a complementary quantity that, in principle at least, the value of the “hidden” variable which is not determined and, hence, remains unknown, is nevertheless well-defined. This “in principle at least” is the crux of the matter surrounding the statistical implications of objective realism. Statistically speaking, objectivity means that the statistics of outcomes that measure a set of physical quantities are given by corresponding joint and conditional probabilities. Joint probabilities define the likelihood of finding simultaneous (=joint) values for two or more measurable quantities, called observables, some belonging to the one system, some to the other.
Under the condition that one already has knowledge about one or more of these values for the one system, there will be a certain probability, called a conditional probability, that one will discover certain values for some of the variables associated with observables of the other system. The more information the two systems in question share between themselves (=so-called mutual information), the larger this conditional probability will be on average (and vice versa). Locality is a kind of “nondisturbance assumption”. This means that if two systems are isolated, a measurement on one does not disturb the results of any measurements on the other ([23], p. 663). The idea of locality goes hand-in-hand with the idea of local causes, that is, separate systems can only influence one another via currents of substance-like quantities (mass-energy, momentum, angular momentum, entropy, charge, particle number etc.) flowing between them continuously through interstitially neighboring volumes of space-time [31]. Statistically speaking, locality means that the statistics of outcomes that measure a pair of physical quantities, one associated with each of two isolated systems, are entirely defined by the corresponding joint probabilities, in this case, by pair probabilities. In the case of isolated systems, the joint probabilities are given by simple products of corresponding single probabilities. Local realism assumes the validity of both objectivity and locality, that is, that physical systems have objective, local properties.
Local realism always fulfills two assumptions: (1) locality, i.e., no “substance” can disappear at one place and reappear at another without having flowed, continuously, through the interstitial regions of space separating them; (2) objectivity, i.e., this substance exists as a physical quantity unceasingly at each and every point in time while moving from the one place to the other, even if its existence has not been verified along the way. Statistically speaking, local realism establishes the existence and relevance of the relationship between the joint and the conditional probabilities according to Bayes’ Theorem. With the help of Bayes’ Theorem, the world picture of local realism dictates how any two systems must carry information between themselves and, in this way, constrains the statistics of measurements on two presumably separated systems. From the point of view of developmental psychology, an infant reaches conscious objectivity when he or she can realize: “My mother exists even when I don’t see or hear her behind the door!”, and conscious locality when the infant can realize: “My mother can only be aware of my changing needs when I communicate these to her; she can’t just ‘know’ them!” It seems that objectivity is psychologically more basic, i.e., is acquired earlier in development, than locality, and is psychologically harder to give up (see below). At any rate, beyond a certain age, local realism is a matter of course for every (normal) child: Whereas for the very young child, their mother might seem to have been “destroyed” at the one side of a dividing wall only to be “created” again at the other side, the slightly older child will understand their mother, after disappearing, to exist the whole time while out of sight and to walk continuously from the one region to the next behind the wall until she reaches the other side, where she finally reappears.
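The statistical statements of this section (product form of the joint probabilities for isolated systems, and the link between shared information and conditional probabilities) can be made concrete with a toy calculation. The following is a minimal sketch for two dichotomous observables; the two joint tables and the function names `mutual_information` and `conditional` are illustrative assumptions, not data or notation from the paper.

```python
from math import log2


def mutual_information(joint):
    """I(A;B) in bits for a table joint[a][b] of joint probabilities."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    return sum(p * log2(p / (p_a[i] * p_b[j]))
               for i, row in enumerate(joint)
               for j, p in enumerate(row) if p > 0)


def conditional(joint, b):
    """p(a | b) for every a, computed as p(a, b) / p(b)."""
    p_b = sum(row[b] for row in joint)
    return [row[b] / p_b for row in joint]


# Isolated systems: the joint probabilities are products p(a) * p(b).
independent = [[0.25, 0.25],
               [0.25, 0.25]]

# Fully correlated systems: the value of B always equals the value of A.
correlated = [[0.5, 0.0],
              [0.0, 0.5]]
```

For the `independent` table the mutual information is zero and knowing b leaves p(a | b) at 0.5, whereas for the `correlated` table the mutual information is one bit and p(a | b) becomes 1 for the matching value.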

5.2. Bayes’ Theorem & the Information Bell’s Inequality

- To explain Bayes’ Theorem, consider the case of two isolated systems A and B, each possessing a corresponding measurable quantity (observable) A and B with possible values labelled by a and b, respectively. Then we have

| (7) |

| (8) |

| (9) |
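For orientation, the standard relations this section builds on can be written out explicitly. The displayed equations of the paper are not reproduced in this text, so the following are the textbook forms of Bayes’ Theorem, of its entropy counterpart, and of the information Bell inequality as given by Braunstein and Caves [23]; the numbering and notation of the paper itself may differ.

```latex
% Bayes' Theorem for the joint and conditional probabilities
p(a,b) = p(a \mid b)\, p(b) = p(b \mid a)\, p(a)

% Corresponding relation between joint and conditional information
H(A,B) = H(A \mid B) + H(B) = H(B \mid A) + H(A)

% Information Bell inequality for two pairs of observables (A, A') and (B, B')
H(A \mid B) \le H(A \mid B') + H(B' \mid A') + H(A' \mid B)
```

A data set for which the left-hand side of the last relation exceeds the right-hand side is incompatible with a single joint probability distribution over A, A’, B and B’, which is the sense in which it violates local realism.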

5.3. Quantum Coin "Pseudo-Telepathy" Experiment

- A real, that is, classical coin is, so to say, a quantum object with infinite spin, meaning that p0H1 approaches zero in Eqn. (6). (The reader can also infer this asymptotic behaviour from the progression of ever narrower curves in the information difference vs. degrees plot of Figure 1 in the B & C article.) As a consequence, Equations (5) and (6) would require an Nreq approaching infinity to satisfy any of the goodness criteria [α, γ] shown in Table 7 to prove entanglement between a pair of classical objects. On the other hand, one could argue that the spin number attributed to the object might just as well inherently depend upon the nature of the observation (measurement process) as it does upon the nature of the object itself. In this case, somehow intuitively “knowing” which of two real measurements on an infinite spin, classical object is the physically relevant one could be understood to reduce its observable behaviour to, say, that of a spin-1/2 quantum object (if the outcome Table 3 for the pseudocomplementary data were to be identical to the outcome Table 3 from real measurements on an actual spin-1/2 quantum object). In this case, the use of p0H1 = 0.85 as above would be justifiable. Now assume that a psychologist would use a real coin in a quantum coin study design in which 12 outcomes are considered to comprise a single experiment. (Recall the gedankenexperiment above.) From Table 5, we find p0H0 = 0.012. If he wants to arrive at results which, according to Table 7, violate Eqn. (7) with a goodness of [α = 0.001, γ = 0.99], then his design must allow for Nreq = 6 experiments to complete the data set for a single investigation. In this case, k0+1 = 3 experiments must result in a positive H_Deficit_Pseudo for his claim of entanglement to be taken seriously, that is, ke must be at least 3.
As just mentioned, this would mean that his method of mental observation, that is, his intuitive way of cleverly selecting the pseudodata, has somehow been able to mentally reduce the spin-number behaviour of the presumably entangled coins down to the lesser complexity of low-spin (quantum) objects. In the words of Selleri and Tarozzi [28], the triumphal successes of quantum theory in explaining the world of atoms and molecules and, to a lesser extent, nucleons and elementary particles, “constitute by themselves a heavy argument against a realistic conception of Nature: a physicist who has full confidence in quantum mechanics cannot maintain that atomic and subatomic systems exist objectively in space and time and that they obey causal laws.” And these physicists had an algebra to back up these seemingly parapsychological or paraphysical ideas! Perhaps one could say that a theoretical physicist trying to apply quantum physical thinking to classical situations without a corresponding algebra is in even greater danger of entering the world of “quantum psychosis”. With the ideas based upon quantum physics as developed in this paper, we offer a method to avoid this danger of undisciplined thought while mathematically investigating the possibility of entanglement/synchronicity phenomena in an otherwise obviously classical system.

6. Outlook

- Pseudocomplementary data collected in the way suggested here from a real experiment can be tested for the statistical significance of an initially hypothesized synchronicity between the (dichotomous) values of two physical quantities measured in two isolated, classical systems. Such a synchronicity would indicate a possible violation of local realism. Computer experiments show that local realism can be violated stochastically in the case of classical measurements using an experimental design mimicking ontological complementarity. The more outcomes a given experiment encompasses, the less probable it is that the data from this experiment will violate local realism by chance. The degree to which local realism is violated by chance is relatively independent of the correlation of results between the outcomes of a given experiment. This paper offers a way to test suspected classical violations of local realism for statistical significance.

ACKNOWLEDGEMENTS

- We gratefully thank R. M. Füchslin, Zuerich College of Applied Sciences zhaw, for many stimulating discussions.

Notes

- 1. Notice that the notation in B & C is somewhat different. The conditional probabilities in their Eqn. 10 use commas to separate the arguments, thus making it easy to mistake them for joint probabilities.
- 2. We prefer to use the negative of the information difference defining the information deficit in Eqn. 12 of B & C.
- 3. The p(H_Deficit_Pseudo>0) = 1.0-PERCENTRANK(H_Deficit_Pseudo values;0) values deviate from the n-values for H_Deficit_Pseudo>0 because of redundancies (0’s) in the H_Deficit_Pseudo data and the way EXCEL treats redundancies when evaluating the function PERCENTRANK: PERCENTRANK(0) = (No. values < 0)/{(No. values < 0)+(No. values = 0)+(No. values > 0)}. Such redundancies start occurring for n<12 outcomes/experiment.
- 4. The sum in this formula assumes a discrete distribution of values for k. Equation (4b) can also be understood to express the probability of finding H_Deficit > δ ke or more out of n times.
- 5. Recall Footnote 2 above regarding the sign of H_Deficit.
- 6. What we call objectivity is also referred to as realism by other authors. See, e.g., [11].

References

| [1] | R. Blume-Kohout, W. H. Zurek, Quantum Darwinism in quantum Brownian motion. Phys Rev Lett 101(24), 240405 (Dec 12, 2008). |

| [2] | W. H. Zurek, Quantum Darwinism. Nat. Phys. 5, 181 (2009). |

| [3] | M. Zwolak, H. T. Quan, W. H. Zurek, Quantum darwinism in a mixed environment. Phys Rev Lett 103(11), 110402 (Sep 11, 2009). |

| [4] | C. J. Riedel, W. H. Zurek, Quantum Darwinism in an everyday environment: huge redundancy in scattered photons. Phys Rev Lett 105(2), 020404 (Jul 9, 2010). |

| [5] | C. Fields, A model-theoretic interpretation of environmentally-induced superselection. http://arxiv.org/pdf/1202.1019.pdf (2012). |

| [6] | R. Ramanathan, T. Paterek, A. Kay, P. Kurzyński, D. Kaszlikowski, Local realism of macroscopic correlations. Physical Review Letters 107(6), 060405 (2011). |

| [7] | D. Chruściński, A. Kossakowski, Non-Markovian quantum dynamics: local versus nonlocal. Physical Review Letters 104(7), 070406 (2010). |

| [8] | H. Jeong, M. Paternostro, T. C. Ralph, Failure of local realism revealed by extremely-coarse-grained measurements. Physical Review Letters 102(6), 060403 (2009). |

| [9] | A. Leshem, O. Gat, Violation of smooth observable macroscopic realism in a harmonic oscillator. Physical Review Letters 103(7), 070403 (2009). |

| [10] | J. Kofler, C. Brukner, Conditions for quantum violation of macroscopic realism. Physical Review Letters 101(9), 090403 (2008). |

| [11] | J. F. Clauser, A. Shimony, Bell's theorem: experimental tests and implications. Rep. Prog. Phys. 41, 1881 (1978). |

| [12] | T. Scheidl, R. Ursin, J. Kofler, S. Ramelow, X. S. Ma, T. Herbst, L. Ratschbacher, A. Fedrizzi, N. K. Langford, T. Jennewein, A. Zeilinger, Violation of local realism with freedom of choice. Proc Natl Acad Sci USA 107(46), 19708-13 (2010). |

| [13] | A. Cabello, Stronger two-observer all-versus-nothing violation of local realism. Physical Review Letters 95(21), 210401 (2005). |

| [14] | S. Gröblacher et al., An experimental test of non-local realism. Nature 446(7138), 871 (2007). |

| [15] | G. Oohata, R. Shimizu, K. Edamatsu, Photon polarization entanglement induced by Biexciton: experimental evidence for violation of Bell's inequality. Physical Review Letters 98(14), 140503 (2007). |

| [16] | G. B. Schmid, Much Ado about Entanglement: A Novel Approach to Test Nonlocal Communication via Violation of 'Local Realism'. Forschende Komplementärmedizin / Research in Complementary Medicine 12(4), 214 (2005). |

| [17] | A. Zeilinger, Experiment and the foundations of quantum physics. Reviews of Modern Physics 71(2), S288 (1999). |

| [18] | A. Zeilinger, Einsteins Spuk. (Goldmann, München, 2007). |

| [19] | H. Atmanspacher, Quantum theory and consciousness: an overview with selected examples. Discrete Dynamics 8, 51 (2004). |

| [20] | J. A. d. Barros, P. Suppes, Quantum mechanics, interference, and the brain. Journal of Mathematical Psychology 53, 306 (2009). |

| [21] | A. Litt, C. Eliasmith, F. W. Kroon, S. Weinstein, P. Thagard, Is the brain a quantum computer? Cogn Sci 30(3), 593 (May 6, 2006). |

| [22] | D. I. Radin, L. Michel, K. Galdamez, P. Wendland, R. Rickenbach, A. Delorme, Consciousness and the double-slit interference pattern: Six experiments. Physics Essays 25(2), 157 (June, 2012). |

| [23] | S. L. Braunstein, C. M. Caves, Information-Theoretic Bell Inequalities. Physical Review Letters 61(6), 662 (1988). |

| [24] | M. Barbieri, F. De Martini, P. Mataloni, G. Vallone, A. Cabello, Enhancing the violation of the Einstein-Podolsky-Rosen local realism by quantum hyperentanglement. Physical Review Letters 97(14), 140407 (2006). |

| [25] | E. Chitambar, R. Duan, Nonlocal entanglement transformations achievable by separable operations. Physical Review Letters 103(11), 110502 (2009). |

| [26] | R. Thomale, D. P. Arovas, B. A. Bernevig, Nonlocal order in gapless systems: entanglement spectrum in spin chains. Physical Review Letters 105(11), 116805 (2010). |

| [27] | Z.-W. Wang et al., Experimental entanglement distillation of two-qubit mixed states under local operations. Physical Review Letters 96(22), 220505 (2006). |

| [28] | F. Selleri, G. Tarozzi, Quantum Mechanics Reality and Separability. Rivista del Nuovo Cimento Serie 3 4(2), 1 (1981). |

| [29] | H. Atmanspacher, H. Römer, H. Walach, Weak Quantum Theory: Complementarity and Entanglement in Physics and Beyond. Foundations of Physics 32(3), 379 (2002). |

| [30] | D. Collins, N. Gisin, N. Linden, S. Massar, S. Popescu, Bell Inequalities for Arbitrarily High-Dimensional Systems. Physical Review Letters 88(4), 040404 (2002). |

| [31] | G. B. Schmid, An Up-to-Date Approach to Physics. Am. J. Phys. 52(9), 794 (1984). |

| [32] | J. S. Bell, On the Einstein Podolsky Rosen Paradox. Physics 1(3), 195 (1964). |

| [33] | M. Michler, K. Mattle, H. Weinfurter, A. Zeilinger, Interferometric Bell-state analysis. Physical Review A 53(3), R1209 (1996). |
