
International Journal of Brain and Cognitive Sciences

p-ISSN: 2163-1840 e-ISSN: 2163-1867

2014; 3(3): 51-61

doi:10.5923/j.ijbcs.20140303.01

No-Body (the Noise Builds the Obscure Deep Yourself) is a New Hypothesis on a Complementary Model of Consciousness that does not Require the Body and the Interaction with the External World

Luca Marchese

Genova, Italy

Correspondence to: Luca Marchese, Genova, Italy.


Copyright © 2014 Scientific & Academic Publishing. All Rights Reserved.

This document proposes a new hypothesis on consciousness that complements existing theories. This hypothesis argues that the body, and thus external sensory inputs, are not mandatory for the existence of consciousness intended as “to know to be.” I argue that noise is a mandatory element for a real form of consciousness that is not simply the capability to integrate sensory inputs in the global workspace. Thus, NO-BODY is a proposal of a consciousness model that does not require a body or its interaction with the external world.

Keywords: Consciousness, Awareness, Cognition, Embodiment, Time, Noise, Phylogenetic modulation, Ontogenetic modulation

Cite this paper: Luca Marchese, No-Body (the Noise Builds the Obscure Deep Yourself) is a New Hypothesis on a Complementary Model of Consciousness that does not Require the Body and the Interaction with the External World, International Journal of Brain and Cognitive Sciences, Vol. 3 No. 3, 2014, pp. 51-61. doi: 10.5923/j.ijbcs.20140303.01.


1. Introduction

- There are many theories on consciousness, and there is no obvious uniform definition of such an “entity.” There are two main philosophical approaches to consciousness, which differ on whether a body that interacts with the external world is required. The first approach argues that the body and the capacity to interact with the external world are required for the existence of consciousness. This approach is principally based on a behavioral interpretation of consciousness and amounts to a simplification of the complex problem of building an “artificial consciousness.” The second approach attempts to address the “hard problem” of consciousness, intended as “to know to be,” but it typically relies on a philosophical or metaphysical interpretation and does not provide elements that can help in the development of artificial consciousness models. I argue that noise is a mandatory element for a real form of consciousness that is not simply the capability to integrate sensory inputs in the global workspace. Thus, NO-BODY is a proposal of a consciousness model that does not require a body or its interaction with the external world.

2. Current Theories on Consciousness

- The most influential modern physical theories of consciousness are based on psychology and neuroscience. Theories proposed by neuroscientists, such as Gerald Edelman and Antonio Damasio [1], and by philosophers, such as Daniel Dennett [2, 3], seek to explain consciousness in terms of neural events that occur within the brain. Many other neuroscientists, such as Christof Koch [4], have explored the neural basis of consciousness without attempting to frame all-encompassing global theories. In addition, computer scientists working in the field of Artificial Intelligence have pursued the goal of creating digital computer programs that can simulate or embody consciousness.
Here, I do not describe all of the theories on consciousness, because previous studies have explored this field in a modern and sufficiently detailed manner. Instead, I present a partial list of the most important scientific issues:
1. Embodiment
2. Situatedness
3. Emotions
4. Motivations
5. Unity and causal integration
6. Time
7. Free will
The current approaches to consciousness follow several directions related to resolving some of the issues mentioned above:
1. Autonomy and resilience
2. Phenomenal experience
3. Self-motivation
4. Information integration
5. Attention
6. Global workspace
As stated above, I do not explain these issues and theories in full; Manzotti [7] provides a deeper and more complete overview of this topic. However, I briefly describe those issues and theories that have potential implications for, or connections with, the presented theory, and I introduce some key concepts.
• Embodiment
The question of embodiment refers to the necessity of the body to create the conditions for possible consciousness. Many scientists have suggested that the importance of the interface between an agent and its environment should not be underestimated.
Considering that consciousness is driven by attention to the events occurring in the environment, it appears that sensory activity, and thus a body, is mandatory. However, there are living embodied agents, such as insects, that interact with their environment in an extraordinarily efficient manner but are not considered good candidates for consciousness due to their poor cognitive capability. The question of the interaction with the environment is part of those theories of consciousness that I refer to as “behavioral.” In such theories, consciousness has a concrete value for the behavior of the agent, and thus it is also relevant for AI. In this study, I explain why and how the NO-BODY theory argues that the body, and thus a complete set of sensors, is not mandatory for consciousness. This theory shifts the concept of conscious experience from a “behavioral” perspective to a more philosophical “to know to be” perspective, and it offers a scientific explanation that involves the noisy nature of the brain and a new concept of consciousness time.
• Time
It has been widely recognized that our cognitive processes require time to produce a conscious experience. Thus, we can say that consciousness exists only in the time domain. In a conscious experience, we float in the flow of time with apparent continuity. Whether consciousness time is continuous or discrete is an open issue. Newton argued that only the instantaneous present is real and thus that the present has zero duration. The Einstein-Minkowski space-time model retains this view of the present, expressing time as a geometrical dimension in which the present is a point with no width. Many scientists argue that classical Newtonian time does not correspond with our experience of time. Libet showed that half a second of continuous nervous activity is necessary to have a visual experience [5].
Consistent with this finding, most neuroscientists argue that a conscious process is instantiated by patterns of neural activity extended in the time domain (trains of temporally distributed spikes). However, if the neural activity that builds a conscious experience is extended over a time frame (the time elapsed from the first to the last spike), then it can be argued that any instantaneous perception occurring in that time frame is part of the same conscious experience. We cannot accept such a statement, because we feel that any instantaneous experience is different from previous and future experiences. Thus, the question is: does a temporal window exist that can substitute for the concept of an instant in consciousness time? In the following explanation of the NO-BODY theory, I suggest that:
1. We should take into account the intrinsic parallelism that underlies brain behavior. Thus, the time frame required for the completion of a conscious process consisting of many parallel streams can be reduced in the absolute time domain. This concept is “nothing new under the sun,” but it must be highlighted.
2. This time frame is driven by the completion of minimal concepts and is thus variable. Minimal-concept completions are instantaneous in consciousness time and are mapped almost continuously to absolute time due to the large number of parallel streams in the brain.
• Emotions
Damasio suggested that there is a core consciousness that supports higher forms of cognition [1].
Although it is not clear how emotions could be more than simple labels on cognitive modules in a conscious machine, the hypothesis proposed by Damasio is not simply fascinating: it sets an extremely important and fundamental threshold between the behavioral models of consciousness and the models that can truly explain “to know to be.” In the explanation of the NO-BODY theory, I suggest how emotions might be mapped to neural activity, with connections to attention and to the completion of elementary concepts.
• Information integration
Consciousness appears correlated with the notion of unity. A collection of uncorrelated processes cannot represent a conscious experience. Classical theories of consciousness are vague and do not explain how a collection of separate processes can become elements of a single unit. The most recent approach to this problem is the theory of integrated information, introduced by Giulio Tononi [11]. This theory argues that causal processes do not contribute separately to produce a complex conscious experience; instead, they are entangled. Thus, these processes are meaningful only in the whole behavior of the brain, and such a behavioral and time-distributed vision of unity is a more plausible support for consciousness. The arguments and conditions of the NO-BODY theory are consistent with the notion of integrated information.
• Attention
The relationship between attention and consciousness remains unclear. Some scientists have argued that there cannot be consciousness without attention [6]. However, there is no evidence that attention is sufficient to support the existence of consciousness. CODAM [10] is a neural network control model that provides an interesting hypothesis as to how the brain could implement attention.
In this article, I do not discuss in depth the neural models that could implement attention in the brain, but I provide a general definition of attention. A general but precise definition is necessary to avoid misunderstandings in the following explanation of the NO-BODY theory.

3. NO-BODY: A Complementary Hypothesis

- Current theories on behavioral consciousness require an interaction with the external world. These theories support the statement that consciousness can be present only if there is active sensory activity of the body. However, attention and awareness cannot be explained in the same manner: attention is necessarily linked to the interaction with the external world, while awareness can be explained as “to know to be.”
The purpose of this paper is to propose a theory of consciousness that is complementary to the existing behavioral theories and that provides a potential explanation for the possibility of “to know to be” without any interaction with the external world.
We can attempt a thought experiment on a brain that is completely disconnected from the body: it cannot receive images, sounds or any other sensory inputs. Next, we assume that this brain is our brain. In such a condition, our brain should still be able to think, and we recall the famous phrase of Descartes, “cogito ergo sum” (I think, therefore I am). However, what is the actual substance of a thought? The answer is pronounced words, written words and images. Thus, our brain “knows to be” because it can remember images, sounds or sensations stored in its synapses when it was connected to the body. This is an internal representation of the external world, and our brain is interacting with a virtual world. However, this virtual world can exist only because our brain was connected to the body in the past.
If we assume that our brain developed completely disconnected from the body, which scenario would we expect? Could we know to be? This is the extreme hypothesis of consciousness. In our brain, there are no images, sounds or any other recorded sensations from the real world. Thus, we cannot formulate a hypothesis of thought, because we have no elements on which to build it.
Can we say that there is still consciousness without a thought? I argue that consciousness, intended as the deepest primitive awareness, is present in a brain that never had any interaction with the world via the body. Thus, I argue that the brain can be conscious without thinking, and that awareness is independent of thought. This raises the question: what is consciousness without a thought? To formulate my hypothesis, I need to take a step back and consider the formal definition of the interaction with the external world. I can state that:
1. Images are variations of light and color in the space domain (retina).
2. Movies are variations of light and color in the space and time domains.
3. Sounds are variations of pressure on the tympanum in the time domain.
In all of the above statements, the word “variations” is present. A continuous constant pressure on the tympanum is not a sound, and a completely black image is not a visual stimulus because it does not carry any information. A change from high pressure to low pressure, or vice versa, on the tympanum is a sound. A black and white image with a shape is a visual stimulus in the space domain. An image that switches from completely black to completely white is a visual stimulus in the time domain.
Variations build entropy and produce information. Collecting and integrating information from the external world is a behavior that builds consciousness, as argued by the most widely accepted theories of consciousness. I argue that elementary information for the brain is any change of state in the space and/or time domain.
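To make concrete the claim that only variations carry elementary information, the following minimal Python sketch counts changes of state in a sampled signal. It is an illustration under stated assumptions, not part of the original model: the signal is a plain list of samples, a “change of state” is any step between consecutive samples larger than a hypothetical tolerance `tol`, and the function name `count_transitions` is invented here.

```python
from typing import Sequence

def count_transitions(samples: Sequence[float], tol: float = 0.0) -> int:
    """Count changes of state between consecutive samples.

    Hypothetical illustration: a change of state is any step whose
    absolute size exceeds `tol`.
    """
    return sum(
        1 for prev, curr in zip(samples, samples[1:])
        if abs(curr - prev) > tol
    )

# Constant pressure on the tympanum: no variations, no elementary information.
constant_pressure = [1.0] * 8
# An image switching from completely black (0) to completely white (1).
black_to_white = [0.0] * 4 + [1.0] * 4
# A pressure oscillation: a sound.
oscillation = [1.0, -1.0] * 4

print(count_transitions(constant_pressure))  # 0
print(count_transitions(black_to_white))     # 1
print(count_transitions(oscillation))        # 7
```

The tolerance stands in, very loosely, for the fact that a real receptor responds only to changes above some size; with `tol=0.0` every exact change counts.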
I argue that complex information for the brain is a sequence of changes in the time and/or space domain that can also be chaotic or random.
In a brain that is completely disconnected from the body, the modules responsible for the reception of sensory stimuli in the cortex are alive, and their inputs are not constant, because our brain is fundamentally a “noise-based machine.” Noise is present in every activity and every region of our brain, and some scientists have argued, and in some cases demonstrated, that it plays a fundamental role in some behaviors. There is also a new approach to the dynamics of neural processing, which argues that noise breaks deterministic computations, producing many advantages [9]. I argue that noise is a fundamental element of consciousness intended as the deepest absolute awareness, or “to know to be,” which is independent of symbolic thought and of interaction with the real world. Noise is the basic information that the brain elaborates, and it keeps the brain alive without external stimuli. It is the “sound of silence.” It produces images and sounds in a complex dynamic way. Is there any form of attention in the brain that is stimulated only by noise? I believe that the answer is yes, and I will try to explain my reasoning in the following considerations.
We need to formalize the behavioral definition of attention. Attention may be defined as the capacity to elaborate the instantaneous transition of a stimulus and the prediction of the next state. Attention is meaningful only in the time domain. Noise in the brain is a stream of state transitions in the time domain and is therefore a stimulus that fully supports the existence of attention as defined above. Thus, I argue that attention is a mandatory element of the deepest awareness supported by noise, and that it is equally a mandatory element in the brain that interacts with the real world.
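The definition of attention above has two parts: elaborating each instantaneous transition and predicting the next state. The Python sketch below applies both parts to a stream of binary states, so that a noise stream can be compared with constant and regular streams. It is a hedged illustration, not the author's model: the binary states, the function name `attend` and the simple predictor (which assumes the most recent transition repeats) are all hypothetical choices.

```python
import random
from typing import List, Tuple

def attend(stream: List[int]) -> Tuple[int, int]:
    """Return (transitions, surprises) for a stream of binary states (0/1).

    Hypothetical sketch of attention as defined in the text:
    - each instantaneous transition is elaborated (did the state change?);
    - the next state is predicted, and failed predictions are counted.
    The predictor (an assumption) expects the most recent transition to
    repeat: after a change it predicts another change, otherwise no change.
    """
    transitions = 0
    surprises = 0
    for t in range(1, len(stream)):
        transitions += int(stream[t] != stream[t - 1])
        if t >= 2:
            last_changed = stream[t - 1] != stream[t - 2]
            predicted = 1 - stream[t - 1] if last_changed else stream[t - 1]
            surprises += int(predicted != stream[t])
    return transitions, surprises

rng = random.Random(42)  # seeded stand-in for intrinsic neural noise
noise = [rng.randint(0, 1) for _ in range(1000)]

print(attend([0] * 10))    # (0, 0): no transitions, nothing to elaborate
print(attend([0, 1] * 5))  # (9, 0): many transitions, all predicted
print(attend(noise))       # about half of the predictions fail
```

A constant stream gives attention nothing to elaborate, and a regular stream is soon fully predicted; only the noise stream keeps producing both transitions and failed predictions.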
Attention and awareness are meaningful only in the time domain, which supports the idea of the ancient philosopher St. Augustine. His ideas represent a turning point in thinking about “time”: he formulated the idea of an internal time, which anticipates twentieth-century conceptions of time. For St. Augustine, time was created by God along with the universe, but its nature remains deeply mysterious, so much so that the philosopher states ironically, “If they don’t ask me what is the time, I know, but if they ask me, I do not know.” Criticizing the Aristotelian concept of time, in the “Confessions” he states that time is a “distension of the soul” and is due to the perception of the individual, who, despite living only in the present (with attention), is aware of the past (what has been and is no longer) through memory and of the future (what will be, but is not yet) by virtue of expectation. Thus, conscious experience is located in time and is represented by the integration of activities located in space. A pattern of spikes in the space and time domains is a representation of a conscious experience. P. Pinelli and G. Sandrini, in their “Neural Sequences: an introduction and overview” [8], wrote: “The brain, processing the temporal dimension inherent in the sequence, activates the mechanisms of memorization that underlie the formation of our grasp of the past, present and future, i.e., the memorization process, which is a sort of neuropsychological miracle, at the core of philosophical considerations from Aristotle and Augustine to Bergson, and which in turn represents a starting point for the development of higher mental activity.”
I underline the fundamental importance of time, and consequently of noise, for consciousness because it could, perhaps, explain why the brain is such a different machine.
I try to answer questions about some behaviors of the brain and its neurons:
• Why does a single neuron exhibit different responses to repeated presentations of the same input signal?
• Why does the neuron’s spontaneous activity display varying degrees of randomness?
Perhaps the brain needs to be noisy because noise enables it to create unpredictable state transitions continuously in the time and space domains. Consequently, I argue that neurons emit spikes spontaneously and stochastically because the brain machine needs to be active and unpredictable. I argue that a deeply quiescent state or a predictable behavior is not compatible with a conscious machine. I want to point out that I refer to stochastic behaviors and not chaotic behaviors. The trajectory of a chaotic system is reproducible from a given initial condition, which is not the case for a stochastic system. Trajectories of a system in a chaotic regime can even be predicted, although only over a short time scale. The fluctuations of a chaotic regime must therefore be distinguished from those produced by noise alone. Longtin has compared deterministic and stochastic forms of “random” phase locking [13, 14].
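The distinction between chaotic and stochastic behavior can be illustrated with a short Python sketch. It uses the logistic map with r = 4 as a standard example of a deterministic chaotic system and a Gaussian random walk as a stochastic one; both are textbook choices made here for illustration, not models proposed in the paper, and the helper names are hypothetical. A seeded pseudo-random generator stands in for physical noise, which, unlike a seed, cannot be replayed.

```python
import random
from typing import List, Optional

def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> List[float]:
    """Deterministic chaotic system: the logistic map with r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

def noisy_trajectory(x0: float, steps: int, rng: random.Random,
                     sigma: float = 0.05) -> List[float]:
    """Stochastic system: a random walk driven by Gaussian noise."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] + rng.gauss(0.0, sigma))
    return xs

def first_divergence(a: List[float], b: List[float], eps: float = 0.1) -> Optional[int]:
    """Index of the first step at which two trajectories differ by more than eps."""
    for i, (x, y) in enumerate(zip(a, b)):
        if abs(x - y) > eps:
            return i
    return None

# Chaotic: the same initial condition reproduces the same trajectory.
a = logistic_trajectory(0.2, 100)
b = logistic_trajectory(0.2, 100)
print(a == b)  # True

# A perturbation of 1e-10 is tracked closely for a few steps, then typically lost.
c = logistic_trajectory(0.2 + 1e-10, 100)
print(abs(a[5] - c[5]) < 1e-6)  # True
print(first_divergence(a, c))

# Stochastic: the same initial condition, different noise realizations.
n1 = noisy_trajectory(0.2, 100, random.Random(1))
n2 = noisy_trajectory(0.2, 100, random.Random(2))
print(n1 == n2)  # False
```

The short-term match between `a` and `c` corresponds to the short-scale predictability of a chaotic regime mentioned above, while `n1` and `n2` differ from the first noisy step even though they start from the same state.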

4. What about Emotions?

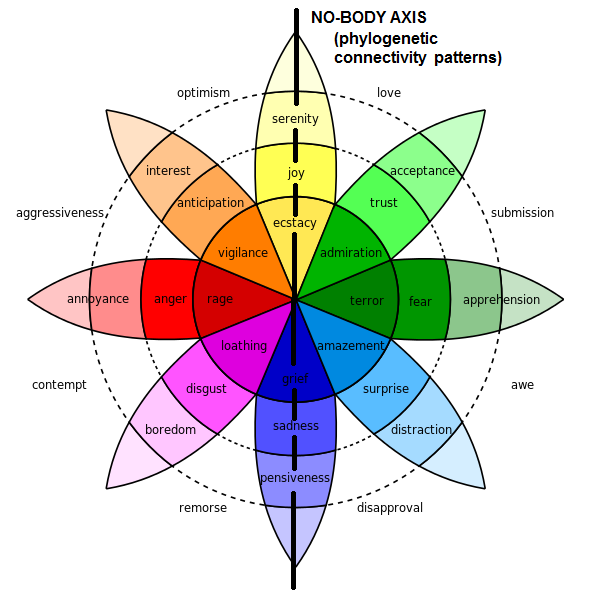

- On the existing theories and classifications of emotion in psychology and neurology, one could write a book. Robert Plutchik's Wheel of Emotions (Fig. 1) provides a relatively complete spatial representation. I want to examine which emotions could be meaningful in the context of the NO-BODY theory. Many emotions strictly depend on a relationship (e.g., love, aggressiveness) or on a simpler interaction with the external world (e.g., rage or amazement). We cannot think about experiencing love if we have not had a full relationship experience. Similarly, we cannot be aggressive without a target. Fear is often related to a dangerous situation. However, I believe that all of us have experienced, at least once, sadness or serenity that was not explainable by the contextual situation. I believe that these basic emotions are typically weaker, longer-lasting and more inertial, and that they may be generated without an interaction with the external world or a specific situation. I argue that this type of emotion could be triggered by the intrinsic noisy activity of the brain. Thus, these emotions could be the medium of “to know to be” in a brain that never interacts with the real world.
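One hypothetical way to picture a “weaker, longer-lasting and more inertial” state driven only by intrinsic noise is a leaky integrator (exponential smoothing) of a noise stream. The Python sketch below is an illustration under that assumption and not a model stated in the paper; the names `leaky_integrate` and `lag1_autocorr` and the inertia parameter `a` are invented here.

```python
import random
import statistics
from typing import List

def leaky_integrate(noise: List[float], a: float = 0.99) -> List[float]:
    """Hypothetical basic emotional state: exponential smoothing of noise.

    `a` close to 1 gives strong inertia: the state changes slowly and
    keeps the memory of past noise for roughly 1 / (1 - a) steps.
    """
    state = 0.0
    out = []
    for n in noise:
        state = a * state + (1.0 - a) * n
        out.append(state)
    return out

def lag1_autocorr(xs: List[float]) -> float:
    """Lag-1 autocorrelation: close to 1 for slow, inertial signals."""
    m = statistics.fmean(xs)
    num = sum((x - m) * (y - m) for x, y in zip(xs, xs[1:]))
    den = sum((x - m) ** 2 for x in xs)
    return num / den

rng = random.Random(7)  # seeded stand-in for intrinsic neural noise
noise = [rng.gauss(0.0, 1.0) for _ in range(20000)]
mood = leaky_integrate(noise)

print(statistics.pstdev(mood) < statistics.pstdev(noise))  # True: weaker
print(round(lag1_autocorr(noise), 2), round(lag1_autocorr(mood), 2))
```

The raw noise has a lag-1 autocorrelation near zero, while the integrated state has a small amplitude and an autocorrelation near 1: it drifts slowly without any external cause.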

5. The Question of the “Time Frame”

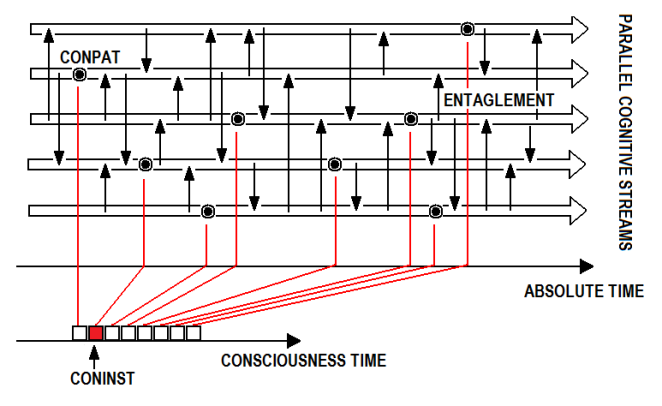

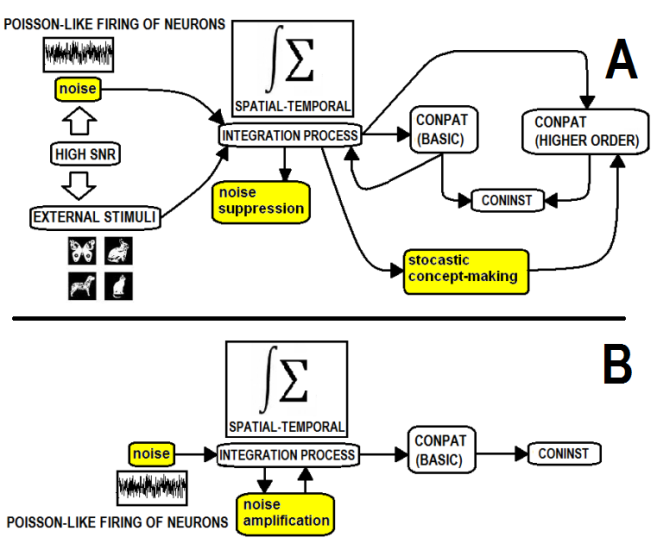

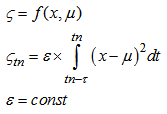

- I stated that a pattern of spikes in the space and time domains is a representation of a conscious experience. However, I also stated that attention could be defined as the capacity to elaborate the instantaneous transition of the stimulus and the prediction of the next state. A train of spikes exists in a time frame that starts at the occurrence of the first spike and stops at the occurrence of the last spike. How, then, can we reconcile the concept of a time frame with the concept of an instantaneous transition? I argue that the concept of time as a dimension of consciousness must be revised. Again, P. Pinelli and G. Sandrini write in “Neural Sequences: an introduction and overview” [8]: “Eventually, when temporal and relational processing combine, what occurs is a duplication of the subjective and objective worlds, and it is this that lies at the root of the formation of consciousness.” Although they refer to a subject that fully interacts with the real world, they suggest a duplication of the real world, and thus of real time, into a subjective world and thus a subjective time. We need to translate absolute time into a relative time in which the sequence of “instants” is not content-agnostic but is driven by the completion of elementary patterns. Thus, the instants that elapse during the evolution of a spike train are not instants of consciousness time. Instants of consciousness time are the instants at which a spike train, or an ensemble of spike trains anywhere in the whole brain, completes the internal representation of a conceptual pattern. Such a pattern can be elementary, noisy and unlabeled, but it represents an elementary concept. Hereinafter, I refer to elementary concepts as “CONPATs” (CONsciousness PATterns) and to the absolute time instants at which a CONPAT completes as “CONINSTs” (CONsciousness INSTants). The mapping between absolute time and consciousness time, intended as a sequence of CONINSTs, is shown in Fig. 2.
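The mapping from absolute time to consciousness time can be sketched as a small Python function: only the absolute instants at which at least one CONPAT completes become CONINSTs, and CONPATs completed by parallel streams at the same instant share one CONINST. This is a hedged illustration; the event format, the millisecond unit, the module names and the function name `to_consciousness_time` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# A CONPAT completion event: (absolute time in ms, module name, CONPAT label).
# Module names and labels below are hypothetical placeholders.
Completion = Tuple[int, str, str]

def to_consciousness_time(events: List[Completion]) -> List[Tuple[int, List[str]]]:
    """Map absolute time to consciousness time.

    Only the absolute instants at which at least one CONPAT completes
    become CONINSTs; all other instants (e.g. the spikes of a train that
    is still evolving) are not instants of consciousness time.
    Several CONPATs from parallel streams may share one CONINST.
    """
    by_instant: Dict[int, List[str]] = defaultdict(list)
    for t, module, label in events:
        by_instant[t].append(f"{module}:{label}")
    return [(t, sorted(by_instant[t])) for t in sorted(by_instant)]

events = [
    (12, "visual", "edge"),
    (12, "auditory", "onset"),
    (30, "visual", "motion"),
    (47, "somatic", "pressure"),
]
for index, (t, conpats) in enumerate(to_consciousness_time(events)):
    print(f"CONINST {index} at {t} ms: {conpats}")
# CONINST 0 at 12 ms: ['auditory:onset', 'visual:edge']
# CONINST 1 at 30 ms: ['visual:motion']
# CONINST 2 at 47 ms: ['somatic:pressure']
```

With more parallel streams, completions fall on more distinct absolute instants, so the sequence of CONINSTs approaches a near-continuous mapping onto absolute time.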
Importantly, CONPATs occur in a spatial domain and are generated by parallel streams across the whole brain; thus, many CONPATs can occur at the same absolute time instant. The question now is: what is a CONPAT? I stated that a CONPAT is an elementary concept; the word “elementary” indicates that these concepts are not acquired through learning but are part of the neural structures that grow during brain development. Indeed, they could be “concept cells” that integrate the activity of many neurons and represent something that can be meaningful in the physical world in which the brain has been created. Thus, these cells are components of the systems that constitute the dimensions of consciousness in the brain.
We should think of a generic model of the brain as a conscious machine that has its own dimensions of consciousness, realized in modules that produce CONPATs. Human and animal brains are designed to interact with a world consisting of light and sound, and they are provided with modules that can receive stimuli from this world via sensors. Would a brain be different if it were designed to receive stimuli from electromagnetic waves via multiple antennas in the skin of the body? The physical support of the stimulus makes no difference. I argue that the module in the brain assigned to elaborate such signals from “antennas” should have “basic concept cells” that fire, producing CONPATs. A real-world experience starts with a large flow of CONPATs that, via a hierarchical temporal and spatial integration process, produces progressively higher-order cognitive concepts that are the fruit of learning. The real-world experience is possible due to sensors. Without sensors, the modules that represent the dimensions of consciousness still function.
They produce limited flows of CONPATs due to the noisy nature of the brain, and they make the brain machine able “to know to be.” The key is to abandon the concept of the sensory model as a simple system for interacting with the real world. A sensory model should more appropriately be interpreted as a multidimensional consciousness system that can produce a real-world experience by receiving inputs from sensors (Fig. 3).
Two potential situations of the brain, with and without external stimuli, are shown in Fig. 3. In the first case (A), the Poisson-like firing of an ensemble of neurons is integrated with the deterministic spiking activity generated by external stimuli. Because of the high SNR (signal-to-noise ratio), the spatial-temporal integration process can behave in two different manners:
• At a given instant, part of the system collapses to a state that corresponds to the formation of a concept. Thus, the noise is suppressed.
• At a given instant, part of the system remains in a state of equilibrium between the completion processes of different concepts. Here, noise plays the role of the stochastic “decision maker.” The concept of probabilistic decision-making via noise activity is best described in Rolls and Deco [9].
Here, I suggest that noise can play a role not only in the process of decision-making but also in the completion of concepts that are part of the perception process and are thus antecedent to the decision-making process.

- In the second case, shown in Fig. 3 (B), the brain has never received any external stimulus. The integration process amplifies the noise, which in turn activates basic concepts. Higher-order concepts, which are created by learning, are not present. Basic CONPATs are sufficient to trigger attention and consciousness.
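The two cases of Fig. 3 can be approximated with a toy Python simulation of a single hypothetical “concept cell.” Every number and name here is an assumption for illustration, not the circuit of Fig. 3: time is split into 1 ms bins, an ensemble of neurons fires with Poisson-like background probability, case A adds a deterministic stimulus-driven volley at regular intervals, and the concept cell is a leaky integrator that completes a CONPAT when it reaches a threshold and then resets.

```python
import random

def count_completions(bins: int, stimulus: bool, seed: int = 3,
                      n_neurons: int = 50, p_fire: float = 0.02,
                      period: int = 10, volley: int = 20,
                      decay: float = 0.8, theta: float = 9.0) -> int:
    """Count CONPAT completions of one hypothetical concept cell.

    Assumptions (illustrative only): each of `n_neurons` neurons fires
    with probability `p_fire` per 1 ms bin (Poisson-like background);
    when `stimulus` is True, `volley` extra spikes arrive every `period`
    bins (deterministic, stimulus-driven activity). The concept cell is
    a leaky integrator that completes a CONPAT when its potential
    reaches `theta`, then resets to zero.
    """
    rng = random.Random(seed)
    v = 0.0
    completions = 0
    for t in range(bins):
        spikes = sum(rng.random() < p_fire for _ in range(n_neurons))
        if stimulus and t % period == 0:
            spikes += volley
        v = decay * v + spikes
        if v >= theta:
            completions += 1
            v = 0.0
    return completions

with_stimulus = count_completions(5000, stimulus=True)   # case (A)
noise_only = count_completions(5000, stimulus=False)     # case (B)
print(with_stimulus, noise_only)
```

In case (A) every stimulus volley completes a concept, so completions are frequent and locked to the stimulus; in case (B) only occasional noise fluctuations, amplified by the integration, push the cell over threshold, producing a sparse flow of basic CONPATs.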

6. What about Attention?

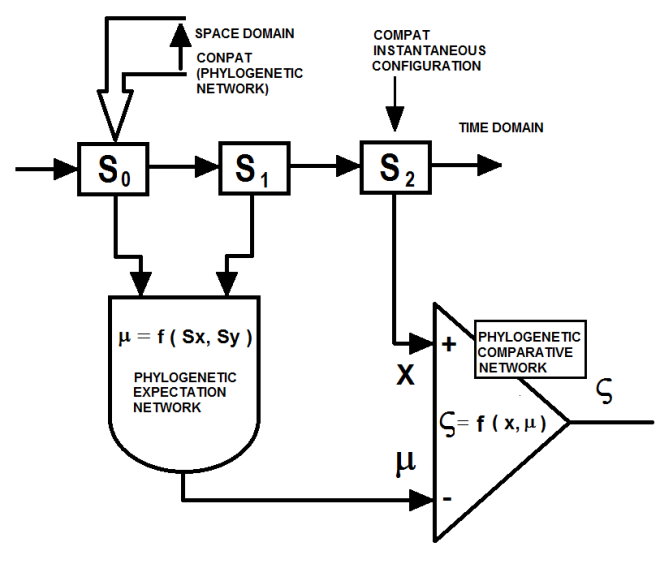

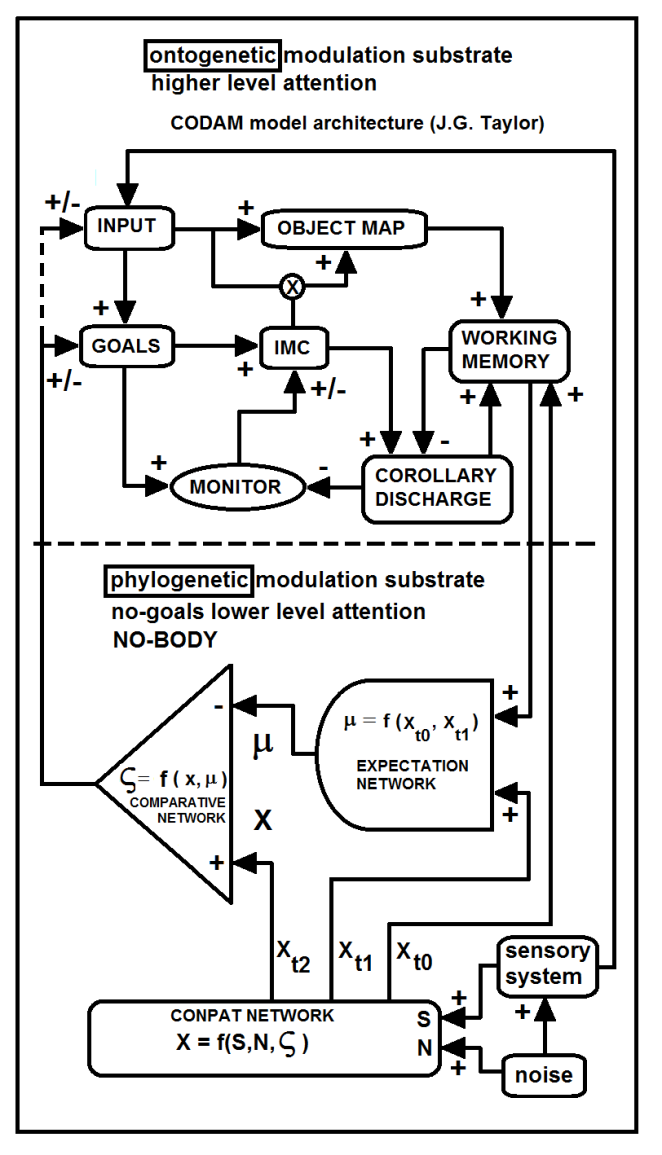

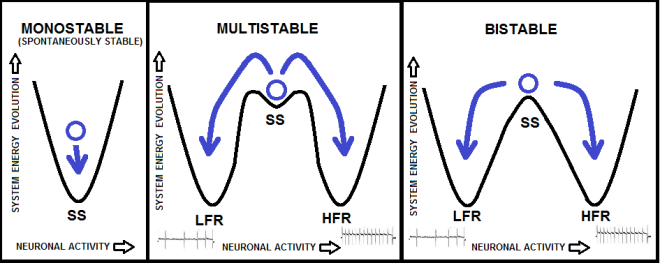

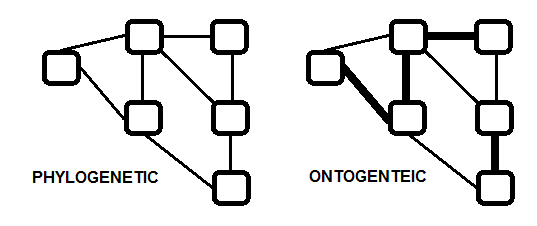

- I stated that attention is mandatory for consciousness and that attention can be viewed as the capacity to elaborate the instantaneous transition of a stimulus and the prediction of the next state. Thus, I have designed a hypothetical model of consciousness based on two types of network: expectation networks and comparative networks. These networks are built by phylogenetic modulation and elaborate CONPATs in their temporal evolution. The CONPAT is itself a basic network built by phylogenetic modulation. Below, I refer to phylogenetic networks, but I need to clarify the meaning of the term, which could be more restrictive in the scientific literature. In this paper, I refer to networks that are specific basic patterns of anatomical connectivity determined by the evolution of the species (phylogenetic modulation). However, I also suppose that these networks are modulated by the individual’s genes. Furthermore, I do not exclude a moderate and agnostic (not associative) ontogenetic (experience-dependent) modulation (variations in connection strength) of these networks (Fig. 5). Such an ontogenetic modulation can only strengthen or weaken the ability to achieve particular emotional states of the NO-BODY axis (Fig. 1). In my view, an emotional state is determined by a complex configuration of the neuronal activities of multiple cortical area networks. In this complex environment, we must not forget that the chemistry of the brain sets multiple biases involved in the global behavior. In the proposed theory, consciousness is a measure of the variance of the difference between CONPATs and the prediction performed by the expectation networks that elaborate the previous CONPATs in the same space domain. In other words, consciousness would be a measure of the unpredictability of CONPATs by the phylogenetic expectation networks (Fig. 4).

Figure 5. The phylogenetic connectivity pattern and the ontogenetic reinforcement of some connections

(1)
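The equation labeled (1) is not legible in this version of the text. As a hedged illustration only, one possible reading of the verbal definition above is C = Var_t(x_t − f(x_{t−1})), where x_t is the vector of CONPAT activities at CONINST t and f is a fixed (phylogenetic) expectation network; this formula is an assumption and not necessarily the author's Equation (1). The Python sketch below computes this quantity with a hypothetical linear expectation network `W` and invented helper names.

```python
import random
import statistics
from typing import List, Sequence

Vector = List[float]

def expect(weights: Sequence[Sequence[float]], prev: Vector) -> Vector:
    """Hypothetical phylogenetic expectation network: a fixed linear map
    from the previous CONPAT vector to the predicted next one."""
    return [sum(w * x for w, x in zip(row, prev)) for row in weights]

def consciousness_measure(conpats: List[Vector],
                          weights: Sequence[Sequence[float]]) -> float:
    """Mean (over components) variance of the prediction error
    x_t - f(x_{t-1}); an assumed reading of the verbal definition."""
    errors = [
        [a - b for a, b in zip(curr, expect(weights, prev))]
        for prev, curr in zip(conpats, conpats[1:])
    ]
    per_component = [statistics.pvariance(col) for col in zip(*errors)]
    return statistics.fmean(per_component)

# Fixed expectation network: a rotation of a 2-D CONPAT space.
W = [[0.0, -1.0], [1.0, 0.0]]

# A fully predictable sequence that follows W exactly.
predictable = [[1.0, 0.0]]
for _ in range(99):
    predictable.append(expect(W, predictable[-1]))

# The same sequence with intrinsic noise added at every CONINST.
rng = random.Random(0)
noisy = [[x + rng.gauss(0.0, 0.3) for x in v] for v in predictable]

print(consciousness_measure(predictable, W))  # 0.0
print(consciousness_measure(noisy, W) > 0.0)  # True
```

Under this reading, a CONPAT stream that the expectation network predicts perfectly scores zero, and the measure grows with the unpredictability introduced by noise.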

7. Is Consciousness Useful?

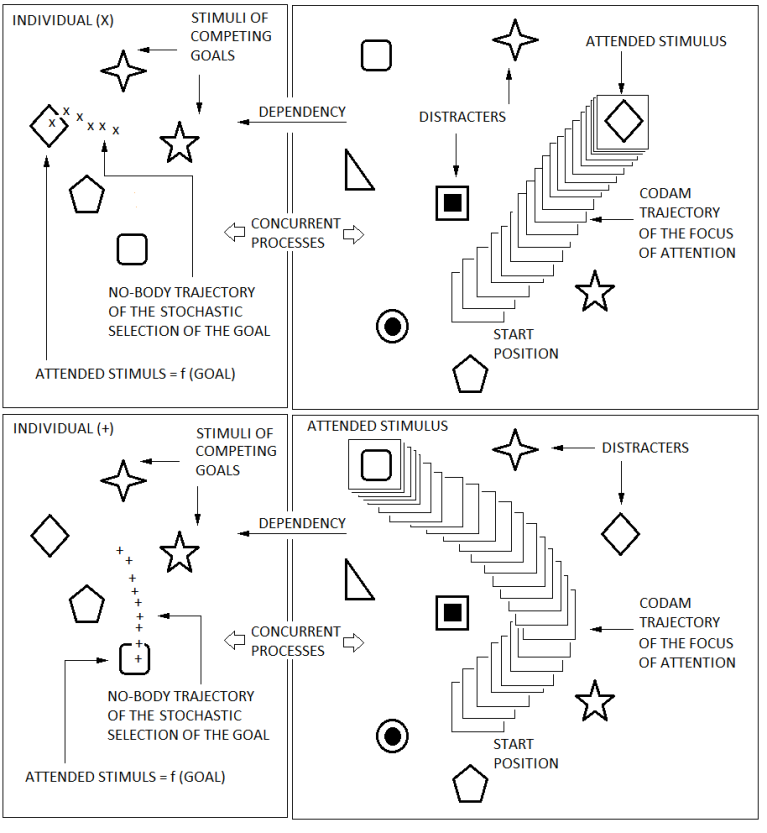

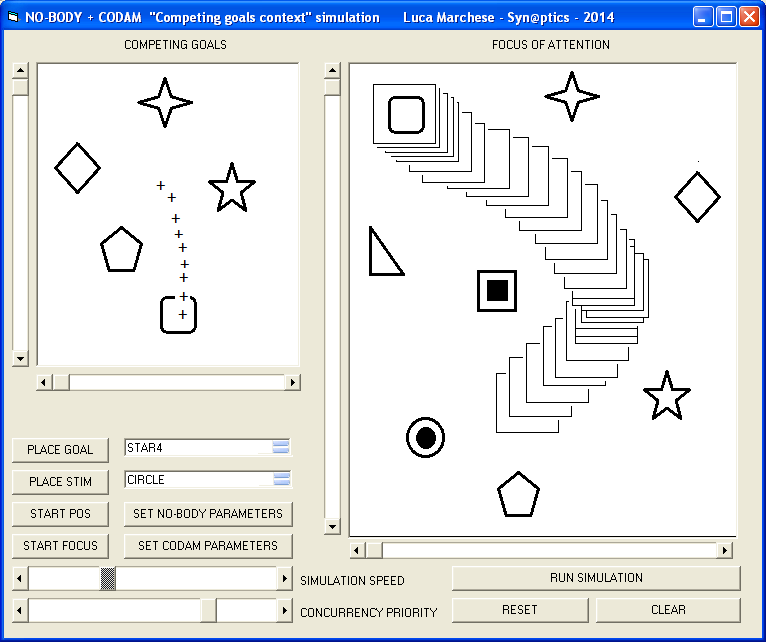

- An important issue related to consciousness is its usefulness from a behavioral point of view. Behavioral interpretations of consciousness, like the CODAM model, intrinsically give a positive answer to this question. NO-BODY is not a goal-oriented theory and, although it is complementary to CODAM, it still requires plausibility from an evolutionary point of view. I believe that the rationale for the formation of such phylogenetic circuits lies in their interaction with the ontogenetic circuits. The interaction serves to generate more complex behaviors in complex “social environments,” where the unpredictability of social behavior can play a role in survival. I suppose that the NO-BODY network contributes to creating precursors of basic emotional states (Fig. 1). The emotional states can influence goals and, perhaps, the perception of reality (the input) in the CODAM network (Fig. 6). The behavior of simple animals like insects can be related to simple actions and limited, systematic social relationships. More evolved animals live in more complex social environments where multiple actors play different roles that are not only prey and predator. I believe that the unpredictability of social behavior transforms these animals into distinct individuals. From this point of view, I could state that the proposed model of consciousness is strongly correlated with the uniqueness of the individual. In this framework, noise is represented as a source (Fig. 6), but we know that it is intrinsically present in the behavior of the brain at a microscopic level (the stochastic behavior of neurons). I suppose that the phylogenetic neural circuits mentioned here (NO-BODY) are more sensitive to noise than ontogenetic networks and process it through transfer functions that are strictly correlated with the uniqueness of the individual.
The output of such networks influences the perception of reality (input) as well as desires and needs (goals), performing stochastic decision-making and creating the unpredictability of the individual's behavior: this is an interpretation of existing attractor-based models of decision-making [9]. Rolls and Deco [9] suggest a model for how attractors could operate by biased competition to implement probabilistic decision-making (Fig. 7). They also suggest that this type of network model could operate in many brain areas, and that short-term memory helps the network integrate information over time to reach a decision. I believe that the proposed phylogenetic neural circuit NO-BODY could have a role in such an integration process, acting also on information retrieval.
I have implemented a software simulation (Fig. 9) of the circuit represented in Fig. 6 in a context of competing goals. Two concurrent processes implement the CODAM and the NO-BODY networks. The NO-BODY network performs the stochastic selection of the goal. The goal determines the expected stimulus. The simplification in this simulation is the assumption that the goal is at the same level as the stimulus. In reality, the goal can be related to a higher level of cognition than the expected stimulus (Fig. 8).
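Noise-driven goal selection by biased competition can be sketched with a deliberately simplified two-population rate model in Python. This is an assumption-laden illustration, not the integrate-and-fire network of Rolls and Deco [9] and not the author's MOSAIC simulation: each population is driven by its own input and inhibits the other, noise breaks the symmetry, and the winning population selects a goal, which in turn selects a hypothetical expected stimulus. All names and parameter values are invented for the sketch.

```python
import random

def select_goal(rng: random.Random, bias: float = 0.0, w_inh: float = 2.0,
                sigma: float = 0.1, dt: float = 0.1, steps: int = 200) -> int:
    """Noisy winner-take-all between two goal populations.

    Simplified rate model (an assumption): each population is driven by
    its own input and inhibited by the other; with w_inh > 1 the
    symmetric state is unstable, so noise decides the winner unless
    `bias` favors goal 0. Returns the index (0 or 1) of the winning goal.
    """
    inputs = (1.0 + bias, 1.0)
    r = [0.0, 0.0]
    for _ in range(steps):
        drive = [max(0.0, inputs[i] - w_inh * r[1 - i]) for i in range(2)]
        # Euler step with additive Gaussian noise; rates stay non-negative.
        r = [
            max(0.0, r[i] + dt * (-r[i] + drive[i])
                + sigma * dt ** 0.5 * rng.gauss(0.0, 1.0))
            for i in range(2)
        ]
    return 0 if r[0] > r[1] else 1

# Hypothetical mapping from the selected goal to the expected stimulus.
EXPECTED_STIMULUS = {0: "stimulus for goal A", 1: "stimulus for goal B"}

def count_wins(bias: float, trials: int = 200, seed: int = 5) -> int:
    """Number of trials (out of `trials`) in which goal 0 is selected."""
    rng = random.Random(seed)
    return sum(select_goal(rng, bias) == 0 for _ in range(trials))

print(count_wins(0.0))  # roughly half of the 200 trials select goal A
print(count_wins(0.3))  # the biased goal wins most trials
print(EXPECTED_STIMULUS[select_goal(random.Random(1))])
```

With no bias, the choice is left to noise; a bias (standing in, for example, for an emotional state) shifts the probabilities without making the outcome fully deterministic.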

8. Is the Brain Smart Enough to Understand Its Complexity?

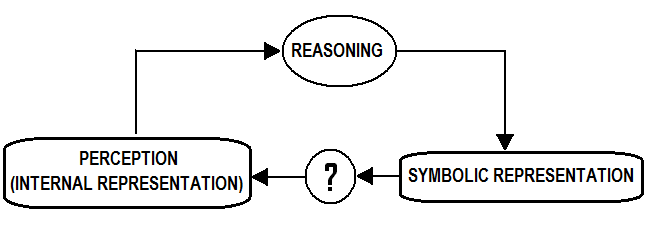

- Why is any theory of consciousness, in some way, disappointing? I could answer that none of the theories proposed so far is complete: each lacks a correct account of one or more issues. However, I believe that the answer lies in the interpretation of disappointment, which is more perceptive than rational. Perception is an internal representation of something that is difficult to describe in a symbolic way (St. Augustine wrote: "If they don’t ask me what is the time, I know, but if they ask me, I do not know"). Similarly, it is, perhaps, impossible for our brain to rebuild a perception from a symbolic description. We are not able to convert a symbolic description of consciousness into a perceptive internal representation. We experience the same difficulty when we try to imagine the real essence of the mathematical symbol “∞.” Perhaps our brain is not able to perceive the concept of “infinity” because no living agent on Earth has ever needed it to survive. Thus, the brain could be smart enough to understand its complexity, but it lacks the capability to convert a symbolic and behavioral description produced by reasoning into an internal representation. By analogy with computer science, I could say that the brain is relatively able to reverse-engineer an internal representation (perception = executable file), but it is not able to recompile the “reverse-engineered” source code (source code = symbolic representation) to produce an internal representation. Thus, the brain is not able to validate the symbolic descriptions produced by reasoning (Fig. 10).

9. Conclusions and Future Work

- I proposed a new theory on the essence of consciousness that is complementary to existing theories. This theory argues that consciousness can exist in a brain that is completely disconnected from the external world, due to the intrinsically noisy nature of the brain. This theory also argues that consciousness time is composed of instants that contain the completion of elementary concepts. Such concepts represent basic patterns that are part of the structure of modules in the brain, which behave like “engines” (perception and codification) of particular dimensions of consciousness. Naturally, such modules are the result of an evolutionary process in which living agents interact with the real world. Obviously, this is a field where it is difficult, if not impossible, to prove a theory, but I attempted to identify the elements of brain behavior that support the underlying arguments of the presented theory. Although a software simulation cannot prove the plausibility of the theory presented, I have designed a software model of the possible interaction between the CODAM neural network and the NO-BODY neural network in the MOSAIC (MOdular Scalability AImed to Cognition) framework [12]. Although I built this framework to test complex neurocognitive networks based on the SHARP model [12], it is flexible enough to accept the integration of any neural network. The goal of the simulation is to demonstrate how the phylogenetic circuits of the NO-BODY network can dynamically influence the higher-level activity of the ontogenetically modulated circuits through the individual processing of noise. The second phase will be the simulation of the interaction of individuals in a social environment with complex constraints, relationships and competing goals. In this test, there will be individuals with NO-BODY circuits and individuals without them.
The aim of this simulation is to demonstrate that individuals with NO-BODY circuits perform better in achieving individual goals.

ACKNOWLEDGEMENTS

- I want to thank the reviewers of this paper for their valuable suggestions on the required improvements.
