International Journal of Brain and Cognitive Sciences

p-ISSN: 2163-1840 e-ISSN: 2163-1867

2013; 2(2): 23-37

doi:10.5923/j.ijbcs.20130202.03

Distinct Attentional Mechanisms for Social Information Processing as Revealed by Event-related Potential Studies

Anna Katharina Mueller, Christine Michaela Falter, Oliver Tucha

Department of Clinical and Developmental Neuropsychology, University of Groningen, Groningen, the Netherlands

Correspondence to: Anna Katharina Mueller, Department of Clinical and Developmental Neuropsychology, University of Groningen, Groningen, the Netherlands.

Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Phenomena such as the vanishing ball illusion, in which a ball seems to vanish into thin air while the magician is merely using cues that capture social attention to deceive the audience, show how susceptible human visual perception is to social information. In this review, the most recent event-related potential (ERP) studies of visual social information processing are discussed. The available literature is analysed with respect to the question of whether the processing of social information uniquely modulates attentional mechanisms (indexed by ERP amplitudes and latencies) compared to non-social information. The review demonstrates that ERPs indeed show functional consistency in the amplitude and latency modulation of some components (e.g., P1, N170) across the different types of social stimuli tested. Importantly, these unique attentional modulations in response to social as opposed to non-social stimuli were found over and above modulation by other stimulus characteristics. The attentional mechanisms reflected by these components are believed to facilitate social information processing in neurotypical individuals and point towards a special role of the social domain in human information processing.

Keywords: Event-Related Potentials, Social Vision, Face, Eye, Gaze, Emotion, Body Perception

Cite this paper: Anna Katharina Mueller, Christine Michaela Falter, Oliver Tucha, Distinct Attentional Mechanisms for Social Information Processing as Revealed by Event-related Potential Studies, International Journal of Brain and Cognitive Sciences, Vol. 2 No. 2, 2013, pp. 23-37. doi: 10.5923/j.ijbcs.20130202.03.

1. Introduction

Over the last decade, cognitive studies focusing on the processing of social information and its underlying neuronal markers have increased in number[1]. Whether social information plays a special role in human attention and information processing, distinct from non-social information processing, nevertheless remains unclear. Visually presented social information is associated with greater activation of specific brain areas, for example the medial prefrontal cortex (mPFC), the superior temporal sulcus (STS), the medial parietal cortex (mPC), and the temporoparietal junction (TPJ)[2]. These brain areas have a unique metabolic resting activity that is believed to facilitate social-perceptual processes[3]. A comparison of social and non-social information processing with respect to visual selection, organisation, and interpretation therefore appears worthwhile. To approach this comparison, the current review focuses on studies reporting event-related potentials (ERPs) associated with social stimuli. ERPs are electroencephalographic (EEG) responses time-locked to experimental stimuli and are believed to express the summed postsynaptic potentials of simultaneously firing neurons[4]. The amplitude of an ERP wave is taken to represent the extent of the psychological processes involved in an operation, whereas a component’s peak latency indicates the point in time at which that operation has been completed[1]. ERP studies are especially relevant for the purpose of this review because they provide temporal information about processes such as attention. Earlier ERP components, such as the P100, N100, P200, N170, and N200, usually relate to attentional selection mechanisms (e.g.,[5]), whereas later components are more often associated with the organisation and interpretation of the stimulus, such as the N400 modulation by incongruency of person identity[6].
Given that this review aims to discuss whether attention to social information is associated with ERP modulations distinct from those of non-social information processing, the focus will be mainly on early ERP modulations. Social information can be any information related to the outward appearance of humans (e.g., posture, gesture, facial expression) or associated with the understanding (e.g., emotional cues, social gestures) and representation of other people (e.g., social group membership), extending to verbal or symbolic information associated with human culture (e.g., stop signs). The current review is confined to ERPs in response to social information in terms of faces, emotion displayed by faces, eyes and eye gaze, human posture, and biological motion. The concluding discussion at the end of the review compares ERP findings across stimulus types in order to answer the question of whether social information processing is indeed associated with unique ERP modulations.
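
The amplitude and peak-latency measures described in the introduction can be made concrete with a short sketch. It assumes that single-trial EEG epochs from one electrode have already been filtered, screened for artifacts, and cut around stimulus onset; it then baseline-corrects each epoch, averages the epochs into an ERP, and uses simple peak picking (the most positive or most negative sample in a search window). The function names, time windows, and toy values are hypothetical and do not reproduce the analysis pipeline of any study reviewed here.

```python
from statistics import fmean


def baseline_correct(epoch, times_ms, baseline_end_ms=0):
    """Subtract the mean of the pre-stimulus samples (t < baseline_end_ms)."""
    baseline = [v for v, t in zip(epoch, times_ms) if t < baseline_end_ms]
    if not baseline:
        raise ValueError("no pre-stimulus samples for baseline correction")
    offset = fmean(baseline)
    return [v - offset for v in epoch]


def average_epochs(epochs):
    """Point-by-point mean across trials: the ERP waveform."""
    n_samples = len(epochs[0])
    if any(len(e) != n_samples for e in epochs):
        raise ValueError("all epochs must have the same number of samples")
    return [fmean(e[i] for e in epochs) for i in range(n_samples)]


def peak_in_window(erp, times_ms, start_ms, end_ms, polarity):
    """Return (peak_amplitude, peak_latency_ms) inside [start_ms, end_ms].

    polarity=+1 selects the most positive sample (e.g. a P1-like peak);
    polarity=-1 selects the most negative sample (e.g. an N170-like peak).
    """
    window = [(v, t) for v, t in zip(erp, times_ms) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples in the requested window")
    return max(window, key=lambda vt: polarity * vt[0])


if __name__ == "__main__":
    # Two toy epochs in microvolts, sampled every 50 ms; t = -50 ms is the baseline.
    times = [-50, 0, 50, 100, 150, 200]
    raw = [
        [1.0, 1.0, 2.0, 5.0, -2.0, 1.5],
        [-1.0, -1.0, 1.0, 5.0, -6.0, -0.5],
    ]
    erp = average_epochs([baseline_correct(e, times) for e in raw])
    print(erp)                                       # [0.0, 0.0, 1.5, 5.0, -4.0, 0.5]
    print(peak_in_window(erp, times, 80, 130, +1))   # (5.0, 100)
    print(peak_in_window(erp, times, 130, 200, -1))  # (-4.0, 150)
```

Peak picking of this kind is sensitive to noise in individual samples; the mean amplitude over a fixed window is a common, more robust alternative when only amplitude (not latency) is of interest.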

2. Faces

2.1. Review of ERPs Sensitive to Human Faces

Studies covered by recent reviews on social information processing (e.g.,[7]) focused on the uniqueness of human face perception, facial identity recognition, and the discrimination of facial emotions. Overall, it was suggested that faces are generally detected more accurately and earlier than objects (e.g.,[8]), words (e.g.,[9],[10]), and inverted or scrambled faces (e.g.,[11],[12]). Moreover, performance on emotion categorisation tasks was enhanced when facial expressions conveyed unambiguous emotional information, as compared, for example, to schematic[13] or blurred faces[14]. Given these findings, it is likely that the behavioural enhancement in response to social information, when compared to non-social information processing, is paralleled by unique ERP modulations. A recent review[7] reported that the P80 was affected by participants’ emotional disposition towards faces (liked vs. disliked faces,[15]) or by the stimulus’ emotional valence[16, 17]. The P110 was modulated by happy compared to sad faces[18], and a unique response of the frontal P135 component was related to successful face detection[10, 12, 19]. Subsequent ERP components between 140 and 270 ms post-stimulus onset (PSO) were related to emotion registration and emotion discrimination (e.g.,[20],[21]). Furthermore, recognition of individuals (familiar vs. unfamiliar faces, learned vs. new faces) affected N170 amplitudes and latencies[22, 23]. The N170 modulation by stimulus familiarity has been replicated in studies focusing on eye and eye gaze processing (for a review see[24]). Unfortunately, previous studies on facial perception only sparsely used non-facial stimuli as a control condition, making the identification of unique ERP responses to faces more difficult. Nevertheless, there are a few exceptions to this methodological criticism, such as studies comparing faces to clock stimuli[25], a caricature of a woman to Necker cubes[26], faces to cars[27], and schematic faces to objects[28].
For instance, Ishizu et al.[25] compared ERPs in response to intact, split, and inverted neutral faces with those to similarly manipulated clock images (intact, split, inverted) and identified a selective effect of face configuration on the N170 amplitude. Whereas N170 latency was delayed for both stimulus types (split faces and split clocks), the N170 amplitude was uniquely enhanced by split faces compared to split clocks and by inverted faces compared to intact neutral faces.

Figure 1. Illustration of stimulus diversity: physically contrasting social stimuli that modulate P1 amplitudes[28, 74, 86] or P1 latencies[102]

2.2. Discussion

Taken together, the P1 has been interpreted as responsive to exogenous low-level visual features irrespective of stimulus type (social vs. non-social, facial vs. non-facial;[27]), but it also responded to facial inversion[34], facial misalignment[43], or the level of stimulus ambiguity[26]. Similarly, P2 and P3 amplitudes were modulated by face inversion[25, 34]. With regard to the question of whether attention to social information is special, clear ERP modulation by social versus non-social stimuli was found for the N1. The N1 showed increased amplitudes in response to faces compared to letters, even when attention was actively directed away from the faces[32] and when faces were inverted[34], supporting the assumption of a perceptual process that facilitates faces. Like the N1, the N170 was modulated by social versus non-social information[25, 28, 32] and by rearrangement of facial features, such as inversion (e.g.,[25, 34]) and spatial misalignment (the bottom part of the face misaligned with respect to the upper part,[43]). The N2 was modulated by face inversion and by incongruency between prime face and target face[34]. The authors concluded that whereas earlier components (P1, N1, N170) most likely represent configural changes of facial stimuli at low-level perceptual encoding stages associated with attentional mechanisms, N2 modulations already represent facial identification[34], most likely driven by an implicit “attention-orienting response”[44]. Overall, the findings from priming and cueing paradigms with facial stimuli support the idea of distinct attentional processes in facial perception: facial processing was marked by rapid attentional processes, as shown by the distinct responsiveness of early components to faces.
Moreover, face inversion effects and studies demonstrating enhanced ERPs in response to faces compared to objects while controlling for overall stimulus complexity (e.g.,[27, 28]) showed that facial, and therefore social, perception differs from non-social perception. Future studies are encouraged to control for confounding variables such as luminance and contrast as well as the personal significance of the stimuli. Controlling for luminance and contrast seems warranted because early ERPs have been shown to be sensitive to the low-level visual features of a stimulus (e.g.,[5, 34]). Finally, it would be interesting to find out whether experts who are frequently exposed to a particular stimulus category show ERP responses to images from their field of expertise that are similar to those elicited by human faces.
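
The recommendation to control luminance and contrast can be illustrated with a minimal sketch. It assumes grayscale stimuli stored as flat lists of intensities between 0 and 1, treats luminance as the mean intensity and contrast as RMS contrast (the standard deviation of the intensities), and linearly rescales every image to shared target values. The function name and the target values are hypothetical; real stimulus sets may additionally require gamma-corrected, calibrated luminance and matching across spatial frequencies.

```python
from statistics import fmean, pstdev


def match_luminance_contrast(pixels, target_mean, target_rms, lo=0.0, hi=1.0):
    """Linearly rescale intensities to a target mean (luminance) and RMS contrast.

    RMS contrast is taken here as the population standard deviation of the
    pixel intensities. Raises ValueError instead of silently clipping,
    because clipping would change the matched statistics again.
    """
    mean = fmean(pixels)
    sd = pstdev(pixels)
    if sd == 0:
        raise ValueError("a uniform image has no contrast to rescale")
    scale = target_rms / sd
    out = [target_mean + (p - mean) * scale for p in pixels]
    if min(out) < lo or max(out) > hi:
        raise ValueError("targets push intensities outside the displayable range")
    return out


if __name__ == "__main__":
    face = [0.2, 0.4, 0.6, 0.8]   # toy "face" image, flattened
    clock = [0.1, 0.1, 0.9, 0.9]  # toy "clock" image, flattened
    for name, img in [("face", face), ("clock", clock)]:
        matched = match_luminance_contrast(img, target_mean=0.5, target_rms=0.2)
        print(name, round(fmean(matched), 6), round(pstdev(matched), 6))
```

After rescaling, the two toy images share the same mean and RMS contrast, so any remaining ERP difference between them could not be attributed to these two low-level properties.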

3. Emotional Expressions

3.1. Review of ERPs Sensitive to Emotional Expressions

Previous research (see review[7]) showed that fearful, threatening, and disgusted facial expressions speeded up visual processing and were associated with distinct ERP modulations. Findings on automatic visual attention and distinct ERP responses to neutral, happy, surprised, and sad faces, however, were less unequivocal[7]. Studies included in this review report an overall increase in ERP positivity around 100-180 ms PSO in response to emotional facial expressions compared with neutral faces (e.g.,[45, 46, 47]). Moreover, inversion of emotional faces delayed the onset latency of these early positive potentials[45]. However, when participants did not pay explicit attention to faces of different emotional valence, no evidence was found for emotion-specific ERP modulations[48], suggesting that facial emotion processing does not operate preattentively and that, in contrast to pure face identification, explicit spatial attention is crucial for emotion identification[45]. Besides its role in face perception, the N170 has also been investigated for its role in processing emotion from faces. Both fearful and happy faces, in contrast to neutral faces, increased the N170 response[49], leading to the suggestion that enhanced N170 amplitudes indicate perceptual processing of facial emotional information in general, without further differentiation of the actual valence of the emotion[49]. The section of this review on face perception, however, outlined that changes in N170 amplitude reflect manipulations of face configuration. Given that every emotional expression other than neutral (e.g., happy, fearful) is associated with at least some physical changes in facial features, it remains open whether the reported N170 increase in response to fearful and happy faces is actually due to the emotional valence of the stimulus.
Enhanced amplitudes of the P80, the vertex positive potential (VPP; the positive counterpart of the N170), and the P300 in response to fearful faces were interpreted to reflect more detailed processing of threat-related information[49]. The perception of fear enhanced earlier potentials in general[49] and led some authors to conclude that fearful information is given priority in perceptual processing compared with happy and neutral faces (e.g.,[50, 51]). Increased Nc amplitudes in 7-month-old infants indicate an overt attentional bias towards fearful compared to neutral faces[52] and prioritised processing of angry compared to fearful faces[53]. This ERP response to the emotional load of the face was, however, absent in 5-month-old infants[52]. The authors concluded that infants’ increased sensitivity to angry facial expressions might arise because anger addresses the viewer more directly than fear does: fear does not necessarily relate to the observer, but is more likely to be linked to a threat in the environment[53]. Likewise, the Nc amplitudes of 4- to 6-year-old children were maximal in response to their own mother’s face when it expressed anger[54]. The authors interpreted the increase in Nc amplitude as reflecting enhanced attention driven by the personal significance of the stimulus and recognition memory driven by its familiarity[54]. These findings suggest that early potentials (e.g., N170, Nc) are related to person identification on the basis of familiarity and that emotional content (in this case anger) is more likely to be reflected in later components (>500 ms PSO; late positive component, LPC). Later effects of emotion, as marked by an LPC modulation[54], supported the idea that late positive potentials are sensitive to negatively loaded stimuli in general (e.g.,[55, 56]).
However, this interpretation contrasts with the findings of Utama et al.[46], who showed speeded early ERP responses (P100) to the emotional significance of facial stimuli in adults, with emotional intensity (N170) and face identification being reflected in potentials following this initial emotion-related increase in attention. Notably, a recent study on adolescents showed that N170 effects of emotion might not be detectable until the “adult N170 morphology” has fully developed[47], which is believed to occur in the later teenage years[57]. As Todd et al.’s[54] sample consisted of 4- to 6-year-olds and Utama et al.[46] based their argument on adults’ ERPs, the contrasting results are likely associated with neuronal differences between these age groups. An emotional negativity bias in human perception was indexed by increased N170 amplitudes and by P200 and P300 amplitude and latency modulations[59]. Moreover, the modulation of the N170, P200, and P300 by the intensity of facial emotion[46, 59] adds to the observation that fine-tuned, emotion-related perceptual processes are reflected in distinct ERP responses. Increased N170 amplitudes were associated with extremely negative facial expressions[59] and with participants’ ratings of the emotional intensity of the face[46, 47]. This effect was not present in ERP responses when positive faces varied in intensity[59]. Moreover, P2 amplitudes were significantly lower and P2 latencies shorter for extremely negative facial expressions compared to moderately negative or neutral expressions. Finally, P3 amplitudes were decreased in response to extremely negative facial expressions, which was not the case for moderately negative or neutral expressions[59]. It should be noted that most studies on emotion processing from facial cues did not control for other characteristics that could also modulate ERPs, such as low-level visual (colour, luminance) and spatial properties of the stimuli.
The identified ERP modulations might therefore index attentional mechanisms unrelated to stimulus category (social vs. non-social). To address this issue, more rigorous studies controlled for low-level visual features (luminance) and spatial alignment of the stimuli (e.g.,[60, 61]) and still replicated previous findings of P1 and N170 modulation by fearful faces[46, 49]. A comparison of low spatial frequency (LSF) and high spatial frequency (HSF) filtered fearful faces showed that the typical P100 and N170 amplitude modulation by facial emotion (e.g.,[15, 62, 63]) is mainly mediated by the LSF information of the stimuli[61]. The study by Vlamings et al.[61] further controlled for the luminance and contrast levels of the HSF and LSF facial cues, showing that the reliance on LSF features in decoding facial expressions is not associated with contrast or luminance differences across the spatial frequency bands (HSF vs. LSF) of the stimuli. Based on previous work (e.g.,[17, 44, 64, 65, 66]), enhanced P1 responses were interpreted as a perceptual mechanism providing attentional resources to enhance the processing of emotionally significant information[61]. In contrast to early potentials, later ERPs were shown to be insensitive to spatial frequency filtering[60], suggesting that human perception is marked by a “preattentive neural mechanism for the processing of motivationally relevant stimuli” ([60], p. 3223) that is activated by LSF features. In contrast, Eimer and Holmes[45] reported no effect of LSF or HSF filtering of fearful or neutral faces on ERP modulations. These diverging findings might stem from differences in stimuli, given that Alorda et al.[60] used rather complex scenes whereas Eimer and Holmes[45] used facial stimuli. Facial expressions and eye gaze direction have been shown to be highly significant in shared attention and social referencing.
Fichtenholtz et al.[67] therefore assessed the distinct and combined effects of facial emotion (fearful/happy) and eye gaze direction (left/right) on attentional orienting towards positively (a baby) or negatively loaded (a snake) targets. In addition, they tested how validly or invalidly cued gaze direction affected target processing. An interaction of facial emotion and gaze direction was found for N180 amplitudes, which were increased for happy, leftward-gazing faces[67]. Moreover, P135 and N180 amplitudes were increased for both target types (baby, snake) when preceded by a happy face. The authors interpreted these effects as an early priming effect of facial emotion[67]. Among the later ERP modulations, a P300 amplitude increase was associated with invalidly cued targets that were preceded by a happy face. Whereas P300 amplitudes in the early time window (250-550 ms PSO) were increased in response to positively loaded targets (the baby), P300 amplitudes in the later window (550-650 ms PSO) were increased in response to negatively loaded targets (the snake). Fichtenholtz et al.[67] concluded that facial emotion affects ERP responses first (early after stimulus onset), followed by an interaction of facial emotion and gaze location (spatial attention), and subsequently by an interaction of facial emotion and gaze validity (whether the target actually occurred at the cued location). These results provide insight into the complexity of attentional precedence mechanisms in the processing of emotion, gaze, and cueing information. Other effects of negative facial expressions on the P300 were interpreted to reflect sustained attention to the task of recognising and categorising the emotional valence of a face[68]. Another attentional cueing paradigm showed that contextual non-facial information preceding the actual facial cue influenced ERPs associated with facial emotion[69].
P1 amplitudes increased in response to fearful faces when preceded by intact objects compared to scrambled objects[69]. Whereas P1 latencies did not respond to facial emotion, N170 latencies were longer in response to fearful faces preceded by an intact object. Later effects on P2 amplitude and latency were most pronounced when fearful faces were cued by intact fear-eliciting objects (a bee). As the authors did not directly compare cueing effects on social (e.g., faces) and non-social (e.g., cars) stimuli, it is difficult to say whether the observed attentional gain is due to stimulus category (social vs. non-social) or to the emotional loading of the preceding cue (bee vs. baby). In contrast to previous studies showing a speeded attentional advantage for fearful facial expressions[20, 70], Fichtenholtz et al.[71] reported modulations of early ERPs by happy expressions, with fearful facial expressions leading to rather late attention-related effects on the P300 (550-650 ms PSO). This time-dependent dissociation in ERP modulation by facial stimuli of differing emotional valence (happy vs. fearful) was interpreted to reflect a reduction in selective attention towards approach-oriented stimuli (happy faces) and a special facilitation of spatial attentional processes towards fearful expressions when cued by eye gaze[67]. Manipulation of participants’ attentional load by contrasting ERPs under single- and dual-task performance showed that P100, VPP, N170, N300, and P300 amplitudes in response to emotional faces increased under single-task but not under dual-task conditions[72]. Additionally, P1 latencies were longer when attentional demands were increased (dual-task condition,[72]). Participants’ responses in a face-in-the-crowd task revealed that fearful faces were detected faster than happy or angry faces[73].
This behavioural advantage for detecting threat information was accompanied by an increased N2pc amplitude and a shorter N2pc latency compared with the perception of angry or happy faces[73]. The N2pc component was preceded by an increase in amplitude at 160 ms PSO, signalling an increase in attention allocation according to the type of emotion (again favouring threatening faces,[73]). The authors concluded that fast and highly automatic neuronal processes bias attention towards threat-loaded cues, even over mechanisms sensitive to anger, and even when many social distractors were present and attentional demands were therefore high (crowd of faces,[73]). The studies by Luo et al.[72] and Feldmann-Wuestefeld et al.[73] emphasise that ERPs in response to social stimuli can be affected by attentional mechanisms associated with the task. However, neither study examined interactional effects of stimulus category (social vs. non-social) and task condition (single vs. dual). The findings therefore provide no information about whether social perception is less affected by attentional demands than non-social perception. Nevertheless, the idea that social stimuli access unique attentional mechanisms is supported by a study by Santos et al. ([74]; see Figure 1), who focused on the effects of object-based attention towards either facial expressions or the spatial alignment of superimposed houses. They showed that speeded identification of fearful faces, as indicated by an enhanced N170, was mediated by selective attention to faces. A sustained positivity starting 160 ms PSO was present only in the processing of fearful faces, even when attention was not explicitly directed to the faces[74]. This result underlines that human perception is characterised by distinct attentional mechanisms for socially relevant information (fearful faces) at a subconscious level.
Future studies should follow Santos et al.’s[74] design with respect to controlling diverse types of task-dependent attentional mechanisms (e.g., object-based vs. spatial attention) in order to broaden the understanding of how attention to social stimuli is modulated by task-dependent constraints. Implicit and explicit manipulation of attention towards affective words and affective faces further showed that negative facial expressions modulated the N170 response independently of the focus of attention, whereas emotional words were reflected only in somewhat later ERPs (early posterior negativity, EPN), and only when explicitly attended[75]. Late ERP responses (LPP modulation) were equally affected by both stimulus categories[75]. These results emphasise the distinctiveness of attentional processes in response to social stimuli: whereas earlier ERPs seem to be sensitive to stimulus category (social vs. non-social), later ERPs have been shown to be less selective.
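
The LSF/HSF manipulation discussed above can be sketched as a low-pass/high-pass split of a grayscale image. This minimal version assumes a separable Gaussian blur as the low-pass filter and takes the residual (original minus blurred) as the high-pass image; the `sigma` parameter (in pixels) and all function names are hypothetical, and the studies reviewed here define their own cut-offs (e.g., in cycles per image) and may use different filters. Pure Python is used for readability rather than speed.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian weights spanning roughly +/- 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(values, kernel):
    """Filter one row or column with a symmetric kernel, clamping indices at the borders."""
    r = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - r, 0), n - 1)  # repeat edge pixels beyond the border
            acc += w * values[j]
        out.append(acc)
    return out


def low_pass(image, sigma):
    """Separable Gaussian blur: filter every row, then every column."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(row, kernel) for row in image]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def split_spatial_frequencies(image, sigma):
    """Return (lsf, hsf): the blurred image and the residual fine detail."""
    lsf = low_pass(image, sigma)
    hsf = [[p - q for p, q in zip(prow, qrow)] for prow, qrow in zip(image, lsf)]
    return lsf, hsf


if __name__ == "__main__":
    # An 8 x 8 checkerboard consists almost entirely of fine (high-frequency) detail.
    board = [[float((x + y) % 2) for x in range(8)] for y in range(8)]
    lsf, hsf = split_spatial_frequencies(board, sigma=1.0)
    # The LSF version is close to uniform grey; the detail has moved into hsf.
    print(max(abs(v - 0.5) for row in lsf for v in row))
```

Because the high-pass image is defined as a residual, the two bands always sum back to the original image; matching their luminance and contrast separately, as Vlamings et al.[61] reported doing, would be a further step not shown here.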

3.2. Discussion

In summary, greater P100 amplitudes were reported for facial stimuli expressing fear compared to neutral faces (e.g.,[71, 74]), in response to low-spatial-frequency filtered unpleasant stimuli[60], and in response to happy compared to fearful faces[67, 76]. Moreover, P100 amplitudes were increased for intact unfiltered emotional faces[60, 69] and when stimuli were previously cued[74]. Intact emotional faces elicited greater P2 amplitudes than scrambled stimuli, with an increase in P200 amplitude being further associated with emotional congruency between previously presented cues and facial stimuli[69]. Moreover, angry as compared to neutral faces increased the P200 amplitude, corroborating Eimer and Holmes’[45] proposal that emotional faces are associated with greater P200 amplitudes. Increased N100 amplitudes were reported for explicitly attended fearful faces[74] and happy expressions[67] compared to neutral faces. Infants’ Nc amplitudes were sensitive to face familiarity[54] and to unknown faces expressing anger[53] or fear[52]. The Nc amplitude enhancement was interpreted to reflect enhanced attention towards emotionally relevant stimuli[52]. This attentional enhancement is not detectable in ERP recordings until infants reach the age of 7 months[52]. N170 amplitudes correlated with the level of negativity expressed by a face[76] or the general intensity of the emotion[46]. The majority of studies reported increased N170 responses to fearful faces (e.g.,[72, 77]), with only one study reporting increased N170 amplitudes in response to happy faces[72]. Increased N170 amplitudes were also found for neutral compared to pleasant expressions[60] and, in children, for strangers’ compared to mothers’ faces[54]. The diversity of emotions that modulate the N170 indicates that the N170 responds to changes in facial features while being rather unspecific to the actual emotional valence.
Later ERP responses were shown to reflect more elaborate processes such as empathy (P300 enhancement,[36]) or face familiarity (shorter P300 latencies,[39]). Nevertheless, P300 amplitudes were also associated with sustained emotion processing from faces[68, 72]. In contrast to Luo et al.[72], Fichtenholtz et al.[67] identified increased P300 amplitudes in response to happy as compared to fearful faces, an effect that was reversed for the P300 response in a later time window (550-650 ms PSO). In this case, however, the contrasting results stem from differing terminology across research groups. It must be stressed that a consensus about which ERP peak and latency correspond to which component is highly desirable but not always achieved. To conclude, unique effects of attention and perception in the case of emotionally loaded facial stimuli are best reflected in the study by Santos et al.[74]. ERPs recorded in response to explicitly or implicitly attended emotional faces superimposed on a non-social stimulus (a house) showed that fearful faces are associated with a speeded neuronal response immediately after stimulus onset, up to 160 ms PSO, even when participants were instructed to attend to the superimposed house. A special role of emotional social stimuli was also supported by a unique N170 response to emotional faces as compared to affective words[75]. This N170 enhancement was seen even when participants did not directly attend to the facial expression per se[75]. Apparently, human information processing is marked by attentional mechanisms that favour and facilitate the processing of social stimuli. Again, early potentials (e.g., N70, N170) seem more sensitive to the social category of the stimulus than later potentials. ERP evidence supports the presence of a unique facilitation in the form of speeded and implicit attention to social stimuli (e.g.,[74, 75]).
Future studies should aim to directly compare early ERPs associated with emotionally loaded social stimuli (e.g., faces) to emotionally significant objects (e.g., cultural signs, such as stop or first aid signs), while trying to keep luminance and contrast levels constant across conditions.

4. Eyes and Eye Gaze

4.1. Review of ERPs Sensitive to Eyes and Eye Gaze

As with faces, eyes and eye gaze cues are a crucial source of information in social situations. The eye region has been shown to be the most attended area of the face during social perceptual processes (e.g.,[78, 79]). Moreover, information from the eyes seems to be a prerequisite for emotion recognition (e.g.,[80, 81]), especially for processing signals of fear[82, 83]. However, some behavioural findings question the distinctiveness of eye stimuli in capturing attention, showing similar attentional enhancement with simple arrow stimuli (e.g.,[84, 85]). ERP responses to an ambiguous cue that was introduced either as an eye in profile or as an arrowhead showed that, although cued targets always produced a reaction-time advantage irrespective of the social (eye gaze) or non-social (arrowhead) interpretation of the stimulus, the P1 was enhanced in response to the cue only when it was introduced as an eye ([86], see Figure 1). Given that the stimuli in the two conditions (eye vs. arrowhead) did not differ physically, low-level visual properties cannot account for the observed difference in sensory/attentional gain. The authors concluded that eyes are indeed associated with a special attentional gain mechanism. This gain mechanism is likely to derive from the greater amount of social information associated with eyes than with arrows[87]. Feng et al.[88] found larger and earlier P120 responses to high-intensity compared to low-intensity eye whites, which corresponded to different levels of fear. This effect was absent for pixel-matched control squares, suggesting that this unique attentional effect of eye stimuli on the P120 is related to the social relevance of the stimuli[88]. ERP effects of inversion seen in face perception (see section 2.1) were also found for eye stimuli[89].
Whereas the mere perception of eye gaze affected early attentional mechanisms (around 100 ms PSO), inversion of the eyes was associated with N170 modulation comparable to that found in studies on face inversion (e.g.,[38]). In line with an attention-enhancing effect of change detection[24], the detection of variations in eye movements was also reflected in N170 modulations[90]. In addition to the N170 modulation by eye inversion, P100 latencies were delayed for inverted eyes in upright faces but were unaffected when inverted eyes were presented in inverted faces[89]. Investigating interactional effects of emotion and gaze perception revealed faster reaction times towards fearful faces and towards targets that were preceded by a leftward-gazing character[71]. This behavioural effect corresponded to an increased P135 amplitude in response to fearful expressions and enhanced N190 amplitudes in response to rightward-gazing faces. An enhanced P325 amplitude was interpreted to represent an attentional bias towards the left visual field in facial emotion processing[71]. Given that emotional expressions were more likely than eye gaze direction to enhance target processing, the authors concluded that the emotional state of a person is of primary importance during social attention, with actual gaze direction being processed subordinately[71]. In contrast, Holmes et al.[91] did not find any interactional effects on ERPs of the combined presentation of gaze direction and emotional facial expression. Overall, enhanced ERPs to fearful compared to neutral facial expressions were interpreted to represent regular perceptual alerting effects[91]. A marked effect on the early directing attention negativity (EDAN) in response to the spatial direction of the gaze cues, however, was in line with the capacity of gaze cues to capture participants’ attention automatically and to hold attention at the gazed-at position[91].
Whereas Fichtenholtz et al.[71] used the social stimulus (face and gaze variation) as a cue and two rectangles as targets, Holmes et al.[91] presented neutral faces that moved their eyes either to the right or to the left and, upon the eye movement, changed their facial expression to happy or fearful or kept it neutral. The contrasting findings might therefore be due to differences in study design. Modulations of the P350 and P500 were found to depend on the social context in which eye gaze stimuli were presented[92]. In this study, social context was manipulated by presenting three face stimuli that gazed at each other, gazed at the participant, or avoided gaze exchange. Shorter P350 latencies were found for gaze cues that made eye contact with the participant compared to gaze cues that avoided gaze exchange. P350 latencies were also shorter for faces that avoided the participant’s gaze but made eye contact with each other, compared to faces that avoided eye contact with both the participant and each other[92]. P500 amplitudes were smaller for faces that made eye contact with the participant, and for faces that looked at each other but not at the participant, compared to the gaze-avoiding faces[92]. Apparently, information from social context is reflected not in early attention-related ERPs but only in later ERPs (P350, P500). Later ERP modulations (P250, P450, P600) were assumed to reflect higher-order cognitive processes associated with social interactions, such as the participants’ level of interest or arousal associated with avoidance or non-avoidance of gaze exchange[90]. Finally, in a gaze adaptation paradigm, a decreased ERP negativity in the time window 250-350 ms PSO was associated with participants’ overt judgements of gaze direction, representing an attentional after-effect of spatial adaptation to social cues (eye gaze), followed by a response of the LPC (400-600 ms PSO;[93]). 
The initial ERP signal of the after-effect of adaptation to eye gaze (250-350 ms PSO) was interpreted as reflecting participants’ sustained attention to eye gaze information associated with social exchange. According to the authors, the amplitudes and latencies of the subsequent LPC in response to eye gaze cues were similar to typical P300 responses. P300 modulation has previously been shown to reflect an attentional enhancement associated with comparing previous and current perceptual events in working memory (for a recent review, see[94]). Consequently, LPC modulation by eye gaze cues was interpreted as indexing novelty detection under perceptual spatial adaptation[93]. This observation is, again, in line with the idea that late ERPs are more sensitive to higher-order processes such as the integration and organisation of social information, and less sensitive to alerting effects.

4.2. Discussion

- In line with an enhancing effect of social cues on attention, increased P1 amplitudes were found for cued eyes[86] and for variations in eye-white intensity[88]. Changes in eye gaze were associated with shorter P1 latencies in upright and intact eye-face contexts than in inverted faces[89]. Directly gazing eyes elicited increased P3 amplitudes when preceded by rightward-gazing eyes, compared to eye gaze cues that did not change direction[93]. Invalidly cued eye gazes generally elicited greater P300 amplitudes, indicating possible task-dependent effects as described in previous sections[34, 71]. P400 amplitudes were increased in response to leftward-gazing eyes in a social gaze exchange[90]. Moreover, smaller P500 amplitudes were observed when the processing of two persons gazing at each other was compared to the perception of three persons avoiding gaze with each other and with the participant[92]. P300, P400 and P500 modulations are therefore associated with the process of deducing social meaning from eye gaze[92], with EDAN modulations facilitating mechanisms that hold attention at socially relevant locations[91]. N170 latencies were delayed for inverted eyes in upright faces[89], and eye blinks elicited decreased amplitudes compared to closed, leftward- or upward-gazing eyes[90]. Finally, being exposed to a real person gazing directly at participants, compared to inanimate dummies, increased N170 amplitudes[95], showing the sensitivity of the N170 to actual social context. However, the N170 was not modulated by the familiarity of eye gaze stimuli[39], by adaptation to eye stimuli[93], or by whether two or more facial stimuli shared attention as indicated by eye gaze direction[92]. 
Strong support for the idea of unique attentional processes for social compared to non-social perception comes from the study by Tipper et al.[86], which demonstrated that the social loading of physically identical stimuli elicits distinct ERP modulations favouring unique social perception. P1 amplitudes were enhanced only when participants were told that the presented cue represented an eye. Given that luminance, contrast and even shape were identical and only the social/non-social dimension was varied, it must have been the social information that elicited the unique attentional response. Similarly, comparing ERPs associated with eye-white intensities to those for pixel-matched squares[88] enabled a more straightforward comparison between social and non-social stimuli. Again, early attention-related P120 modulations were found only for the social stimulus (the eye whites), with the matched non-social control squares eliciting no unique ERP modulations. These two studies exemplify the usefulness of designs in which social stimulus processing is directly compared to non-social stimulus processing. In contrast to earlier potentials, which seem to reflect enhanced perceptual mechanisms for social information, late ERP modulations (>300 ms PSO) were shown to reflect higher-order processes associated with social cognition (organisation and interpretation of social cues; e.g.,[90, 92]).

5. Human Posture and Biological Motion

5.1. Review of ERPs Sensitive to Human Posture and Biological Motion

- As shown above, studies on social perception using face, eye, and eye gaze stimuli have grown extensively during the last decade. Less attention has been devoted to the perception of social information from other cues (e.g., hands, gestures, or body movements). A recent review by Peelen and Downing[96] indicated that visual body perception, like face perception, is marked by distinct attentional responses. N170 responses to human bodies were similar to those known from studies on face perception[97, 98]. Inverted bodies[77] and changes in body configuration affected the N170 amplitude, even across age groups (3-month-olds vs. adults:[99];[100]). Moreover, earlier N170 latencies were found for human bodies than for faces, with latencies for objects being delayed overall[77]. Earlier N170 latencies might therefore reflect the attentional modulation in response to the social dimension inherent to both human bodies and faces, compared to objects, with further unique attentional responses to emotional content within each class of social stimuli (human bodies vs. faces). Whereas attention to emotion from facial expressions was reflected in N170, P2 and N2 modulation, the emotional content of body cues affected the VPP (the positive equivalent of the N170) and was reflected in a sustained negativity 300-500 ms PSO. Increased N170 amplitudes were found in response to point-light displays depicting standard body movements compared to randomly scrambled point-lights[101]. An early body movement detection mechanism was associated with a positive ERP shift between 100 and 200 ms PSO[101]. P400 amplitudes were decreased when a typical sequence of human movement was processed, compared to random movements[101]. 
These findings underline that it is the social dimension of the stimulus (human movement) that modulated the attentional response (P100-P200, P400). In contrast to earlier studies reporting no effect of emotion on body perception[97, 98], Van Heijnsbergen et al.[102] observed earlier P1 latencies for intact fearful bodies, whereas scrambled versions of the stimuli did not modulate P1 responses[102]. The VPP showed shorter latencies for bodies expressing fear than for neutral bodies. Again, the scrambled stimulus versions did not affect the temporal or spatial topography of the N170 or VPP[102]. The authors concluded that fear information derived from body cues is associated with speeded neuronal responses similar to those seen in fearful face processing[102]. Recently, Wang et al.[103] suggested that LPC amplitude enhancement is graded according to the level of social content in complex scenes. Accordingly, the LPC was enhanced for scenes of persons interacting with one another compared to scenes depicting inanimate objects. Moreover, LPC amplitudes for Theory of Mind (ToM) scenes were even higher than for both aforementioned stimulus categories. These results show that the attentional mechanisms indexed by an increase in LPC amplitude reflect the amount and complexity of social information. Finally, Reid and Striano[104] interpreted larger N400 peaks in response to abnormal sequences of an actual goal-directed body movement (eating) as reflecting a neuronal correlate sensitive to the semantic information of a typical body movement. This gain in N400 amplitude likely reflects an attentional enhancement of social perceptual mechanisms that serves to distinguish atypical from typical social behaviour.

5.2. Discussion

- The perception of human posture and body movements has been shown to be associated with diverse ERP modulations. Shorter P1 latencies were found for body postures expressing fear than for neutral body postures[102]. Scenes depicting persons or eliciting ToM increased P2 amplitudes[103]. When participants attended to human movement, N2 amplitudes increased[101]. Finally, N400 amplitudes were shown to be enhanced not only by violations of verbal semantics (e.g.,[105]) but also by violations of the semantics of biological motion[104]. Particularly informative for the question of unique attentional mechanisms for social versus non-social stimuli is the finding of earlier N170 latencies in response to bodies and faces than to objects[77], which can be taken as experimental evidence for a predisposition of human perception towards social information. Future studies should directly compare ERPs in response to body postures, human movement and complex social scenes with ERPs to other social stimuli (faces, eyes, etc.) and to non-social objects, in order to outline similarities in attentional processes across social stimulus types that would corroborate the idea of generally prioritised attentional processing in social perception.

6. General Discussion

- The aim of the current literature review was to investigate whether the processing of social information modulates attentional mechanisms (indexed by ERP amplitudes and latencies) in ways that differ from the processing of non-social information. Previous ERP literature on social perception focused foremost on face or eye gaze processing, in particular on the processing of emotion from faces or eyes. These studies almost unanimously report selective attention-related effects of face processing on the N170 component (see review[7]). More recently, direct comparison of faces with objects was associated with unique attention-related enhancement of early ERPs (P1, N1; e.g.,[32]). This attentional enhancement is likely related to processes that facilitate the handling of social information, supporting the idea that human perception is predisposed towards social cues. Late ERPs such as the N400 (e.g.,[104]) or the P350 and P500 (e.g.,[92]), in contrast, reflect more elaborate socio-cognitive processes such as the evaluation or interpretation of social information[106, 107]. Recent studies on social perception have evolved methodologically: instead of measuring ERPs to passively presented social stimuli, they introduced manipulations of implicit or explicit attention to the stimulus (e.g.,[88]), spatial- or object-based attention to the stimulus category (e.g.,[74]), and stimulus complexity (e.g.,[46, 88, 103]). These refinements made it possible to address methodological confounds unrelated to the processing of social information (e.g., the influence of luminance or contrast;[61, 86, 60]). Given that unique attentional mechanisms were still found for social information after these methodological refinements (e.g.,[86, 60]), the evidence that human perceptual processes are distinct for social perception is strengthened. 
In addition, the focus on body perception has increased (for a recent review see[96]), acknowledging that social information is not conveyed only by faces or eye cues. This increasing stimulus diversity in social perception studies has improved the conceptual understanding of ERP functionality, as attentional mechanisms can be compared across types of social stimuli. For example, comparing attentional mechanisms in response to emotional cues across different stimuli (faces vs. body postures vs. body movements/gestures) showed that the VPP, which appears to be the positive equivalent of the N170, seems to be the neural correlate of emotion processing from body cues[77, 102], whereas the N170 is the functional emotion-sensitive equivalent for facial cues (e.g.,[49, 72]). Itier and Batty[24] addressed the methodological limitations of the discussed literature and questioned the suitability of cueing paradigms for attentional studies. The authors’ criticism appears important, as they speculated that the reflexive nature of participants’ orientation to social stimuli such as eyes might be a consequence of the task rather than of the social dimension of the stimulus[24]. Future designs should therefore address task-dependent influences on ERP modulation in order to clarify whether stimulus category (social vs. non-social) interacts with task-dependent factors. The majority of the reviewed studies used small samples, and only a minority controlled for the influence of gender. Because females show heightened sensitivity to emotionally arousing information[108], studies on emotion processing from social cues should assess whether neuronal correlates differ between male and female participants. Yet, irrespective of emotional load, a recent ERP study revealed that females in general show increased cueing effects[109]. 
Hence, not only ERPs in response to social stimuli but also ERPs to non-social stimuli might be influenced by sample selection. Finally, there is no consensus across research groups on which amplitudes and latencies can be attributed to which ERP component. For example, whereas some (e.g.,[72]) refer to the P3 response as occurring at around 300 ms PSO, others (e.g.,[67]) refer to early (250-550 ms PSO) and late (550-650 ms PSO) P3 complex time windows. A comparison of ERP functionality across studies based solely on ERP component labels is therefore difficult. Nevertheless, the current review showed striking similarities of ERP modulations across a wide range of social stimuli, supporting a special role for social information processing. For instance, the amplitude of the early-peaking P1 was increased not only in response to inverted[34], misaligned[43], fearful (e.g.,[71, 74]), happy[67, 76], and cued faces[74] but also to abstract stimuli introduced as representing an eye[86] and to different levels of fear in eyes[88]. Similarly, the N170 amplitude was increased in response to attended[25], ignored[32], inverted (e.g.,[25, 34]), misaligned[43] and schematic faces[28], but also to bodies[77] and to interactions of participants with an actor making eye contact[95]. Consequently, we propose that it is the social dimension of the stimuli that leads to the described ERP modulations, rather than other stimulus characteristics, given that the discussed stimuli varied in outer appearance but were accompanied by similar attentional responses. For illustration, Figure 1 depicts a visual comparison of physically different stimuli that nevertheless had comparable effects on the P1[28, 74, 86, 102]. In conclusion, current studies on social perception indicate that the processing of various types of social information shows functional consistency in ERP modulations on some components (e.g., P1, N170). 
These functional modulations of early ERPs can be found across different paradigms when social information is used as the target cue. As shown by paradigms that directly compared ERPs in response to social information with ERPs to non-social cues matched in luminance and contrast, these early modulations were uniquely associated with the perception of social information. Modulations of late potentials (e.g., P350, P500) are more likely to reflect social-cognitive processes such as the integration of social context (e.g., social referencing effects as seen in social attention studies). Overall, the current review’s integrative approach of identifying similarities in ERP modulation in response to a diversity of social stimuli emphasised the existence of distinct attentional mechanisms for social information processing.

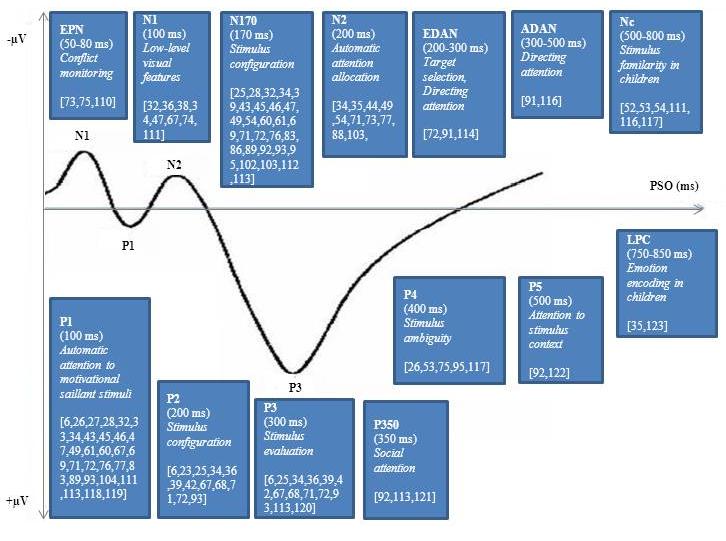

| Figure 2. ERP components, their associated functions, and articles per ERP component citing modulation effects associated with social stimuli |
