International Journal of Applied Psychology

p-ISSN: 2168-5010 e-ISSN: 2168-5029

2016; 6(5): 138-149

doi:10.5923/j.ijap.20160605.02

Revision of the Erhardt Learning-Teaching Style Assessment (ELSA): Results of a Qualitative Pilot Study

Rhoda P. Erhardt

Consultant in Pediatric Occupational Therapy, Private Practice, Maplewood, USA

Correspondence to: Rhoda P. Erhardt, Consultant in Pediatric Occupational Therapy, Private Practice, Maplewood, USA.


Copyright © 2016 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

A field test study of five elementary-aged children in a Title I class was conducted over an 8-month period to generate descriptive data for revision of the Erhardt Learning-Teaching Style Assessment (ELSA). This observational checklist had been designed to: (a) identify learning styles of individual children, (b) help teachers, therapists, and parents select optimal strategies for teaching new skills in schools, clinics, and home environments, and (c) evaluate results of the selected intervention strategies in order to either maintain or modify them. Two expanded case studies illustrate classroom application of assessment interpretations, specific strategies recommended, and documented performance changes. In addition, qualitative data derived from the pilot study describes the process of conducting research in a natural context, and offers specific examples of teacher feedback contributing to structure and content changes that will help improve effectiveness of the instrument for educational, clinical, and research purposes. Suggestions for future research include descriptions of additional needed informal field tests and formal outcome studies.

Keywords: Assessment, Learning Styles, Teaching Strategies, Contextual Environments

Cite this paper: Rhoda P. Erhardt, Revision of the Erhardt Learning-Teaching Style Assessment (ELSA): Results of a Qualitative Pilot Study, International Journal of Applied Psychology, Vol. 6 No. 5, 2016, pp. 138-149. doi: 10.5923/j.ijap.20160605.02.


1. Introduction

- This paper proposes that an assessment linked with appropriate instructional strategies is needed, not only for classroom teachers responsible for children's academic achievements, but also for parents/caregivers and professionals in the health fields, who are helping children learn developmental gross and fine motor skills and self-help tasks such as feeding, dressing, and grooming.

  The National Center for Education Statistics (NCES) published its Condition of Education 2015 report stating that stronger academic skills have been correlated with learning behaviors such as paying attention, showing eagerness to learn, and persisting in task completion [1]. Children are motivated to achieve those positive behaviors if they can experience success. Research studies published across several decades have focused on investigating the relationship among teaching strategies, learning styles, and academic achievement. The questions that have been asked are: Do individuals learn more easily when teaching methods match their learning styles? What assessments produce useful information that can guide teachers to be as effective as possible for their students?

2. Literature Review

2.1. Learning Styles: Relationship to Current Educational Principles and Practices

- Developmentally Appropriate Practice (DAP) is based on researched knowledge of how children learn and develop, together with curriculum and teaching effectiveness evidence, forming a solid basis for decision making in early child care and education [2]. Components of DAP address both age and individual appropriateness. Age appropriateness refers to predictable changes in all areas of development: cognition, communication/language, social/emotional, physical/motor, and adaptive/self-help. Individual appropriateness includes the unique qualities and individual patterns of development: physical growth, personality, learning style, interests, and family culture [3].

  Differentiated Assessment (DA) supports the learning process by helping teachers identify and address student strengths and needs, through both assessment of student learning and assessment for instructional planning. It is ongoing and responsive, changing over the course of time in response to student growth and development [4, 5].

  Response to Intervention (RtI) is a multi-tiered approach matching the needs of general education students academically or behaviorally at risk, providing appropriate levels of support, effective instructional opportunities, and targeted interventions to ensure progress. The Tier 1 level identifies children performing below expectations; Tier 2 designates individualized intervention by school staff (one-on-one or small groups); and Tier 3 indicates the need for more intensive strategies and referral to special education services [6-8].

  Universal Design for Learning (UDL) is defined as the design of materials and environments to be usable by all people. Its guidelines can be incorporated into assessment and intervention frameworks to provide fair and equal opportunities for learners with different abilities, backgrounds, and motivations [9, 10].

2.2. Definitions of Learning Styles and Strategies

- One of the first broad definitions of learning style was "cognitive, affective, and physiological traits that are relatively stable indicators of how learners perceive, interact with, and respond to the learning environment". Keefe [11, p. 4] described learning styles as the method that people use to process, internalize, and remember new academic information. Her first model included environmental, emotional, sociological, and physical (later converting to physiological and psychological) variables. One of the physiological variables, the perceptual element, included hearing (auditory), seeing (visual), manipulating (tactual), and moving (kinesthetic). These categories have been widely used among educators and psychologists since first introduced [12, 13].

  Sarasin [14] defined learning styles as "the preference or predisposition of an individual to perceive and process information in a particular way or combination of ways" (p. 3), which naturally leads to the question: Is it predisposition, or preference, or both? Some researchers who have explored that differentiation have stated that learning styles are unconscious internal characteristics developed in childhood, whereas learning strategies are external skills that can be learned consciously when students are motivated to improve and develop their level of comprehension [15]. Cassidy [16], from the UK, and Hartley [17, p. 149], from Australia, explained that although students approach learning tasks in ways that feel most natural, "different strategies can be selected by learners to deal with different tasks. Learning styles might be more automatic than learning strategies which are optional".

2.3. History of Learning Styles Theory

- Research in the area of learning style has been increasingly active during the past three decades throughout the world, on the continents of Africa, Asia, Australia/Oceania, Europe, North America, and South America. The focus of attention has been on attempts to identify factors affecting learning-related performance, specifically, the relationships between teaching strategies, learning styles, and academic achievements [18-22]. Most theories of teaching styles emphasize the importance of teachers adjusting their strategies to accommodate students' sensory learning styles [23-25]. In fact, Cafferty [26] found that the closer the style match between each student and teacher, the higher the student's grade point average.

  More than 40 experimental studies based on the Dunn and Dunn Learning Style Model were conducted between 1980 and 1990 to determine the value of teaching students through their learning-style preferences. The results were synthesized through meta-analysis, and suggested that when students' learning styles were accommodated, they could be expected to achieve scores 75% of a standard deviation higher than those of students in the control groups [27]. Similar gains were also documented for poorly achieving and special education students in urban, suburban, and rural schools [28].

  During this time, with general acceptance of the concept that individuals’ learning styles have a great impact on their academic achievement and the assumption that matching these styles would increase students’ performance levels, researchers became highly motivated to look for measures that could help identify the favorite learning styles of students and teachers. As a result, many different learning-style inventories began to be developed for investigating the learning preferences of children and adults, and some of them have continued to be instruments of choice for current research.

  For example, the Learning Styles Inventory (LSI) questionnaire was originally designed for self-reporting responses to environmental, sociological, physical (perceptual strengths: auditory, visual, tactile, kinesthetic), and psychological factors. Versions of the scale were developed for primary and secondary school children as well as adults, and the resulting profiles were used to plan learning situations, materials, and teaching approaches [29]. The LSI was reviewed by Cassidy [30] as having one of the highest reliability and validity ratings, and being the most widely used assessment for learning style in elementary and secondary schools. The VARK (Visual, Auditory, Read/Write, Kinesthetic) was expanded from the VAK model [24, 31]. It was based on the idea that most people possess a dominant or preferred learning style, with the learning modalities described as visual (learning through seeing), auditory (learning through hearing), tactile/kinesthetic (learning through touching, doing, and moving), and multi-sensory (a balanced blend of the previous styles). Its primary purpose was to be advisory, rather than diagnostic [32]. The Perceptual Learning Style Preference Questionnaire (PLSPQ) was developed specifically for learners of foreign language, to assess preferred visual, auditory, and kinesthetic learning styles [15, 33].

  However, during those same decades, controversy arose about the credibility and reliability of these instruments, as well as the validity of the general concept of learning style theory, despite published studies of learning-style preference validation [27]. Pashler et al. [34] proposed that the prevalence and popularity of the learning-styles approach in education could be related to its perceived success, rather than to supporting scientific evidence. They argued that the literature failed to provide adequate support for applying learning-style assessments in school settings, and urged investigators examining those concepts to adopt factorial randomized research designs, which have the greatest potential to provide action-relevant conclusions.

  Although controversy still exists, many recent published studies appear to be re-examining learning styles theories. A survey of more than 60 international university studies found that students’ achievement increased when teaching methods matched their learning styles, described as biologically and developmentally imposed sets of personal characteristics that make the same teaching method effective for some and ineffective for others [25]. This construct continues to be explored at every level of education, from pre-K to graduate school [21, 22, 30, 35-48].

  Identification of learning styles in children and adults is complicated because of the variability of how and when preferred sensory modes are chosen. For example, multi-sensory learners are said to include those who switch from mode to mode, depending on the task or situation, as well as others who integrate input simultaneously from several or all of their processing styles [24]. In addition, some authors feel that preferences may change over time, depending on cognitive levels, developmental stages, and contexts of the learning experiences. Still others believe that beginning learners prefer kinesthetic modes first, followed by visual, and then auditory, and as they progress, tend to rely more on auditory, visual, and kinesthetic modes, in that order [46]. Dunn [49] found that when children were taught initially through their most preferred modality, and then reinforced through their other modality, their test scores increased. Linda Nilson, the founding director of the Office of Teaching Effectiveness and Innovation at Clemson University, South Carolina, advises, "Teach in multiple modalities. Give students the opportunities to read, hear, talk, write, see, draw, think, act, and feel new material into their system. In other words, involve as many senses and parts of the brain as possible" [43, p. 5].

  The apparently differing theoretical bases for learning styles suggest that a variety of perspectives are needed to capture the comprehensive character of learning styles, and that no one instrument can capture all of their complexities.
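
To give a sense of scale for the meta-analytic result cited in this section (a gain of 75% of a standard deviation), the short sketch below converts a standardized mean difference into a percentile. It assumes normally distributed scores with equal variance in both groups, which is an illustrative assumption and not something reported in the studies cited; the function name is hypothetical.

```python
from statistics import NormalDist


def percentile_in_control_group(effect_size_sd: float) -> float:
    """Percentile of the control distribution reached by the average
    student in the accommodated group.

    Assumes (for illustration only) normal scores with equal variance,
    so the answer is the standard normal CDF at the effect size.
    """
    return NormalDist().cdf(effect_size_sd) * 100


if __name__ == "__main__":
    # 0.75 is the effect size reported in the text (75% of a standard deviation).
    print(f"{percentile_in_control_group(0.75):.1f}th percentile")
```

Under those assumptions, an effect of this size would place the average accommodated student well above the control-group median, which helps explain the interest the finding generated.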

2.4. Research Designs for Development of New Learning Style Assessments

2.4.1. Limitations of Current Instruments

- Although more than 70 learning style instruments have been available during the last 30 years, only about a dozen are considered to have academic credibility [41]. It is also important to note that most are self-reporting and were developed for adults, rather than for children [25, 49]. Some of these measures provide direct links from assessment results to intervention strategies, but few are categorized as observational, and none assess performance skills in specific environmental contexts by designated categories of observers, a usability concept described by Trived & Khanuat at Paher University in India [50].

2.4.2. Quantitative vs. Qualitative Designs

- Two groups of researchers in the United States and Canada who have concluded that no links exist between teaching strategies and learner performance have conducted empirical, quantitative studies only [41, 51]. Mays & Pope [52] believe that it is not possible to judge qualitative research by using criteria designed for quantitative research, such as reliability, validity, and the ability to generalize the results to other populations. They describe methods appropriate for studies of learning styles as qualitative, naturalistic, observational, and holistic. For example, one finding of a qualitative study exploring mainstream classroom teachers' opinions about meeting the academic needs of English Language Learners (ELLs) was that the best way to ensure success is with differentiated assessment and instruction [44]. Willingham & Daniel [47] admitted, however, that attending to those different learning styles in the same classroom is challenging for teachers and cannot be done at all times.

2.4.3. Combined Methodology

- Since qualitative methods are now widely used and increasingly accepted in the education, health, and psychology fields, it has been suggested that qualitative explorations can lead to measurement instruments being developed for later quantitative research [53-55]. In fact, the research design categorized as Sequential Exploratory, with a purpose of investigating a phenomenon, can be a useful strategy for developing and testing a new instrument. The design is characterized by an initial phase of qualitative data collection and analysis, followed by a phase of quantitative efficacy research [56]. As an example, in their study of the learning-style preferences of English as a Foreign Language (EFL) learners in relation to their proficiency levels, Soureshjani & Naseri [46] first created a modified version of an existing learning style questionnaire to determine those levels in Iranian students. At the next stage, they collected and analyzed statistical data about the learning style preferences of those students in each proficiency category. This concept of the Sequential Exploratory design could be a way to establish scientific credibility when creating a new instrument, by planning two separate studies (qualitative investigation and then quantitative research), rather than one mixed-methods strategy.

2.4.4. Naturalistic Contexts

- Many leaders in the education and health fields have stated that participating in naturalistic observational research can be extremely productive [57]. In Britain, the Centre for Education Research and Practice, which promotes research for policy and practice in the education and assessment sector, recommends that administrators should create time and space for staff to engage with research, apply it to their own contexts, test specific interventions, and then refine and improve their practice [58]. According to the Assessment and Teaching of 21st Century Skills (ATC21S™) project, research efforts can be beneficial at the classroom level, especially for the design and development of assessments [59].

  To summarize, although the primary goal of the field test pilot study was to generate descriptive data for revision of a new qualitative observational assessment, another important purpose was to provide a model for the process of how research can be interwoven into the professional activities of teachers and clinicians within natural environmental contexts.

3. Development of the Erhardt Learning-Teaching Style Assessment (ELSA)

- The ELSA is a unique qualitative measure, designed not only to identify learning styles of individual children, but also to help teachers, therapists, and parents teach new skills in natural contexts such as schools, clinics, and home environments.

3.1. The Process

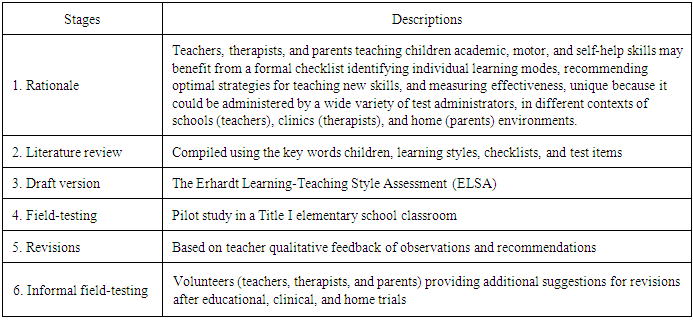

- Table 1, Process of Creating a New Observational Assessment, reviews the first three sequential stages of this process described in a previous article: 1) Statement of the rationale (motivation), 2) compilation of the literature review, and 3) creation of the draft version [61].


3.2. Test Construction

- The inductive approach described by Thomas had been used for the original construction of the test, by creating a practical framework through the classifications of themes and categories emerging from a literature review of existing learning style instruments [61]. This type of qualitative rather than quantitative methodology was also selected for the pilot study of the ELSA, because it was descriptive, process-oriented, and meaningful, supporting investigation within the context of the specific school environment [62, 63]. The structure of the ELSA was explained as containing three categories of learner styles (Visual, Auditory, and Tactile/Kinesthetic), each with two subsections: Behavior Characteristics Observed and Teaching Strategies Recommended. The final section (Interpretation and Recommendations) included the analyses of results, based on calculations to indicate strongest learning styles, or to detect Multi-Sensory Learners, with consideration of possible relationships to specific tasks, topics, and/or environmental contexts.

  Review of the literature containing similar checklists included educational, clinical, and family studies. The author wrote 160 preliminary test items (72 Behavior Characteristics Observed and 88 Teaching Strategies Recommended) for the draft version. Phrases were repeatedly edited and revised over a period of several months in the effort to be consistent in style, as objective as possible, and relevant to the categories of school, clinic, and home environments [61].

4. The Pilot Study

4.1. Rationale

- When the author shared information with a colleague about the newly published article that described the process of creating a learning-teaching style assessment [61], their discussion evolved into a collaborative, interdisciplinary field-testing project, because they were both interested in the challenge of individualizing teaching methods in different contexts. The author had decades of experience supervising school occupational therapists and teaching home programs to families. Her colleague, a Title I teacher, realizing that the assessment might provide useful information about certain underachievers in her classroom, volunteered to use it to record observations in a structured way, select appropriate intervention strategies, and help interpret the results. She viewed this research study as an opportunity to provide constructive feedback for the revision of an effective instrument for teachers, therapists, and parents. Since a randomized sample is not necessarily appropriate to obtain qualitative data, a convenience sample from the Title I classroom would be the most practical and affordable method for these professionals working in the field without research funding.

4.2. Participants

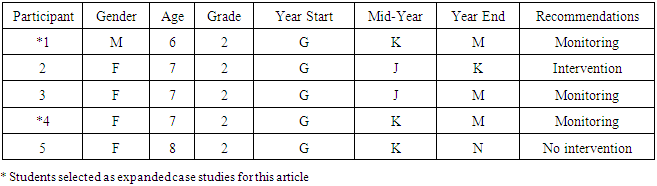

- The convenience sample of five children who were struggling with academic performance in reading (second quartile, 25th-50th percentile) was recruited from a Title I pull-out classroom in an urban elementary school. The four girls and one boy, ages 6-8, were all in the 2nd grade. Numbers instead of identifying information were used in the data tables presented so that confidentiality of participants could be preserved.

  Title I of the Elementary and Secondary Education Act of 1965 was created and federally funded for the purpose of improving the academic achievement of young, low-achieving children, e.g., those with limited English, living in low-income households, or with disabilities [64]. Title I teachers are aware of the need to identify their students' learning styles and preferences, and adjust their own teaching methods to meet those educational needs [65].

4.3. Method and Procedures

- At the beginning of the school year, the Title I teacher, as the lead teacher in her classroom, collaborated with teacher assistants, scoring each child's learning styles with the Erhardt Learning-Teaching Style Assessment (ELSA) 2014 draft version during everyday observations. The primary purpose of the pilot study was to provide useful feedback for refining and improving the scale to make it more effective for readers who wish to use it for educational and research purposes. Open-ended questions requested their opinions about 1) clarity of administration and scoring instructions, 2) correlations between scoring results, interpretations, and recommendations, 3) relevancy for intervention/management, and most importantly, 4) specific suggestions for test improvement.

  Each Behavior Characteristics page was scored, results calculated, and then interpreted in terms of the criteria specified in Section 4 of the ELSA. Initial Teaching Strategies were selected and implemented during the following months until the midyear point. Effectiveness was then scored, in order to interpret and record final recommendations, including methods of ongoing individualization and adaptations. Suggestions for improvements in structure and content of the ELSA were documented on relevant pages of each test booklet, as well as on the feedback form. This process was repeated at the end of the school year.

4.4. Case Studies

- The online journal article, The Process of Creating a Learning-Teaching Style Assessment, described two children identified as potential candidates for this study because they typified the challenges that the ELSA was designed to address [61]. Those same case studies will be expanded here, with teachers' notes from the beginning, throughout, and after completion of the 8-month field test study.

4.4.1. Participant #1

- While he was a first grader, this six-year-old was referred to the Title I reading intervention team for assessment and additional reading assistance, because the Response to Intervention (RtI) Tier 1 interventions used in his classroom had not been enough to help him keep up with first grade reading goals. Although he easily learned sight words, and he could read and spell words with common phonetic patterns, he had difficulty with comprehension. He lacked confidence when asked to share ideas about stories the class was reading. His expressive language was grammatically correct, but he appeared to be unfamiliar with many words and concepts that most first graders knew, e.g., "flower". He lived with his mother and two older brothers who were responsible for him while their mother was at work. His evenings and weekends were spent playing video games and watching television. He spent very little time with friends, playing outside, or having conversations with his family members. It appeared that these limited social experiences prevented him from gaining the prior knowledge and vocabulary needed to comprehend what he read at a first grade level. Both the classroom teacher and the Title I teacher recommended that in second grade he would benefit from continuation of the Title I reading intervention program (RtI, Tier 2).

  In second grade he continued with this same Title I teacher, who thought that he would be a good candidate for the pilot study, because the ELSA seemed to be the type of Differentiated Assessment (DA) that would help her gain additional insight into his learning preferences and also suggest new strategies to increase his reading performance. Although the pre-test results suggested that he was primarily a visual learner, she decided to add other strategies to help him with his focusing issues. For example, touching his hand to get his attention (tactile), in addition to reminding him to look at the speaker (visual), resulted in his increased engagement with tasks. Another successful strategy addressed his difficulty asking and answering questions. She modelled the way to ask questions before, during, and after short reading passages (auditory), encouraged him to try as well, and was gratified to see him learn to share and retain new information. She also would regularly stop him at certain points while he was reading aloud and comment about what specific things he did well. His reaction to this strategy was positive. For example, he commented, "Something didn’t look right, so I stopped and went back and fixed the word. Then it made more sense". His classroom teacher soon shared the fact that he was reading for longer periods, and enjoying his independent reading time.

  The Title I teacher then realized that her intuitive sense of him as a multi-sensory learner (despite the pre-test results) was verified by the success of using the additional sensory approaches. It became obvious to her that limited social, language, and physical experiences had contributed to his inability to appropriately access his auditory and tactile channels. His progress during second grade is presented in Table 2, Field Study Qualitative Data, and in Table 3, Field Study Demographics and Reading Test Scores.

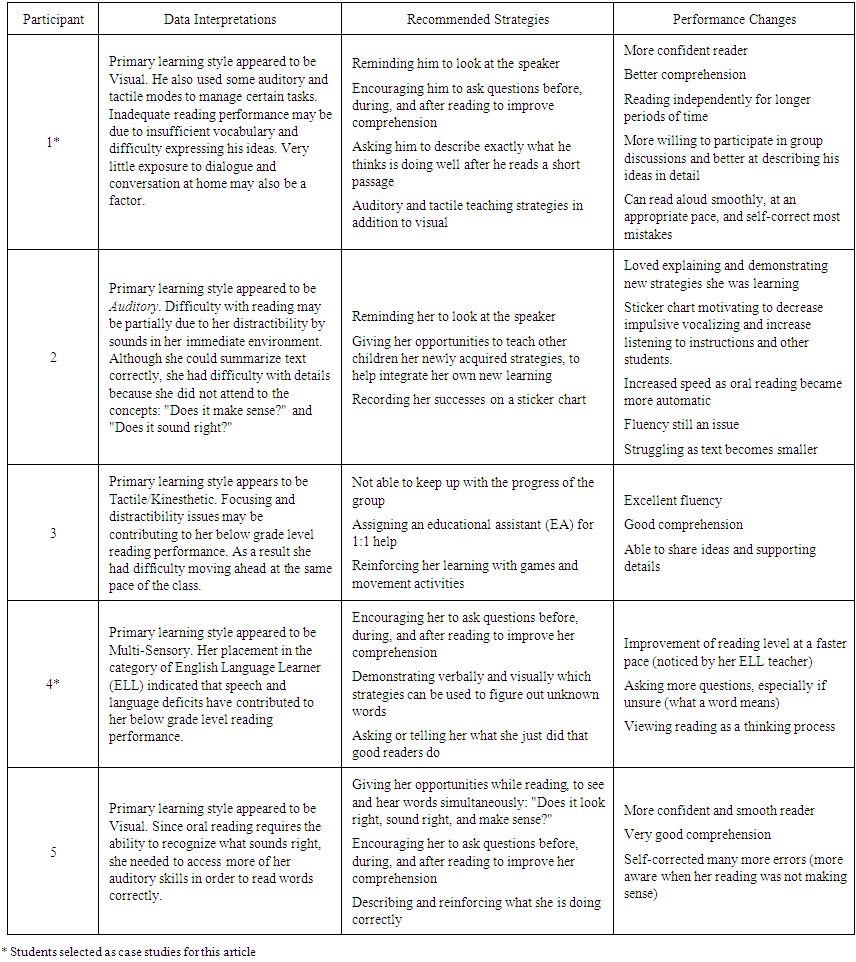

| Table 2. Field Study Qualitative Data for Title I Participants: Results of the Erhardt Learning-Teaching Style Assessment (ELSA) |


4.4.2. Participant #2

- This six-year-old was in first grade when she was referred to the Title I reading intervention team for assessment and additional reading assistance as an RtI Tier 2 intervention, with English Language Learner (ELL) support, and an Individualized Education Program (IEP) for Language Processing. She had come to kindergarten with limited English language experience, which resulted in a slower pace in improving reading skills. Her social language skills were fair, but her limited vocabulary and processing difficulties made it difficult for her to comprehend text that most children her age could understand. She appeared to be a happy girl, living with her parents and three siblings, who often participated in special family activities together. Her mother stated that she read with her daughter on a regular basis. Becoming a good reader was important to her, and within the small group, she liked to share her ideas about the stories they were reading together. The Title I reading intervention team collaborated with the ELL teacher to make sure the student was placed appropriately in a small reading group that would provide opportunities to follow Universal Design for Learning (UDL) guidelines to deal with the fact that English was not her primary language. In second grade, she continued with ELL, Speech, and Title I Reading Intervention, but the ELL and classroom teachers expressed their pessimistic belief that her below-average performance was evidence of her limited potential, and that she would continue to have difficulties not only in second grade, but also in the future.

  Because her primary learning style appeared to be multi-sensory, some of the strategies selected were to demonstrate verbally and visually several suggested new methods to help her figure out unknown words. First, her mother agreed to play word games with her at home to reinforce new learning in an enjoyable way and help her focus on meaning. She was also encouraged to ask herself and others questions about what they were reading. As a result, she began to see reading as a thinking process, as well as the decoding of words. Whenever her teacher listened to her read short passages, she would mention something done well (that good readers do). Soon she was able to determine and express what she had done well herself. By the end of the year, she demonstrated increased confidence, and was telling others that she was a “good reader”. If unfamiliar with a word, she would ask what it meant. Both her classroom and ELL teachers expressed excitement as they realized that she was using higher level thinking skills, and showing greater effort and more enthusiasm for reading. Despite the earlier predictions, she made significant progress and reached the second grade end-of-year goal, according to her Title I teacher, who described qualitative changes in Table 2, and independent reading test score improvements in Table 3.

4.5. Results of Qualitative Data

- Table 2, Field Study Qualitative Data for Title I Participants, presents interpretations of ELSA scores, recommendations related to learning concerns, and results upon completion of the study. Interestingly, in this small cohort, two of the five children appeared to be visual learners, and one each of the other three used auditory, tactile/kinesthetic, and multi-sensory modes.

4.6. Pilot Study Feedback

- Examples of Teacher Comments and Recommendations. Teacher recommendations collected from the pilot study feedback form were used to revise and refine the category configuration, scoring system, and test items, e.g., searching for redundancy and contradictory statements. Teacher comments were related to what was learned from administering the instrument, its effectiveness, and its contribution to a deeper understanding of children's learning needs.

  In their study conducted at an elementary school in Japan, Hirose et al. [66] found that many expert teachers are acutely aware of teacher-student interactions, recognize behavior patterns in their classroom, and assign meaning to them very quickly, a process facilitated by their extensive experience.

  Over the years, this Title I teacher had developed practical routines that she instinctively utilized to adapt her teaching methods for individual students when indicated, as well as for the entire classroom on a regular basis. For example, she had discovered that mnemonics, memory devices using rhythm and song, were highly effective learning tools [67]. Additionally, when she recognized many of the strategy items listed in the ELSA as similar to her own, she made the recommendation that scoring symbols should be added in the revision to reflect the difference between usual classroom strategies and those newly recommended. She also stated that preview of the test items before actual administration of the ELSA could help an examiner know in advance exactly what to observe. In retrospect, she realized that finalizing the ELSA pre-test scores should be delayed at least one or two months after the school year begins, to give the examiners enough time to know the students well enough to score the assessment as comprehensively as possible.

  She and her assistant teachers discovered that students sometimes use other learning styles in addition to their strongest one, especially for different tasks or in different contexts. They may need strategies that also teach through their less strong channels, because certain situations need processing through different or several simultaneous modes. They found that other students in the class could benefit from new strategies implemented for one or more of the children in the study. Their conclusion was that the process of using the ELSA to document everyday observations over extended time periods can make teachers more aware of each student's reading difficulties, and can offer additional and individualized strategies (Cavanagh, personal correspondence, 2015).

4.7. ELSA Revisions

- The 2014 draft version of the ELSA was revised after analysis and discussions with the Title I teacher who implemented the study. Teacher comments were related to what was learned from administering the instrument, its effectiveness, and its contribution to a deeper understanding of children's learning needs. Specific examples of test revisions were:

- Scoring symbols for the Teaching Strategies pages were changed to denote Usual Strategy: X, and Newly Recommended: circled X.
- Since comparing the number of checked items in each of the three Behavior Characteristics sections was sufficient to calculate learner type, the additional step of calculating the percentage of checked items to total items was deemed unnecessary, and therefore deleted.
- More examples were added to specific context sections of the Teaching Strategies Recommended pages, such as opportunities to work alone in a school context, e.g. solving math problems; imagining oneself performing a task before doing it in a clinic context, e.g. as athletes do; and repeating a newly learned skill until competent in a home context, e.g. tying shoelaces.
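The simplified scoring rule described above (comparing raw counts of checked items across the three Behavior Characteristics sections) can be sketched in a few lines of Python. This is only an illustration, not part of the ELSA itself: the function name `learner_type`, the example counts, and the handling of ties (reporting every top-scoring modality) are assumptions introduced here.

```python
from typing import Dict, List


def learner_type(checked: Dict[str, int]) -> List[str]:
    """Return the modality (or modalities, if tied) with the most checked items.

    `checked` maps each Behavior Characteristics section to the number of
    items the examiner checked. No percentages are computed, mirroring the
    revision that dropped that step.
    """
    top = max(checked.values())
    # Assumption: a tie is reported as several learner types, not broken.
    return [modality for modality, count in checked.items() if count == top]


# Hypothetical counts for one child (not data from the study).
counts = {"visual": 9, "auditory": 4, "tactile": 6}
print(learner_type(counts))
```

Keeping the non-top counts available is a deliberate choice in this sketch, since the teachers observed that students also draw on their less strong channels.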

5. Discussion

5.1. Purpose

- The goal of this study was to describe the process of conducting research in the natural context of a school classroom [57-59], identifying learning styles of five individual children, while generating descriptive data for revision of the Erhardt Learning-Teaching Style Assessment (ELSA). The test's purpose is to facilitate the success of teachers, therapists, and families as they teach new skills to children, not only in schools, but also in clinics and home environments. The field test feedback would be instrumental in contributing to structure and content changes to improve the instrument's effectiveness for educational, clinical, and research purposes.

Current theories of educational principles and practices were incorporated into the rationale and procedures of this study to provide an operational plan for the teachers to implement as effectively as possible. Developmentally Appropriate Practice, Differentiated Assessment, Response to Intervention, and Universal Design for Learning are all based on researched knowledge of how children learn, identification of student strengths and needs, teaching effectiveness, and appropriate levels of support [2, 4, 5, 45].

In order to illustrate specific classroom application of assessment interpretations, recommended strategies, and performance changes that arose from the project, case studies of two subjects from a previous article are expanded in this paper.

5.2. Findings

5.2.1. Positive Changes

- Qualitative data extrapolated from the results of the Erhardt Learning-Teaching Style Assessment (ELSA) field study not only provided essential and useful information to the author for the revision process, but also supported theories of teaching styles that emphasized the importance of teachers adjusting their strategies to accommodate students' sensory learning styles [23-25].

The most frequent performance changes in all five children during the 8-month period (documented in Table 2) were: better comprehension, asking questions when unsure, more participation and sharing of ideas in group discussions, increased speed and fluency reading aloud, self-correction of most errors, longer periods of independent reading, and most significantly, improved confidence. The teaching staff viewed the gains in these essential academic, social, and emotional skills as concrete evidence confirming the value of teaching these low achievers with their learning-style preferences in mind [28].

5.2.2. The Controversy

- Researchers who have conducted only empirical studies dispute the concept that true links exist between teaching strategies and learner performance [41, 51]. However, other investigators argue that it is unreasonable to judge qualitative research with criteria designed for quantitative research [52].

Another finding that partially explains the inconsistent results among learning style researchers was that students often use other learning styles in addition to their strongest one for different tasks or in different contexts. This result is in line with previous studies describing certain students who needed to learn through their less strong channels in order to succeed in specific situations [16, 17].

5.2.3. Unexpected Findings

- The teachers found that other students in their classroom could benefit from the new strategies implemented for one or more of the children in the study, a discovery that helped alleviate the challenge of attending to different learning styles in the same classroom [47].

Learning in multiple modalities, either simultaneously or sequentially, seemed to be appropriate for many of the subjects, a finding that corroborates previous studies reporting that students were more motivated when they were given opportunities to see, hear, and touch new materials by reading, talking, writing and drawing, thinking, and moving [43].

5.3. Limitations of the Study

- Given the complex nature of learning styles, the qualitative results of this pilot study should be viewed as indicative and informative, not as diagnostic or as evidence of efficacy, because the feedback was collected from the observations and recommendations of the teaching staff in only one classroom. Although the information presented can be applied to some children of different ages, cultures, and social classes, generalizations cannot be made about the entire target population of elementary-age children with reading problems. In addition, it has been suggested that preferences may change over time, depending on developmental stages that affect learning experiences [46].

5.4. Suggested Future Research: Additional Qualitative Field Tests and Quantitative Outcome Studies

5.4.1. Qualitative Methodology

- Since this pilot study was conducted in only one Title I classroom, future qualitative studies using a similar design should be planned in order to substantiate the value of the assessment in other schools, as well as in clinic and home environments. In addition to standardized measures, clinical observation across natural settings can provide valuable insight into how specific task or environmental factors promote or hinder children’s learning. For example, some children may show difficulties learning particularly challenging tasks because of environmental factors, such as the amount of noise, type of lighting, room temperature, or the distraction of other children moving around in the room [5, 30, 68].

5.4.2. Quantitative Methodology

- A future efficacy study in another school setting could begin with a hypothesis such as, "Children whose teachers follow recommended strategies derived from the ELSA would improve their independent reading test scores more than children in a control group". Similarly, in a therapy clinic, "Children whose therapists follow recommended strategies derived from the ELSA would improve their fine motor test scores more than children in a control group".

To ensure that every item is objective and clearly observable, an additional revised version of the ELSA would be required for certain items in all visual, tactile, and auditory observational sections of the test. For example, the following test items already meet those criteria: "May close eyes to visually remember" and "Gestures when speaking". However, the item "Is sensitive to loud noises" could be changed to "Demonstrates sensitivity to loud noises by covering ears".

5.4.3. Results of the BAS Scores

- The type of data that could be included in quantitative studies has been illustrated in Table 3, through pre-test, retest, and post-test scores of the Benchmark Assessment System (BAS), an independent test of reading performance (levels A - N for grades K - 2) [69]. All the 2nd grade teachers, including Title I, used the BAS as one of their tools for observing and testing academic performance at this elementary school. They generally didn't complete the first battery of tests until the end of September, to give children who lost a level or two during the summer (e.g. those who had not been reading every day) a few weeks to regain the scores of their previous May levels.

The ELSA was added to the list of assessments already in place at this participating elementary school, for those in the Title I cohort sample only, to provide qualitative data for instrument revision, as well as for individualized program planning throughout the year. Results showed various levels of progress that have influenced programming recommendations for each student in the next school year.

Table 3, Field Study Demographics and Reading Scores for Title I Participants, presents the results of all three BAS scores (Year Start, Mid-Year, and Year End) for each of the five children. It is important to note that second-graders typically started the school year at Level I, with goals of K at mid-year and M at year-end. Title I students usually began the school year below Level I, so they needed assistance to progress at a faster rate to reach those same goals.

Since federal Title I funds were not available after 2nd grade in this school district, the teacher would make one of three recommendations for 3rd grade: 1) no intervention needed, 2) monitoring progress, or 3) intervention (extra small group reading time). At the beginning of the following year in 3rd grade, the Title I students would be retested. If special education placements were considered, a problem-solving team would make recommendations for further testing at that time.
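For a future quantitative study, the comparison between a child's BAS level and the typical second-grade goals described above could be computed as in the following minimal sketch. It assumes that the lettered levels A through N can be treated as equally spaced ordinal steps, which the BAS itself may not warrant; the names `LEVELS`, `BENCHMARKS`, and `levels_from_benchmark`, and the example scores, are hypothetical and are not taken from Table 3.

```python
import string

# BAS reading levels A through N (grades K - 2), in ascending order.
LEVELS = string.ascii_uppercase[:14]

# Typical second-grade goals stated in the text: I at year start,
# K at mid-year, and M at year-end.
BENCHMARKS = {"year_start": "I", "mid_year": "K", "year_end": "M"}


def levels_from_benchmark(level: str, checkpoint: str) -> int:
    """Return how many levels a score sits above (+) or below (-) the goal.

    Assumption: each letter counts as one equal step.
    """
    return LEVELS.index(level) - LEVELS.index(BENCHMARKS[checkpoint])


# Hypothetical scores for one student (not data from the study).
scores = {"year_start": "G", "mid_year": "J", "year_end": "M"}
for checkpoint, level in scores.items():
    print(checkpoint, level, levels_from_benchmark(level, checkpoint))
```

A shrinking negative gap across the three checkpoints would correspond to the faster rate of progress that Title I students needed in order to reach the same year-end goal.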

6. Summary and Conclusions

- The primary goal of this pilot study was to generate field-test data and feedback for revision of a new observational checklist, the Erhardt Learning-Teaching Style Assessment (ELSA). The revision was planned to help teachers in schools, therapists in clinics, and parents at home be more effective in identifying learning styles of individual children, determining teaching strategies with awareness of those styles, and modifying their teaching methods for optimal student success. Qualitative rather than quantitative methodology was selected, because the descriptive analytical approach was critical to the revision process.

Results indicated that the ELSA does provide comprehensive information about the learning modes students prefer, and can guide teachers in choosing strategies aligned with those preferences. The findings also showed that many learners with a very strong preference in one mode will still accumulate scores in other modes, which teachers should be prepared to employ as well, depending on the types of learning activities introduced and their contexts.

Comments and recommendations collected from this feedback have contributed to revision and refinement of the category configuration, scoring system, and test items, e.g. to eliminate redundancy, address contradictory statements, and ensure that the language is appropriate for both professional and lay administrators.

The lead teacher's critical thinking skills gained from years of experience proved invaluable during the study, as she collaborated with the author to interpret the results of the ELSA, and make intervention decisions to benefit her at-risk ELL students. Teacher attributes that can have positive effects on the entire learning process include sensitivity to children's needs and a stronger focus on their strengths than their deficits [70].

The ELSA is a unique observational tool because it is the only one designed to facilitate learning in not only schools, but also clinics, homes, and/or other environmental contexts, where children need to learn academic, motor, and self-help skills [50]. It can therefore provide appropriate, comprehensive, and detailed information for instructional planning that is not available from standardized measures.

ACKNOWLEDGEMENTS

- Thanks to Mary Cavanagh for her dedicated participation in this pilot study, her astute observations, insightful comments, and important suggestions that have contributed to the next version of the ELSA.
