International Journal of Applied Psychology

2012; 2(2): 1-7

doi: 10.5923/j.ijap.20120202.01

Intelligent Tutoring in a Non-Traditional College Classroom Setting

Elizabeth Arnott-Hill 1, Peter Hastings 2, David Allbritton 3

1Department of Psychology, Chicago State University, Chicago, IL, USA

2Department of Psychology, DePaul University, Chicago, IL, USA

3Department of Computing and Digital Media, DePaul University, Chicago, IL, USA

Correspondence to: Elizabeth Arnott-Hill, Department of Psychology, Chicago State University, Chicago, IL, USA.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Research Methods Tutor (RMT) is a web-based intelligent tutoring system designed for use in conjunction with introductory research methods courses. RMT has been shown to result in average learning gains of .75 SDs above classroom instruction in traditional college environments. However, a primary goal of the RMT project is to provide greater access to tutoring for students without access to traditional one-on-one human tutoring. Therefore, we further tested RMT’s effectiveness at a university that enrolls primarily non-traditional students. Although we again found evidence of RMT’s effectiveness, a few key outcome differences between traditional and non-traditional environments emerged, including perceptions of the pedagogical agent and access to the system.

Keywords: Intelligent Tutoring, Technology and Education, Non-traditional Students


1. Introduction

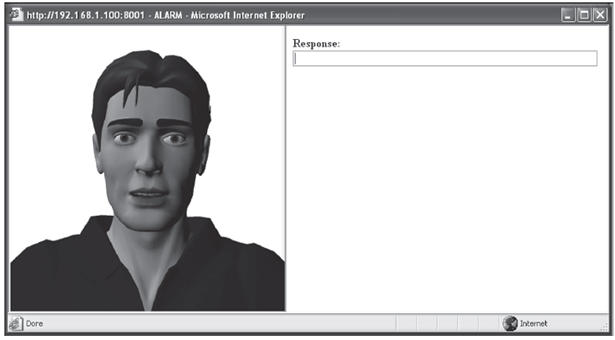

Computing technologies, particularly those that allow students convenient access to learning resources, are infiltrating every aspect of the educational system. Textbooks routinely come with online student resources, and many instructors regularly assign online or computer-based homework to enhance the classroom learning experience. One of these computer-based technologies, intelligent tutoring systems (ITS), seeks to mimic the benefits of one-on-one human tutoring. Expert human tutoring is believed to be among the most beneficial learning techniques. Bloom, for example, found that a successful human tutoring situation can enhance learning by up to 2.3 standard deviations over classroom instruction alone[1]. In contrast to more didactic approaches, such as lecture, textbook learning, or websites that mimic a traditional textbook layout, tutoring allows students to engage in a dialog that helps them to assess their current levels of understanding and to work cooperatively to increase levels of knowledge[2-7]. The extent of the student’s engagement in this dialog is correlated with the student’s learning outcomes[8-10]. An ITS that uses the techniques employed in expert human tutoring, therefore, could provide access to this powerful learning technique without the drawbacks of human tutoring, which include the cost, the time involvement, and the inconvenience of coordinating schedules. Due to the challenges faced by many of today’s students, including childcare, work schedules, and transportation[11], ITS have the potential to make vast contributions to effective teaching and learning.

A number of successful ITS have been created and tested to date. For example, Koedinger and colleagues[12] conducted a large-scale study of the effectiveness of PAT (the PUMP Algebra Tutor) in conjunction with a high school algebra curriculum. They found that students who used the system scored 100% higher on basic skills tests than those who did not use the system. In laboratory-based tests, another ITS, AutoTutor, increased students’ scores by one standard deviation above reading a textbook alone[13]. ANDES, an ITS used in undergraduate physics courses, has also been associated with significant learning gains for students who use the system in lieu of pencil-and-paper based homework[14].

Research Methods Tutor (RMT) is a dialog-based ITS created to increase student learning in introductory undergraduate psychology research methods courses. Most psychology programs require at least one course in research methods[15]. Research methods courses, however, tend to be difficult for psychology majors due to their applied, technical, and largely quantitative nature. In addition, research methods courses require students to engage in critical thinking, which is generally a skill that undergraduate college students are in the process of developing[16]. RMT is designed for use in conjunction with traditional coursework and is available online, making it convenient to use at any time of the day without cost. It consists of five topic modules that coincide with typical topics from psychology research methods courses: ethics, variables, reliability, validity, and experimental design. Each RMT topic module is assigned after the topic is covered in the classroom.

The RMT system has two presentation modes that allow the student to interact with the tutor in a specific way based on student preference or technical requirements. Students are presented the material either in text on the computer screen (the text-only mode) or via an on-screen interactive pedagogical agent (the agent mode). In the text-only mode, students see RMT’s questions and feedback in text on the screen. In the agent mode, an interactive avatar “speaks” RMT’s content and the student types his/her response in a response box onscreen. RMT’s pedagogical agent, Mr. Joshua, is shown in Figure 1. Mr. Joshua not only speaks, but also blinks, turns his head, and gestures with his hands. Due to the requirements of the agent software, the student must be able to download the required software (thus, students in a public computer lab cannot download the agent) and must have a PC with Internet Explorer. Students who meet these criteria can choose to download the software or can opt to use the text-only version of the system.

Figure 1. The RMT interface with the animated pedagogical agent, Mr. Joshua, used for the “agent mode”

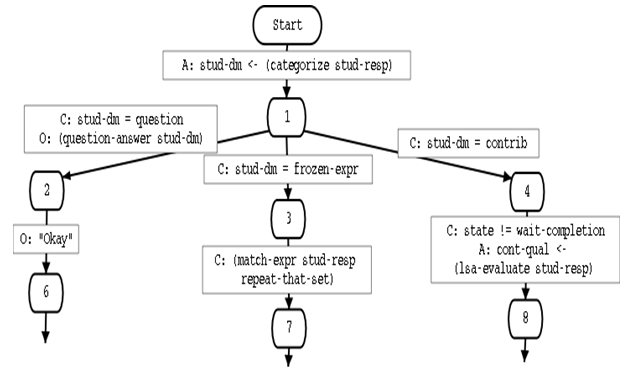

Figure 2. A Partial View of the Dialog Advancer Network (DAN)

2. Method and Results

2.1. Participants

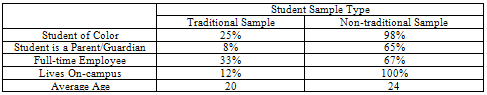

Our non-traditional student assessment was conducted at an urban university with a large non-traditional student population. For the purposes of the present study, we broadly defined “non-traditional student” as a student who was not of traditional college age (18-23), worked full-time, acted as a primary caregiver or parent, or belonged to a historically underrepresented group. Of the 87 students in the sample, 98% were African American, 65% were parents or primary caregivers of children, 67% held full-time jobs, and 100% lived off-campus. The average age of the students was 24. Table 1 compares the demographic attributes of students in this non-traditional student sample to the students in our traditional sample.

Students in RMT classes completed all RMT topic modules for course credit, as well as a 50-item pre-test and post-test, but were allowed to anonymously opt out of data collection. Students in classes that did not use RMT completed the same 50-item pre-test and post-test for course credit and were likewise allowed to anonymously opt out of data collection.

2.2. Procedure

In order to assess the effectiveness of RMT, we selected two research methods courses to use the RMT system and two to serve as non-equivalent control groups. The data were collected over the course of two semesters. During the first semester of data collection, the daytime section used RMT and the evening section did not. During the second semester of data collection, the daytime section did not use RMT and the evening section did. Students in all courses were given a 50-item multiple-choice pre-test during the first week of the semester and the same 50-item post-test during the last week of regular classes. The test consisted of 10 items per topic module covered by RMT. At the end of the semester, an additional 25-question post-test (5 items per topic module) was given to test for transfer learning. This transfer learning test consisted of research scenario-based critical analysis questions, which the students had not seen before. In addition, at the end of the semester, RMT students were given an 11-item questionnaire regarding their perceptions of the system. The questionnaire consisted of 8 questions that employed a 1 (Strongly Disagree) to 6 (Strongly Agree) scale and one yes/no question. Students were also encouraged to give general feedback in an open-ended question at the end of the questionnaire (“What, specifically, did you like/dislike about using the system? Do you have any other feedback for us?”).

Students in all courses used the same textbook, followed the same basic course outline, and were taught by the same instructor. The only major difference between the conditions was that the students in the RMT sections were assigned to complete RMT topic modules after the topics were covered in class. RMT students who could download software (that is, who had regular access to a PC with Internet Explorer) were asked to select the agent presentation condition, and students who could not were assigned by default to the text-only condition.
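The design described above can be summarized as a small data structure. The following Python sketch is illustrative only: the class and variable names (`Assessment`, `SCHEDULE`, and so on) are hypothetical and not part of RMT, while the module names, item counts, and section assignments are taken from the text.

```python
from dataclasses import dataclass

# The five RMT topic modules named in the Introduction.
MODULES = ["ethics", "variables", "reliability", "validity", "experimental design"]


@dataclass(frozen=True)
class Assessment:
    """A test built from a fixed number of items per topic module (hypothetical helper)."""
    name: str
    items_per_module: int

    @property
    def total_items(self) -> int:
        return self.items_per_module * len(MODULES)


pre_post = Assessment("pre-test / post-test", items_per_module=10)
transfer = Assessment("transfer test", items_per_module=5)

# Which section used RMT in each semester; the assignment is swapped
# across semesters so that each time of day serves in both conditions.
SCHEDULE = {
    ("semester 1", "daytime"): "RMT",
    ("semester 1", "evening"): "control",
    ("semester 2", "daytime"): "control",
    ("semester 2", "evening"): "RMT",
}

for test in (pre_post, transfer):
    print(f"{test.name}: {test.total_items} items")
```

Swapping the RMT assignment between the daytime and evening sections means that time of day is not confounded with condition across the two semesters, although the groups remain non-equivalent because students were not randomly assigned.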

2.3. Results

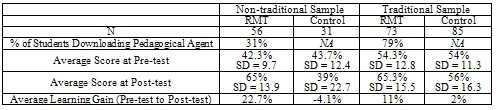

Students who did not complete either the pre-test or post-test and RMT students who did not complete all of the RMT modules were removed from the analysis (n = 6), leaving 56 students in the RMT condition and 31 in the control group. To address our first research question (Does RMT result in increased learning gains when used with non-traditional students?), we conducted an ANCOVA with the pre-test score as the covariate, the post-test score as the dependent variable, and the classroom condition (RMT vs. control) as the independent variable. We found that RMT students performed significantly better than control students on the basic assessment measure (post-test only), F(1, 84) = 54.78, p < .01, with an NRP effect size[26] of 1.39 standard deviations. RMT students had an average score of 32.6 (out of a possible 50; 65%) with a standard error of 1.1, while the control students had an average score of 19.5 (39%) with a standard error of 1.4. When only the transfer task was examined, RMT students significantly outperformed control students, F(1, 84) = 14.05, p < .01, effect size = .71 standard deviations. RMT students scored an average of 13/25 with a standard error of .64, while control students scored an average of 8.9/25 with a standard error of .86. On the total assessment (post-test + transfer), RMT students also significantly outperformed the control students, F(1, 84) = 42.99, p < .01, effect size = 1.21 standard deviations. A comparison of the measured learning outcomes of the non-traditional and traditional student samples is found in Table 2.
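To make the structure of this analysis concrete, the following Python sketch computes an ANCOVA F statistic by comparing two least-squares models: one with the pre-test covariate only, and one that adds the condition indicator. It is a minimal illustration, not the authors' analysis code. The function name `ancova_f` is hypothetical, and the scores are randomly generated placeholders rather than study data; only the group sizes (56 and 31) come from the text. With 87 students and three fitted parameters (intercept, covariate, condition), the error degrees of freedom are 87 - 3 = 84, matching the reported F(1, 84).

```python
import numpy as np


def ancova_f(pre, post, group):
    """F-test for a condition effect on post-test scores, adjusting for pre-test.

    group is coded 1 for RMT and 0 for control.
    Returns (F statistic, numerator df, denominator df).
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    group = np.asarray(group, dtype=float)
    n = len(post)
    ones = np.ones(n)
    full = np.column_stack([ones, pre, group])   # intercept + covariate + condition
    reduced = np.column_stack([ones, pre])       # intercept + covariate only

    def rss(design):
        # Residual sum of squares of the least-squares fit.
        beta, *_ = np.linalg.lstsq(design, post, rcond=None)
        resid = post - design @ beta
        return float(resid @ resid)

    df_num = full.shape[1] - reduced.shape[1]
    df_den = n - full.shape[1]
    rss_full = rss(full)
    f_stat = ((rss(reduced) - rss_full) / df_num) / (rss_full / df_den)
    return f_stat, df_num, df_den


# Synthetic placeholder scores (NOT the study's data); only the group sizes are from the text.
rng = np.random.default_rng(0)
n_rmt, n_control = 56, 31
group = np.concatenate([np.ones(n_rmt), np.zeros(n_control)])
pre = rng.normal(18, 4, size=n_rmt + n_control)
post = 10 + 0.5 * pre + 8 * group + rng.normal(0, 5, size=n_rmt + n_control)

f_stat, df_num, df_den = ancova_f(pre, post, group)
print(f"F({df_num}, {df_den}) = {f_stat:.2f}")
```

The model comparison assumes a common pre-test slope in both conditions, which is the standard ANCOVA assumption; whether it held in the study is not stated in the text.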


3. Conclusions

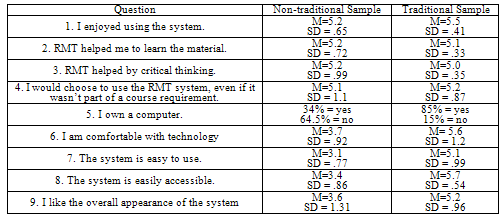

Using a non-traditional student sample, we found additional evidence that RMT boosts student learning outcomes above classroom instruction alone. The observed RMT learning effect sizes of .71-1.39 SDs (depending on the outcome measure used in the analysis) are impressive evidence of the system’s effectiveness. The demonstrated learning gains are comparable with lab-tested ITS, such as AutoTutor[13], and with estimates of the learning gains from one-on-one human tutoring[1]. This is particularly impressive given the fact that, unlike lab-based evaluations of ITS, the “naturalistic” approach used in the present study gave the researchers little control over the amount of time and attention paid to each RMT topic module. In addition, RMT was only used for 3-5 total hours over the course of the 16-week semester.

When the results from the traditional and non-traditional samples were compared, a number of interesting differences emerged. First, although students in both traditional and non-traditional samples indicated that they enjoyed using RMT and that they believed that it aided learning, major differences in student accessibility were observed. Approximately 64.5% of students in our non-traditional sample did not have access to a computer, compared with only 15% in our traditional sample. Since the animated pedagogical agent had to be downloaded, students using a lab or work computer could not use the agent. Not surprisingly, only 31% of students in the non-traditional sample downloaded the agent, while 79% of students in the traditional sample used the agent. Non-traditional students also gave lower ratings to the accessibility of the system and their own comfort with technology. This poses a significant problem, as a primary goal of the RMT system was to provide greater access to tutoring.

The second major difference centered on student perception of the animated pedagogical agent. In the non-traditional sample, students gave lower ratings to the overall look of the system. When asked for specific likes and dislikes, a large number (55%) of the students who successfully downloaded and used the agent commented on the agent’s appearance. The primary complaints were that the agent was difficult to understand and that the agent appeared to be a European American male. No student in the traditional sample voiced either of these concerns. Since previous research has suggested that various social cues, such as gender and perceived ethnicity[27], can affect the learning situation, it is possible that the largely female and African American non-traditional sample was not able to relate to the agent in a positive way. It is also possible that the agent distracted students from the learning situation, given the mixed evidence in support of animated pedagogical agents[28]. Future research would be needed to investigate this phenomenon, as the present study had a very low rate of agent usage, students self-selected into the agent condition, and there was no alternative agent for comparison.

We also found that learning effect sizes were higher with the non-traditional student sample than those found in the traditional sample. Students in our non-traditional sample performed worse at pre-test than those in the traditional sample. The scores at post-test, however, were equivalent, resulting in learning gains for the non-traditional sample that were double those of the traditional sample. This finding is consistent with the ITS literature concerning the benefits of tutorial dialog[25]. Tutorial dialog has been found to be more useful for those who are novices in a given area. If pre-test score can be used as a measure of preparedness or familiarity with the course material, then the non-traditional sample was clearly less familiar with the material than the traditional sample at the beginning of the course. A major limitation of direct score comparison between the two samples, however, is that the pre-test/post-test was altered significantly in order to increase reliability. The traditional sample was administered a 106-item pre-test/post-test with approximately 20 items per topic module, while the non-traditional sample was given a 50-item pre-test/post-test with exactly 10 items per topic module. In addition, the traditional sample did not take the 25-item transfer learning test. A follow-up study could confirm and clarify these results by using the 50-item pre-test/post-test and transfer test with another traditional sample.

Taken together, the data provide encouraging evidence of effectiveness. Specifically, the large learning gains observed in our non-traditional sample suggest that ITS may be particularly appropriate in this setting. However, clear directions for improvement also emerged. If an ITS is to be useful, it should reach people who do not have access to human tutoring. The large number of student access difficulties, together with the dislike of the animated agent, suggests that a new approach be taken in the design of the system. Thus, we plan not only to change the type of animated agent used, but also to investigate new techniques for using an agent that does not require a software download. We hope that these changes will make the system easier to use, especially for those uncomfortable with technology, and more widely accessible to students.

ACKNOWLEDGEMENTS

The authors wish to acknowledge their research assistant, Michelle Rogers, for her help with data entry and analysis.
