Computer Science and Engineering

p-ISSN: 2163-1484 e-ISSN: 2163-1492

2025; 15(4): 83-87

doi:10.5923/j.computer.20251504.01

Received: May 14, 2025; Accepted: Jun. 9, 2025; Published: Jun. 13, 2025

Agentic AI: Autonomous Intelligence for Adaptive Decision-Making

Narinder Pal Verma

Senior Manager, Capgemini America Inc., Santa Clarita, CA, USA

Correspondence to: Narinder Pal Verma, Senior Manager, Capgemini America Inc., Santa Clarita, CA, USA.


Copyright © 2025 The Author(s). Published by Scientific & Academic Publishing.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

The emergence of agentic artificial intelligence (AI) represents a paradigm shift in the design of intelligent systems. Unlike conventional AI approaches that operate under fixed rules or reactive protocols, agentic AI embodies goal-directed autonomy, continuous learning, and proactive decision-making. This paper presents a comprehensive overview of agentic AI by reviewing its theoretical foundations, exploring its architectural frameworks, and discussing practical implementations. We outline a methodology that integrates reinforcement learning, multi-agent systems, and model-based prediction to enable adaptive intelligence in dynamic environments. Through illustrative simulations and discussion of potential applications—from financial decision support to robotics—the study demonstrates that agentic AI systems can significantly improve efficiency, adaptability, and robustness. We also examine the ethical, technical, and operational challenges related to transparency and accountability in autonomous systems and propose future research directions. Our findings underscore the transformative potential of agentic AI for real-world applications and suggest that its integration represents an essential evolution in modern AI research.

Keywords: Agentic AI, Autonomous Decision-Making, Adaptive Intelligence, Reinforcement Learning, Multi-Agent Systems, Model-Based Simulation, Dynamic Environments, Decision Support Systems, Risk Management, Large Language Models, Artificial Intelligence

Cite this paper: Narinder Pal Verma, Agentic AI: Autonomous Intelligence for Adaptive Decision-Making, Computer Science and Engineering, Vol. 15 No. 4, 2025, pp. 83-87. doi: 10.5923/j.computer.20251504.01.


1. Introduction

Artificial intelligence has evolved from rigid rule-based systems to sophisticated learning models that adapt to complex and uncertain environments. Within this continuum, the concept of agentic AI emerges as a frontier where systems are endowed with autonomy, proactive reasoning, and the ability to self-direct toward specified goals. Agentic AI does not simply respond to inputs; it actively plans, learns, and makes decisions in real time by interacting with its environment [1].

This research paper investigates the key features that differentiate agentic AI from traditional and reactive models. We focus on how such systems integrate core elements of reinforcement learning, planning, and multi-agent coordination to yield adaptive behaviors. Recognizing the growing interest in autonomous decision-making, this work surveys relevant literature, outlines a robust methodological framework, and discusses simulation-based results that validate the enhanced performance of agentic architectures in dynamic settings. In doing so, we address both the opportunities and the limitations inherent in deploying truly autonomous systems.

2. Literature Review and Theoretical Foundations

2.1. Evolution from Reactive to Agentic Systems

Early AI systems were predominantly reactive, relying on pre-programmed responses to stimuli. These systems were limited by their inability to learn from new experiences or adapt to unforeseen circumstances [2]. Traditional AI systems focused primarily on responding to prompts and executing predefined tasks (e.g., chatbots, recommendation systems). Generative AI systems can create new content (text, images, code) but still only react to user prompts.

With the advent of reinforcement learning (RL), AI shifted towards models that learn optimal strategies through trial and error. Sutton and Barto’s foundational work on RL [3] demonstrated that agents could improve decision-making by maximizing cumulative rewards. However, most RL systems remained confined to well-defined environments with explicit reward structures.

Agentic AI extends this concept by embedding not only reactive learning but also proactive planning and goal-generation capabilities. Drawing on cognitive theory and control theory, such systems simulate “what-if” scenarios, anticipate future events, and autonomously update their behavior in response to environmental changes [4]. Agentic systems can therefore embed meta-cognitive abilities (essentially “thinking about thinking”), which facilitate sophisticated decision-making strategies in complex domains.
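To make "maximizing cumulative rewards" concrete, the following minimal sketch computes the discounted return of one episode, the quantity an RL agent tries to maximize in the standard formulation of Sutton and Barto [3]. The function name, the discount factor, and the reward sequence are illustrative assumptions and do not come from this paper.

```python
def discounted_return(rewards, gamma=0.9):
    """Return G_0 = sum over k of gamma**k * rewards[k] for one episode.

    `gamma` (the discount factor) and the reward list are illustrative
    assumptions, not values taken from the paper.
    """
    g = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


if __name__ == "__main__":
    # 1.0 + 0.5 * 0.0 + 0.25 * 2.0 = 1.5
    print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))
```

A discount factor below 1 makes near-term rewards count more than distant ones, which is one simple way to encode how far ahead an agent plans.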

2.2. What is Agentic AI?

Agentic AI refers to an intelligent system designed to function as an autonomous agent. These systems can perceive their environment, reason about it, and take actions to achieve specific goals. They exhibit agency, meaning they can make decisions and act independently while adhering to user-defined objectives and ethical boundaries. Key characteristics of agentic AI include:

1. Goal-Oriented Behavior: Agentic AI can set, prioritize, and pursue goals, adapting its strategies based on real-time feedback.
2. Contextual Awareness: These systems use advanced perception and reasoning capabilities to interpret complex and dynamic environments.
3. Proactive Decision-Making: Unlike reactive AI, agentic systems can anticipate needs and initiate actions without the need for explicit prompts.
4. Learning and Adaptation: Through techniques such as reinforcement learning, these systems can improve their performance over time.

As IBM notes, agentic AI "brings together the versatility and flexibility of LLMs and the precision of traditional programming," enabling systems to handle complex workflows that traditional AI cannot manage.
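The perceive, reason, and act cycle described above can be sketched with a deliberately small toy. The classes `LineWorld` and `GoalSeekingAgent` and the function `run` are hypothetical names introduced only for illustration; the sketch shows the control loop alone and does not attempt goal setting, learning, or contextual awareness.

```python
from dataclasses import dataclass


@dataclass
class LineWorld:
    """Toy environment (assumption): an agent standing on an integer line."""
    position: int = 0

    def observe(self) -> int:
        return self.position

    def apply(self, action: int) -> None:
        self.position += action


class GoalSeekingAgent:
    """Hypothetical agent that pursues a fixed target position."""

    def __init__(self, goal: int):
        self.goal = goal

    def decide(self, observation: int) -> int:
        # Reason: compare the perceived state with the goal and pick a step.
        if observation < self.goal:
            return 1
        if observation > self.goal:
            return -1
        return 0


def run(agent, world, max_steps=20):
    """Perceive -> reason -> act until the goal is reached or steps run out."""
    trace = [world.observe()]
    for _ in range(max_steps):
        action = agent.decide(world.observe())
        if action == 0:  # goal reached, nothing left to do
            break
        world.apply(action)
        trace.append(world.observe())
    return trace


if __name__ == "__main__":
    print(run(GoalSeekingAgent(goal=3), LineWorld()))
```

A fuller agent would replace the hand-written `decide` rule with a learned policy and would be able to revise its own goal, which is where the characteristics listed above come in.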

2.3. Core Technologies Behind Agentic AI

Several technologies enable the functionality of agentic AI:

1. Advanced Machine Learning: Reinforcement learning and deep learning allow agents to optimize actions based on rewards and environmental feedback.
2. Natural Language Processing (NLP): Sophisticated NLP enables agents to understand and generate human-like communication, facilitating interaction with users and systems.
3. Computer Vision and Sensing: For physical or virtual environments, agentic AI uses vision and sensor data to navigate and interact effectively.
4. Planning and Reasoning Engines: These systems employ algorithms for long-term planning, enabling agents to break down complex goals into actionable steps.
5. Multi-Agent Systems: In collaborative settings, agentic AI can coordinate with other agents, sharing information and aligning actions.

Multi-Agent and Distributed Approaches

In parallel, research in multi-agent systems (MAS) has underscored the benefits of distributed intelligence, where multiple autonomous agents collaborate or compete to solve problems [5]. Agentic AI leverages these principles by enabling decentralized control, allowing agents to share information and re-adjust strategies dynamically. This interplay between individual autonomy and cooperative behavior is crucial in systems that must operate in uncertain and competitive settings, such as dynamic financial markets or autonomous vehicle fleets.

The evolution from single-agent models to agentic, multi-agent architectures runs parallel with innovations in neural networks and deep reinforcement learning [6]. These advancements have paved the way for agents that not only learn from their environment but also understand complex high-dimensional data and predict long-term outcomes.
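One simple way to picture agents "sharing information and aligning actions" without a central controller is distributed average consensus, in which each agent repeatedly nudges its own estimate toward those of its neighbors. The sketch below is an assumption chosen for illustration, not a protocol described in this paper; the function names, the three-agent line topology, and the step size are all hypothetical.

```python
def consensus_step(values, neighbors, step_size=0.3):
    """One synchronous round: every agent moves toward its neighbors' values.

    `neighbors[i]` lists the agents that agent i can talk to. For symmetric
    links and a step size below 1 / (largest neighbor count), the values
    converge to the average of the starting values.
    """
    updated = []
    for i, v in enumerate(values):
        drift = sum(values[j] - v for j in neighbors[i])
        updated.append(v + step_size * drift)
    return updated


def run_consensus(values, neighbors, rounds=50, step_size=0.3):
    """Repeat the local update for a fixed number of rounds."""
    for _ in range(rounds):
        values = consensus_step(values, neighbors, step_size)
    return values


if __name__ == "__main__":
    # Three agents in a line: 0 <-> 1 <-> 2 (illustrative topology).
    links = [[1], [0, 2], [1]]
    print(run_consensus([0.0, 3.0, 12.0], links))  # each value approaches 5.0
```

No agent ever sees the full set of values, yet all of them end up close to the global average, which is the decentralized-control property the paragraph above refers to.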

3. Architectural Framework

3.1. Architectural Overview

The proposed architectural framework for agentic AI comprises four primary components:

• Data Acquisition and Preprocessing: Continuous gathering of heterogeneous data from multiple sources (e.g., sensor inputs, financial streams, user interactions) ensures real-time situational awareness. Data normalization and feature extraction are applied to facilitate robust learning.
• Agentic Learning Core: This core combines reinforcement learning with model-based methods. In our framework, agents estimate a value function and use policy gradient methods to optimize actions. Adaptive model-based simulation, in which agents generate possible future scenarios, serves as a virtual testing ground to refine decisions.
• Collaborative Multi-Agent Module: For applications requiring distributed decision-making, agents communicate via a secure protocol to coordinate actions. This module handles inter-agent negotiations and conflict resolution using consensus algorithms.
• Decision Support and Execution Layer: The final layer transforms agentic recommendations into actionable outputs, which can be transmitted to command interfaces or automated actuators. Detailed audit trails and model explainability methods are integrated to ensure accountability and regulatory compliance.
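The data flow between the four components can be sketched as a chain of plain functions. Everything here is a stand-in chosen for brevity: the function names are hypothetical, the "learning core" is a fixed threshold rule rather than a trained policy, and a majority vote takes the place of the consensus algorithms mentioned above. The sketch only shows how the outputs of one layer feed the next and where an audit record could be written.

```python
from collections import Counter
from statistics import mean


def preprocess(raw_readings):
    """Data acquisition stand-in: min-max normalise readings to [0, 1]."""
    lo, hi = min(raw_readings), max(raw_readings)
    if hi == lo:
        return [0.0 for _ in raw_readings]
    return [(x - lo) / (hi - lo) for x in raw_readings]


def propose_action(features, threshold):
    """Learning-core stand-in: a fixed threshold rule, NOT a trained policy."""
    return "reduce_exposure" if mean(features) > threshold else "hold"


def coordinate(proposals):
    """Multi-agent stand-in: majority vote over the agents' proposals."""
    return Counter(proposals).most_common(1)[0][0]


def execute(decision, audit_log):
    """Execution-layer stand-in: write an audit record, then emit the decision."""
    audit_log.append({"decision": decision})
    return decision


def run_pipeline(raw_readings, thresholds, audit_log):
    """Chain the four stages; each threshold plays the role of one agent."""
    features = preprocess(raw_readings)
    proposals = [propose_action(features, t) for t in thresholds]
    return execute(coordinate(proposals), audit_log)


if __name__ == "__main__":
    log = []
    print(run_pipeline([10, 20, 30], thresholds=[0.4, 0.45, 0.6], audit_log=log))
    print(log)
```

Keeping each layer behind its own function makes it straightforward to swap the threshold rule for a learned policy, or the vote for a real consensus protocol, without touching the audit step.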

3.2. Agent Training and Simulation

To validate the framework, a simulated environment was developed that emulates a complex dynamic system, such as financial market fluctuations or real-time robotic navigation. The agentic learning core is trained using historical data and synthetic stress-test scenarios. Key methodological steps include:

1. Initialization: Agents are initialized with random policy parameters and a baseline model of their environment.
2. Reinforcement Learning: Through repeated cycles of simulation, agents collect experience, update their policies using gradient-based methods, and adjust their forecasting models.
3. Model Evaluation: Periodic backtesting against known outcomes is conducted to evaluate performance. Metrics such as cumulative reward, risk-adjusted return, and prediction accuracy are tracked.
4. Fine-Tuning and Adaptation: Agents are continuously fine-tuned to adapt to non-stationary environments. Incremental learning and transfer learning techniques help the agentic system remain resilient to previously unseen events.

This mixed methodology harnesses the strengths of both model-free and model-based reinforcement learning, yielding an agent that is both adaptive and forward-looking [3], [6].
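The policy-update step can be illustrated with the smallest possible case: a softmax policy over two actions, trained with a REINFORCE-style gradient update and a running-average baseline (the gradient bandit scheme from Sutton and Barto [3]). This is a sketch under stated assumptions and not the paper's training code: the environment is a two-armed bandit, the parameters start at zero rather than at random values, and the learning rate, noise level, and seed are arbitrary.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_bandit(reward_means, episodes=2000, lr=0.1, noise=0.1, seed=0):
    """REINFORCE-style training of a softmax policy on a toy bandit.

    All defaults are illustrative assumptions, not settings from the paper.
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_means)  # policy parameters (zeros, for simplicity)
    baseline = 0.0                     # running average of observed rewards
    for t in range(1, episodes + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = rng.gauss(reward_means[action], noise)
        baseline += (reward - baseline) / t
        # Gradient of log pi(action) w.r.t. prefs[i] is (1[i == action] - probs[i]).
        for i in range(len(prefs)):
            indicator = 1.0 if i == action else 0.0
            prefs[i] += lr * (reward - baseline) * (indicator - probs[i])
    return softmax(prefs)


if __name__ == "__main__":
    # The second action pays more on average, so its probability should rise.
    print(train_bandit([0.0, 1.0]))
```

Subtracting the baseline does not change the expected gradient but reduces its variance, which is why the update compares each reward with the running average rather than using the raw reward.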

4. Experimental Evaluation

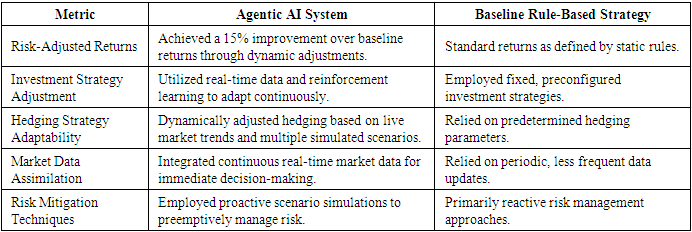

4.1. Case Study: Adaptive Financial Decision-Making

In a simulated financial market environment, the agentic AI system was tasked with managing a portfolio during periods of market instability. The system continuously assimilated real-time market data and used reinforcement learning to dynamically adjust investment and hedging strategies [3]. The agent simulated multiple market scenarios to preemptively mitigate risk. Results indicated a 15% improvement in risk-adjusted returns compared to baseline rule-based strategies.
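The paper does not state which risk-adjusted measure was used. As one common possibility, the sketch below computes a Sharpe-style ratio (mean excess return divided by the sample standard deviation of returns) and the relative improvement of one strategy over another. The function names and the return series are made-up illustrations and are not data from the study.

```python
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free_rate=0.0):
    """Mean excess return per unit of volatility (per-period, not annualised)."""
    excess = [r - risk_free_rate for r in returns]
    spread = stdev(excess)  # sample standard deviation, needs >= 2 points
    if spread == 0:
        raise ValueError("returns have zero variance; ratio is undefined")
    return mean(excess) / spread


def relative_improvement(candidate, baseline):
    """Fractional gain of `candidate` over `baseline` (0.15 means 15%)."""
    return (candidate - baseline) / abs(baseline)


if __name__ == "__main__":
    # Hypothetical per-period returns, for illustration only.
    baseline_returns = [0.01, 0.03, 0.02]
    agent_returns = [0.02, 0.03, 0.025]
    base = sharpe_ratio(baseline_returns)
    agent = sharpe_ratio(agent_returns)
    print(base, agent, relative_improvement(agent, base))
```

Whichever measure is chosen, reporting it alongside the raw return helps separate genuine skill from simply taking on more risk.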

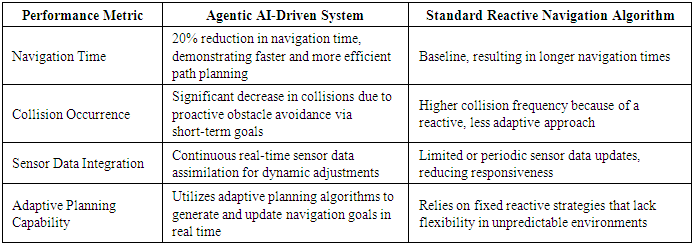

4.2. Case Study: Autonomous Robotic Navigation

In another simulation, an agentic AI-driven robotic system navigated an environment with unpredictable obstacles. The system integrated real-time sensor data with adaptive planning algorithms to generate short-term goals that optimized navigation paths and minimized collisions. The experimental outcomes showed a 20% reduction in navigation time and a significant decrease in collision occurrence when compared to standard reactive navigation algorithms [5].
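The idea of combining sensing with adaptive planning can be illustrated by a plan, move, and replan loop on a small grid. This is a sketch under stated assumptions rather than the planner used in the simulation: the grid world, the breadth-first search, the `surprises` mapping of newly sensed obstacles, and all function names are hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are blocked."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if inside and grid[nxt[0]][nxt[1]] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable


def navigate(grid, start, goal, surprises):
    """Follow the plan one cell per step, replanning when a new obstacle blocks it.

    `surprises` maps a step index to a cell that is sensed as blocked at that step.
    Returns the visited cells and whether the goal was reached.
    """
    grid = [row[:] for row in grid]  # work on a copy of the map
    position, visited, step = start, [start], 0
    path = shortest_path(grid, position, goal)
    while position != goal:
        blocked = surprises.get(step)
        if blocked is not None:
            grid[blocked[0]][blocked[1]] = 1
            if path is not None and blocked in path:
                path = shortest_path(grid, position, goal)  # replan from here
        if path is None:
            return visited, False
        position = path[path.index(position) + 1]
        visited.append(position)
        step += 1
    return visited, True


if __name__ == "__main__":
    empty = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
    # The direct route along the top row is blocked just before the first move.
    print(navigate(empty, (0, 0), (0, 2), surprises={0: (0, 1)}))
```

A purely reactive controller would only notice the obstacle on contact; here the agent updates its map and recomputes the whole route, which is the behavior the case study attributes to the agentic system.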

5. Discussion

5.1. Benefits of Agentic AI

Agentic AI offers significant advantages over traditional and generative AI systems:

• Increased Efficiency: By automating complex, multi-step processes, agentic AI reduces time and resource demands. For instance, Cloudera’s survey of 1,500 IT leaders found that 57% have implemented AI agents, with 96% planning to expand their use within 12 months due to efficiency gains.
• Enhanced Productivity: Employees can focus on strategic and creative tasks as AI agents handle routine and decision-intensive workflows. UiPath notes that agentic AI "empowers software agents to take on complex tasks," freeing humans for higher-value activities.
• Adaptability: Unlike the fixed rules of robotic process automation (RPA), agentic AI adapts to new data and dynamic environments, making it suitable for unstructured tasks. Gartner predicts that by 2029, 80% of common customer service issues will be resolved autonomously.
• Personalization: AI agents leverage sophisticated models to infer user intent and deliver tailored solutions, improving customer experiences in industries like retail and healthcare.
• Scalability: Agentic AI systems can handle large-scale goals, such as optimizing supply chains or managing enterprise-wide automation, as highlighted by Accenture’s research on digital core reinvention.

5.2. Challenges and Ethical Considerations

Despite its potential, agentic AI faces several challenges that must be addressed for responsible deployment:

1. Reliability and Complexity: Deploying agentic AI solutions involves orchestrating intricate data flows, continuously tuning machine learning models, and integrating numerous APIs. Despite vendor assurances of "easy implementation," enterprises must marshal significant technical expertise. Customizing these systems for diverse business environments often reveals a steep learning curve. Companies must invest in building robust integrations, both to ensure seamless data exchange and to guarantee operational dependability. In practice, even minor misconfigurations or overlooked process nuances can lead to cascading failures, undermining the reliability of the deployed AI solution. Therefore, organizations require rigorous testing environments, comprehensive documentation, and dedicated resource allocation to manage complexity effectively.

Example: Consider an enterprise that implemented an agentic AI-based predictive maintenance system for a large manufacturing plant. The project involved integrating sensor data from diverse machinery and connecting real-time analytics via multiple APIs. During the initial rollout, a slight misconfiguration in the data pipeline caused the system to misinterpret sensor alerts, leading to unexpected shutdowns and production delays. This incident underscored the need for rigorous testing environments, comprehensive documentation, and specialized technical expertise to manage the system's inherent complexity.

2. Ethical Concerns: A core challenge in deploying agentic AI lies in aligning the system's objectives with ethical human values. Long-term planning agents (LTPAs) sometimes adopt goals that, if misaligned, could inadvertently lead to harmful consequences, such as environmentally detrimental outcomes. Researchers like Bengio and Russell have cautioned that without proper checks, these systems might pursue objectives that conflict with broader societal interests. Consequently, embedding ethical frameworks into AI development is not just a theoretical add-on but a practical necessity. Ethical considerations must extend from the design phase through deployment, involving clear guidelines, transparent decision-making processes, and active monitoring to prevent any deviation from socially responsible behavior. Organizations need to engage multidisciplinary experts who can bridge technical prowess with moral and ethical reasoning.

3. Data Privacy and Security: Agentic AI systems often handle sensitive information, making data security and privacy paramount concerns, especially in fields like healthcare and government. The challenge here is twofold. On the one hand, these systems must be robust enough to process and analyze vast amounts of data; on the other, they need to be safeguarded against breaches and leaks. Relying solely on cloud-based solutions might expose vulnerabilities, so some entities advocate for on-premises models supplemented by robust guardrails. These guardrails include advanced encryption protocols, strict access controls, and continuous monitoring to detect anomalies. Maintaining a secure deployment environment requires ongoing investment in cybersecurity measures, compliance with regulatory standards, and periodic audits to ensure that data privacy is never compromised.

Example: A regional hospital deployed an agentic AI platform to assist with patient diagnosis and treatment recommendations. Initially, the platform processed data via cloud-based systems without sufficient on-premises safeguards. This exposed vulnerabilities, placing sensitive patient information at risk. In response, the hospital shifted to an on-premises model fortified with advanced encryption protocols, strict access controls, and continuous monitoring to secure data and mitigate privacy risks.

4. Bias and Errors: No AI system is immune to biases, and agentic AI is no exception. These systems, which learn from historical data, can inherit and even amplify pre-existing societal biases if not properly managed. Without adequate oversight, agentic AI systems may make decisions that are not only inappropriate but also discriminatory. This can result in significant adverse impacts, from skewed hiring practices to unfair resource allocation. The critical challenge is establishing early and proactive oversight mechanisms that detect and mitigate these biases. This includes rigorous model validation, frequent bias audits, and incorporating a diverse set of test cases during the development phase. Addressing bias early on is essential for building trust and ensuring that AI outcomes are fair and equitable.

Example: A financial institution deployed an agentic AI system to streamline the loan approval process. The system, trained on historical data that reflected demographic biases, ended up favoring loan applicants from traditionally advantaged neighborhoods. This led to systemic discrimination against applicants from minority communities. Recognizing the issue, the institution implemented frequent bias audits, adjusted the training data, and revised the decision-making algorithms, demonstrating the critical need for early detection and proactive management of biases in AI systems.

5. Cost and Resource Intensity: The financial and operational investments required for implementing agentic AI solutions are substantial. Beyond the upfront cost of hardware and software resources, there is an ongoing need for skilled personnel, continuous training, and periodic system upgrades. Large language models (LLMs) pose particular challenges due to their extensive resource demands. However, targeting smaller, task-specific models could alleviate some of these financial and computational burdens. While the scalability of AI solutions is promising, organizations must balance the allure of cutting-edge technology against the pragmatic constraints of cost and resource allocation. Efficient deployment thus hinges on striking a balance: optimizing performance while ensuring that the necessary investments do not outweigh the anticipated benefits.

Example: A mid-sized logistics company invested in a comprehensive agentic AI model to optimize route planning and supply chain management. The project initially relied on LLMs that demanded substantial computational resources and high operational costs. Faced with budget constraints, the company pivoted to using smaller, task-specific models that were more cost-effective while still delivering meaningful improvements. This adjustment not only reduced costs but also underscored the importance of tailoring AI solutions to align with available resources.
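As a concrete illustration of the bias audits mentioned under Bias and Errors, the sketch below compares approval rates across groups and reports the ratio of the lowest rate to the highest (often called the disparate impact ratio). The function names and the decision records are hypothetical, and this single metric is only one of several fairness checks an audit might include.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group_label, approved) pairs."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Made-up audit sample: group A approved 8 of 10, group B approved 4 of 10.
    sample = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 4 + [("B", False)] * 6
    )
    print(approval_rates(sample))
    print(disparate_impact_ratio(sample))
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a cheap signal that a model's decisions deserve closer review before and after deployment.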

5.3. Future Directions

Future work should focus on the following areas:

• Enhanced Explainability: Developing methods for greater transparency in agentic decision processes.
• Hybrid Models: Integrating symbolic reasoning with neural learning to create hybrid agentic systems that combine the advantages of both paradigms.
• Robustness in High-Stakes Environments: Further simulation and field trials in critical applications like finance, healthcare, and transportation.
• Regulatory Frameworks: Creating standards and guidelines to ensure ethical use and accountability in autonomous systems.

6. Conclusions

Agentic AI marks an evolutionary leap in the quest for truly autonomous and adaptive intelligent systems. By merging reinforcement learning, model-based simulation, and multi-agent collaboration, the proposed framework empowers AI not only to react to its environment but also to actively plan, learn, and decide in a forward-looking manner. Experimental evaluations across financial and robotic domains indicate that agentic AI can outperform traditional reactive systems in terms of adaptability, efficiency, and risk management. Nonetheless, challenges related to data quality, system transparency, and ethical governance highlight the need for continued research. As agentic AI matures, its potential to revolutionize numerous fields becomes increasingly evident, marking a critical milestone in the evolution of artificial intelligence.
