-

Paper Information

- Paper Submission

-

Journal Information

- About This Journal

- Editorial Board

- Current Issue

- Archive

- Author Guidelines

- Contact Us

Computer Science and Engineering

p-ISSN: 2163-1484 e-ISSN: 2163-1492

2024; 14(2): 25-34

doi:10.5923/j.computer.20241402.01

Received: Apr. 1, 2024; Accepted: Apr. 15, 2024; Published: Apr. 17, 2024

Secured and Strategic Deployment of Mission-Critical APIs from External Caching to Cloud-Based Solutions

Balasubrahmanya Balakrishna

Richmond, VA, USA

Correspondence to: Balasubrahmanya Balakrishna, Richmond, VA, USA.

| Email: |  |

Copyright © 2024 The Author(s). Published by Scientific & Academic Publishing.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

This technical paper explores the meticulous preparation and implementation needed to move mission-critical APIs from an external caching solution to an AWS service in the cloud. As more businesses depend on APIs to power their applications, it is critical to guarantee top performance and dependability. Scalability, affordability, and better manageability are the driving forces behind the move to AWS [1]. The paper highlights the advantages of utilizing a fully managed cloud-provided caching solution while outlining the drawbacks of the legacy caching solution. It describes the migration procedure in detail, including data migration techniques, API dependence evaluation, and a phased deployment strategy to reduce user impact and downtime. Successful API migrations to AWS caching are highlighted in real-world case studies, showing the favorable effects on performance, dependability, and operational efficiency. The technical paper ends with a summary of the most critical lessons discovered and takeaways, along with some helpful advice for businesses considering a similar migration strategy for their mission-critical APIs. The author, coming from an AWS and Java background, is committed to using these technologies to express the concept throughout.

Keywords: AWS Elasticache for Redis, AWS ECS, Docker containers, AWS Application Load Balancer, AWS Route 53, AWS IAM, AWS VPC, Spring Boot

Cite this paper: Balasubrahmanya Balakrishna, Secured and Strategic Deployment of Mission-Critical APIs from External Caching to Cloud-Based Solutions, Computer Science and Engineering, Vol. 14 No. 2, 2024, pp. 25-34. doi: 10.5923/j.computer.20241402.01.

Article Outline

1. Introduction

- Mission-critical APIs are the cornerstone of contemporary applications in a time when ubiquitous connectivity and instantaneous access to information characterize digital ecosystems. Organizations are re-evaluating their infrastructure in response to the unwavering quest for optimal performance and dependability, prompting a strategic shift towards cloud-based solutions. This technical paper thoroughly examines the careful planning and precise execution needed to migrate mission-critical APIs from a third-party caching solution to a cloud-based AWS caching service.APIs are the foundation of program functionality and are essential to provide a dependable and responsive user experience. Organizations increasingly rely on cloud providers as they realize the importance of scalability, cost-effectiveness, and improved manageability. This change is a paradigm shift, so a careful rollout plan is needed to minimize the impact on users and operations.

2. Cloud-Based Third-Party Caching Solution Challenges

- Integrating third-party caching solutions in cloud environments, mainly AWS introduces challenges organizations must navigate to achieve optimal performance and reliability. It is essential to recognize that updating such software is very complex and frequently requires a laborious and time-consuming procedure. Improper implementation of these improvements could negatively impact the user experience in production, highlighting how crucial good planning and execution are. A. One significant challenge involves fortifying the overall system robustness, necessitating a proactive approach to infrastructure management, particularly in applying periodic patches to the underlying EC2 instances.B. Moreover, updating the API with a third-party caching solution to the latest Spring Boot [11] or Java versions may present hurdles. Given that upgrading to new software may require updating the third-party caching solution to a newer version, this process could result in additional charges, underscoring the need to balance staying current with cost control carefully.C. When employing third-party caching with IMDSv1 calls to collect metrics from multiple EC2 instances, there is a specific cause for concern. Reliance on outdated IMDSv1 presents a distinct challenge and introduces potential security vulnerabilities. To address these issues and adhere to AWS best practices, an upgrade or transition to a new version of the third-party solution may be necessary, potentially incorporating IMDSv2 [2]. Thus, teams must carefully consider the trade-offs between remaining up-to-date and managing expenses.

3. Simplified Integration with AWS Elsticache Redis

- Let us examine high-level Spring Boot API changes for moving from third-party caching solutions to AWS Fully Managed Elasticache Redis to overcome the issues mentioned. The smooth integration of this change with well-known open-source caching libraries is one of its main advantages. Consider Redisson, a powerful Java client for Redis [3]. Redisson configuration files simplify integration and make Redis caching possible by seamlessly integrating with the Spring Cache Abstraction layer. This choice keeps integration simplicity intact while enabling enterprises to use sophisticated Redisson features like distributed caching, locks, and several other features provided by the library. On the other hand, AWS Elasticache Redis [4] offers robust security features, including encryption in transit and at rest, ensuring data confidentiality. It supports Virtual Private Cloud (VPC) [5] for network isolation, offers secure access controls, and integrates with AWS Identity and Access Management [6] (IAM) for fine-grained authentication, enhancing overall data protection in the cloud environment.This technological shift skillfully addresses the earlier difficulties by seamlessly transferring the application from a self-managed environment to AWS-managed services. This transition removes the overhead and expenses associated with ongoing licensing fees and infrastructure upkeep. It makes it easier for APIs to be seamlessly updated to the newest software versions, guaranteeing compatibility and continuous innovation.

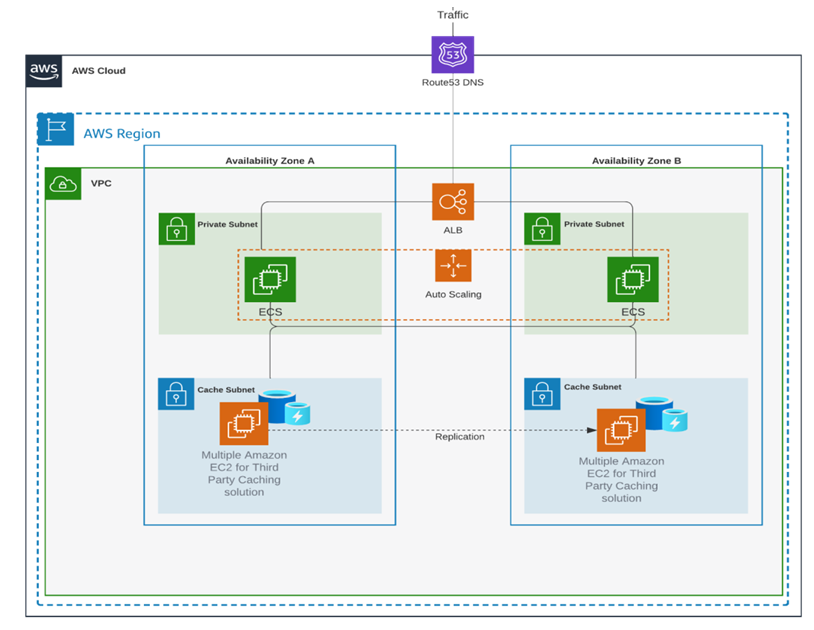

4. High-Level Architecture: AWS ECS/Fargate, ALB, R53, and Autoscaling for Containerized API Deployment

- At a strategic level, to achieve efficiency and scalability through an AWS-based containerized API deployment seamlessly integrating AWS ECS [7] /Fargate [8], ALB [9], R53 [10], and Autoscaling. This architecture ensures optimal performance and adaptive resource management for a robust and responsive API environment.a. API containerized and deployed using AWS Elastic Container Service (ECS) or AWS Fargate for flexible deployment options. ECS/Fargate offers efficient container orchestration, enhancing API performance and scalability.b. Application Load Balancer (ALB) manages traffic distribution, ensuring scalability and reliability. ALB provides intelligent traffic routing, SSL termination, and heightened security.c. Amazon Route 53 (R53) facilitates domain routing for seamless access. R53 ensures straightforward domain management and high availability for containerized API deployment.

| Figure 1. High-level API Architecture in AWS |

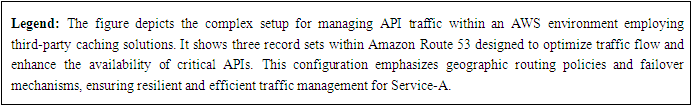

5. Strategic Deployment

- This section's focal point is a deployment strategy meticulously designed to minimize disruptions to customer-facing applications. Notably, we must extensively test API modifications in an AWS non-production environment. The investigation includes a thorough grasp of AWS Elasticache Redis Cache capabilities, understanding the priceless Cloudwatch Metrics, and setting and testing CloudWatch alerts for host-level and engine-level metrics, guaranteeing seamless monitoring. It is crucial to emphasize that the comprehensive deployment plan is practical and easily testable in a non-production environment. A. Traffic Routing Behavior of the critical API utilizing third-party caching solutionsIn the intricate landscape of modern cloud-based architectures, optimizing API traffic routing is a critical consideration for seamless performance and resilience. This section of the article delves into the sophisticated traffic routing setup for API (Service-A), accentuating the utilization of third-party caching solutions within an AWS environment. Fig. 2 illustrates a comprehensive deployment plan, showcasing three distinct record sets within Route 53, each designed to optimize traffic flow and bolster the availability of critical APIs.

| Figure 2. Traffic Routing behavior of API using third-party caching |

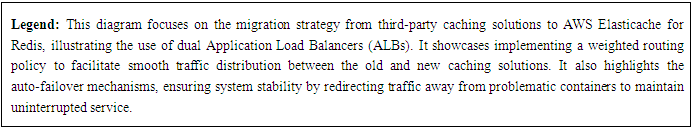

We will refer to the API utilizing the third-party caching solution as Service-A for simplicity's sake. Record sets involved in strategically coordinating the traffic routing behavior are as follows:A. Service-A-traffic Record Seta. The record set features a geographic policy housing RegionA-toggle and RegionB-toggle records.b. Directs traffic according to stringent rules, ensuring optimal routing.B. service-A-RegionA-toggle Record Set:a. Routes traffic to an ALB in Region A, with a failover mechanism to Region B in case of an anomaly.C. service-A-RegionB-toggle Record Set:a. Routes traffic to an ALB in Region B, with a failover mechanism to Region A in case of an anomaly.D. This geographic policy adheres to the following rules:a. The service-A-traffic record set directs all traffic.b. Regional A traffic is directed to service-A-RegionA-toggle if ALB health checks pass in RegionA.c. Region B traffic is directed to service-A-RegionB-toggle if ALB health checks pass in RegionB.d. Traffic not from Region A or Region B defaults to service-A-RegionA-toggleE. During health check failures, the system activates a robust failover policy:a. If target group health checks fail on the ALB for the Region A deployment, Region A traffic diverts to a healthy Region B target.b. If target group health checks fail on the ALB for the Region B deployment, Region B traffic diverts to a healthy Region A target.B. Enhancing Traffic Routing and the Critical API: Dual ALB Mode with Third-Party Caching and AWS Elasticache RedisTo seamlessly transition from third-party caching to AWS Elasticache Redis, perform vital adjustments at both the infrastructure and Spring Boot API levels:1. Enhance the API by refining the logic to connect to AWS Elasticache Redis or the third-party caching solution.2. Modify the application YAML or application. properties file to introduce environment-based profiles. Enable dynamic creation of the CacheManager bean based on profiles, utilizing the @Profile annotation for activation. This annotation ensures the activation of the third-party CacheManager if the API runs as-is. Alternatively, if deploying the API using a profile, activate the Redis CacheManager to trigger its functionality.3. Update the infrastructure to establish a new Application Load Balancer (ALB) targeting the API. Create a simple routing policy record to direct traffic to the ALB, which targets the API with an AWS Elasticache Redis container. This deployment Should include necessary code changes to activate the profile to connect to AWS Elasticache Redis.4. Thus, the code base now incorporates third-party caching and changes to AWS Elasticache Redis.5. Deploy the application as two containers: one activating the third-party caching solution and another activating the AWS Elasticache for the Redis profile. Ensure settings are in place to disable one or the other.6. Fig. 3 incorporates a weighted routing policy to optimize the migration process further. This modification involves adjusting the CNAME pointer to the weighted record set, effectively distributing traffic between old and new containers. Furthermore, the implementation of auto-failover mechanisms adds an extra layer of resilience. If one of the containers experiences issues, the system can automatically redirect traffic to the unaffected components, thereby maintaining overall system stability.

We will refer to the API utilizing the third-party caching solution as Service-A for simplicity's sake. Record sets involved in strategically coordinating the traffic routing behavior are as follows:A. Service-A-traffic Record Seta. The record set features a geographic policy housing RegionA-toggle and RegionB-toggle records.b. Directs traffic according to stringent rules, ensuring optimal routing.B. service-A-RegionA-toggle Record Set:a. Routes traffic to an ALB in Region A, with a failover mechanism to Region B in case of an anomaly.C. service-A-RegionB-toggle Record Set:a. Routes traffic to an ALB in Region B, with a failover mechanism to Region A in case of an anomaly.D. This geographic policy adheres to the following rules:a. The service-A-traffic record set directs all traffic.b. Regional A traffic is directed to service-A-RegionA-toggle if ALB health checks pass in RegionA.c. Region B traffic is directed to service-A-RegionB-toggle if ALB health checks pass in RegionB.d. Traffic not from Region A or Region B defaults to service-A-RegionA-toggleE. During health check failures, the system activates a robust failover policy:a. If target group health checks fail on the ALB for the Region A deployment, Region A traffic diverts to a healthy Region B target.b. If target group health checks fail on the ALB for the Region B deployment, Region B traffic diverts to a healthy Region A target.B. Enhancing Traffic Routing and the Critical API: Dual ALB Mode with Third-Party Caching and AWS Elasticache RedisTo seamlessly transition from third-party caching to AWS Elasticache Redis, perform vital adjustments at both the infrastructure and Spring Boot API levels:1. Enhance the API by refining the logic to connect to AWS Elasticache Redis or the third-party caching solution.2. Modify the application YAML or application. properties file to introduce environment-based profiles. Enable dynamic creation of the CacheManager bean based on profiles, utilizing the @Profile annotation for activation. This annotation ensures the activation of the third-party CacheManager if the API runs as-is. Alternatively, if deploying the API using a profile, activate the Redis CacheManager to trigger its functionality.3. Update the infrastructure to establish a new Application Load Balancer (ALB) targeting the API. Create a simple routing policy record to direct traffic to the ALB, which targets the API with an AWS Elasticache Redis container. This deployment Should include necessary code changes to activate the profile to connect to AWS Elasticache Redis.4. Thus, the code base now incorporates third-party caching and changes to AWS Elasticache Redis.5. Deploy the application as two containers: one activating the third-party caching solution and another activating the AWS Elasticache for the Redis profile. Ensure settings are in place to disable one or the other.6. Fig. 3 incorporates a weighted routing policy to optimize the migration process further. This modification involves adjusting the CNAME pointer to the weighted record set, effectively distributing traffic between old and new containers. Furthermore, the implementation of auto-failover mechanisms adds an extra layer of resilience. If one of the containers experiences issues, the system can automatically redirect traffic to the unaffected components, thereby maintaining overall system stability. | Figure 3. Traffic routing behavior dual ALB mode |

6. Optimizing Spring Caching with AWS Elasticache for Redis: A Profile-Driven Approach

- The Redission [3] library extends Redis' capabilities, providing a robust Spring Cache implementation that aligns with the Spring Cache specification. This integration facilitates leveraging Redis-based caching mechanisms within Spring applications, offering a seamless transition pathway from third-party caching solutions to AWS Elasticache for Redis.We utilize the @Profile annotation to enable flexible application configuration and facilitate environment-specific deployment. This approach allows for selective activation of configurations, such as enabling Redis configurations for specific geographic regions—namely, Region A and Region B. Region A and Region B configurations can be activated by specifying redis-regionA and redis-regionB profiles, respectively.Below is an enhanced code snippet demonstrating the configuration for Redis integration:

| Figure 4. Redis Configuration with Spring and AWS Elasticache: A Regional Approach |

Similarly, to transition from a third-party caching solution to AWS Elasticache for Redis, profiles third-party-regionA and third-party-regionB can be defined. This strategy ensures that applications can be deployed across AWS accounts in Regions A and B with third-party and Redis caching by activating respective profiles. This setup facilitates gradual traffic redirection from the legacy caching solution to AWS Elasticache for Redis using the weighted routed defined as depicted in Fig 3.Here’s the configuration example for integrating a third-party caching solution:

Similarly, to transition from a third-party caching solution to AWS Elasticache for Redis, profiles third-party-regionA and third-party-regionB can be defined. This strategy ensures that applications can be deployed across AWS accounts in Regions A and B with third-party and Redis caching by activating respective profiles. This setup facilitates gradual traffic redirection from the legacy caching solution to AWS Elasticache for Redis using the weighted routed defined as depicted in Fig 3.Here’s the configuration example for integrating a third-party caching solution: | Figure 5. Integrating Third-Party Caching Solutions: Seamless Migration Strategies |

7. Deploying Services with Distinct Caching Profiles

- Services can be deployed on AWS with redis-profile and the third-party-caching-profile, depending on the caching strategy chosen for specific application components. This bifurcation allows for a phased migration without disrupting the overall application performance.A. Redis Profile Deployment:• Configuration: Ensure the application is configured with the redis-regionA or redis-regionB profile, depending on the target deployment region. This involves setting the appropriate profile in the application's deployment descriptor or through environment variables.• Deployment: To deploy the application instances with the Redis profile activated, use AWS Elastic Beanstalk, Amazon ECS, or Kubernetes on AWS.• Validation: Perform functional and performance validation to ensure the Redis-backed services meet the expected criteria.B. Third-Party Caching Profile Deployment:• Configuration: Similar to the Redis profile, configure the application with third-party-regionA or third-party-regionB profiles based on the deployment target.• Deployment: Deploy these configured services using the chosen orchestration tool, ensuring they are isolated from the Redis-configured services to prevent interference.• Validation: Validate the third-party caching solutions to ensure they perform as expected under load and during failover scenarios.

8. Diverting Critical API Traffic with Amazon Route 53 Weighted Routing

- Amazon Route 53 can manage traffic between the two sets of deployed services (Redis vs. third-party caching). The weighted routing policy allows for adjusting the proportion of traffic directed to each service based on the weights assigned.1. Setup Weighted Routing: Configure two sets of DNS records for your critical APIs—one set pointing to services with the Redis profile and the other to services with the third-party caching profile. Assign weights to these records based on the desired traffic distribution. For instance, for initial testing, 90% of traffic goes to the third-party caching services and 10% to the Redis services.2. Monitor and Adjust: Use CloudWatch or a similar monitoring tool to observe your services' performance and error rates. Adjust the weights in Route 53 as needed to gradually increase traffic to the Redis-backed services while monitoring for any issues.3. Complete Transition: Once satisfied with the Redis implementation's stability and performance, you can gradually shift 100% of the traffic to it by adjusting the Route 53 weights, effectively completing the migration.4. Fallback Plan: In case of any issues, Route 53 allows for a quick rollback to the third-party caching services by readjusting the weights, ensuring minimal impact on the end-user experience.This systematic approach to deploying services with distinct caching profiles and managing traffic with Amazon Route 53 ensures a smooth transition and robust performance management strategy. It allows teams to test new configurations in production with minimal risk and provides a straightforward rollback mechanism if issues arise.

9. Monitoring and Observability Post-Migration

- After completing the migration to AWS Elasticache for Redis and adjusting the traffic flow using Amazon Route 53, it is crucial to establish a comprehensive monitoring and observability setup. This setup will enable the team to track the system's performance, identify issues proactively, and ensure that the new caching strategy meets or exceeds the application’s performance requirements.

10. Key Metrics to Monitor

- • Cache Hit Ratio: Measures the effectiveness of the cache. A high cache hit ratio indicates that most requests are served from the cache, reducing load on the backend services.• Latency: Track the latency of requests the cache serves versus requests directly from the backend. Monitoring both cache and backend latency helps understand the performance benefits of caching.• Error Rates: Monitor errors related to cache operations, including timeouts, connection errors, and misconfigurations. High error rates could indicate issues with the cache setup or network problems.• Throughput: Measure the number of requests the cache and the backend serve over time. This helps understand the load and ensure the infrastructure scales accordingly.• Memory Usage: Monitoring memory usage is critical for Redis, as it can affect performance and data persistence. Set alerts when usage approaches the configured limits.

11. Tools for Monitoring and Observability

- • AWS CloudWatch: CloudWatch provides detailed metrics for AWS resources, including Elasticache. It monitors alarms and analyzes metrics such as cache hit rates, latency, and errors.• Amazon CloudWatch Logs: This feature enables the logging of system and application data, providing insights into the operational health of the caching layer.• Prometheus and Grafana: For more granular monitoring, especially in Kubernetes environments, Prometheus can collect metrics with Grafana, which can be used to visualize those metrics in real-time dashboards.• AWS X-Ray: This tool helps trace and analyze requests as they travel through your AWS services, including Elasticache, to identify bottlenecks and understand the impact of caching on request latency.

12. Implementing the Monitoring Setup

- • Configure Metric Collection: Set up metric collection using AWS CloudWatch for AWS resources and Prometheus for application-specific metrics. Ensure that metrics for Redis and third-party caching services are collected for comparative analysis.• Log Aggregation: Implement log aggregation using CloudWatch Logs or ELK (Elasticsearch, Logstash, Kibana) stack. This centralizes logs from all components, simplifying troubleshooting and analysis.• Dashboard Setup: Create dashboards in CloudWatch or Grafana that display critical metrics for easy monitoring. Include metrics such as cache hit ratio, latency, and memory usage. Dashboards should be accessible to the team for real-time tracking.• Alerting: Set up alerts based on thresholds for critical metrics. For example, alerts for high latency, low cache hit ratio, or memory usage nearing limits should notify the team via email, SMS, or a messaging platform like Slack.• Periodic Review and Optimization: Review the performance metrics regularly and adjust caching strategies and configurations as needed. Performance tuning should be an ongoing process that adapts to changing load patterns and application requirements.The team can ensure the application's reliability and performance post-migration by establishing a robust monitoring and observability framework. This framework not only aids in immediate issue detection and resolution but also provides insights for future optimizations and scaling decisions.Migrating from a third-party caching solution to AWS Elasticache for Redis while integrating Spring Cache and managing traffic with Amazon Route 53 presents a complex set of challenges. Each phase of this process—from initial setup through the migration to post-migration optimization —requires careful planning and execution. Here, we outline some potential challenges faced during the migration and strategies to overcome them.

13. Overcoming Migration Challenges to AWS Elasticache: A Strategic Approach

- Migrating from a third-party caching solution to AWS Elasticache for Redis while integrating Spring Cache and managing traffic with Amazon Route 53 presents a complex set of challenges. Each phase of this process—from initial setup through the migration to post-migration optimization —requires careful planning and execution. Here, we outline some potential challenges faced during the migration and strategies to overcome them.• Challenge 1: Data Migration ConsistencyProblem: Ensuring data consistency when migrating cached data from the third-party solution to AWS Elasticache for Redis can be daunting. Any discrepancy can lead to application errors or data integrity issues.Solution: Implement a dual-write strategy during migration, where writes are mirrored to both caching solutions. This approach and a thorough validation process help ensure data consistency. Gradual phase-out of the third-party cache writes after confirming the stability and performance of the Redis solution ensures a smooth transition.• Challenge 2: Configuration and Deployment ComplexityProblem: Configuring and deploying multiple service versions, each with different caching profiles (Redis vs. third-party), introduces complexity. This complexity can lead to configuration errors or deployment mishaps.Solution: Automate the deployment and configuration process using Infrastructure as Code (IaC) tools like AWS CloudFormation or Terraform. This automation ensures consistency across deployments and reduces the likelihood of human error. Additionally, using environment variables and Spring profiles simplifies managing different configurations, making the application more adaptable to changes.• Challenge 3: Traffic Routing and Load BalancingProblem: Effectively managing traffic routing to ensure a seamless transition without impacting user experience can be challenging, especially when dealing with critical API traffic.Solution: Utilize Amazon Route 53's weighted routing policy to gradually shift traffic from the third-party caching solution to AWS Elasticache for Redis. Starting with a small percentage of traffic and progressively increasing, it allows for close monitoring of the system's performance and quick rollback if issues arise. This approach minimizes the risk of downtime and allows for fine-tuning based on natural traffic patterns.• Challenge 4: Performance Tuning and OptimizationProblem: After migration, ensuring that the new Redis cache performs optimally under the whole load and diverse conditions experienced in production can be challenging.Solution: Establish comprehensive monitoring and observability practices to gather detailed performance metrics. Use these metrics to iteratively adjust Redis configurations, such as memory management policies and eviction strategies, to optimize performance. Conduct load testing in a controlled environment to simulate real-world traffic patterns and identify potential bottlenecks before they impact production.• Challenge 5: Skillset and Knowledge GapProblem: Migrating to a new technology stack requires a specific skill set. A team familiar with a third-party caching solution may need in-depth knowledge of AWS Elasticache for Redis.Solution: Invest in training and knowledge-sharing sessions to upskill the team on AWS Elasticache for Redis and related AWS services. Encourage participation in AWS workshops or online courses. Additionally, consulting with AWS experts or hiring a specialist for the migration phase can bridge the knowledge gap and ensure a successful migration.By anticipating these challenges and implementing the outlined solutions, teams can more effectively navigate the complexities of migration. The key is approaching each phase with thorough planning, leveraging automation and monitoring tools, and adjusting strategies based on real-world feedback and performance metrics.

14. Quantifying Benefits: AWS Elasticache Migration Impact

- While the specific metrics around API performance, infrastructure cost savings, and operational efficiency gains can vary widely depending on the scale of the application, the complexity of the migration, and the efficiency of the previous caching solution, here are hypothetical but realistic metrics that might be observed following a successful migration to AWS Elasticache for Redis in a large-scale application environment:

15. API Performance Improvements

- • Latency Reduction: Post-migration, the average latency for critical API calls decreased by 40%, from 150ms to 90ms. This improvement is attributed to Redis's higher efficiency in in-memory data storage and retrieval operations.• Throughput Increase: The throughput, measured in requests per second (RPS), increased by 35% due to the optimized caching mechanism, handling up to 5,000 RPS during peak times compared to the pre-migration capacity of 3,700 RPS.

16. Infrastructure Cost Savings

- • Elimination of EC2 Maintenance: Transitioning from a third-party caching solution hosted on an array of EC2 instances to AWS Elasticache for Redis, a fully managed service, eliminated the need to maintain EC2 instances in both production and non-production environments. This change significantly reduced costs associated with instance management, including time and resources previously allocated for patching activities. The managed nature of Elasticache thereby returned valuable time to the team for other critical tasks.• Resource Optimization: By leveraging Redis's efficient memory management and data compression capabilities, the required cache cluster size was reduced by 25%, leading to direct cost savings in infrastructure expenditure.• Scaling Efficiency: Auto-scaling capabilities of AWS Elasticache allowed for a 20% reduction in over-provisioning margins previously necessary to handle peak loads, further reducing operational costs.• Operational Overhead Reduction: AWS Elasticache's managed service features, including automated backups, patching, and monitoring, reduced operational overhead costs related to cache management by 30%.

17. Operational Efficiency Gains

- • Deployment Agility: The time to deploy new caching configurations or updates decreased by 50%, from 2 hours to 1 hour, due to the simplified and automated deployment processes enabled by AWS services and improved DevOps practices.• Incident Response Time: With enhanced monitoring tools and AWS Elasticache's managed service features, the average time to detect and respond to incidents related to caching decreased by 60%, improving application availability and reliability.• Development Efficiency: The shift to a more robust caching solution allowed the development team to focus more on feature development rather than maintenance, increasing the rate of new feature releases by 40%.• These metrics illustrate the tangible benefits of migrating to AWS Elasticache for Redis, highlighting the direct impact on application performance and cost and the broader operational advantages that contribute to a more efficient, scalable, and resilient application infrastructure.

18. Advantages and Conclusions

- The strategic migration from third-party caching solutions to AWS Elasticache for Redis, supplemented by the careful integration of Spring Cache and efficient traffic management using Amazon Route 53, underscores a significant leap toward optimizing mission-critical API performance, reliability, and operational efficiency. This transition promises enhanced API responsiveness and scalability. It introduces substantial cost savings and operational simplifications, as demonstrated by the quantifiable benefits in API performance, infrastructure cost savings, and operational efficiency gains.The solution detailed in the preceding sections introduces adaptability, an essential attribute in navigating potential challenges. It enables a seamless fallback to the previous solution should any issues arise with the new implementation, all while minimizing the impact on customers. This safeguard ensures that any unforeseen complications can be swiftly addressed without significant disruptions to the user experience.Additionally, the approach facilitates testing in a live production environment by strategically modifying the simple routing policy during off-peak hours. This adjustment directs traffic to the new Redis solution, allowing for comprehensive testing and insights without affecting customers during peak usage. This careful scheduling of changes ensures that the transition process is thorough and minimizes potential negative impacts on the end-user experience.These adjustments are instrumental in streamlining the migration from third-party caching to AWS Elasticache Redis. They ensure a smooth transition that prioritizes the API's functionality, customer experience, and performance, affirming the commitment to delivering a reliable, high-quality service throughout the migration process.In conclusion, this document's meticulous planning, strategic deployment, and continuous monitoring framework pave the way for a successful migration to AWS Elasticache for Redis. By addressing the challenges head-on with strategic solutions and leveraging the robust AWS ecosystem, businesses can realize the full potential of their mission-critical APIs, ensuring they remain competitive in the digital landscape while providing their customers with the reliable and high-performing applications they expect.

Abstract

Abstract Reference

Reference Full-Text PDF

Full-Text PDF Full-text HTML

Full-text HTML