Hajji Tarik, Mohcine Kodad, Jaara El Miloud

Laboratoire de Recherche en Informatiques (LaRI), Faculté des Sciences d’Oujda (FSO), Université Mohammed Première (UMP), Oujda, 60000, Maroc (LaRI-FSO-UMP)

Correspondence to: Hajji Tarik, Laboratoire de Recherche en Informatiques (LaRI), Faculté des Sciences d’Oujda (FSO), Université Mohammed Première (UMP), Oujda, 60000, Maroc (LaRI-FSO-UMP).


Copyright © 2014 Scientific & Academic Publishing. All Rights Reserved.

Abstract

The objective of this work is to present a new method that uses artificial neural networks to restore and correct errors in digital images degraded by a poor capture, also called moving images. We show that artificial neural networks are an effective solution to this problem. We also present a study to choose the most efficient artificial neural network structure, together with a customized learning algorithm that teaches the neural network the recovery mechanism for such images.

Keywords:

Artificial Neural Networks, Image Processing, Learning Algorithms

Cite this paper: Hajji Tarik, Mohcine Kodad, Jaara El Miloud, Digital Movements Images Restoring by Artificial Neural Networks, Computer Science and Engineering, Vol. 4 No. 2, 2014, pp. 36-42. doi: 10.5923/j.computer.20140402.02.

1. Introduction

The analysis of motion in digital images is a particularly interesting scientific problem. The problem studied in this work is exhibited by all pictures that are defective because either the captured object or the capturing device moved at the time of capture. The problem of moving images goes far beyond personal use (cameras, digital cameras, smartphones and many other devices able to capture images) and reaches sensitive areas such as road traffic monitoring, where the characteristics of the vehicles concerned must be properly identified. The results of this work can be used in several areas that rely on automatic capture (e.g. radar, surveillance cameras and telecommunications in general). This approach, which trains an artificial neural network, can give effective results compared to other restoration approaches to the problem of moving images, as we shall see later in this work.

In the remainder of this introduction we present the state of the art on this topic and a description of the proposed method. We then describe our methodology, which contains a reminder of how artificial neural networks operate and a brief presentation of the problem of restoring digital images, and we describe our training database, the learning algorithm and our test database. Finally, we present the results obtained.

1.1. Artificial Neural Networks

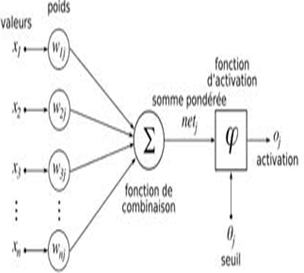

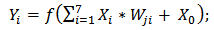

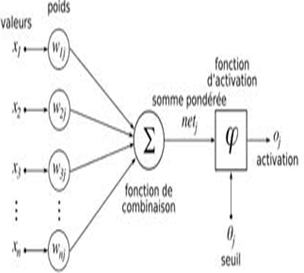

The neural network is inspired by the biological nervous system. It is composed of several interconnected elements that together solve a varied collection of problems. The brain is composed of billions of neurons and trillions of connections between them. The nerve impulse travels through the dendrites and axons, and is then processed in the neurons through synapses. In the field of artificial neural networks, this translates into several interconnected elements, or neurons, each belonging to one of three categories: input, output or hidden. A neuron belonging to layer n is considered a threshold automaton: to be activated, it must receive a signal above its threshold, and its output, after the weight parameters are taken into account, feeds all the elements belonging to layer n+1. Like the biological nervous system, neural networks have the ability to learn, which makes them useful.

Artificial neural networks are problem-solving units capable of handling fuzzy information in parallel and producing one or more results that represent the postulated solution. The basic unit of a neural network is a non-linear combinational function called an artificial neuron. An artificial neuron is a computer simulation of a biological neuron of the human brain. Each artificial neuron is characterized by an information vector presented at its input and a non-linear mathematical operator that computes an output from this vector. The following figure shows an artificial neuron: | Figure 2. Artificial neuron |

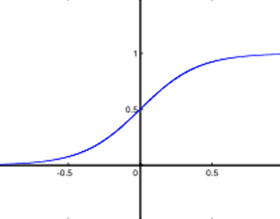

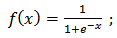

The synapses of neuron j are represented by the weights Wij, which are real numbers between 0 and 1. The combination function is a weighted sum over the active synapses associated with the same neuron. The activation function is a non-linear operator that returns a real or rounded value in the range [0, 1]. In our case we use the sigmoid function. | Figure 3. Sigmoid function |
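As an illustration of the neuron just described, the following minimal sketch computes a weighted sum of the inputs and passes it through the sigmoid. The function names `sigmoid` and `neuron_output` and the optional `bias` argument are assumptions made for this example; they do not come from the original work.

```python
import math


def sigmoid(a):
    """Logistic function: maps any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs followed by the sigmoid activation.

    `bias` is an assumed extra term; set it to 0.0 to ignore it.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


# Example: a neuron with three synapses whose weights lie in [0, 1].
activation = neuron_output([0.2, 0.9, 0.5], [0.4, 0.1, 0.7])
```

Whatever the inputs, the returned activation stays strictly between 0 and 1, which is why the sigmoid suits pixel intensities normalized to that range.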

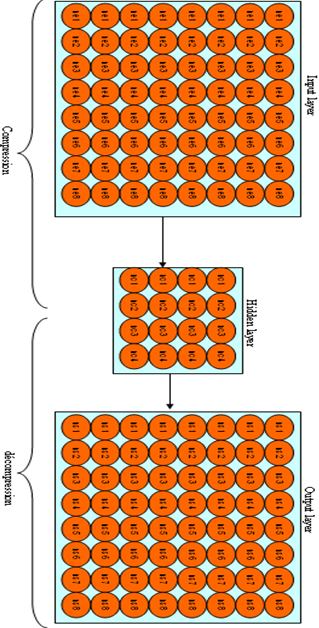

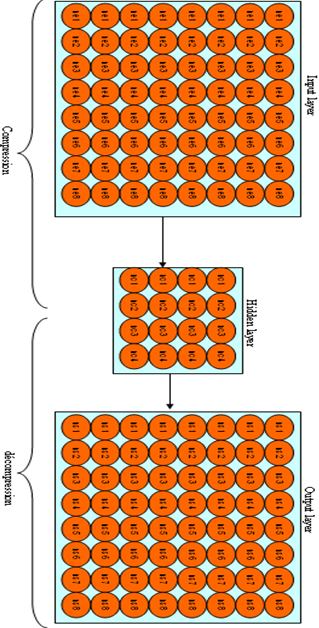

An artificial neural network is composed of a collection of artificial neurons interconnected to form a neuronal system able to learn and to understand mechanisms. Each artificial neural network is characterized by its specific architecture, which is given by the number of neurons in the input layer, the number of hidden layers, the number of neurons in each hidden layer and the number of neurons in the output layer. A layer of a neural network is a group of artificial neurons with the same level of importance, as shown in the following figure: | Figure 4. Artificial neural network |

The operating principle of artificial neural networks is similar to that of the human brain: the network must first go through a learning phase that records knowledge in its memory. Knowledge is stored by repetition and correction over a collection of data that forms the learning base. Several algorithms can train an artificial neural network, such as backpropagation. Backpropagation is a supervised-learning method for calculating the network weights that minimizes the squared output error. It corrects errors according to the importance of the elements that contributed to them: the synaptic weights that help to generate a significant error are changed more significantly than the weights that led to a marginal error. The weights of the neural network are first initialized with random values. A set of data is then used for learning.
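To make the idea of minimizing the squared output error concrete, the usual textbook formulation can be written as follows. The notation is assumed for this illustration and is not taken from the original: d_k is the desired value of output k, z_k the value actually produced, w any weight of the network and \eta a positive learning rate.

```latex
E = \frac{1}{2} \sum_{k} \left( d_k - z_k \right)^2,
\qquad
\Delta w = -\eta \, \frac{\partial E}{\partial w}
```

Because each correction is proportional to \partial E / \partial w, a weight that contributes strongly to the error receives a larger change than a weight whose contribution is marginal.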

1.2. Restoration of Digital Images

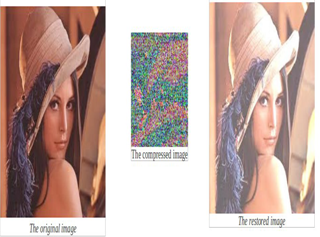

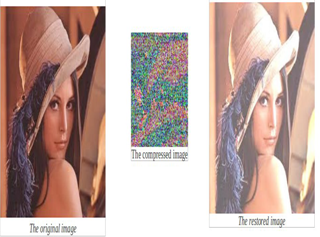

Restoration of digital images is an essential area of image processing. The objective of restoration is to regenerate the original image after it has undergone an image processing operation, for example restoring an image after a compression operation performed by an artificial neural network. An example of compression and decompression of the image Linda with a neural network (64 * 8 * 64): | Figure 5. Compression and decompression with an artificial neural network |

In most cases, the restoration operation is not 100% reversible; some information is always lost. Each image processing operation that transforms an image has its own restoration algorithm, which is either independent of the algorithm of the operation or linked to it, as in the case of image compression by artificial neural networks: | Figure 6. Network compressor decompressor |

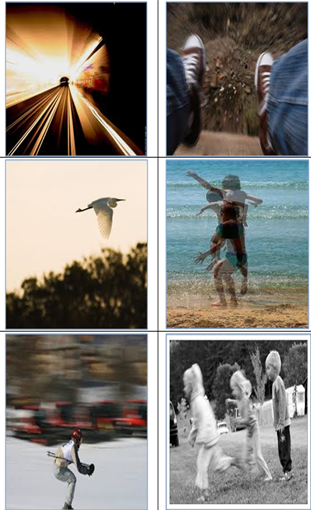

In this example, the restoration is performed by the second part of the artificial neural network, from the hidden layer to the output layer. It should be noted that the artificial neural network is used in this case in two modes: compressor and decompressor (restorer).

Figure 1 shows an example of an object captured during movement; it exhibits the problem of moving images studied in this work: | Figure 1. Digital moving image |
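The two modes of the compressor/decompressor network described above can be sketched as two halves of one forward pass. This is only an illustration under stated assumptions: the class name `CompressorDecompressor`, the bias vectors, the weight range and the random, untrained weights are all hypothetical, and the 64 inputs are taken to be a flattened block of pixel intensities in [0, 1].

```python
import numpy as np


def sigmoid(a):
    """Logistic activation applied element-wise."""
    return 1.0 / (1.0 + np.exp(-a))


class CompressorDecompressor:
    """A 64-8-64 style network split into its two modes (untrained weights)."""

    def __init__(self, n_in=64, n_hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        # Input -> hidden part: used as the compressor.
        self.w_enc = rng.uniform(-0.5, 0.5, size=(n_in, n_hidden))
        self.b_enc = np.zeros(n_hidden)
        # Hidden -> output part: used as the decompressor (restorer).
        self.w_dec = rng.uniform(-0.5, 0.5, size=(n_hidden, n_in))
        self.b_dec = np.zeros(n_in)

    def compress(self, block):
        """Map a 64-value block to its 8-value hidden representation."""
        return sigmoid(block @ self.w_enc + self.b_enc)

    def decompress(self, code):
        """Map an 8-value hidden representation back to 64 values."""
        return sigmoid(code @ self.w_dec + self.b_dec)


net = CompressorDecompressor()
block = np.linspace(0.0, 1.0, 64)   # stand-in for a flattened block of pixels
code = net.compress(block)          # 8 values
restored = net.decompress(code)     # 64 values
```

With untrained weights the restored block is meaningless; the sketch only shows which part of the network plays which role.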

1.3. Related work

Broadly speaking, two main types of methods deal with the analysis of moving images with the intention of identifying the shapes in those images so that other operations, such as restoration, can be performed.

The first is to tag the interesting objects in each image, one after the other. For example, suppose we locate a specific object (the head of a person, say) in the first image of a sequence at the XY coordinates (217, 117), and in the next image the head is identified at (225, 120). It can then be assumed that the head moved between the two images, by 8 pixels to the right and 3 pixels down. Such an approach depends critically on the accuracy of the labeling process. Moreover, the image must not contain too many similar objects; otherwise the classical matching problem appears. That said, this type of "high-level" motion analysis corresponds fairly well to what is called "long-range motion analysis" in humans, and it can be envisaged from the current SpikeNet architecture.

The second method involves a processing step that analyzes the local movements in the image, more particularly by calculating the optical flow at each point of the image. Once these movements are analyzed, we can look for specific sequences of movements that characterize a given action, without going through the identification of the objects concerned. This is exactly what happens in the perception of point-light displays: no specific form is identifiable, and it is the pattern of movement that is used.

Recently, Giese and Poggio (2003) developed a simplified model of the visual system that integrates these two types of mechanisms. They used a multilayer neural network similar to that previously developed by Riesenhuber and Poggio for the processing of static scenes (Riesenhuber & Poggio, 2002). The big difference lies in the use of two independent processing pathways, one specialized in the analysis of forms and able to analyze each image separately, and another specialized in the processing of movements alone. This division is justified by the organization of the visual pathways in primates, where the distinction between form processing and motion processing is evident in the organization of extra-striate visual areas. The model of Giese and Poggio is very interesting, but it remains at the demonstration stage because it only works with small artificial images composed of shapes drawn with lines. In addition, their system is not intended for real-time use. One reason for this is their choice to use neurons with continuous activity, as in almost all artificial neural networks. We found that this approach is computationally inefficient compared to the impressive speed of SpikeNet, which overcomes this constraint. Therefore, a primary objective for the future is to provide a spiking, asynchronous version of the model developed by Giese and Poggio.

On the subject of artificial neural networks in digital image processing, we cannot give an exhaustive survey of all the work on this topic. However, it should be noted that our research group at the LaRI laboratory carried out a collection of research works in 2014, such as Digital Watermarking and Signing by Artificial Neural Networks, with the aim of using neural networks as hash functions to generate a digital signature for images. In the same year we developed an application capable of automatically generating artificial neural networks from simple meta-model descriptions, under the title: automatic generation of artificial neural networks through a meta model description. We also made a comparative study to choose the most effective neural network structure for the compression and restoration of digital images.

1.4. Proposed Method

The method we propose to solve the problem of restoring pictures that are defective because of a poor capture, caused by the movement of the sensor used or of the captured objects, is based on artificial neural networks and supervised learning with a dedicated version of the backpropagation algorithm. It relies on a database composed of a learning set of clean images with their degraded versions. For every clean image, a collection of clones of that image is available, each representing a capture of the clean image under motion.

2. Materials and Methods

2.1. Explanation of the Method

In most cases, the restoration operation is not 100% reversible; some information is always lost. Each image processing operation that transforms an image has its own restoration algorithm, which is either independent of the algorithm of the operation or related to it, as in the case of image compression by artificial neural networks. We began our approach by building the training database, since we use supervised learning. This database is formed of degraded images and their clean versions. We prepared these images with Photoshop software. The following figures show an example of a moving image and its clean version: | Figure 7. Clean digital image |

| Figure 8. Digital moving image |

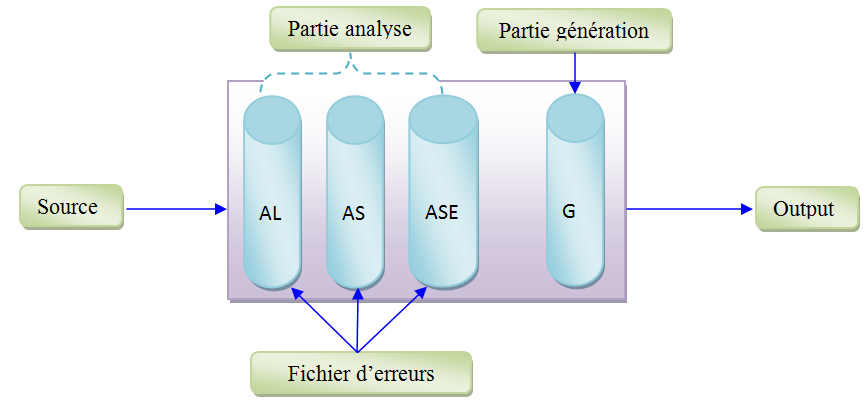

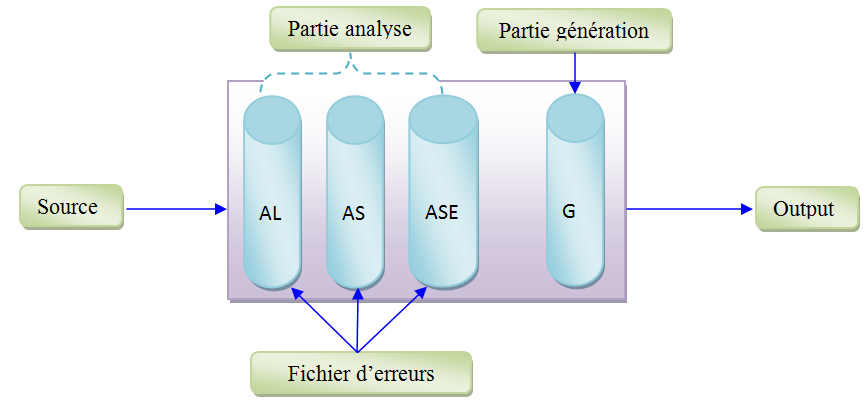
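The construction of a supervised training base of degraded and clean pairs can be sketched as follows. The authors prepared their degraded images with Photoshop; the simple horizontal smear below is only a stand-in for that step. The function names, the blur length and the cutting of images into 8 x 8 blocks (chosen so that each block has 64 values) are assumptions made for this example.

```python
import numpy as np


def motion_blur_rows(image, length=3):
    """Average each pixel with its `length - 1` left neighbours (horizontal smear)."""
    if length < 1:
        raise ValueError("length must be at least 1")
    # Repeat the left edge so that border pixels also have neighbours.
    padded = np.pad(image, ((0, 0), (length - 1, 0)), mode="edge")
    width = image.shape[1]
    blurred = np.zeros_like(image, dtype=float)
    for shift in range(length):
        blurred += padded[:, shift:shift + width]
    return blurred / length


def to_blocks(image, size=8):
    """Cut an image into non-overlapping size x size blocks, one flattened block per row."""
    h = image.shape[0] - image.shape[0] % size
    w = image.shape[1] - image.shape[1] % size
    return (image[:h, :w]
            .reshape(h // size, size, w // size, size)
            .swapaxes(1, 2)
            .reshape(-1, size * size))


def make_training_pairs(clean, length=3, size=8):
    """Return (degraded_blocks, clean_blocks): network inputs and desired outputs."""
    degraded = motion_blur_rows(clean, length)
    return to_blocks(degraded, size), to_blocks(clean, size)


# Example with a synthetic 16 x 16 grey-level image in [0, 1].
clean_image = np.random.default_rng(0).random((16, 16))
inputs, targets = make_training_pairs(clean_image)
```

Each row of `inputs` is a degraded block and the matching row of `targets` is its clean version, which is the pairing that supervised learning requires.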

We then used an application able to generate neural networks from meta-model descriptions (we published this application in a work titled automatic generation of artificial neural networks by a meta description model). The objective of using this application is to customize the settings required for the functioning of the artificial neural networks. It should be noted that the classical model of artificial neural networks proposed in 1986 by Rumelhart is no longer sufficient on its own, because this model must always be adapted to the instance of the problem studied. The following figure shows the general architecture of this application: | Figure 9. General architecture of the generator |

The generator is composed mainly of four programs:

1. The lexical analyzer verifies the lexicon, recognizes all lexical units and fills the error file if any errors are met. If the lexical analyzer completes its execution without encountering any error, control passes to the parser program. It should be noted that the lexical analyzer is generated by the Flex tool, which provides a C program that must be compiled to give an executable.
2. The parser recognizes syntax errors, records them in the error log and evaluates the expressions using the units produced by the lexical analyzer.
3. The semantic analyzer performs the semantic validation of the sources according to all the specifications of artificial neural networks. If errors are encountered, they are saved in the error file and the build operation stops.

The lexical, syntactic and semantic analyzers represent the control phase of the generator, while the fourth program, the generator program, automatically generates a program written in an object-oriented language. It should be noted that this application can generate an ANN program directly in C++ and Java, or in an XML form that can be exploited to expand the set of target languages. After the creation of the artificial neural networks, we use gradient backpropagation as the learning algorithm.

It should be noted that each artificial neural network is characterized by its own architecture. The objective is to choose the most efficient network architecture. We denote by the architecture of a neural network the number of artificial neurons in the input layer, the number of hidden layers, the number of artificial neurons in each hidden layer and the number of artificial neurons in the output layer.

After the full convergence of the artificial neural networks, we test these systems on a test set formed of 25% images already processed during learning, 50% images carefully generated with Photoshop and 25% pictures taken randomly with a smartphone camera.
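The notion of architecture defined above can be represented as a tuple of layer sizes. The sketch below uses the three architectures reported in the results section and derives the shape of each weight matrix and the number of trainable parameters. The helper names, and the assumption of one bias per non-input neuron, are choices made for this illustration.

```python
def layer_shapes(architecture):
    """Return the (rows, cols) shape of each weight matrix for a tuple of layer sizes."""
    if len(architecture) < 2:
        raise ValueError("an architecture needs at least an input and an output layer")
    return list(zip(architecture[:-1], architecture[1:]))


def count_parameters(architecture):
    """Count the weights plus one bias per non-input neuron (the bias is an assumption)."""
    return sum(rows * cols + cols for rows, cols in layer_shapes(architecture))


# Layer sizes, from the input layer to the output layer.
ARCHITECTURES = {
    "ANN1": (64, 16, 64),
    "ANN2": (64, 4, 64),
    "ANN3": (64, 16, 4, 16, 64),
}

for name, architecture in ARCHITECTURES.items():
    print(name, layer_shapes(architecture), count_parameters(architecture))
```

Comparing parameter counts is one simple way to see how much capacity each candidate architecture has before training it.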

2.2. Learning Algorithm

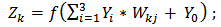

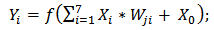

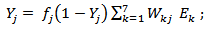

The learning algorithm is as following:- Step 1: Initialize the connection weights (weights are taken randomly).- Step 2: Propagation entries entered the  are presented to the input layer:

are presented to the input layer:  | (1) |

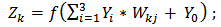

The spread to the hidden layer is made using the following formula: | (2) |

Then from the hidden layer to the output layer, we adopt: | (3) |

and

and  are scalar;

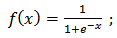

are scalar; Is the activation function:

Is the activation function: | (4) |

- Step 3: Back propagation of error at the output layer, the error between the desired output  and

and  output is calculated by:

output is calculated by:  | (5) |

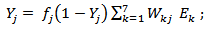

The error calculation is propagated on the hidden layer using the following formula: | (6) |

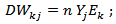

- Step 4: Fixed connection weights the connection weights between the input layer and hidden layer is corrected by: | (7) |

And | (8) |

Then, we change the connections between the input layer and the output layer by: | (9) |

N is a parameter to be determined empirically.- Step 5 loops: Loop to step 2 until a stop to define criterion (error threshold, the number of iterations).
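The five steps above can be sketched in code for a network with one hidden layer. This is standard backpropagation with a sigmoid activation, written under stated assumptions; it is not the authors' dedicated version. The function name `train`, the bias vectors, the initial weight range, the default learning rate `rate` and the stopping values are all hypothetical.

```python
import numpy as np


def sigmoid(a):
    """Logistic activation applied element-wise."""
    return 1.0 / (1.0 + np.exp(-a))


def train(inputs, targets, n_hidden, rate=0.5, max_iter=2000, tol=1e-3, seed=0):
    """Train a one-hidden-layer network; return weights, biases and the last error.

    inputs:  array of shape (n_samples, n_in) with values in [0, 1]
    targets: array of shape (n_samples, n_out) with values in [0, 1]
    The returned error is the mean, over samples, of the summed squared output error.
    """
    rng = np.random.default_rng(seed)
    n_in, n_out = inputs.shape[1], targets.shape[1]
    # Step 1: random initial weights (the range is an assumption).
    w_hid = rng.uniform(-0.5, 0.5, size=(n_in, n_hidden))
    b_hid = np.zeros(n_hidden)
    w_out = rng.uniform(-0.5, 0.5, size=(n_hidden, n_out))
    b_out = np.zeros(n_out)
    error = np.inf
    for _ in range(max_iter):
        squared = 0.0
        for x, d in zip(inputs, targets):
            # Step 2: propagate the input to the hidden layer, then to the output.
            y = sigmoid(x @ w_hid + b_hid)
            z = sigmoid(y @ w_out + b_out)
            # Step 3: error at the output layer, then propagated to the hidden layer.
            e_out = z * (1.0 - z) * (d - z)
            e_hid = y * (1.0 - y) * (w_out @ e_out)
            # Step 4: correct the weights of both layers.
            w_out += rate * np.outer(y, e_out)
            b_out += rate * e_out
            w_hid += rate * np.outer(x, e_hid)
            b_hid += rate * e_hid
            squared += np.sum((d - z) ** 2)
        error = squared / inputs.shape[0]
        # Step 5: stop on the error threshold, otherwise loop until max_iter.
        if error < tol:
            break
    return w_hid, b_hid, w_out, b_out, error


# Example: learn to reproduce four simple patterns through a 3-neuron hidden layer.
patterns = np.eye(4)
w_hid, b_hid, w_out, b_out, final_error = train(patterns, patterns, n_hidden=3)
```

The factors `z * (1 - z)` and `y * (1 - y)` are the derivative of the sigmoid, which is what makes the correction larger for weights that contribute more to the error.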

3. Results and Discussion

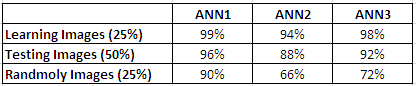

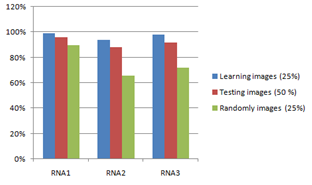

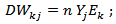

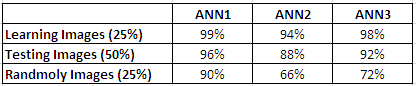

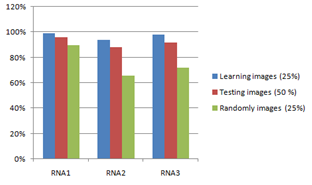

In this section we present only the results for artificial neural networks characterized by the following three architectures:

1. ANN1 (64 * 16 * 64): 64 artificial neurons in the input layer, one hidden layer of 16 artificial neurons and an output layer of 64 artificial neurons.
2. ANN2 (64 * 4 * 64): 64 artificial neurons in the input layer, one hidden layer of 4 artificial neurons and an output layer of 64 artificial neurons.
3. ANN3 (64 * 16 * 4 * 16 * 64): 64 artificial neurons in the input layer, three hidden layers of 16, 4 and 16 artificial neurons respectively, and an output layer of 64 artificial neurons.

The following figure shows the rate of convergence for the three artificial neural networks ANN1, ANN2 and ANN3 as a function of the number of iterations of the learning algorithm: | Figure 10. Convergence rate |

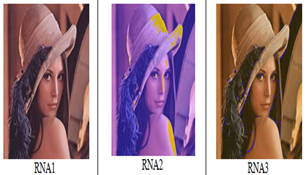

According to the graphs generated with MATLAB, the artificial neural network ANN1 converges faster than ANN2, which in turn converges faster than ANN3. The following figure shows an example of restoring an image by the artificial neural networks ANN1, ANN2 and ANN3: | Figure 11. Restoring a digital image |

According to the results of the restoration carried out on an image by the three artificial neural networks (ANN1, ANN2 and ANN3), ANN1 is more efficient than ANN2 and ANN3, and ANN3 is more efficient than ANN2. The following figure shows the results obtained with the three artificial neural networks applied to the test sets: | Figure 12. Recognition rate |

1. For ANN1: we obtain an almost perfect restoration for all the images already seen by the artificial neural network at learning time, very good results for all the images in the test database and acceptable results for the pictures taken randomly.
2. For ANN2: we obtain good results for the learning images, poor behavior for the test images and a high rate of information loss for the random pictures.
3. For ANN3: we obtain a good restoration rate for the learning images, an acceptable restoration rate for the test images and average performance for the random images.

The following graph shows an overview of the restoration rate for the three artificial neural networks on the test sets used: | Figure 13. Graph of restoration rate |

According to the graph generated with Excel, the artificial neural network ANN1 is the most successful compared with the artificial neural networks ANN2 and ANN3.

4. Conclusions

A multilayer neural network is a good solution to the problem of restoring digital moving images. With the system set up in this work, we can recover over 96% of the information degraded by a faulty capture. The behavior of the neural networks depends on the network architecture and also on the category of test images. Following this work, it can be concluded that the artificial neural network characterized by 64 artificial neurons in the input layer, one hidden layer of 16 artificial neurons and an output layer of 64 artificial neurons (ANN1) gives a good solution to the problem of restoring digital moving images.

References

| [1] | Fabre-Thorpe, M., Delorme, A., Marlot, C., & Thorpe, S. (2001). A limit to the speed of processing in ultrarapid visual categorization of novel natural scenes. J Cogn Neurosci, 13(2), 171-180. |

| [2] | Paquier, W., & Thorpe, S. J. (2000). Motion Processing using One spike per neuron. Proceedings of the Computational Neuroscience Annual Meeting, Brugge, Belgium. |

| [3] | Rousselet GA, Fabre-Thorpe M, Thorpe SJ. 2002. Parallel processing in high-level categorization of natural images. Nat Neurosci 5: 629-30. |

| [4] | Thorpe S. 2002. Ultra-Rapid Scene Categorization with a Wave of Spikes. In Biologically Motivated Computer Vision, ed. HH Bulthoff, SW Lee, TA Poggio, C Wallraven, pp. 1-15. Berlin: Springer Lecture Notes in Computing |

| [5] | Thorpe, S., Delorme, A., & Van Rullen, R. (2001). Spike-based strategies for rapid processing. Neural Networks, 14(6-7), 715-725. |

| [6] | Thorpe, S. J., Delorme, A., VanRullen, R., & Paquier, W. (2000). Reverse engineering of the visual system using networks of spiking neurons, Proceedings of the IEEE 2000 International Symposium on Circuits and Systems (Vol. IV, pp. 405-408): IEEE press. |

| [7] | VanRullen, R., & Thorpe, S. J. (2001). Rate coding versus temporal order coding: what the retinal ganglion cells tell the visual cortex. Neural Comput, 13(6), 1255-1283. |

| [8] | VanRullen R, Thorpe SJ. 2002. Surfing a spike wave down the ventral stream. Vision Res 42: 2593-615. |

| [9] | Vapnik V.N., 1995, The nature of statistical learning theory: Springer-Verlag. |

| [10] | Vapnik V.N., 1998, Statistical learning theory: John Wiley. |

| [11] | Viéville T., Crahay S, 2002, A deterministic biologically plausible classifier. INRIA Research Report, 4489. |

| [12] | Desai (2010), Gujarati handwritten numeral Optical Character Reorganization through neural network pattern recognition 43-2010. |

| [13] | R. EL Yachi, K. Moro, Mr. Fakir, B. Bouikhalene (2010), Evaluation Methods SKeletonization for the recognition of printed Tifinaghe Characters, Sitacam'09. Mr. Banoumi, A. Lazrek and K.Sami (2002), an Arabic transliteration / novel for e-paper, Proceeding of the 5th International Symposium on Electronic Document, Federal Conference on the document, Hammamet, Tunisia. |

| [14] | C. Rey & J.-L. Dugelay. Blind Detection of Malicious Alterations On Still Images Using Robust Watermarks. IEE Secure Images and Image Authentication colloquium, London, UK, Apr. 2000. |

| [15] | M. Kutter, S. Voloshynocskiy and A. Herrigel. The Watermark Copy Attack. In Proceedings of SPIE Security and Watermarking of Multimedia Content II , vol. 3971, San Jose, USA, Jan. 2000. |

| [16] | J. Fridrich, M. Goljan & N. Memon. Further Attacks on Yeung-Minzer Fragile Watermarking Scheme. SPIE International Conf. on Security and Watermarking of Multimedia Contents II, vol. 3971, No13, San Jose, USA, Jan. 2000. |

| [17] | Julien Chable. Emmanuel Robles Programmation Android, de la conception au déploiement avec le SDK Google Android, New York, France, 2009. |

| [18] | UML Infrastructure Final Adopted Specifications, version 2.0, September 2003. http://www.omg.org/cgi-bin/doc?ptc/03-09-15.pdf. |

| [19] | Giese MA, Poggio T. 2003. Neural mechanisms for the recognition of biological movements. Nat Rev Neurosci 4: 179-92. |
