Alabi A. A.¹, Akanbi L. A.², Ibrahim A. A.³

¹Computer Science and Engineering Department, the Polytechnic, Ile-Ife, Nigeria

²Computer Science and Engineering Department, Obafemi Awolowo University, Ile-Ife, Nigeria

³Mathematics Department, Oduduwa University, Ipetumodu, Ile-Ife, Nigeria

Correspondence to: Alabi A. A., Computer Science and Engineering Department, the Polytechnic, Ile-Ife, Nigeria.


Copyright © 2015 Scientific & Academic Publishing. All Rights Reserved.

Abstract

This research work focused on the analysis and identification of the major features that make up the human face, in relation to their roles in the Eigenface Algorithm. The aim was to ascertain the workability and efficiency of the developed algorithm by evaluating its performance on a gallery of faces with plain features in comparison with a gallery of faces with distinct features. Seventy-five percent (75%) of the images, that is, three out of every four, were used to form the training set, while the remaining twenty-five percent (25%) served as test images. The characteristics of the face in terms of facial dimensions, types of marks, and the structure of facial components such as the eyes, mouth and chin were analyzed for identification. The face images were resized for proper reshaping and cropped to adjust their backgrounds using Microsoft Office Picture Manager. The system code was developed in MATLAB 7.0 (Matrix Laboratory) and run on both sets of face images (plain and distinct). The system gave better results on faces with distinct features than on faces with plain features, both in the total number of identified images and in execution time. Nearly all the test images with distinct features were identified, which was not the case for those with plain features. The evaluation showed an estimated difference of 25% in identification and 45% in execution time. The study concluded that the presence of distinct features on the facial images improved the performance of the developed PCA code on such faces, not only in identification but also in the speed of the system. It has also shown that the Eigenface algorithm performs better in recognizing faces with distinct features than faces with plain features.

Keywords:

Eigenfaces, Plain and Distinct Features, Eigenvalues, Eigenvectors, Faces

Cite this paper: Alabi A. A., Akanbi L. A., Ibrahim A. A., Performance Evaluation of the Eigenface Algorithm on Plain-Feature Images in Comparison with Those of Distinct Features, American Journal of Signal Processing, Vol. 5 No. 2, 2015, pp. 32-39. doi: 10.5923/j.ajsp.20150502.02.

1. Introduction

Recognition is a peculiar process as far as the Human Visual System (HVS) is concerned. This is because every human being carries, from the cradle to the grave, certain physical marks whose character does not change and by which he or she can easily be identified. The main subject here is the face, defined as the frontal part of the human head from the forehead to the chin, including the hair, eyebrows, eyes, nose, cheeks, mouth, lips, teeth and skin. It serves for expression, appearance and identity, among other things, and no two faces are exactly alike, not even those of twins. In recent times, face recognition has been a popular topic in the area of pattern recognition, and many different pattern recognition algorithms have been developed for recognizing objects (including faces) in the area of Computer Visual Systems (CVS). A good example is the Eigenface Algorithm, which makes use of Principal Component Analysis (PCA). This algorithm is considered to have the procedural steps and qualities needed for the proper recognition of the human face. The Eigenface Algorithm centers on the major portions of the human face, that is, the areas carrying the most relevant information about the person to be identified. This suggests that people can be identified simply by comparing the major features of their faces with a set of trained image features stored on a system.

2. Problem Statement

Faces in real life fall into categories, quite apart from the question of their structural resemblance or difference. Some are composed only of the features that naturally make up the basic components of a face. This category is referred to as ‘plain-feature’, since such faces have a clear structural surface on key parts such as the nose, mouth, eyes, cheeks and chin. On the other hand, some of these major facial components may carry supplementary features which together form the face. For instance, the presence of tribal marks, scarifications, tattoos and similar features on the prominent facial components (nose, eyes, mouth, etc.) sets such faces apart from the first type. A face with such properties is described as ‘distinct-feature’. The Eigenface algorithm considers only the high-energy vectors, ignoring the low-energy features, in the analysis and recognition of face images. This approach has proven effective on faces with no special features, but its effect on faces with distinct features is yet to be seen. This necessitates implementing such a system and comparing its performance on a gallery of faces with plain features against a gallery of faces with distinct features. Moreover, the relevance of these high-eigenvalue vectors, which represent the features carrying the most distinct and important information about face images, could be better demonstrated if the images to be trained and tested possess such features. In other words, implementing the algorithm on the two sets of faces is a way of determining which set best showcases the strength of the algorithm. This research therefore aims to evaluate the performance of the Eigenface algorithm on two different galleries of face images (i.e. faces with plain features and faces with distinct features), with a view to determining how useful the higher-energy vectors are in the recognition of face images.

3. Brief Review

The Eigenface Algorithm is an approach which makes use of a technique known as Principal Component Analysis (PCA). According to Turk and Pentland (1991), this technique treats only the main (principal) features of a given image for analysis and processing. In this case, face images are converted into their basic features, which are then stored as vectors (eigenvectors) in a face space following a normalization process. These normalized faces have their images remapped in space (Undrill, 1992). The idea of using eigenvectors for this purpose originated in 1987, in a technique developed by Sirovich and Kirby for efficiently representing pictures of faces using Principal Component Analysis. According to Sirovich and Kirby (1987), the technique produced the best coordinate system for image compression, where each coordinate was actually an image, termed an Eigenpicture. They argued that, in principle, any collection of face images can be approximately reconstructed by storing a small collection of weights for each face and a small set of standard pictures (Eigenpictures), the weights being found by projecting each face image onto each Eigenpicture. This was later studied and employed as a key principle for face recognition systems by Turk and Pentland (1991). PCA is also called the (discrete) Karhunen-Loeve Transformation (KLT, named after Kari Karhunen and Michel Loève) or the Hotelling Transform (in honour of Harold Hotelling) (Turk and Pentland, 1991). The Karhunen-Loeve transformation seeks the vectors that best account for the distribution of face images within the entire image space. These vectors define the face space: each vector, of length $N^2$, describes an $N \times N$ image and is a linear combination of the original face images. These vectors are the eigenvectors of the covariance matrix corresponding to the original face images and, as revealed by Turk and Pentland (1991), they are face-like in appearance.
Because of this face-like appearance, these eigenvectors are referred to as Eigenfaces in face recognition. The Eigenfaces span a basis set with which to describe face images (Sirovich and Kirby, 1987). Today, the terms Eigenfaces and PCA are used interchangeably, especially in the analysis of the major features of human faces. The technique seeks linear combinations of the variables such that the maximum variance is extracted from them. PCA is a way of identifying patterns in data and expressing the data so as to highlight their similarities and differences. Since patterns can be hard to find in data of high dimension, where the luxury of graphical representation is not available, PCA serves as a powerful tool for analyzing such data (Lindsay, 2002). Once these patterns have been found, the data can be compressed by reducing the number of dimensions without much loss of information (Lindsay, 2002). Its procedural steps allow radical data compression, thus allowing narrow-bandwidth communication channels to carry large amounts of information. Although many algorithms and techniques have been proposed to improve on existing ones, for instance with regard to issues such as face recognition impairments (Bate and Bennetts, 2014), Principal Component Analysis still retains its credibility as a promising image processing approach to face recognition (Burton et al., 1999). It remains one of the most successful representations for recognition (Marian et al., 2002).
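The Karhunen-Loeve step and the compression idea described above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' MATLAB code: the training faces are assumed to be M grayscale arrays of size N × N, the function names `compute_eigenfaces` and `reconstruct` are hypothetical, and the eigenvectors are obtained with the small-matrix trick of Turk and Pentland (1991), which diagonalizes an M × M matrix instead of the $N^2 \times N^2$ covariance matrix.

```python
import numpy as np

def compute_eigenfaces(images):
    """Eigenfaces of a stack of N x N training images, largest eigenvalue first.

    images: array of shape (M, N, N), one grayscale face per entry.
    Assumes the centred images have rank M - 1 (no duplicate images).
    Returns (mean_face, U, eigvals); each column of U is a unit-length
    eigenface of length N^2.
    """
    M = images.shape[0]
    # Flatten each N x N image into a column vector of length N^2.
    A = images.reshape(M, -1).astype(float).T          # shape (N^2, M)
    mean_face = A.mean(axis=1)
    A = A - mean_face[:, None]                         # centre the data
    # Small-matrix trick: the M x M matrix A^T A / M has the same nonzero
    # eigenvalues as the N^2 x N^2 covariance matrix A A^T / M.
    eigvals, V = np.linalg.eigh(A.T @ A / M)           # ascending order
    # Centring leaves at most M - 1 nonzero eigenvalues; keep those, largest first.
    order = np.argsort(eigvals)[::-1][: M - 1]
    eigvals, V = eigvals[order], V[:, order]
    U = A @ V                                          # map back to image space
    U /= np.linalg.norm(U, axis=0)                     # unit-length eigenfaces
    return mean_face, U, eigvals

def reconstruct(image, mean_face, U, k):
    """Compress an image to k weights and rebuild it from the top-k eigenfaces."""
    phi = image.ravel().astype(float) - mean_face
    weights = U[:, :k].T @ phi                         # the k stored weights
    return weights, mean_face + U[:, :k] @ weights
```

With M = 6 training images of 8 × 8 pixels, for example, there are at most five Eigenfaces; projecting a training face onto all five recovers it exactly, while keeping fewer weights gives a lossy, compressed approximation.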

4. System Analysis and Design

4.1. Methodology

Four replicate images of each face were captured with a digital camera for two separate galleries (i.e. faces with plain features and faces with distinct features). The images were taken as snapshots with different orientations. Seventy-five percent (75%) of the images, that is, three out of every four, were used to form the training set, while the remaining twenty-five percent (25%) served as test images. The images in each set were cropped and resized to remove every foreign (unwanted) portion of the images. The developed Eigenface code was run on the sets of face images, and the results are discussed in Section 5 of this paper.
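The three-out-of-four split can be sketched in Python as follows. This is a minimal illustration, assuming each subject's four images are held in a list under a subject label; the function name `split_gallery` is hypothetical, and holding out the last image of each subject is an assumption, since the paper does not say which image was used for testing.

```python
def split_gallery(gallery, n_train=3):
    """Split each subject's images into training and test sets.

    gallery: dict mapping a subject label to that subject's list of images
             (four per subject in the study; this layout is an assumption).
    n_train: images per subject used for training (3 of 4 gives 75%/25%).
    Returns (train, test), each a list of (label, image) pairs.
    """
    train, test = [], []
    for label, images in gallery.items():
        # Assumption: the first n_train images train, the rest are held out.
        train.extend((label, img) for img in images[:n_train])
        test.extend((label, img) for img in images[n_train:])
    return train, test
```

Keeping the label next to each image lets the test set be scored later by comparing each predicted identity with the true one.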

4.2. The Mathematical Analysis of the Eigenface Technique

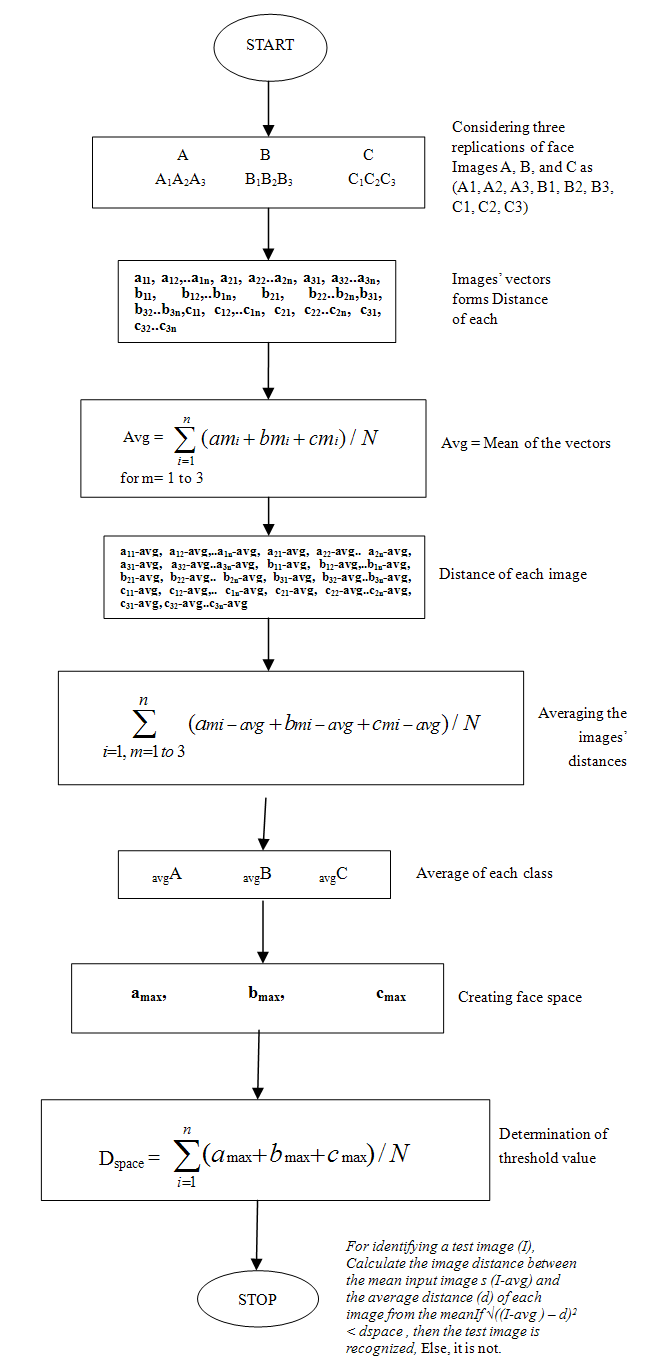

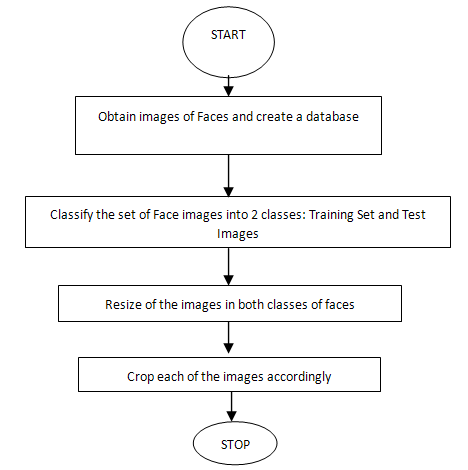

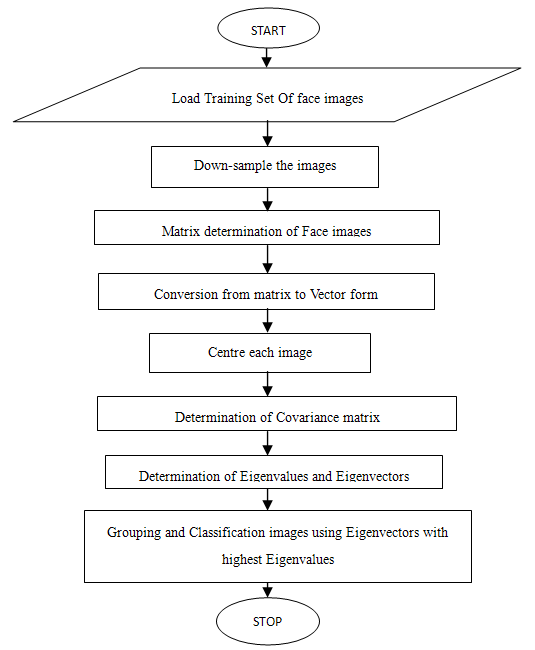

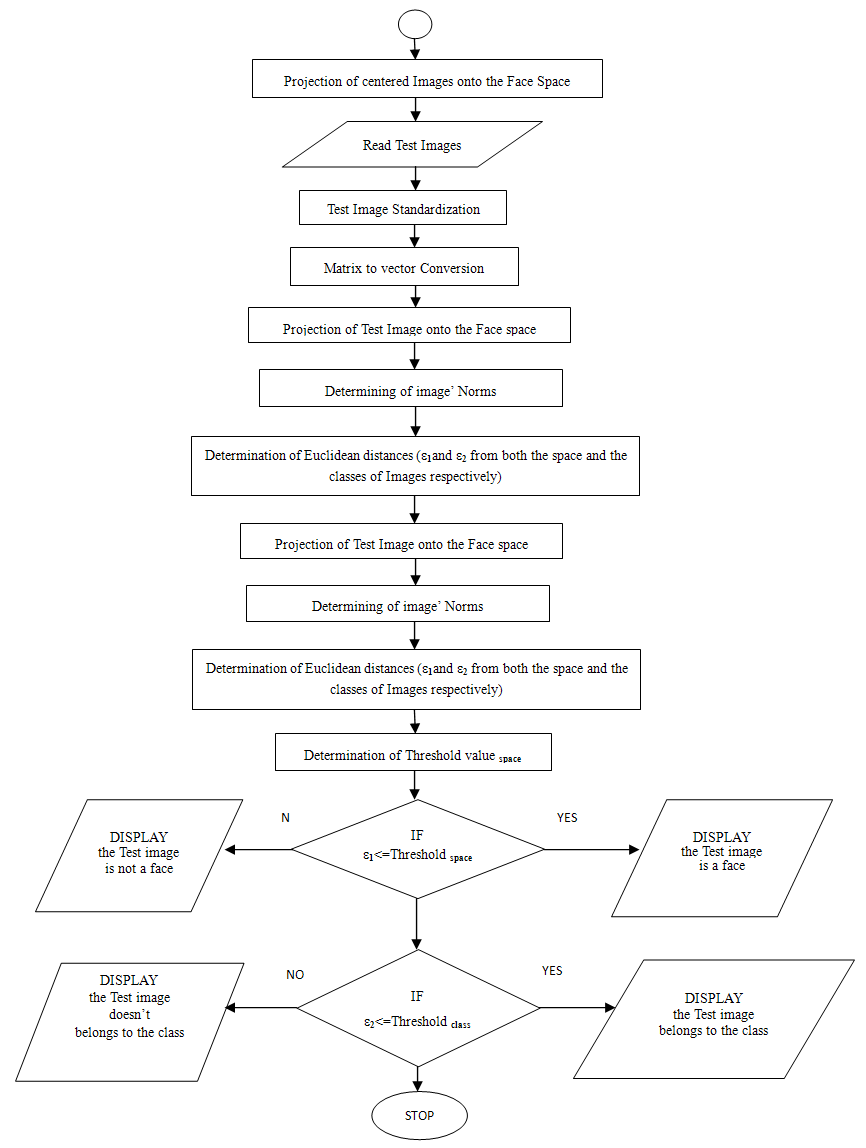

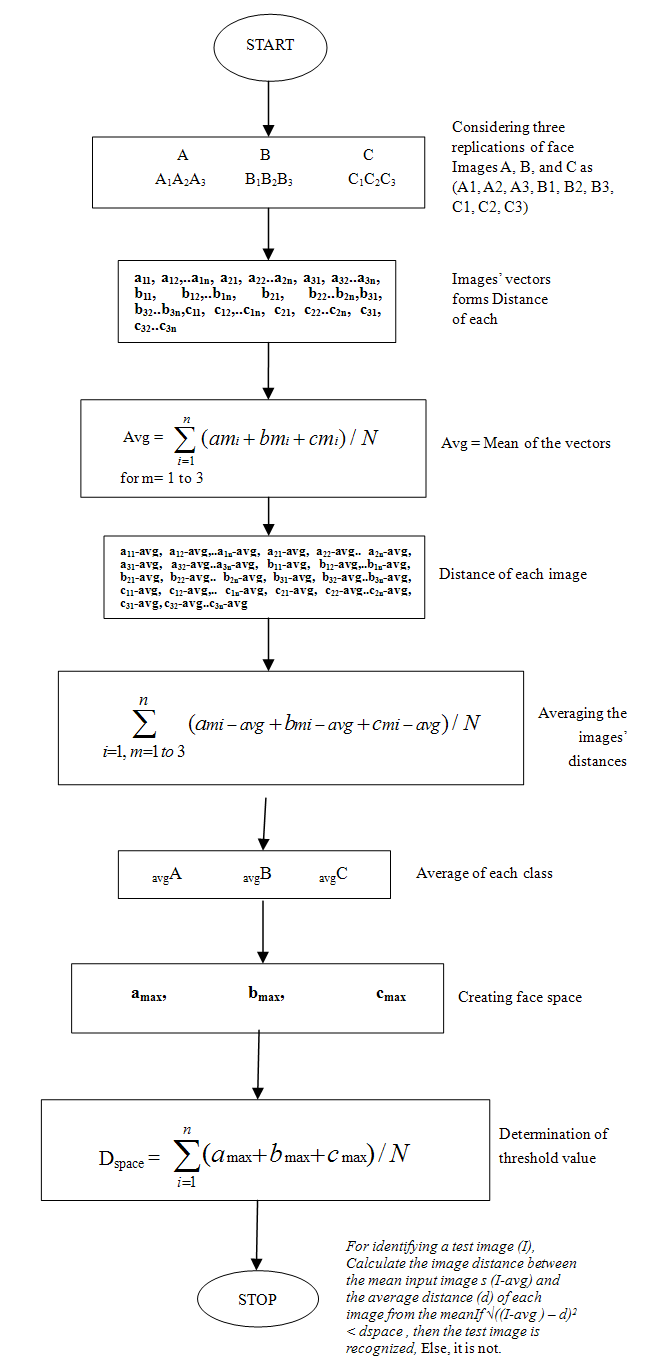

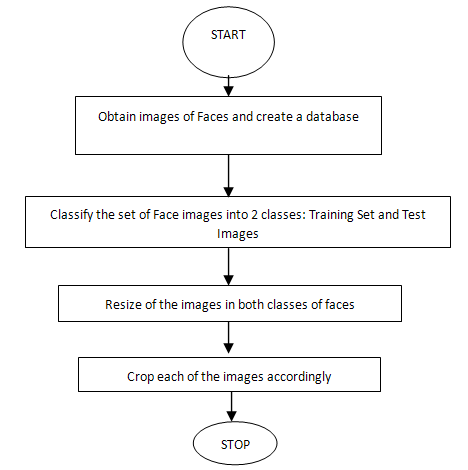

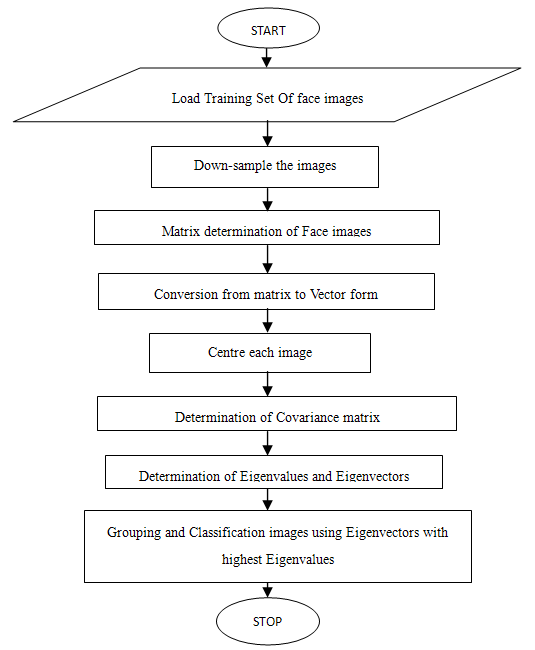

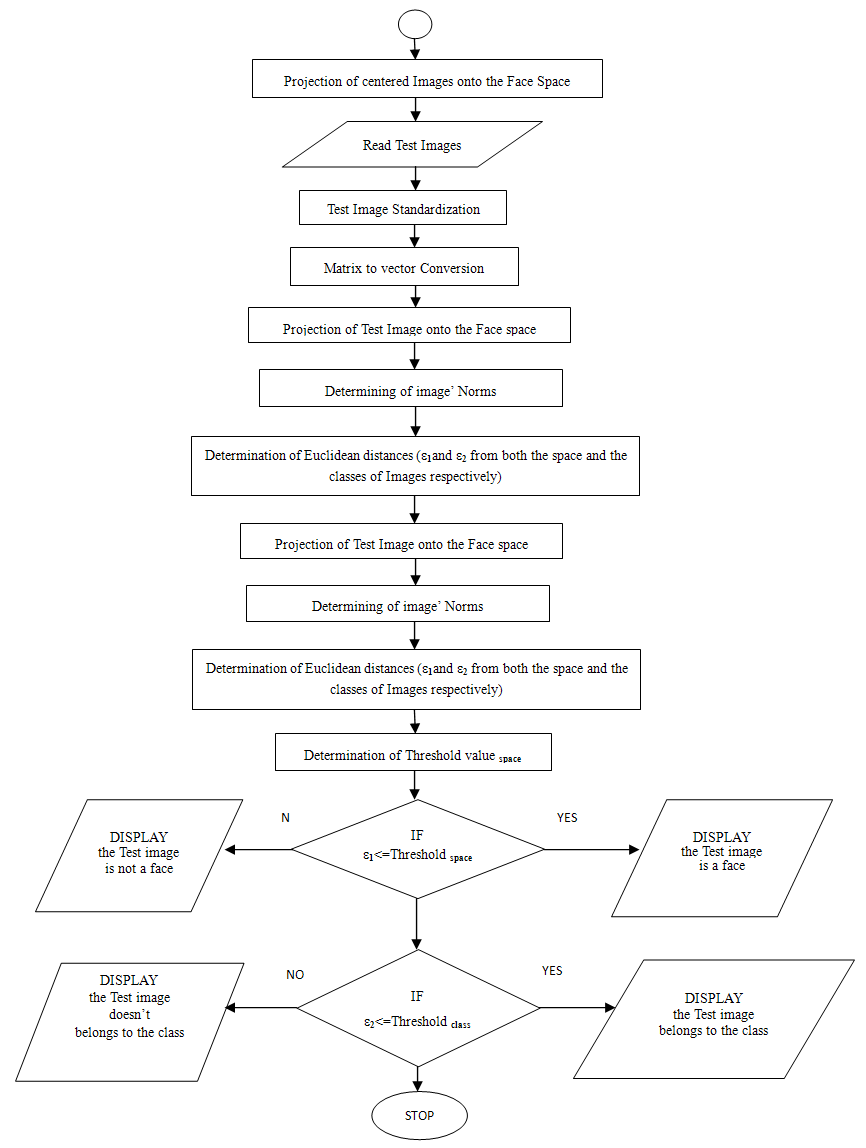

As shown in Figure 1, the steps involved in the PCA algorithm can be explained as follows:

- At the start, a set of images is acquired to serve as the training set. This is the collection of original face images to be processed and stored in a database, and it entails photographing the subjects from different views under the same conditions.
- Next, the vectors with the highest eigenvalues, usually referred to as Eigenfaces, are determined from the training set (say M images), keeping only the M’ Eigenfaces that correspond to the highest eigenvalues. These M’ Eigenfaces define the face space.
- The next stage is to calculate the corresponding distribution in the M’-dimensional weight space for each known subject.
- For recognition, an unknown image is input, and a set of M’ weights is calculated by projecting the input image onto each of the Eigenfaces.
- The Euclidean distance of the input image from the face space is then calculated to determine whether or not the input image is a face.
- If the distance is less than a threshold value, the input image is taken to be a face and stored accordingly; otherwise, it is not a face.

| Figure 1. Illustration of the PCA steps |
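The recognition steps listed above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' MATLAB code: the function names `train_eigenfaces` and `recognize` and the parameter `face_threshold` are hypothetical, the Eigenfaces are obtained from a singular value decomposition of the centred training faces (which yields the same eigenvectors as the covariance matrix), and matching by nearest neighbour in weight space is an assumption, since the steps do not state how a known face is chosen.

```python
import numpy as np

def train_eigenfaces(faces, labels, num_components):
    """Build the face space from K flattened training faces of shape (K, d)."""
    mean = faces.mean(axis=0)
    # Rows of Vt are eigenvectors of the covariance of the centred faces,
    # ordered by decreasing eigenvalue, so the first rows are the Eigenfaces.
    _, _, Vt = np.linalg.svd(faces - mean, full_matrices=False)
    eigenfaces = Vt[:num_components]                # shape (M', d)
    weights = (faces - mean) @ eigenfaces.T         # each known face in weight space
    return {"mean": mean, "U": eigenfaces, "W": weights, "labels": list(labels)}

def recognize(model, image, face_threshold):
    """Return the label of the nearest known face, or None if not a face."""
    phi = image - model["mean"]
    omega = model["U"] @ phi                        # project onto each Eigenface
    # Distance from face space: what is left after rebuilding from the weights.
    distance_from_face_space = np.linalg.norm(phi - model["U"].T @ omega)
    if distance_from_face_space >= face_threshold:
        return None                                 # not a face
    # Nearest neighbour in weight space (Euclidean distance).
    distances = np.linalg.norm(model["W"] - omega, axis=1)
    return model["labels"][int(np.argmin(distances))]
```

The threshold test mirrors the last two steps: only an input that lies close enough to the face space is compared with the stored weight vectors.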

4.3. Design Analysis

The PCA technique in the Eigenface Algorithm stipulates that the number of selected eigenvectors (Eigenfaces) forming the face space must not be greater than the number of original face images. This is expressed mathematically as

$M(v) \le N(I)$

where $M(v)$ is the number of selected eigenvectors and $N(I)$ is the total number of vectors from the training set of images. This is explained further in the following algorithm:

- Let K = total number of original images
- Let M(v) = number of selected vectors
- Calculate the eigenvalues of the face images
- Calculate the corresponding eigenvectors
- Determine the maximum eigenvalue for each image
- FOR M(v) <= K DO
- Select the vector with the maximum eigenvalue for each image
- Group the vectors to form the face space
- Calculate the average of the vectors (i.e. the threshold value)

The details of the entire process are sub-divided into three basic stages, namely Pre-processing, Training and Recognition (as shown in Figures 2, 3 and 4 below).

| Figure 2. Pre-Processing Flowchart |

| Figure 3. Training of face images |

| Figure 4. Recognition |
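The design rule that M(v) must not exceed the number of training images can be illustrated with the sketch below. It assumes K flattened training images stacked as the rows of an array; the function name `select_components` is hypothetical. Because the mean face is subtracted first, the covariance of K images has at most K - 1 nonzero eigenvalues, so the K-th selected eigenvalue is already zero and selecting more vectors than images could add no information.

```python
import numpy as np

def select_components(faces, m):
    """Return the m largest covariance eigenvalues of K flattened faces.

    faces: array of shape (K, d), one training image per row.
    Raises ValueError when the rule M(v) <= K is violated.
    """
    K = faces.shape[0]
    if not 1 <= m <= K:
        raise ValueError(f"M(v) = {m} must satisfy 1 <= M(v) <= K = {K}")
    centered = faces - faces.mean(axis=0)
    # The d x d covariance shares its nonzero eigenvalues with this K x K matrix.
    eigvals = np.linalg.eigvalsh(centered @ centered.T / K)
    return eigvals[::-1][:m]                        # largest first
```

For five random 50-pixel "images", the first four returned eigenvalues are positive and the fifth is zero up to rounding error.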

5. System Evaluation

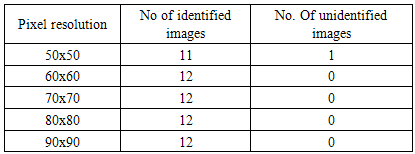

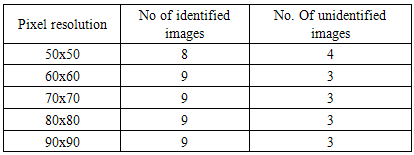

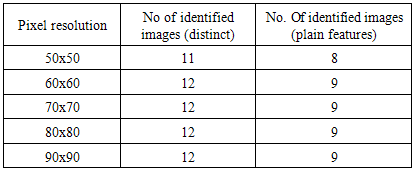

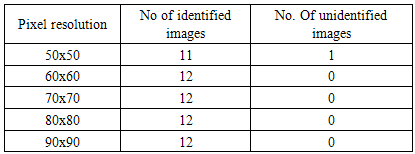

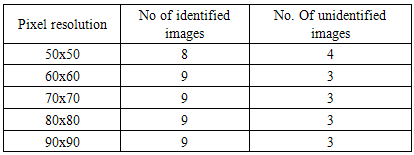

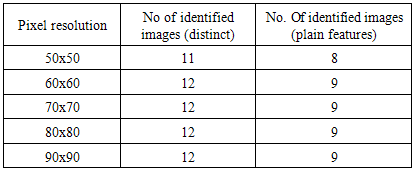

The evaluation aspect of this research work centers on two basic parameters, namely the recognition accuracy and the rate of execution of the PCA code. The same PCA code was run on the two sets of face images, with the same image dimensions and pixel resolutions, on the same computer, and the following deductions were made.

Recognition accuracy: Recognition is considered the most important evaluation parameter in this research because it addresses the exact function of the developed system, that is, the real task for which the system is being developed. Whether or not the system works fast, its ability to recognize faces takes precedence over any other parameter. It was observed (as shown by the results in Tables 1 and 2) that nearly all the test images were recognized when the code was run on the gallery of images with distinct features, while the same did not happen when the gallery of faces with plain features was tested. This shows that the performance of the PCA on the set of images with distinct features outweighs that on the set with plain features.

Table 1. Identification Results of PCA on Faces with distinct features


Table 2. Identification results of PCA on Faces with plain features


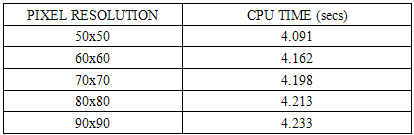

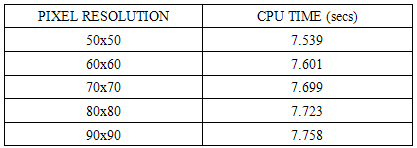

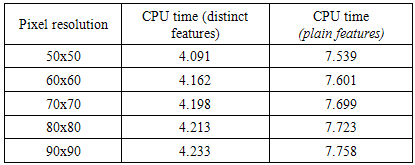

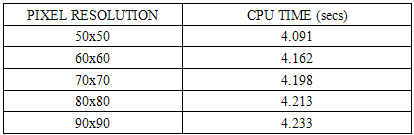

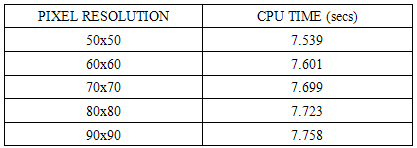

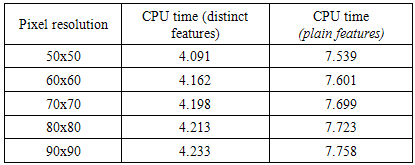
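The paper does not spell out how the identification figures in Tables 1 and 2 are computed. Assuming recognition accuracy is the percentage of test images whose predicted identity matches the true one, it could be calculated as in this minimal Python sketch; `recognition_rate` is a hypothetical helper, not part of the authors' code.

```python
def recognition_rate(predicted, expected):
    """Percentage of test images whose predicted label equals the true label."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return 100.0 * correct / len(expected)
```

For example, `recognition_rate(["s1", "s2", "s9", "s4"], ["s1", "s2", "s3", "s4"])` returns `75.0`, since three of the four test images are matched.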

Rate of Execution: The system’s execution (i.e. the running of the PCA code) on the gallery of faces with distinct features took less time to process and display the expected result than on the gallery of faces with plain features. In other words, faces with special features were recognized faster than faces with no special features, as shown in Tables 3 and 4.

Table 3. Estimated CPU Time on Faces with Distinct Features


Table 4. Estimated CPU Time on Faces with Plain Features

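Tables 3 and 4 report estimated CPU times. One way to obtain comparable figures is sketched below in Python, assuming the recognition routine can be called once per test image; `average_cpu_time` is a hypothetical helper. It uses `time.process_time()`, which counts only the CPU time of the current process; MATLAB's `cputime` function serves a similar purpose.

```python
import time

def average_cpu_time(func, inputs, repeats=1):
    """Average CPU seconds that func spends per input.

    time.process_time() counts CPU time of the current process only, so
    time spent sleeping or waiting on I/O is excluded from the measurement.
    """
    if not inputs or repeats < 1:
        raise ValueError("need at least one input and one repetition")
    start = time.process_time()
    for _ in range(repeats):
        for x in inputs:
            func(x)
    elapsed = time.process_time() - start
    return elapsed / (repeats * len(inputs))
```

Running the same helper for both galleries on the same machine, as the study did for its measurements, keeps the two averages comparable.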

5.1. General Deductions on the Evaluation Results

According to Turk and Pentland (1991), the Eigenface Algorithm centers on the aspect of face images called “the major images”. This refers to the actual portions of a particular face that give quick and reliable information for identifying the person in question. The existence of distinct features on the facial images employed here adds to the workability of the developed PCA code on such faces, not only in terms of identification but also in terms of the speed of the system. For instance, the percentage difference in the number of identified images, as shown in Table 5, is 25%. Also, the average CPU time taken to process and display the result is 4.1674 for face images with distinct features, while that of the other gallery is 7.664. This corresponds to a difference of about 45% in execution time (Table 6).

Table 5. Identification Results of the two sets of Images


Table 6. Estimated CPU Time on the sets of Images

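As a worked check of the 45% figure, taking the average CPU time on the plain-feature gallery as the baseline (the text states neither the baseline nor the time unit):

$$
\frac{\bar{t}_{\text{plain}} - \bar{t}_{\text{distinct}}}{\bar{t}_{\text{plain}}}
= \frac{7.664 - 4.1674}{7.664}
= \frac{3.4966}{7.664}
\approx 0.456
$$

That is, about 45.6%, which the text reports as 45%. Measured against the distinct-feature time instead, the same difference would be $3.4966 / 4.1674 \approx 0.839$, so the baseline matters when quoting the percentage.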

6. Conclusions

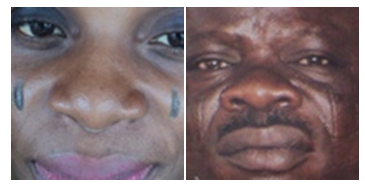

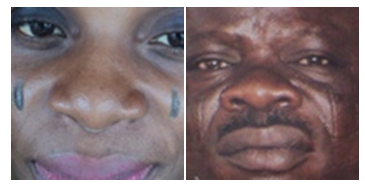

This study has shown that the Eigenface algorithm performs better in recognizing faces with distinct features than faces with plain features (see Figure 5). That is, it is easier and faster to identify a face with unique features than one without such features. Faces with plain features seem to be composed of closely related features and are thus likely to have closely related eigenvalues, making it harder to locate what is unique in such a set of images. A face with distinct features (see Figure 6), on the other hand, is made up of features that clearly differ from one another, which speeds up recognition. In short, the presence of distinct features on facial images aids their recognition using PCA.

| Figure 5. Plain-feature images |

| Figure 6. Distinct-feature images |

It can therefore be established that face images are better represented and recognized if they carry features that clearly distinguish them from others. This also corroborates the view that the higher the eigenvalue of a face image (i.e. the property that best represents the level of information contained in a particular vector form of the face), the more relevant its corresponding vector, and so the faster the recognition of such faces.
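The link between an eigenvalue and the amount of information its vector carries can be made concrete: in PCA, each eigenvalue of the covariance matrix equals the variance of the data projected onto the corresponding eigenvector. The minimal NumPy sketch below, with a hypothetical function name `explained_variance`, computes these eigenvalues and each one's share of the total variance; it illustrates the interpretation of eigenvalues, not the recognition results above.

```python
import numpy as np

def explained_variance(X):
    """Covariance eigenvalues (largest first), eigenvectors and variance shares.

    X: array of shape (n_samples, n_features), e.g. flattened face images.
    """
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / X.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)          # ascending order
    eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]
    return eigvals, eigvecs, eigvals / eigvals.sum()
```

A component whose share is close to zero contributes little to distinguishing the samples, which is why the Eigenface approach keeps only the components with the largest eigenvalues.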

References

| [1] | Bate, S. and Bennetts, R. (2014). The Rehabilitation of Face Recognition Impairments: A Critical Review and Future Directions. Frontiers in Human Neuroscience, 8:491. |

| [2] | Burton, M., Bruce, V. and Hancock, P. (1999). From Pixels to People: A Model of Familiar Face Recognition. Cognitive Science, Vol. 23(1), 1-31. |

| [3] | Lindsay, I.S. (2002). A Tutorial on Principal Components Analysis. http://www.facerec.org/. |

| [4] | Marian, S. B., Javier, R. M., and Terrence, J. S. (2002). Face Recognition by Independent Component Analysis. IEEE Transactions on Neural Networks, Vol. 13, No. 6, pp.1450-1463. |

| [5] | Sirovich, L. and Kirby, M. (1987). Low-dimensional Procedure for the Characterization of Human Faces. Journal of the Optical Society of America A, 4(3), 519-524. |

| [6] | Turk, M. and Pentland, A. (1991). Eigenfaces for Recognition. Journal of Cognitive Neuroscience, Vol. 3, No. 1, pp. 71-86. |

| [7] | Undrill, P.E. (1992). Digital Images: Processing and The Application of Transputer. ISBN 90 199 0715, pp.4-15, IOS B.V, Van Diemenstraat 1013, CN, Amsterdam. |
