
American Journal of Signal Processing

p-ISSN: 2165-9354 e-ISSN: 2165-9362

2013; 3(3): 41-48

doi:10.5923/j.ajsp.20130303.01

Evaluation of Random-Projection-Based Feature Combination on Dysarthric Speech Recognition

Toshiya Yoshioka, Tetsuya Takiguchi, Yasuo Ariki

Graduate School of System Informatics, Kobe University, 1-1 Rokkodai, Nada, Kobe, 6578501, Japan

Correspondence to: Toshiya Yoshioka, Graduate School of System Informatics, Kobe University, 1-1 Rokkodai, Nada, Kobe, 6578501, Japan.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

We investigated the speech recognition of persons with articulation disorders resulting from cerebral palsy. Their first utterance tends to be articulated unstably due to strain on the speech-related muscles, which degrades speech recognition. In this paper, we propose a feature extraction method based on RP (Random Projection) for dysarthric speech recognition. Random projection has been suggested as a means of space mapping, where the original data are projected onto a space using a random matrix. It is a computationally simple method that approximately preserves the Euclidean distance between any two points through the projection. Moreover, since we can produce many different random matrices, some of them may give better speech recognition accuracy than others. To obtain an optimal result from many random matrices, a vote-based combination is introduced in this paper: ROVER combination is applied to the recognition results obtained from the ASR (Automatic Speech Recognition) systems created from each RP-based feature. Its effectiveness is confirmed by word recognition experiments.

Keywords: Articulation Disorders, Speech Recognition, Random Projection, ROVER

Cite this paper: Toshiya Yoshioka, Tetsuya Takiguchi, Yasuo Ariki, Evaluation of Random-Projection-Based Feature Combination on Dysarthric Speech Recognition, American Journal of Signal Processing, Vol. 3 No. 3, 2013, pp. 41-48. doi: 10.5923/j.ajsp.20130303.01.


1. Introduction

- Recently, the importance of information technology in welfare-related fields has increased. For example, sign language recognition using image recognition technology [1][2][3], text-reading systems for natural scene images [4][5][6], and wearable speech synthesizers for voice disorders [7][8] have been studied. There are 34,000 people with speech impediments associated with articulation disorders in Japan alone, and it is hoped that speech recognition systems will one day be able to recognize their voices. One of the causes of speech impediments is cerebral palsy. Cerebral palsy results from damage to the central nervous system, and the damage causes movement disorders. The onset of the disorder is generally attributed to one of three periods: before birth, at the time of delivery, or after birth. Cerebral palsy is classified into the following types: 1) spastic, 2) athetoid, 3) ataxic, 4) atonic, 5) rigid, and a mixture of types [9].

In this paper, we focus on persons with articulation disorders resulting from the athetoid type of cerebral palsy. Athetoid symptoms develop in about 10-15% of cerebral palsy sufferers. In persons with this type of articulation disorder, the first movements are sometimes more unstable than usual. For speech-related movements, this means that the first utterance is often unstable or unclear due to the athetoid symptoms, which degrades speech recognition. Therefore, we recorded speech data from persons with articulation disorders who uttered each word several times, and investigated the influence of the unstable speaking style caused by the athetoid symptoms.

The goal of front-end speech processing in ASR is to obtain a projection of the speech signal onto a compact parameter space from which the information related to speech content can be extracted. In current speech recognition technology, MFCC (Mel-Frequency Cepstrum Coefficient) features are widely used.
This feature is derived uniquely from the mel-scale filter-bank output by the DCT (Discrete Cosine Transform). The low-order MFCCs account for the slowly changing spectral envelope, while the high-order ones describe the fast variations of the spectrum. Therefore, only a small number of low-order MFCCs is used for speech recognition, because we are interested in the spectral envelope, not in the fine structure. In [10], we proposed a robust feature extraction method for a dysarthric speech recognition task, based on PCA (Principal Component Analysis) computed from the more stable utterances instead of the DCT. Also, [11] used MAF (multiple acoustic frames) as an acoustic dynamic feature to improve the recognition rate of a person with articulation disorders, especially in speech recognition using dynamic features only. These methods improved the recognition accuracy, but the performance for persons with articulation disorders was still insufficient compared with that for persons with no disability.

Random projection has been suggested as a means of space mapping, where a projection matrix is composed of columns whose values are drawn at random from a probability distribution. In addition, the Euclidean distance between any two points is approximately preserved through the projection. Therefore, random projection has also been suggested as a means of dimensionality reduction [12]. In contrast to conventional techniques such as PCA, which find a subspace by optimizing certain criteria, random projection does not use such criteria; therefore, it is data independent. Moreover, it is a computationally simple and efficient method that preserves the structure of the data without introducing significant distortion [13]. Goel et al. [13] have reported that random projection has been applied to various types of problems, including information retrieval (e.g., [14]), image processing (e.g., [15][16]), and machine learning (e.g., [17][18][19]).
Although it is based on a simple idea, random projection has demonstrated good performance in a number of applications, yielding results comparable to those of conventional dimensionality reduction techniques such as PCA.

In this paper, we investigate the feasibility of random projection for speech feature transformation in order to improve the recognition rate of persons with articulation disorders. In our proposed method, the original speech features (MFCCs) are transformed using various random matrices, and the projected space has the same number of dimensions as the original space. Since we can produce many different RP-based features (one per random matrix), some random matrix may give better speech recognition accuracy than the others. Therefore, a vote-based combination method is introduced in order to obtain an optimal result from many (potentially infinite) random matrices, where ROVER combination [20] is applied to the results from the ASR systems created from each RP-based feature.

The rest of the paper is organized as follows. Section 2 describes a feature projection method using random orthogonal matrices. In Section 3, a vote-based combination method is explained. Results and discussion for the experiments on a dysarthric speech recognition task are given in Section 4. Section 5 concludes the paper with a summary of our proposed method, contribution, and future work.

2. Random Orthogonal Projection

- This section presents a feature projection method using random orthogonal matrices. The main idea of random projection arises from the Johnson-Lindenstrauss lemma [21]: if original data are projected onto a randomly selected subspace using a random matrix, then the distances between the data points are approximately preserved. Random projection is a simple yet powerful technique, and it has another benefit. Dasgupta [17] has reported that even if the distributions of the original data are highly skewed (have ellipsoidal contours of high eccentricity), their transformed counterparts will be more spherical.
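The distance-preservation property behind the Johnson-Lindenstrauss lemma can be checked numerically. The sketch below is a minimal illustration, not part of the proposed method: it uses synthetic data, reduces 1000 dimensions to 300 (whereas this paper keeps the dimension unchanged), and scales the Gaussian entries by 1/sqrt(k), a common convention that makes squared distances correct in expectation. The function names are hypothetical.

```python
import numpy as np


def gaussian_random_projection(X, k, seed=0):
    """Project the rows of X (m x n) onto k dimensions with a Gaussian random matrix.

    Entries are drawn from N(0, 1/k) so that squared distances are preserved
    in expectation (demo convention; the paper uses N(0, 1) plus orthonormalization).
    """
    rng = np.random.default_rng(seed)
    R = rng.normal(0.0, 1.0 / np.sqrt(k), size=(X.shape[1], k))
    return X @ R


def pairwise_distances(X):
    """Euclidean distance between every pair of rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


rng = np.random.default_rng(1)
X = rng.normal(size=(20, 1000))           # 20 synthetic points in 1000 dimensions
Y = gaussian_random_projection(X, k=300)  # the same points in 300 dimensions
iu = np.triu_indices(20, k=1)             # each unordered pair once, diagonal excluded
ratios = pairwise_distances(Y)[iu] / pairwise_distances(X)[iu]
print(f"distance ratios range from {ratios.min():.3f} to {ratios.max():.3f}")
```

The ratios cluster tightly around 1; shrinking `k` widens their spread, which is the trade-off the lemma quantifies.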

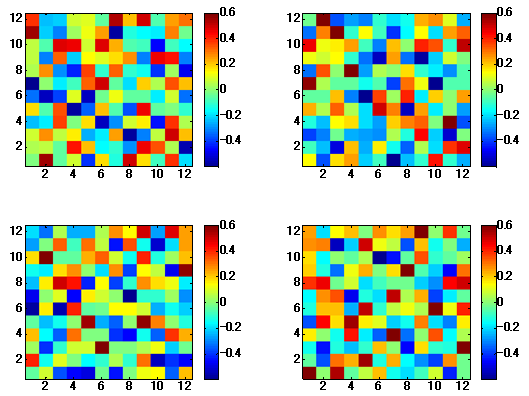

| Figure 1. Examples of 12-dimensional random matrices (12 × 12) |

- First, we choose an $n$-dimensional random vector, $\mathbf{r}_i$ ($i = 1, \ldots, d$), and let $R$ be the $n \times d$ matrix whose columns are the vectors $\mathbf{r}_i$. Then, an original $n$-dimensional vector, $\mathbf{x}$, is projected onto a $d$-dimensional subspace using the $n \times d$ random matrix, $R$: we compute a $d$-dimensional vector, $\mathbf{x}'$, whose coordinates are the inner products $x'_i = \mathbf{r}_i^{\top}\mathbf{x}$.

$$\mathbf{x}' = R^{\top}\mathbf{x} \tag{1}$$

The projection from $n$ dimensions to $d$ ($= n$) dimensions is thus represented by an $n \times d$ matrix, $R$. It has been shown that if the entries of the random matrix, $R$, are chosen from the standard normal distribution with mean 0 and variance 1, referred to as $N(0,1)$, then the projection preserves the structure of the data [21]. In this paper, we use $N(0,1)$ for the distribution of the coordinates. The random matrix, $R$, can be calculated using the following algorithm [13][17]:

● Choose each entry of the matrix as an independent and identically distributed (i.i.d.) $N(0,1)$ value.
● Orthogonalize the matrix using the Gram-Schmidt algorithm, normalizing each column to unit length.

Orthogonality is effective for feature extraction because the HMMs used in the speech recognition experiments use diagonal covariance matrices. Fig. 1 shows examples of random matrices drawn from $N(0,1)$.
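The two-step algorithm above can be sketched in NumPy as follows. This is an illustrative implementation under our own choices (the function names are hypothetical, and the modified Gram-Schmidt variant is used for numerical stability). With $d = n = 12$, as in this paper, $R$ is a square orthogonal matrix, so the projection of Eq. (1) preserves Euclidean distances exactly rather than approximately.

```python
import numpy as np


def random_orthogonal_matrix(n, d, seed=None):
    """n x d matrix with orthonormal columns (requires d <= n).

    Step 1: draw every entry i.i.d. from N(0, 1).
    Step 2: orthonormalize the columns with (modified) Gram-Schmidt.
    """
    if d > n:
        raise ValueError("at most n orthonormal columns exist in n dimensions")
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n, d))
    R = np.empty((n, d))
    for j in range(d):
        v = A[:, j].copy()
        for i in range(j):
            # Remove the component of v along each previously accepted column.
            v -= (R[:, i] @ v) * R[:, i]
        R[:, j] = v / np.linalg.norm(v)  # normalize to unit length
    return R


def project(x, R):
    """Eq. (1): x' = R^T x, i.e. x'_i is the inner product r_i^T x."""
    return R.T @ x


R = random_orthogonal_matrix(12, 12, seed=0)   # d = n = 12, as for 12-dim MFCCs
x, y = np.random.default_rng(1).standard_normal((2, 12))
print(np.allclose(R.T @ R, np.eye(12)))        # columns are orthonormal
print(np.isclose(np.linalg.norm(project(x, R) - project(y, R)),
                 np.linalg.norm(x - y)))       # distance is preserved
```

Calling `random_orthogonal_matrix` with different seeds yields the family of matrices $R_j$ used for the vote-based combination.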

3. Vote-Based Combination

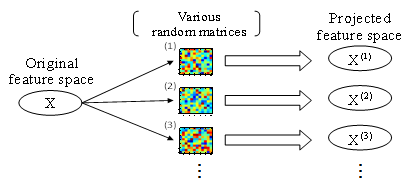

- As described in Section 2, we can make many (infinite) random matrices from

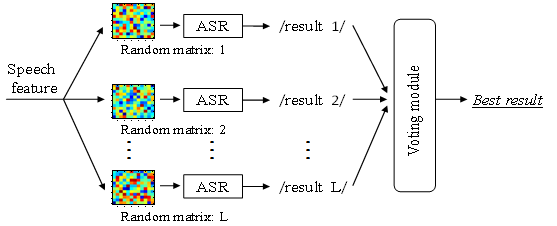

(Fig. 2). Since there may be some possibility of finding a random matrix that gives better performance, we will have to select the optimal matrix or the optimal recognition result from them. To obtain the optimal result, a majority vote-based combination is introduced in this paper, where ROVER combination is applied to the results from the ASR systems created from each RP-based feature.Fig. 3 shows an overview of the vote-based combination method. First, random matrices

(Fig. 2). Since there may be some possibility of finding a random matrix that gives better performance, we will have to select the optimal matrix or the optimal recognition result from them. To obtain the optimal result, a majority vote-based combination is introduced in this paper, where ROVER combination is applied to the results from the ASR systems created from each RP-based feature.Fig. 3 shows an overview of the vote-based combination method. First, random matrices  (

( ) are chosen from the standard normal distribution, with mean 0 and variance 1. Original speech features (MFCCs) are projected using each random matrix. An acoustic model corresponding to each random matrix is also trained. For the test utterance, using each acoustic model, an ASR system outputs the best scoring word by itself. To obtain a single hypothesis from among all the results for random projection, voting is performed by counting the number of occurrences of the best word for each RP-based feature.

) are chosen from the standard normal distribution, with mean 0 and variance 1. Original speech features (MFCCs) are projected using each random matrix. An acoustic model corresponding to each random matrix is also trained. For the test utterance, using each acoustic model, an ASR system outputs the best scoring word by itself. To obtain a single hypothesis from among all the results for random projection, voting is performed by counting the number of occurrences of the best word for each RP-based feature. | Figure 2. Random projection on the feature domain. An original feature is transformed to various features using various random matrices. (Eq. 1) |

| Figure 3. Overview of the vote-based combination |

- For example, when $l = 20$, 20 sets of new feature vectors are computed using 20 random matrices, and 20 acoustic models are trained on them. In the test process, the 20 acoustic models yield 20 recognition results, and voting over these 20 results produces a single hypothesis.
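Because each ASR system outputs a single best-scoring word for an isolated-word utterance, the voting step can be sketched as a frequency count over the $l$ hypotheses. This sketch assumes a tie-breaking rule (the tied word that appears first in the list wins) that the text does not specify, and it omits the confidence-based scoring options of full ROVER [20]. The function name and the example words are hypothetical.

```python
from collections import Counter


def vote(hypotheses):
    """Combine the best words output by the l RP-based ASR systems.

    Returns the word that occurs most often. Ties go to the tied word that
    appears first in the list (an assumed rule; the text does not give one).
    """
    if not hypotheses:
        raise ValueError("need at least one hypothesis")
    counts = Counter(hypotheses)
    top = max(counts.values())
    return next(word for word in hypotheses if counts[word] == top)


# Hypothetical outputs of l = 5 systems for one test utterance.
print(vote(["akegata", "akekata", "akegata", "agegata", "akegata"]))  # -> akegata
```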

4. Evaluation

4.1. Experimental Conditions

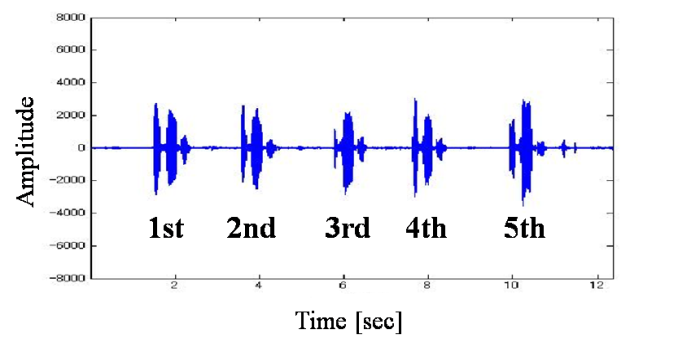

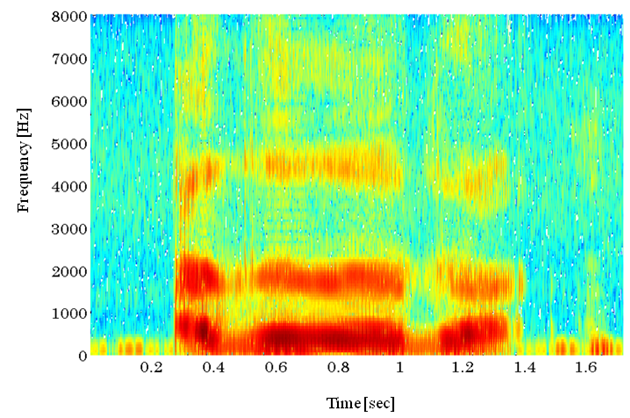

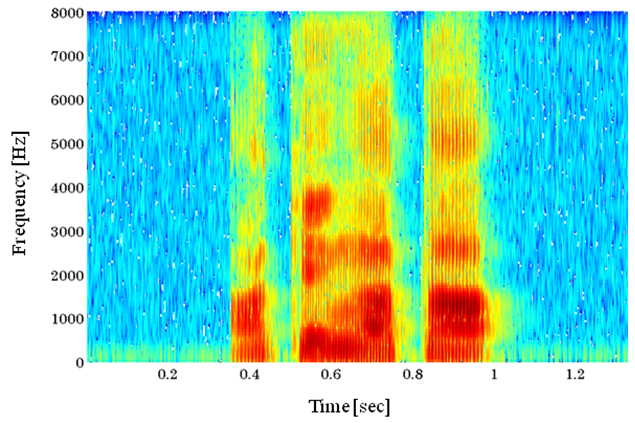

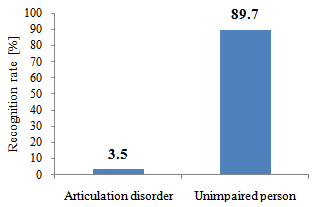

- The proposed method was evaluated on a word recognition task for three male speakers with articulation disorders (Speakers A, B, and C). For the experiments, we recorded, for each speaker, 210 words from the ATR Japanese speech database; each of the 210 words was repeated five times (Fig. 4). The speech signal was sampled at 16 kHz and windowed with a 25-msec Hamming window every 10 msec. Fig. 5 shows an example spectrogram of speech from a person with an articulation disorder, and Fig. 6 shows a spectrogram of the same word spoken by a physically unimpaired person.

| Figure 4. Example of recorded speech data |

| Figure 5. Example of a spectrogram from a person with an articulation disorder (/a k e g a t a/) |

| Figure 6. Example of a spectrogram from a physically unimpaired person (/a k e g a t a/) |

| Figure 7. Recognition results [%] for the speaker-independent model using training data uttered by unimpaired persons |
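The analysis conditions of Section 4.1 translate into sample counts as follows: at 16 kHz, a 25-msec window spans 400 samples and a 10-msec shift is 160 samples, so one second of audio yields 1 + floor((16000 - 400) / 160) = 98 full frames. The sketch below is a minimal illustration with a hypothetical function name; dropping trailing samples that do not fill a frame is our assumption, not a detail given in the text.

```python
import numpy as np

FS = 16_000               # sampling rate [Hz]
WIN = round(0.025 * FS)   # 25-msec window -> 400 samples
HOP = round(0.010 * FS)   # 10-msec shift  -> 160 samples


def frame_signal(signal, win=WIN, hop=HOP):
    """Split a 1-D signal into Hamming-windowed frames of `win` samples every `hop` samples.

    Trailing samples that do not fill a whole frame are dropped (an assumption).
    """
    signal = np.asarray(signal, dtype=float)
    if len(signal) < win:
        return np.empty((0, win))
    n_frames = 1 + (len(signal) - win) // hop
    starts = hop * np.arange(n_frames)
    idx = starts[:, None] + np.arange(win)[None, :]   # (n_frames, win) sample indices
    return signal[idx] * np.hamming(win)


frames = frame_signal(np.random.default_rng(0).standard_normal(FS))  # 1 s of noise
print(frames.shape)  # (98, 400)
```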

4.2. Experiment 1

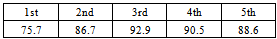

- In Experiment 1, recognition results were obtained for each utterance using speaker-dependent models. Each system was trained using 24-dimensional feature vectors consisting of 12-dimensional MFCC parameters along with their delta parameters. When recognizing the 1st utterance, the 2nd through 5th utterances were used for training; this process was repeated for each utterance, so that each of the five utterances served once as test data.
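The rotation in Experiment 1 is a leave-one-repetition-out scheme over the five repetitions of each word. A minimal sketch of the fold generation, using a hypothetical function name:

```python
def utterance_folds(n_repetitions=5):
    """Yield (test, train) repetition indices (1-based, as in the text).

    Each repetition is held out once for testing while the speaker-dependent
    model is trained on the remaining repetitions of every word.
    """
    reps = list(range(1, n_repetitions + 1))
    for test in reps:
        yield test, [r for r in reps if r != test]


for test, train in utterance_folds():
    print(f"test on utterance {test}, train on {train}")
# first line: test on utterance 1, train on [2, 3, 4, 5]
```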


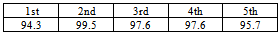

4.3. Experiment 2

- The aim of Experiment 2 is to evaluate the improvement introduced by the use of a RP-based feature projection method for the unstable 1st utterance. In the experiments, the following RP-based features were evaluated. Random projection is applied to MFCC at the

frame,

frame,  , and the new feature,

, and the new feature,  , is obtained:

, is obtained: | (2) |

. The final system feature dimensionality is 24 (

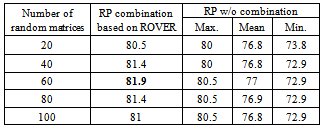

. The final system feature dimensionality is 24 ( ).We investigated the performance of random projections for various random matrices (l = 20, 40, 60, 80, and 100) sampled from

).We investigated the performance of random projections for various random matrices (l = 20, 40, 60, 80, and 100) sampled from  . Tables 4 ~ 6 show the recognition rate versus the number of random matrices for each speaker. The results of “RP w/o combination” show the maximums, means, and minimums obtained from each random projection without ROVER-based combination.

. Tables 4 ~ 6 show the recognition rate versus the number of random matrices for each speaker. The results of “RP w/o combination” show the maximums, means, and minimums obtained from each random projection without ROVER-based combination.
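Eq. (2) and the 24-dimensional feature can be sketched as follows. Two details are our assumptions, not statements from the text: the deltas use an HTK-style regression over ±2 frames, and they are computed from the projected coefficients (the text only gives the total dimensionality). The function names are hypothetical, and a random orthogonal $R$ is obtained here from NumPy's QR decomposition, which yields orthonormal columns as the Gram-Schmidt step in Section 2 does.

```python
import numpy as np


def deltas(feats, theta=2):
    """HTK-style regression deltas over +/-theta frames for feats of shape (T, D).

    theta = 2 is an assumption; edge frames are repeated to pad the sequence.
    """
    T = len(feats)
    padded = np.pad(feats, ((theta, theta), (0, 0)), mode="edge")
    num = sum(k * (padded[theta + k:theta + k + T] - padded[theta - k:theta - k + T])
              for k in range(1, theta + 1))
    return num / (2 * sum(k * k for k in range(1, theta + 1)))


def rp_features(mfcc, R):
    """Eq. (2) for every frame, c_hat_t = R^T c_t, plus appended deltas.

    mfcc: (T, 12) MFCC frames; R: (12, 12) random orthogonal matrix.
    Computing the deltas from the projected coefficients is an assumption.
    """
    c_hat = mfcc @ R          # row t equals (R^T c_t)^T
    return np.hstack([c_hat, deltas(c_hat)])


rng = np.random.default_rng(0)
R, _ = np.linalg.qr(rng.standard_normal((12, 12)))   # orthonormal columns
mfcc = rng.standard_normal((50, 12))                 # placeholder for real MFCCs
feats = rp_features(mfcc, R)
print(feats.shape)  # (50, 24)
```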


4.4. Experiment 3

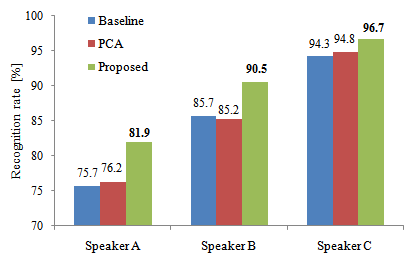

- To demonstrate the advantage of the RP-based feature projection method, in Experiment 3 we compared the proposed method with a PCA-based feature projection method. For Experiment 3, PCA was applied to the 12-dimensional MFCCs, and the new feature also included the delta coefficients of the MFCC features. The eigenvector matrix was computed for each speaker using the 2nd through 5th utterances (the more stable utterances). Fig. 8 compares the PCA-based feature projection method with our proposed method using the combination of 60 random matrices. We can see that the combination of random projection and ROVER outperforms both the baseline method (MFCCs) and the PCA-based feature extraction method. This result provides evidence of the improvement introduced by speech feature extraction based on random projection combined with ROVER to obtain an optimal result.

One possible reason why random projection improves the recognition rates may be that if the distributions of the original data are skewed (have ellipsoidal contours of high eccentricity), their transformed counterparts become more spherical [17]. However, some ‘bad’ projections degraded speech recognition accuracy compared with the original features (Tables 4 to 6). Therefore, more research is needed to investigate the effectiveness of the random projection method for speech features.

| Figure 8. Comparison between the PCA-based feature projection method and the results obtained by our proposed method for the 1st utterance |
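The PCA baseline of Experiment 3 can be sketched as an eigendecomposition of the MFCC covariance pooled from a speaker's 2nd through 5th utterances. This is an assumed reconstruction with a hypothetical function name: the text does not say whether the mean is removed before projection or how components are ordered, so the choices below (mean-centered covariance, components sorted by decreasing eigenvalue, all 12 retained) are illustrative.

```python
import numpy as np


def pca_matrix(train_frames):
    """Eigenvector matrix of the frame covariance of train_frames (N x D).

    Columns are sorted by decreasing eigenvalue; all D components are kept.
    """
    mean = train_frames.mean(axis=0)
    X = train_frames - mean
    cov = X.T @ X / (len(X) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return eigvecs[:, order], eigvals[order], mean


# Placeholder for 12-dim MFCC frames pooled from utterances 2-5 of one speaker.
rng = np.random.default_rng(0)
stable = rng.standard_normal((500, 12)) @ rng.standard_normal((12, 12))
V, variances, mean = pca_matrix(stable)
projected = (stable - mean) @ V       # decorrelated 12-dim features
```

Unlike the random matrices $R_j$, this matrix is fully determined by the training data, which is the data-dependence contrast drawn in Section 1.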

5. Conclusions

- As a result of this work, a method for recognizing dysarthric speech using RP-based features has been developed. The proposed method transforms conventional speech features such as MFCCs using various random matrices, and it introduces a vote-based combination method to obtain an optimal result from the ASR systems created from each RP-based feature. Word recognition experiments were conducted to evaluate the proposed method for three male speakers with articulation disorders. The results showed that all the recognition rates obtained with the proposed method were higher than the baseline rate (using MFCCs).

As future work, we will continue to investigate how to select the optimal basis vectors from such random matrices.

ACKNOWLEDGEMENTS

- This research was supported in part by MIC SCOPE.
