American Journal of Signal Processing

p-ISSN: 2165-9354 e-ISSN: 2165-9362

2012; 2(2): 1-9

doi: 10.5923/j.ajsp.20120202.01

User Psychology and Behavioral Effect on Palmprint and Fingerprint Based Multimodal Biometric System

Mustafa Mumtaz, Hassan Masood, Salah ud Din, Rafia Mumtaz

National University of Science and Technology, Sector H-12, Islamabad, Pakistan

Correspondence to: Mustafa Mumtaz, National University of Science and Technology, Sector H-12, Islamabad, Pakistan.

Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

The ever-increasing demand for security has resulted in the wide use of biometric systems. Although they overcome the problems of traditional verification, unimodal systems suffer from challenges such as intra-class variation and noise in the sensor data, which affect system performance. These problems are effectively handled by multimodal systems. User psychology has also been taken into consideration, since it strongly affects the eventual acceptability and collectability of a biometric among the masses. Desirable image quality is achieved through a stable and user-friendly image acquisition platform. In this paper, we present a multimodal approach for palmprints and fingerprints using feature level fusion and score level fusion (sum and product rules). The proposed multimodal systems are tested on a purpose-built database of 440 palmprints and 440 fingerprints from 55 individuals. In feature level fusion, the directional energy based feature vectors of the palmprint and fingerprint identifiers are combined into a joint feature vector, which is then used to identify the individual with a distance classifier. In score level fusion, the matching scores of the individual classifiers are fused by the sum and product rules. Receiver operating characteristic (ROC) curves are plotted for the unimodal and multimodal systems. Feature level fusion performs best, with an Equal Error Rate (EER) of 0.538%, compared with 0.6141% and 0.5482% for score level fusion by the sum and product rules, respectively. All multimodal systems significantly outperform the unimodal palmprint and fingerprint identifiers, whose EERs are 2.822% and 2.553%, respectively.

Keywords: User Psychology Index, Contourlet Transform, Distance Transform, Euclidean Distance Classifier

1. Introduction

- Biometrics as a subject encompasses any physical feature of the human body that exhibits characteristics such as universality, uniqueness, permanence, collectability, performance, user acceptability and circumvention[15]. While universality is generally satisfied by all normal humans, uniqueness of appearance is what makes a characteristic a strong candidate for inclusion in the biometric family. Together, these traits promise to form the core of a strong security system.

Modern networked society requires more reliable, high-level security for access and transaction systems. Traditional personal identity verification systems, e.g. token and password based systems, are easily breached when a password is disclosed or a card is stolen. They are not reliable enough to satisfy modern security requirements, because they cannot identify a fraudulent user who has illegally acquired the access privilege. The pronounced need for secure identity verification has turned the world's attention towards biometrics, which uses unique behavioral or physiological traits of individuals for recognition and therefore inherently has the capability of differentiating genuine users from impostors[4],[5]. These traits include palmprint, palm geometry, fingerprint, palm vein, finger-knuckle-print, face, retina, iris, voice, gait, signature and ear. Unimodal systems, which use a single biometric trait for recognition, suffer from several practical problems such as non-universality, noisy sensor data, intra-class variation, restricted degrees of freedom, unacceptable error rates, failure to enroll and spoof attacks[13].
Several studies have shown that, to address some of the problems faced by unimodal systems and to improve recognition performance, multiple sources of information can be consolidated to form multi-biometric systems[3],[9],[12]. A multi-biometric system can be developed using different approaches: (a) multi-sensor systems combine the evidence of different sensors for a single trait, (b) multi-algorithm systems process a single biometric modality with multiple algorithms, (c) multi-instance systems consolidate multiple instances of the same body trait, (d) multi-sample systems use multiple samples of the same biometric modality acquired with a single sensor, and (e) multimodal systems fuse the information of different biometric traits of the individual to establish identity[13].

A multimodal system can fuse information from different biometric modalities either before classification, i.e. at the feature extraction level, or after classification, i.e. at the matching score level or the decision level[6],[10],[13]. In feature level fusion, feature vectors extracted from different biometric modalities are combined and subsequently used for classification[8]. Score level fusion combines the matching scores originating from the classifiers of the various modalities, and a classification decision is made by comparing the fused score with a threshold. In decision level fusion, the final outputs of the different classifiers are fused using techniques such as Bayesian decision fusion[12].

In this paper, we present a multimodal system based on feature level and score level fusion of our previously reported palmprint and fingerprint identifiers[1],[14]. The unimodal palmprint and fingerprint identification systems use directional energies of texture, extracted with the contourlet transform, as features.
The rest of the paper is organized as follows: Section 2 briefly describes the unimodal palmprint and fingerprint systems, followed by the importance of image quality and the effect of user psychology on image acquisition in Section 3. Feature extraction for palmprints and fingerprints is discussed in Section 4, followed by feature and score level fusion of the palmprint and fingerprint identifiers for the multimodal system in Section 5. The details of the experiments and results are given in Section 6, and the paper is concluded in Section 7.

2. Unimodal Biometric Identifiers

2.1. Palmprint Identifier

2.1.1. Development of Image Acquisition Platform

- Human recognition is a compromise between the behavioral and physiological features of the human body and the contextual information of the environment[16]. The more sophisticated the acquisition hardware, the more accurate the registration/enrolment and hence the final outcome. Dedicated hardware is often thought to have a high maintenance cost[16]; however, sophisticated hardware removes the need for lengthy programming to compensate for artifacts, out-of-focus images, motion blur and unwanted clutter. Moreover, it decreases the failure-to-enrol rate, a failure that users regard as highly offensive.

We have indigenously developed an image acquisition device, shown in Fig. 1. It is an enclosed rectangular box painted matte black on the inside to suppress light reflection. Two plates are placed inside the box: the upper plate holds the camera, whereas the palm rests on the lower one. Illumination is provided by a white circular light attached to the upper plate. The distance between the plates was set to 14 inches after empirical testing.

Any change in the image acquisition setup, in terms of capturing device or scale, causes a large variation in the final image, since each camera has its own intrinsic and extrinsic parameters that affect the detail captured in the image. Secondly, varying the scale changes not only the resolution, which controls pixel density, but also the field of view of the camera. Images, whether intra-class or inter-class, would then differ considerably in visual appearance. Therefore, by keeping the same capturing device and scale, the parameters affecting efficient and robust image registration are kept to a minimum.

2.1.2. Source of Illumination

- Lighting can enhance or suppress important features in an image[17]. The source of illumination is a primary factor, since variation in illumination alters the appearance of the image to a great extent, and illumination cannot be kept exactly constant every time an image is acquired. Artificial illumination for image acquisition uses either hard light or soft light. Hard light gives an object sharp-edged shadows and clearly demarcates it from the background; it is most often used in spotlights and the fashion industry. Soft light, on the other hand, illuminates an area uniformly and thus produces blended shadows[18]. It is more suitable for biometric applications, since it uniformly illuminates the area of interest. We used a soft circular light attached to the upper plate of the image acquisition device.

| Figure 1. Image Acquisition platform for Palmprint |

2.1.3. Image Acquisition

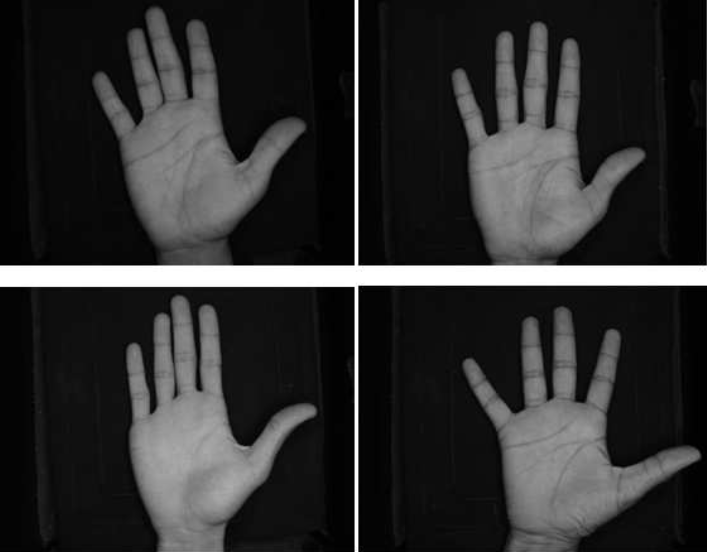

- We collected intra-class images in three sessions at different times. Incorporating such measures into our indigenously developed platform before the second and third acquisition sessions led to a decline in both the "failure to enroll rate" and the "failure to acquire rate". These rates were initially moderate, since users were not yet habituated to the system. The third session was conducted in an unsupervised, friendly environment and showed marked improvement in user confidence, attitude and cooperation, and eventually in the results of the system. Familiarity with the developed system drew cooperation and reduced the above-mentioned rates to a minimum.

The images taken during the first session showed more variance in stretch and pose, whereas the second session showed more regularity. The third session showed further uniformity and discipline among intra-class images, since by then most users had developed a sound ergonomic sense of the image acquisition platform; users were habituated and no image with an awkward pose was observed. The more habituated or familiar users are with the biometric modality, the better the results. Accustomed users contribute significantly to lower false reject rates and thus to less user irritation. In [19], the crossover point, or equal error point, is advocated as an indication of user behavior, and our results support this. User psychology is a vital element, since biometrics involves a very close interaction between the template-matching machine and the subject. The users' approach changed from that of a casual user to that of a practiced, professional one, which helped suppress the "Fear of Rejection Syndrome"[20].

Ten images from each of 50 male individuals were collected, giving a total of 500 images in the experimental dataset. The ages of the individuals range from 22 to 56 years, with a high percentage between 22 and 25 years. A SONY DSC-W35 Cyber-shot camera was used to image the palmprints, and the obtained images have a low resolution of 72 dpi. Some of the images from our database are shown in Fig. 2.

| Figure 2. Different Palm images from our indigenous Database |

2.2. Fingerprint Identifier

- Our work on a fingerprint identification system using the contourlet transform is reported in the literature[14]. A Digital Persona fingerprint scanner is used to capture the fingerprints of individuals. A region of interest (ROI) of 128 × 128 pixels is extracted from the input image, and the contourlet transform is used for its textural analysis. Directional filter banks (DFBs) partition the 2-D spectrum into fine directional slices. To form the feature vector of an input fingerprint, directional energy values are calculated for each sub-block from the decomposed sub-band outputs at different resolutions. The fingerprint feature set captures the core and delta points along with the ridge and valley orientations, which have strong directionality. A Euclidean distance classifier is employed for fingerprint matching, and an adaptive majority vote algorithm is employed to further improve the matching criteria.

3. Image Quality and Biometrics

- Image quality is of prime importance in recognition/authentication algorithms[16]. Specularity of the skin and background reflection affect the overall appearance of the image and are among the vital causes of visual variance among intra-class images. Although a universal image quality measure is not possible, high-quality images help discriminate between inter-class images besides significantly reducing the variance among intra-class images[21]. Considerable research has been done on image quality assessment algorithms for face, iris[22] and fingerprint recognition[23].

Image quality depends on the capturing or acquisition device (digital camera), the acquisition process and the algorithm's capability to remove noise. To the naked eye, clarity of ridges, wrinkles and principal lines is the hallmark of a high-quality image. Researchers have also used image enhancement techniques to increase image quality before feature extraction[24],[25],[26], where normalization and histogram fitting were applied to compensate for inadvertent lighting variations.

Texture analysis of palmprints is sensitive to image enhancement, since such methods do not guarantee to preserve the integrity of wrinkles, minutiae and ridges in the palmprint. Many researchers in palmprint analysis have emphasized the utility of low resolution images in texture analysis, as noted in the literature. We concur: resolution and dpi are not the criteria of a high-quality image in biometrics, as such parameters add more to visual appeal than to recognition value. In biometrics, it is the truthfulness of the information reflected in the pixels that defines ultimate image quality. The truer or purer the image, the lower the intra-class variance.

A small blur, an out-of-focus palm or motion noise may render the image useless for texture analysis. We have not applied any image enhancement after extracting the ROI; the ROI is passed directly to feature extraction. No image enhancement technique can compensate for motion blur or a de-focused image without affecting the truthfulness of the pixels. As argued in our previous research on a peg-free system[27]-[29], even a slight image rotation changes the original image parameters and induces a certain blur, which in turn degrades system performance. To obtain a pure image, we have endeavored to improve the process, the subjects and the capturing device before image acquisition. Each of these is described in the subsequent sections.

3.1. User Training and Effect of Human Factor on Performance Parameters

- The next consideration before image acquisition is user training. Biometric systems do not work at peak efficiency until the user has been trained, both mentally and physically, in the use of the particular system[30]. User training has proven to be a key factor in improving the performance parameters of any biometric system[31]. The problem of pose variation is greatly reduced once the user knows how to use the system; otherwise the resulting image contains a large amount of inherent rotation and translation, requiring complex compensation logic in software.

Though biometrics is reputed to raise issues such as violation of physical privacy, invasiveness and religious concerns[32], there is no denying that the modern world demands an accurate and reliable identification/verification solution, and its hopes are pinned on biometrics. It must be noted that no biometric system is spoof-free[33], and none easily attracts user cooperation. A non-cooperative user is a major hurdle to the success of any biometric modality, as he/she increases the probability of false rejection and spoofing[34].

The user is a critical tier in the selection, use and deployment of a biometric modality and can heavily affect the results if he/she is not accustomed, acclimatized and familiarized with the biometric security system. So large is this impact that, in recent times, the "User Psychology Index"[20] has been sought as an essential performance parameter for the eventual deployment of a particular biometric modality. Since we have used palmprint as our biometric modality and have employed a peg-free system for user comfort, the trade-off has favored the user's convenience, whereas the accuracy of a biometric system most often comes at the cost of user inconvenience[35]. We believe that no user is inherently non-cooperative, since people have accepted security measures as part of their routine.

We used an overt biometric system, in which the subject was made aware that his/her palmprint was being analyzed to contribute to the development of a security system. Each user was given the feel of a "team-man", which significantly changed his attitude towards the system. Self-esteem and privacy concerns were also taken care of: people with problems such as arthritis and amputation were not invited to the image acquisition sessions. Moreover, users were advised to avoid the system when fresh out of a swim or wash, owing to the shriveling of the fingers and the soft palm skin, so as to avoid false rejection and the subsequent irritation and embarrassment. User privacy concerns[36],[37] and security rights[32],[38], such as the fear of being tracked, were also duly addressed. The developed system inherently provides an acceptable level of hygiene, as users touch the platform only with the back of the hand while the inner surface of the palm remains contactless, facing the distant camera.

4. Feature Extraction

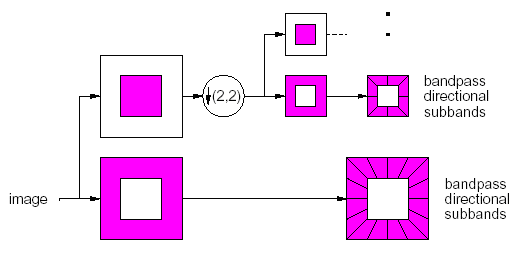

4.1. Contourlet Transform

- The contourlet, a discrete transform proposed by Minh Do and Martin Vetterli[39],[40], can efficiently handle intrinsic geometrical structures containing contours. It provides a sparse representation at both spatial and directional resolutions. Additionally, it offers a flexible multiresolution and directional decomposition, allowing a different number of directions at each scale with flexible aspect ratios. The contourlet transform uses a structure similar to that of curvelets[41],[42]. Fig. 3 shows its double filter bank structure: a Laplacian pyramid captures point discontinuities, and a directional filter bank then links the point discontinuities into linear structures. The contourlet transform satisfies the anisotropy scaling relation for curves by doubling the number of directions at every finer scale of the pyramid. Reconstruction is perfect, and the transform is almost critically sampled, with a small redundancy factor of up to 4/3[40].
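The Laplacian pyramid stage described above can be illustrated with a minimal NumPy sketch. It is not the filter design of Do and Vetterli: as a simplifying assumption it uses 2 × 2 block averaging for decimation and nearest-neighbour upsampling, and it omits the directional filter bank entirely. It does show the two pyramid properties quoted above, perfect reconstruction and a redundancy below 4/3 (the directional filter bank is critically sampled, so the pyramid is the source of the redundancy). All function names are illustrative only.

```python
import numpy as np

def downsample(img):
    """Average each 2x2 block (a crude low-pass filter followed by decimation)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(img):
    """Nearest-neighbour interpolation back to twice the size."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

def laplacian_pyramid(img, levels):
    """Return [detail_1, ..., detail_L, coarse] for a square power-of-two image."""
    bands = []
    current = img.astype(float)
    for _ in range(levels):
        coarse = downsample(current)
        bands.append(current - upsample(coarse))  # band-pass detail (point discontinuities)
        current = coarse
    bands.append(current)  # final low-pass residual
    return bands

def reconstruct(bands):
    """Invert the pyramid: upsample the coarse image and add back each detail band."""
    current = bands[-1]
    for detail in reversed(bands[:-1]):
        current = upsample(current) + detail
    return current

rng = np.random.default_rng(0)
roi = rng.integers(0, 256, size=(256, 256)).astype(float)  # stand-in for a 256 x 256 ROI
bands = laplacian_pyramid(roi, levels=4)
rebuilt = reconstruct(bands)
redundancy = sum(b.size for b in bands) / roi.size
print(np.allclose(rebuilt, roi), round(redundancy, 4))  # True 1.332
```

With four levels the coefficient count is (256² + 128² + 64² + 32² + 16²) / 256², which approaches but never reaches 4/3 as more levels are added.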

| Figure 3. Contourlet structure with multiscale decomposition and directional subbands[39] |

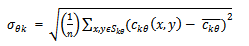

4.2. Proposed Algorithm for Palmprint

- The extracted ROI is decomposed into sub-bands at four different resolution levels. At each resolution level k, the ROI is decomposed into $2^n$ sub-bands, where n is the order of the directional filter. Fig. 4 gives a pictorial view of the decomposition at only three levels to keep the figure simple. The highest resolution level (level 1) corresponds to the actual size of the ROI, i.e. 256 × 256. The side length is halved by subsampling at each subsequent level, giving an ROI of 128 × 128 ($2^7 \times 2^7$) at level 2, and 64 × 64 and 32 × 32 at levels 3 and 4, respectively. We empirically chose to apply a 5th order filter at resolution level 1, giving a total of 32 sub-bands. Applying a 4th order filter at resolution level 2 yields 16 sub-band outputs. Similarly, resolution levels 3 and 4 give 8 and 4 sub-bands, respectively. As a result, a 60-valued feature vector (32 + 16 + 8 + 4) is obtained by computing the directional energies in the respective sub-bands.
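The sub-band bookkeeping above can be checked with a few lines of Python. The filter orders and ROI size are taken from the text; the loop simply assumes that a directional filter of order n yields 2^n sub-bands and that the ROI side is halved at each level.

```python
# Directional filter order at each resolution level (level 1 = finest), per Section 4.2.
filter_orders = {1: 5, 2: 4, 3: 3, 4: 2}
roi_side = 256  # level-1 ROI is 256 x 256

sides, counts = [], []
for level, order in filter_orders.items():
    side = roi_side // 2 ** (level - 1)  # side length halves at each coarser level
    n_subbands = 2 ** order              # an order-n directional filter gives 2**n sub-bands
    sides.append(side)
    counts.append(n_subbands)
    print(f"level {level}: {side}x{side}, {n_subbands} directional sub-bands")

total = sum(counts)
print("feature vector length:", total)  # 32 + 16 + 8 + 4 = 60
```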

| Figure 4. Subband Decomposition at three resolution levels of Palmprint |

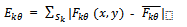

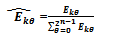

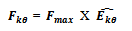

The energy value $E_{k\theta}$ in directional sub-band θ at the k-th resolution level is given by:

$$E_{k\theta} = \sum_{x}\sum_{y}\left|C_{k\theta}(x,y) - \bar{C}_{k\theta}\right| \qquad (1)$$

where $\bar{C}_{k\theta}$ is the mean of the pixel values of $C_{k\theta}$ in the sub-band and $C_{k\theta}(x,y)$ is the contourlet coefficient value at position (x,y). The directional sub-bands θ vary from 0 to $2^{n}-1$, where n is the order of the directional filter at that level. The normalized energy value $\hat{E}_{k\theta}$ of sub-band θ at the k-th resolution level is defined as:

$$\hat{E}_{k\theta} = \frac{E_{k\theta}}{\sum_{\theta} E_{k\theta}} \qquad (2)$$

Taking the scaling constant Q equal to the maximum intensity level of 255, the feature value $V_{k\theta}$ is calculated as:

$$V_{k\theta} = Q \cdot \hat{E}_{k\theta} \qquad (3)$$
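A minimal NumPy sketch of the palmprint features of Eqs. (1)-(3) follows. It assumes that Eq. (1) sums the absolute deviations of the coefficients from the sub-band mean, that Eq. (2) divides each energy by the total energy of all sub-bands at the same level, and that Eq. (3) scales the normalized energy by Q = 255. Real coefficients would come from a contourlet implementation, which is not shown; random arrays stand in for them, and all function names are hypothetical.

```python
import numpy as np

Q = 255  # scaling constant: the maximum 8-bit intensity level

def directional_energy(coeffs):
    """Eq. (1), assumed form: sum over (x, y) of |C(x, y) - mean(C)|."""
    return np.abs(coeffs - coeffs.mean()).sum()

def level_features(subbands):
    """Eqs. (2)-(3): normalise by the level's total energy, then scale by Q."""
    energies = np.array([directional_energy(c) for c in subbands])
    total = energies.sum()
    if total == 0:  # perfectly flat input: avoid division by zero
        return np.zeros_like(energies)
    return Q * energies / total

def palm_feature_vector(levels):
    """Concatenate the features of all levels (each level is a list of 2-D arrays)."""
    return np.concatenate([level_features(subbands) for subbands in levels])

# Stand-in coefficients: 2**order sub-bands per level for filter orders 5, 4, 3, 2.
rng = np.random.default_rng(1)
levels = [[rng.normal(size=(16, 16)) for _ in range(2 ** order)] for order in (5, 4, 3, 2)]
features = palm_feature_vector(levels)
print(features.shape)  # (60,)
```

Because of the per-level normalization, the features of each level sum to 255, so the vector describes how energy is distributed over directions rather than its absolute magnitude.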

4.3. Proposed Algorithm for Fingerprint

- Our work on a fingerprint identification system is reported in the literature[14]. A Digital Persona "U 4000-B" fingerprint scanner is used to capture the fingerprints of individuals. A total of 440 fingerprints of 55 individuals are stored. Each image is 512 × 460 pixels in size, and the scanner outputs an 8-bit grayscale image. The image acquisition software was developed on the Java platform. The input image is pre-processed using histogram equalization, adaptive thresholding, Fourier transform and adaptive binarization. A pre-processed fingerprint is shown in Fig. 5.
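Of the pre-processing steps listed above, global histogram equalization is the simplest to illustrate. The sketch below is a standard textbook formulation for an 8-bit grayscale image, not necessarily the one used in the authors' Java software; adaptive thresholding, Fourier-domain enhancement and binarization are not shown, and the function name is hypothetical.

```python
import numpy as np

def equalize_histogram(img):
    """Global histogram equalisation of an 8-bit grayscale image (uint8 array)."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]      # cumulative count at the darkest occupied level
    if cdf_min == img.size:        # constant image: nothing to stretch
        return img.copy()
    # Map each grey level through the normalised CDF onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (img.size - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

# A low-contrast patch using only grey levels 100..139.
patch = np.arange(100, 140, dtype=np.uint8).reshape(5, 8)
out = equalize_histogram(patch)
print(out.min(), out.max())  # 0 255
```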

| Figure 5. (a) Acquired Image (b) Enhanced Image after pre-processing |

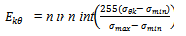

| (4) |

| (5) |

are the maximum and minimum standard deviation values for a particular sub-block. N is the number of pixels in sub-band

are the maximum and minimum standard deviation values for a particular sub-block. N is the number of pixels in sub-band .

.  is the mean of contourlet coefficients

is the mean of contourlet coefficients  in the sub-band block

in the sub-band block  . The normalized energy for each block is computed as:

. The normalized energy for each block is computed as: | (6) |

represents directional energy of sub-band θ at k level and

represents directional energy of sub-band θ at k level and  represents total directional energy of all sub-block at k level, while E is the normalized energy. Feature set for fingerprint comprises of core and delta points along with the ridge and valley orientations which have strong directionality. Euclidian distance classifier is finally employed for fingerprint matching .

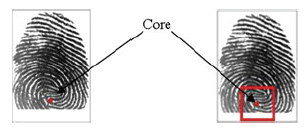

represents total directional energy of all sub-block at k level, while E is the normalized energy. Feature set for fingerprint comprises of core and delta points along with the ridge and valley orientations which have strong directionality. Euclidian distance classifier is finally employed for fingerprint matching . | Figure 6. Core points located at extreme margins of the image |

5. Feature and Score Level Fusion

5.1. Feature Level Fusion

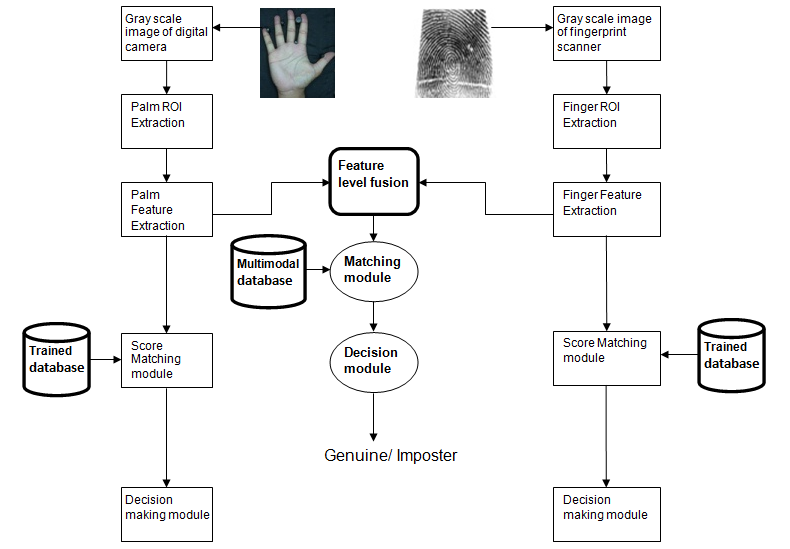

- Figure 7 depicts the basic methodology for feature level fusion in the multimodal system based on palmprints and fingerprints. The joint feature vector is matched against the stored multimodal database in the matching module, which consists of a Euclidean classifier. Depending on the threshold, the decision module declares the result as genuine or impostor. Similarly, for the unimodal identifiers, the extracted features are matched against the respective database using a Euclidean classifier in the matching module, followed by a decision in the decision module based on the selected threshold. For feature level fusion, the palmprint and fingerprint feature vectors are concatenated to form a combined feature vector, similar to Kumar and Zhang[7]. Let $P = \{p_1, p_2, \ldots, p_m\}$ and $F = \{f_1, f_2, \ldots, f_n\}$ represent the feature vectors containing the information extracted from the palmprint and fingerprint, respectively. The objective is to combine these two feature sets into a joint feature vector, $JFV = \{p_1, \ldots, p_m, f_1, \ldots, f_n\}$. Feature sets normally have to be normalized before they can be combined; here, the compatibility problem is overcome inherently, since the feature vectors of both the palmprint and fingerprint identifiers consist of normalized energy values, which eliminates the need for normalizing the feature sets. The 60 feature values of the palmprint are concatenated with the 124 feature values of the fingerprint to give a JFV of 184 feature values representing the same individual. JFVs are generated and stored to form the multimodal database, which is subsequently used for identification and verification.
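Serial concatenation followed by Euclidean-distance matching can be sketched as below. The threshold value and the toy vectors are hypothetical; as the text argues, no per-feature normalization is applied, because both modalities already yield normalized energy values.

```python
import numpy as np

def joint_feature_vector(palm, finger):
    """Serial concatenation: JFV = [p_1, ..., p_m, f_1, ..., f_n]."""
    return np.concatenate([np.asarray(palm, dtype=float), np.asarray(finger, dtype=float)])

def verify(probe, template, threshold):
    """Euclidean distance between two JFVs, then a threshold decision."""
    distance = float(np.linalg.norm(probe - template))
    return distance, ("genuine" if distance <= threshold else "impostor")

# Toy example with m = 3 palm features and n = 2 finger features.
enrolled = joint_feature_vector([10.0, 20.0, 30.0], [40.0, 50.0])
probe = joint_feature_vector([10.0, 21.0, 30.0], [40.0, 50.0])
print(verify(probe, enrolled, threshold=5.0))  # (1.0, 'genuine')
```

In identification mode the same distance would be computed against every stored JFV and the closest template taken as the candidate identity.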

5.2. Score Level Fusion

- Fusion at score level requires matching scores generated by comparing the input test image with the trained database[13]. The palmprint and fingerprint feature vectors are compared with their respective databases using a normalized Euclidean distance classifier to generate the matching scores. These scores contain less information than the feature vectors. Before fusion, scores should normally be normalized to a common scale. As normalized energy values are used in both the palmprint and fingerprint systems, the generated scores are already on a common scale, which eliminates the need for a score normalization technique. Palm and finger scores are combined using two rules: the sum rule and the product rule[6].

5.2.1. Sum Rule

- According to the sum rule, the scores of the palmprint and fingerprint input images are added together to yield a new set of values. The new set of values combines the information of both unimodal systems, giving more information with which to identify a person. Finally, the decision on the input claim is made by the classifier on the basis of a preset threshold. Suppose $P = \{p_1, p_2, \ldots, p_K\}$ and $F = \{f_1, f_2, \ldots, f_K\}$ are the scores of the palm and finger images, respectively. Then, according to the sum rule, the combined score vector $S = \{s_1, s_2, \ldots, s_K\}$ is obtained as:

$$s_k = p_k + f_k, \qquad k = 1, 2, \ldots, K$$

Here, $K$ is the total number of scores generated for the test image against the trained database, and $s_k$ is the combined score used for decision making.

5.2.2. Product Rule

- Under the product rule, the scores of the palmprint and fingerprint images are multiplied together to produce a new set of values combining both systems. Suppose $P = \{p_1, p_2, \ldots, p_K\}$ and $F = \{f_1, f_2, \ldots, f_K\}$ are the scores of the palm and finger images, respectively. Then, according to the product rule, the combined score vector $S = \{s_1, s_2, \ldots, s_K\}$ is obtained as:

$$s_k = p_k \times f_k, \qquad k = 1, 2, \ldots, K$$

Here, $K$ is the total number of scores generated for the test image against the trained database, and $s_k$ is the combined score used for decision making.
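Both fusion rules reduce to element-wise operations on the two score vectors. The sketch below assumes, as with the distance scores described for score level fusion, that a lower fused score indicates a better match; the score values and the function name are made up for illustration.

```python
import numpy as np

def fuse_scores(palm_scores, finger_scores, rule="sum"):
    """Combine matching scores p_k and f_k into s_k by the sum or product rule."""
    p = np.asarray(palm_scores, dtype=float)
    f = np.asarray(finger_scores, dtype=float)
    if p.shape != f.shape:
        raise ValueError("both modalities must be scored against the same K templates")
    if rule == "sum":
        return p + f
    if rule == "product":
        return p * f
    raise ValueError(f"unknown rule: {rule!r}")

# Hypothetical distances of one probe to K = 3 enrolled templates.
palm = [0.2, 0.9, 0.5]
finger = [0.3, 0.8, 0.1]
s_sum = fuse_scores(palm, finger, "sum")       # approximately [0.5, 1.7, 0.6]
s_prod = fuse_scores(palm, finger, "product")  # approximately [0.06, 0.72, 0.05]
print(int(np.argmin(s_sum)), int(np.argmin(s_prod)))  # 0 2
```

With these numbers the two rules select different templates: the product rule is dominated by the single very small finger score of template 2, whereas the sum rule favors template 0, whose two scores are both moderately low.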

6. Experiments and Results

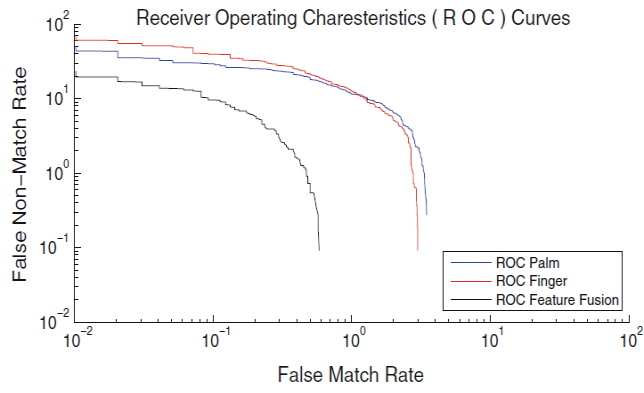

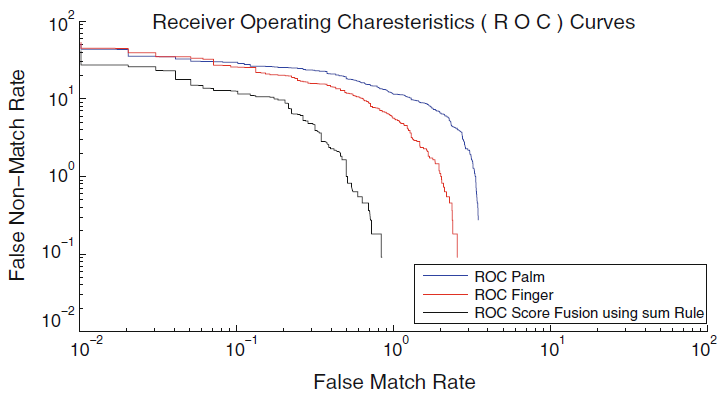

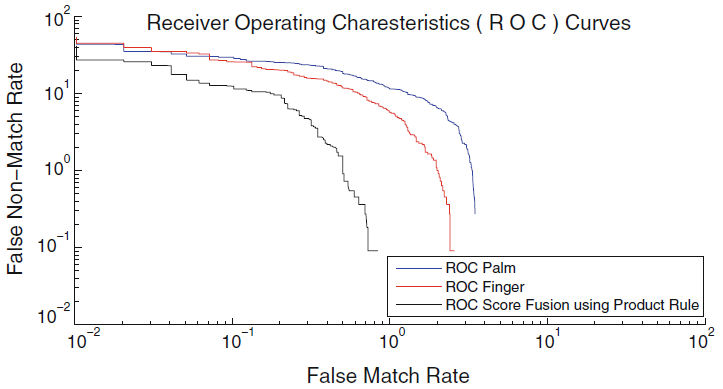

- Fingerprint images were collected using the Digital Persona 4000B fingerprint scanner, and palmprints with the developed platform. A database of palm and finger images of 55 individuals has been constructed. Sixteen prints were collected from each individual, with 8 records per biometric modality. The multimodal database thus consists of 16 × 55 = 880 records: 440 palmprint and 440 fingerprint records. The database was developed in two sessions with an average interval of two months, in order to focus on the performance of the developed multimodal system. User training was conducted before the data acquisition phase for both palmprints and fingerprints. In our experiments, the database is divided into two non-overlapping sets, training and validation, of 440 images each (220 per modality). The palmprint and fingerprint based multimodal system is implemented in Matlab on a PC with a 2.0 GHz Intel Core Duo processor and 3.0 GB RAM. The training set is first used to train the system and to determine the threshold. The validation set is then used to evaluate the performance of the trained system. System performance is recorded in terms of the False Acceptance Rate (FAR), False Rejection Rate (FRR) and Equal Error Rate (EER), and the results are plotted as Receiver Operating Characteristic (ROC) curves.

Figure 8 shows the ROC curve of the proposed multimodal system using feature level fusion in comparison with the unimodal palmprint and fingerprint systems. Figures 9 and 10 give the ROC curves for score level fusion by the sum and product rules, respectively, in comparison with the unimodal palmprint and fingerprint systems. The ROC curves show that the multimodal systems perform better than the individual unimodal systems.

Table 1 compares the Equal Error Rates of the multimodal systems with those of the unimodal systems. The EER of the feature level fused system is 0.5380%, while those of score level fusion with the sum and product rules are 0.6141% and 0.5482%, respectively. The EERs of the multimodal systems are far lower than those of the individual palmprint (2.8224%) and fingerprint (2.5533%) identifiers, a clear improvement in performance over the unimodal systems. Among the multimodal systems, feature level fusion performs best, followed by score level fusion using the product rule.
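FAR, FRR and the EER can be estimated from genuine and impostor distance scores by sweeping the decision threshold. The sketch below is a common textbook procedure, not necessarily the authors' Matlab implementation. It assumes distance scores (a claim is accepted when the distance is at most the threshold) and takes the EER at the threshold where FAR and FRR are closest. The score lists are invented for illustration.

```python
import numpy as np

def far_frr_eer(genuine, impostor):
    """Sweep thresholds over distance scores (lower = more similar).

    A claim is accepted when its distance <= threshold, so
    FAR = fraction of impostor distances accepted and
    FRR = fraction of genuine distances rejected.
    The EER is approximated at the threshold where |FAR - FRR| is smallest.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))  # sorted candidates
    far = np.array([(impostor <= t).mean() for t in thresholds])
    frr = np.array([(genuine > t).mean() for t in thresholds])
    i = int(np.argmin(np.abs(far - frr)))
    return thresholds, far, frr, (far[i] + frr[i]) / 2, thresholds[i]

genuine = [0.1, 0.2, 0.3, 0.4, 0.9]
impostor = [0.5, 0.6, 0.35, 0.8, 1.0]
thresholds, far, frr, eer, t_eer = far_frr_eer(genuine, impostor)
print(f"EER = {eer:.2%} at threshold {t_eer:.2f}")  # EER = 20.00% at threshold 0.40
```

Plotting `far` against `1 - frr` over the sweep gives the corresponding ROC curve.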

| Figure 7. Methodology of feature level and score level Palm-Finger multimodal system |

| Figure 8. ROC curve for Multimodal system using feature level fusion in comparison with unimodal fingerprint and palmprint identifiers |

| Figure 9. ROC curve for Multimodal system using score level fusion (Sum Rule) in comparison with unimodal fingerprint and palmprint identifiers |

| Figure 10. ROC curve for Multimodal system using score level fusion (Product Rule) in comparison with unimodal fingerprint and palmprint identifiers |

7. Discussion and Comparison of Results

- Multimodal biometric systems fuse two or more physical or behavioral traits to lower EER values and hence improve system dependability. Table 2 compares the results of the approaches proposed by Snelick et al.[44], Kumar and Zhang[7] and Wang et al.[45] with our proposed approach. The lower EER of the proposed multimodal system compared with these biometric systems demonstrates the effectiveness of the presented approach.

This paper presents a multimodal personal identification system using palmprints and fingerprints. The unimodal identifiers use directional energies for matching, with the help of a distance-based classifier. The feature level fused multimodal system uses a joint feature vector representing the palm and finger energy features, which is subsequently matched using a distance classifier. In the score level fused multimodal system, the individual palmprint and fingerprint scores are combined by the sum and product rules. The ROC curves and EER values demonstrate a considerable improvement in recognition results for the multimodal systems compared with the individual unimodal identifiers. Among the multimodal systems, the feature level fused system performs best.


ACKNOWLEDGEMENTS

- The authors thank M. Asif Afzal Butt of the College of Signals, National University of Science and Technology, for his valuable suggestions on this research.

References

| [1] | M. A. A. Butt, H. Masood, M. Mumtaz, A. B. Mansoor, and S. A. Khan, “Palmprint identification using contourlet transform,” in Proc. IEEE Second International Conference on Biometrics: Theory, Applications and Systems, Washington, USA, 2008. |

| [2] | M. N. Do and M. Vetterli, “Contourlets,” in Beyond Wavelets, J. Stoeckler and G. V. Welland, Eds. New York: Academic Press, 2003. |

| [3] | L. Hong, A. K. Jain, and S. Pankanti, “Can multibiometrics improve performance?” in Proc. IEEE Workshop on Automatic Identification Advanced Technologies, New Jersey, USA, pp. 59–64, 1999. |

| [4] | A. K. Jain, R. Bolle, and S. Pankanti, Eds., Biometrics: Personal Identification in Networked Society. New York, USA: Springer-Verlag, 2006. |

| [5] | A. K. Jain, A. Ross, and S. Prabhakar, “An introduction to biometric recognition,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 14, no. 1, pp. 4–20, January 2004. |

| [6] | A. Kumar and D. Zhang, “Personal authentication using multiple palmprint representation,” Pattern Recognition, vol. 38, no. 10, pp. 1695–1704, October 2005. |

| [7] | A. Kumar and D. Zhang, “Combining fingerprint, palmprint and hand-shape for user authentication,” in Proc. International Conference on Pattern Recognition, vol. IV, pp. 549–552, 2006. |

| [8] | A. Kumar and D. Zhang, “Personal recognition using hand shape and texture,” IEEE Transactions on Image Processing, vol. 15, no. 8, pp. 2454–2461, August 2006. |

| [9] | S. Prabhakar and A. K. Jain, “Decision-level fusion in fingerprint verification,” Pattern Recognition, vol. 35, pp. 861–874, 2001. |

| [10] | S. Ribaric and I. Fratric, “A biometric identification system based on eigenpalm and eigenfinger features,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 11, pp. 1698–1709, 2005. |

| [11] | A. Ross and R. Govindarajan, “Feature level fusion using hand and face biometrics,” in Proc. SPIE Conference on Biometric Technology for Human Identification II, Orlando, USA, pp. 196–204, 2005. |

| [12] | A. Ross and A. K. Jain, “Multimodal biometrics: An overview,” in Proc. 12th European Signal Processing Conference (EUSIPCO), Vienna, Austria, pp. 1221–1224, September 2004. |

| [13] | A. Ross, K. Nandakumar, and A. K. Jain, Handbook of Multibiometrics. New York, USA: Springer-Verlag, 2006. |

| [14] | Saeed, A. B. Mansoor, and M. A. A. Butt, “A novel contourlet based online fingerprint identification,” Lecture Notes in Computer Science, vol. 5707, pp. 308–317, Springer-Verlag, Heidelberg, Germany, 2001. |

| [15] | A. Kong, D. Zhang, and M. Kamel, “A study of brute-force break-ins of a palmprint authentication system,” IEEE Transactions on Systems, Man and Cybernetics, Part B, vol. 36, no. 5, pp. 1201–1205, 2006. |

| [16] | C. Methani, “Camera based palmprint recognition,” Master’s thesis, International Institute of Information Technology, Hyderabad, India, 2010. Available: http://cvit.iiit.ac.in/thesis/chhayaMS2010/Downloads/Chhaya_Thesis2010.pdf |

| [17] | Z. Guo, D. Zhang, and L. Zhang, “Is white light the best illumination for palmprint recognition?” in Proc. 13th International Conference on Computer Analysis of Images and Patterns, vol. 5702, pp. 50–57, 2009. |

| [18] | J. Siskin, “Hard decisions and soft light,” Photo Techniques Magazine, 2007. Available: http://www.siskinphoto.com/magazine4c.html |

| [19] | “Biometrics technology application manual: Applying biometrics,” National Biometric Security Project, vol. 2, 2008. Available: http://www.nationalbiometric.org/btamvol2.pdf |

| [20] | J. Ashbourn, “User psychology and biometric systems performance,” 1999. Available: http://www.ntlworld.com/avanti/psychology.htm |

| [21] | M. Hsieh, “Biometrics quality: Technologies, benefits and challenges,” NIST Quality Workshop, Gaithersburg, MD, 2006. Available: http://biometrics.nist.gov/cs_links/quality/workshopI/proc/ming_cogent_presentation.pdf |

| [22] | N. D. Kalka, J. Zuo, N. A. Schmid, and B. Cukic, “Image quality assessment for iris biometrics,” in Proc. 24th Annual Meeting of the Gesellschaft für Klassifikation, Springer, pp. 445–452, 2002. |

| [23] | H. Fronthaler, K. Kollreider, and J. Bigun, “Automatic image quality assessment with application in biometrics,” in Proc. Conference on Computer Vision and Pattern Recognition Workshop, 2006, DOI: 10.1109/CVPRW.2006.36. |

| [24] | E. K. Y. Wong, G. Sainarayanan, and A. Chekima, “Palmprint based biometric system: A comparative study on discrete cosine transform energy, wavelet transform energy and SobelCode methods,” Biomedical Soft Computing and Human Sciences, vol. 14, no. 1, pp. 11–19, 2009. |

| [25] | A. Kumar, D. C. M. Wong, H. C. Shen, and A. K. Jain, “Personal verification using palmprint and hand geometry biometric,” in Proc. 4th International Conference on Audio- and Video-Based Biometric Person Authentication, Springer-Verlag, Berlin, Heidelberg, 2003. |

| [26] | S. Ribaric and I. Fratric, “A biometric identification system based on eigenpalm and eigenfinger features,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 11, pp. 1698–1709, 2005. |

| [27] | M. Mumtaz, A. B. Mansoor, and H. Masood, “Directional energy based palmprint identification using non subsampled contourlet transform,” in Proc. IEEE International Conference on Image Processing (ICIP), pp. 1965–1968, 2009, DOI: 10.1109/ICIP.2009.5413471. |

| [28] | A. B. Mansoor, H. Masood, M. Mumtaz, and S. A. Khan, “A feature level multimodal approach for palmprint identification using directional subband energies,” Journal of Network and Computer Applications, vol. 34, no. 1, 2011. |

| [29] | S. U. Din, A. B. Mansoor, H. Masood, and M. Mumtaz, “Personal identification using feature and score level fusion of palm and fingerprints,” Journal of Signal, Image and Video Processing, 2011, DOI: 10.1007/s11760-011-0251-7. |

| [30] | D. Zhang, G. Lu, A. Kong, and M. Wong, “A novel personal authentication system using palmprint technology,” Pattern Recognition and Machine Intelligence, vol. 3776, Springer-Verlag, Heidelberg, 2005. |

| [31] | L. Coventry, “Fingerprint authentication: The user experience,” DIMACS Workshop on Usable Privacy and Security Software, 2004. |

| [32] | D. Hochman, “Biometrics: Security and privacy issues come to a head,” Conference on Contingency Planning and Management, vol. 7, no. 2, p. 28, 2002. |

| [33] | M. Lewis, “Biometrics demystified,” White Paper, Information Risk Management, 2007. Available: http://www.irmplc.com/downloads/whitepapers/Biometrics%20Demystified.pdf |

| [34] | “Biometrics for identification and authentication: Advice on product selection,” 2001. Available: http://scgwww.epfl.ch/courses/notes/11-Advice-and-Product-Selection.pdf |

| [35] | A. S. Patrick, “Usability and acceptability of biometric security systems,” Lecture Notes in Computer Science, vol. 3110, 2004. |

| [36] | S. Cimato, M. Gamassi, V. Piuri, R. Sassi, and F. Scotti, “Privacy issues in biometric identification,” Information Security, pp. 40–42, 2006. |

| [37] | P. Backman and C. Kennedy, “Biometric identification and privacy concerns: A Canadian perspective,” Canadian Privacy Law Review, vol. 7, no. 4, 2010. |

| [38] | “Biometrics in Québec: Application principles, ‘Making an informed choice’,” Commission d'accès à l'information du Québec, 2002. Available: www.cai.gouv.qc.ca/home_00_portail/01_pdf/biometrics.pdf |

| [39] | M. N. Do, “Multi-resolution image representation,” PhD thesis, EPFL, Lausanne, Switzerland, December 2001. |

| [40] | M. N. Do and M. Vetterli, “Contourlets,” in Beyond Wavelets, J. Stoeckler and G. V. Welland, Eds. New York: Academic Press, 2003. |

| [41] | E. J. Candès and D. L. Donoho, “Curvelets: A surprisingly effective non-adaptive representation for objects with edges,” in Curve and Surface Fitting, Saint-Malo 1999, A. Cohen, C. Rabut, and L. L. Schumaker, Eds. Nashville, TN: Vanderbilt University Press, 1999. |

| [42] | E. J. Candès and D. L. Donoho, “Curvelets, multiresolution representation, and scaling laws,” in Wavelet Applications in Signal and Image Processing VIII, A. Aldroubi, A. F. Laine, and M. A. Unser, Eds., Proc. SPIE, vol. 4119, 2000. |

| [43] | C.-H. Park, J.-J. Lee, M. Smith, S. Park, and K.-H. Park, “Directional filter bank-based fingerprint feature extraction and matching,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 14, no. 1, pp. 74–85, 2004. |

| [44] | R. Snelick, U. Uludag, A. Mink, M. Indovina, and A. K. Jain, “Large scale evaluation of multimodal biometric authentication using state-of-the-art systems,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 3, pp. 450–455, 2005. |

| [45] | J.-G. Wang, W.-Y. Yau, A. Suwandy, and E. Sung, “Fusion of palmprint and palm vein images for person recognition based on ‘Laplacian palm’ feature,” in Proc. IEEE Computer Vision and Pattern Recognition Workshop on Biometrics, pp. 1–8, 2007. |
