American Journal of Mathematics and Statistics

p-ISSN: 2162-948X e-ISSN: 2162-8475

2015; 5(4): 184-189

doi:10.5923/j.ajms.20150504.03

Estimating Historical Volatility via Dynamical System

Onyeka-Ubaka J. N.1, Okafor R. O.1, Adewara J. A.2

1Department of Mathematics, University of Lagos, Akoka, Lagos, Nigeria

2Distance Learning Institute, University of Lagos, Akoka, Lagos, Nigeria

Correspondence to: Onyeka-Ubaka J. N., Department of Mathematics, University of Lagos, Akoka, Lagos, Nigeria.

Copyright © 2015 Scientific & Academic Publishing. All Rights Reserved.

Abstract: This paper emphasizes estimating volatility using historical time series data of volatility indices at the Nigeria Stock Exchange. Realized volatility measures, which are widely used by financial and risk management practitioners, are employed to determine levels of volatility risk. The well-known features of financial data imply dynamic behaviours which can be well captured using regime structures. The analysis shows that the high-low-open-close volatility estimator performed better than the other estimators. The results also show that the pricing dynamic of the pricing model is heavily dependent on the dynamic of the underlying stochastic process, whether or not the parameters are time varying.

Keywords: Volatility, Historical volatility, Dynamical system, Wiener process, Brownian motion

Cite this paper: Onyeka-Ubaka J. N., Okafor R. O., Adewara J. A., Estimating Historical Volatility via Dynamical System, American Journal of Mathematics and Statistics, Vol. 5 No. 4, 2015, pp. 184-189. doi: 10.5923/j.ajms.20150504.03.

1. Introduction

- Volatility is one of the prime and hidden risk factors in capital markets. Financial time series show random behaviour that is highly unpredictable. Efforts are made to apply the theory of stochastic processes and statistical techniques of model building to enhance understanding of the apparently irregular patterns in these financial time series data. Financial time series analysis is concerned with the theory and practice of asset valuation over time. It is a highly empirical discipline but, like other scientific fields, theory forms the foundation for making inference. The price of a financial asset evolves over time and forms a stochastic process, which is a statistical term used to describe the evolution of a random variable over time. The observed prices are a realization of the underlying stochastic process. The theory of stochastic processes is the basis on which the observed prices are analyzed and statistical inference is made. The price changes can be continuous or discrete. A continuous price can assume any positive real number, whereas a discrete price can only assume a countable number of possible values.

In recent times, nonlinear modeling has received increasing attention in financial time series analysis. These series are affected by a considerable number of sources of nonlinearity, such as asymmetries in the returns, business cycles, asymmetric behaviour of the variability (usually called the leverage effect), volatility clustering and so on. These well-known features of financial data imply dynamic behaviours which can be well captured using regime structures. The collection of all random functions of a continuous parameter is called a random process. A random process may be uncorrelated, orthogonal and independent of itself. The orthogonality concept is useful only when the random processes under consideration are of zero mean; in this case, it becomes equivalent to the uncorrelated condition.
A random process is also stationary when its statistics do not change with the continuous time parameter; this notion is particularly useful for stationary or purely white noise processes. Random processes in continuous time can also be defined as processes with independent increments (the standard Wiener process), and processes with independent increments are Markov processes.

The paper is motivated by the recent globalization and increasingly complex dependence of financial markets. Financial market assets depend on a huge number of factors underlying an option, such as macro-economic ones like interest rate measurements, foreign currencies, inflation, investments, balanced and unbalanced budgets, and micro-economic and business-specific factors like flexibility of labour markets, sales numbers, etc. Option traders are constantly faced with a dynamically changing volatility risk. Higher risk associated with financial assets requires risk management and the use of hedging instruments by internationally active organizations, Aas [1]. The financial market has become an integral part of the global economy to the extent that any fluctuation in the market influences private and corporate life. In this paper, a nonlinear time series is viewed as a realization from the stochastic process of a nonlinear dynamical system. Dynamical systems are mathematical objects used to model physical phenomena whose state (or instantaneous description) changes over time. These models are used in financial and economic forecasting, environmental modeling, medical diagnosis, etc. Applications of dynamical systems are broadly categorized into three areas. The first is predictive, in which the objective is to predict future states of the system from observations of the past and present states of the system.
The second is diagnostic, in which the objective is to infer what possible past states of the system might have led to the present state of the system. The third is application, in which the objective is neither to predict the future nor to explain the past but rather to provide a theory for the physical phenomena. These three categories correspond to the need to predict, explain and understand physical phenomena. The assumptions in finance for dynamical models are formulated by systems of differential equations. Random dynamical systems are characterized by a state space S, a set of maps T from S into itself that can be thought of as the set of all possible equations of motion, and a probability distribution Q on the set T that represents the random choice of map.
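As an illustration of the (S, T, Q) description above, the following minimal sketch simulates a random dynamical system on S = [0, 1]. The two affine maps, the equal probabilities and the function name `simulate` are hypothetical choices made only for this example; they are not taken from the paper.

```python
import random

# Hypothetical set of maps T on the state space S = [0, 1]
MAPS = [
    lambda x: 0.5 * x,        # contraction toward 0
    lambda x: 0.5 * x + 0.5,  # contraction toward 1
]

# Hypothetical probability distribution Q on the set T
Q = [0.5, 0.5]


def simulate(x0, steps, seed=0):
    """Iterate x_{t+1} = f_t(x_t), where each map f_t is drawn from T according to Q."""
    rng = random.Random(seed)
    path = [x0]
    for _ in range(steps):
        f = rng.choices(MAPS, weights=Q, k=1)[0]  # random choice of map
        path.append(f(path[-1]))
    return path
```

Calling `simulate(0.3, 10)` returns the starting state followed by ten randomly evolved states. Both maps send [0, 1] into itself, so every state stays inside S.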

2. Literature Review

- Many physical processes are continuous in space and time. Any system whose future depends only on its current state is Markovian, and such a system with continuous paths follows a diffusion process. The financial system is continuous in space and time just like many physical processes in real life. A stochastic process can be described as a statistical phenomenon that evolves in time according to probabilistic laws, Chatfield [4]. Stochastic processes in continuous time are defined as solutions of stochastic differential equations. A stochastic differential equation is a differential equation driven by white noise in which one or more of the terms are stochastic and the solution itself is also a stochastic process. A stochastic process is called Gaussian if all its finite dimensional distributions are multivariate Gaussian. The distribution of a Gaussian stochastic process is determined solely by the collection of expectations and covariance matrices of the finite dimensional distributions, Mikosch [9]. Some established models like the Black-Scholes option pricing model have not withstood the test of time and empirical data, where stylized features of financial market returns such as volatility clustering, heavy tails and second order serial correlation exist, Dacorogna et al [5]; Bradley and Taqqu [2]. The random level shift model transforms data into a linear state space form with a mixture of normal innovations, Li and Perron [8]. The historical (realized) volatility and the implied volatility that are widely used by financial and risk management practitioners are used to determine levels of volatility risk, Forsberg and Bollerslev [6]. Implied volatility is forward looking: it reflects the volatility of the underlying asset given the market price of its option. The historical volatility is derived from recent historical data of annualized squared log returns of the option prices observed in the past on the options market, Onyeka-Ubaka et al [10].

Actual market observations convey a skewness of volatility, i.e. identical options with different strikes possess different implied volatilities. Volatility, standard deviation and risk are sometimes used interchangeably by financial practitioners, but in fact there are some conceptual differences, Poon and Granger [12]. The main question is which of the two market measurements of volatility is better at forecasting true market volatility.

3. Methodology

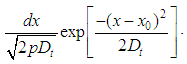

- The model is better viewed as a probability distribution of the stochastic systems in order to capture the dynamics of the system. The methodologies of estimating historical volatility include(i) Close-Close Volatility Estimator(ii) High-Low Volatility Estimator(iii) High-Low-Open-Close volatility Estimator Suppose a point particle undergoes a one-dimensional, continuous random walk with diffusion constant D. Then, the probability of finding the particle in the interval

at time y, if it started at point

at time y, if it started at point  at time t = 0, is

at time t = 0, is  By comparison with the normal distribution, we see that D is the variance of the displacement

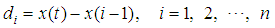

By comparison with the normal distribution, we see that D is the variance of the displacement  after a unit time interval Parkinson [11]. This suggests the traditional way to estimate D, then, defining

after a unit time interval Parkinson [11]. This suggests the traditional way to estimate D, then, defining  = displacement during the ith interval,

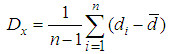

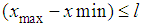

= displacement during the ith interval,  , we have the diffusion constant as

, we have the diffusion constant as as an estimate for D.

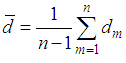

as an estimate for D. = mean displacementUsing this approach, the transformed (logarithmic) price changes, over any time interval in a normally distributed manner with mean zero and variance proportional to the length of the interval and exhibits continuous sample paths. These sample path may be observable anywhere.Given a series of stock prices

= mean displacementUsing this approach, the transformed (logarithmic) price changes, over any time interval in a normally distributed manner with mean zero and variance proportional to the length of the interval and exhibits continuous sample paths. These sample path may be observable anywhere.Given a series of stock prices  which are quoted at equal intervals of a unit of time; equaling

which are quoted at equal intervals of a unit of time; equaling

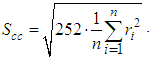

is the mean rate of return, annual number of trading days = 252 days and r is the rate of return over ith time interval, then the annualized Close-Close estimator,

is the mean rate of return, annual number of trading days = 252 days and r is the rate of return over ith time interval, then the annualized Close-Close estimator,  is the classical definition of standard deviation which also happens to be the square root of the diffusion constant definition D. The Close-Close volatility estimator is given as

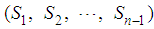

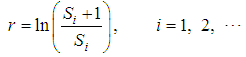
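The Close-Close estimator described above can be sketched in Python. This is a minimal illustration that assumes the standard form: the sample standard deviation of log returns, scaled by the square root of 252 trading days. The function and parameter names are illustrative, not the authors'.

```python
import math


def close_close_volatility(closes, periods_per_year=252):
    """Annualized close-to-close volatility from a list of closing prices."""
    # r_i = ln(P_i / P_{i-1}): log return over the ith interval
    returns = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    n = len(returns)
    if n < 2:
        raise ValueError("need at least three prices (two returns)")
    mean = sum(returns) / n
    # classical sample variance with n - 1 in the denominator
    variance = sum((r - mean) ** 2 for r in returns) / (n - 1)
    return math.sqrt(periods_per_year * variance)
```

A price series that grows at a constant rate has identical log returns, so the function returns a value that is zero up to floating-point error. Only closing prices enter the calculation.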

is the classical definition of standard deviation which also happens to be the square root of the diffusion constant definition D. The Close-Close volatility estimator is given as This method has some shortcomings such as inadequate usage of readily available information like opening, closing, high and low daily prices in its estimation. High-Low Volatility Estimator: This is the extreme value method to estimates of variance of the rate of returns. It incorporates the intraday high and low prices of the financial asset into its estimation of volatility. Here the diffusion constant of the underlying random walk of the stock price movements is the true variance of the rate of return of a common stock over a unit of time. The paper established that the use of extreme values in estimating the diffusion constant provides a significantly better estimate. Let

This method has some shortcomings such as inadequate usage of readily available information like opening, closing, high and low daily prices in its estimation. High-Low Volatility Estimator: This is the extreme value method to estimates of variance of the rate of returns. It incorporates the intraday high and low prices of the financial asset into its estimation of volatility. Here the diffusion constant of the underlying random walk of the stock price movements is the true variance of the rate of return of a common stock over a unit of time. The paper established that the use of extreme values in estimating the diffusion constant provides a significantly better estimate. Let  during time interval t and the observed set (l1, l2, . . . ln) originates from a random walk, the factor

during time interval t and the observed set (l1, l2, . . . ln) originates from a random walk, the factor  . The extreme value estimate for the diffusion constant D is

. The extreme value estimate for the diffusion constant D is Applying to the stock market, let

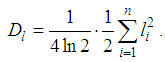

Applying to the stock market, let  and the annualized High-Low Volatility Estimator as

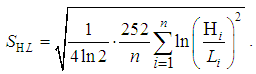

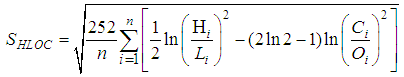
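The High-Low (extreme value) estimator described above can be sketched as follows. The sketch assumes the standard Parkinson form with the factor 1/(4 ln 2) and 252 trading days; the function and parameter names are illustrative.

```python
import math


def high_low_volatility(highs, lows, periods_per_year=252):
    """Annualized Parkinson (high-low) volatility from per-period highs and lows."""
    if len(highs) != len(lows) or not highs:
        raise ValueError("highs and lows must be non-empty and of equal length")
    # l_i = ln(H_i / L_i): log range of the ith period
    squared_ranges = [math.log(h / l) ** 2 for h, l in zip(highs, lows)]
    mean_sq = sum(squared_ranges) / len(squared_ranges)
    # extreme value estimate of the per-period variance (diffusion constant)
    variance = mean_sq / (4.0 * math.log(2.0))
    return math.sqrt(periods_per_year * variance)
```

Unlike the Close-Close sketch, this estimator uses no closing prices at all; it relies only on the range traded within each period.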

and the annualized High-Low Volatility Estimator as High-Low-Open-Close Volatility Estimator: This incorporates not only the high and low historical prices but also the open and closing historical indicators of the stock price movements in estimating variance and hence volatility. The assumptions here were that stock prices follow a geometric Brownian motion. The annualized high-low-open-close volatility estimator (SHLOC) from Garman and Klass [7] is given as

High-Low-Open-Close Volatility Estimator: This incorporates not only the high and low historical prices but also the open and closing historical indicators of the stock price movements in estimating variance and hence volatility. The assumptions here were that stock prices follow a geometric Brownian motion. The annualized high-low-open-close volatility estimator (SHLOC) from Garman and Klass [7] is given as whereO = opening price of the periodC = closing price of the period.The random variable volatility which is estimated has a tighter sampling distribution.The model diagnosis was performed. Given a collection of models for the data, Akaike Information Criterion (AIC) estimates the quality of each model, relative to the other models. Hence AIC provides a means for model selection. Let L be the maximized value of the likelihood function for the model; let k be the number of parameters in the model (i.e. k is the number of degrees of freedom). Then the AIC value is as followsAIC = 2k – 2ln (L)Given a set of candidate models for the data, the preferred model is the one with the minimum AIC value, hence AIC rewards goodness of fit (as assessed by the likelihood function). It chooses the model with the best fit as measured by the likelihood function, subject to a penalty term to prevent over-fitting that increases with the number of parameters in the model.

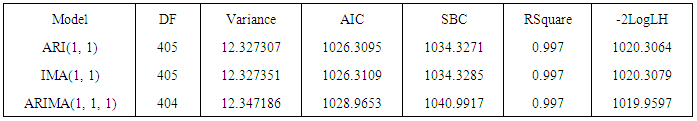
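The high-low-open-close estimator described above can be sketched in the same way. The sketch assumes the commonly quoted Garman-Klass form, without an overnight (previous close to open) term, annualized with 252 trading days; the function and parameter names are illustrative.

```python
import math


def garman_klass_volatility(opens, highs, lows, closes, periods_per_year=252):
    """Annualized Garman-Klass volatility from per-period OHLC prices."""
    n = len(opens)
    if n == 0 or not (len(highs) == len(lows) == len(closes) == n):
        raise ValueError("all price lists must be non-empty and of equal length")
    total = 0.0
    for o, h, l, c in zip(opens, highs, lows, closes):
        log_range = math.log(h / l)   # ln(H_i / L_i)
        log_move = math.log(c / o)    # ln(C_i / O_i)
        total += 0.5 * log_range ** 2 - (2.0 * math.log(2.0) - 1.0) * log_move ** 2
    return math.sqrt(periods_per_year * total / n)
```

For valid price bars (low at or below open and close, high at or above them) each term in the sum is non-negative, so the square root is always defined.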

The model diagnosis was performed. Given a collection of models for the data, the Akaike Information Criterion (AIC) estimates the quality of each model relative to the other models. Hence AIC provides a means for model selection. Let L be the maximized value of the likelihood function for the model, and let k be the number of parameters in the model (i.e. k is the number of degrees of freedom). Then the AIC value is

AIC = 2k - 2 ln(L)

Given a set of candidate models for the data, the preferred model is the one with the minimum AIC value. AIC rewards goodness of fit (as assessed by the likelihood function) and includes a penalty term that increases with the number of parameters in the model, which discourages over-fitting.

4. Results and Discussion

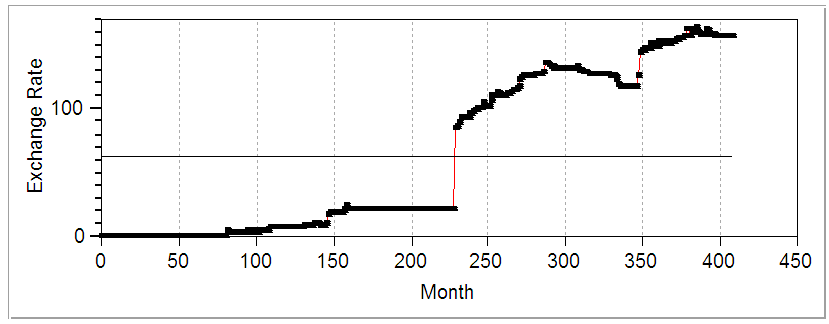

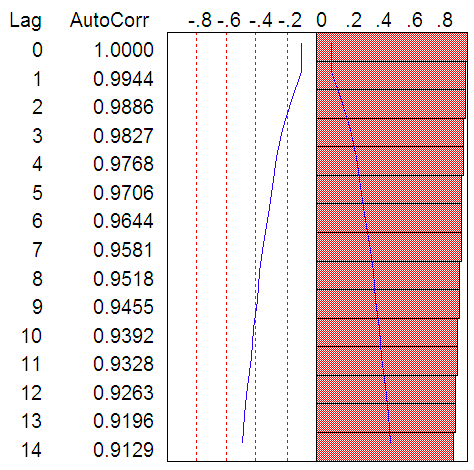

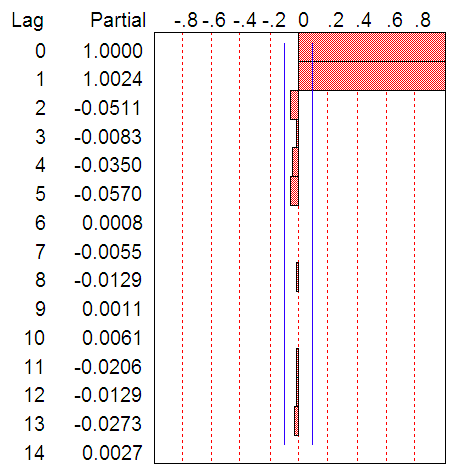

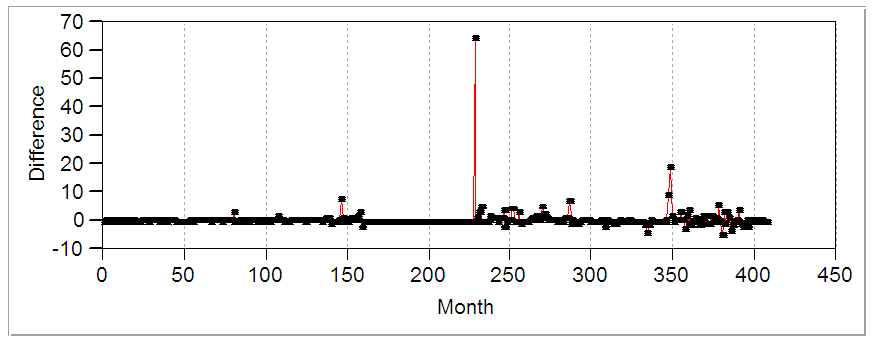

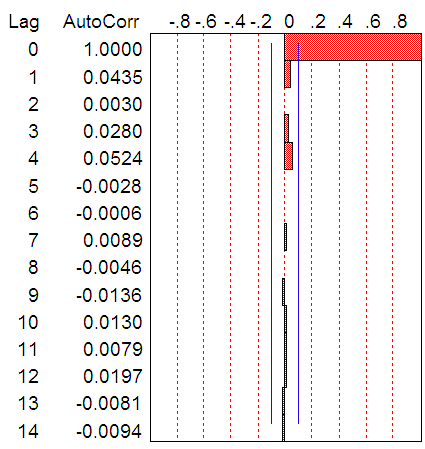

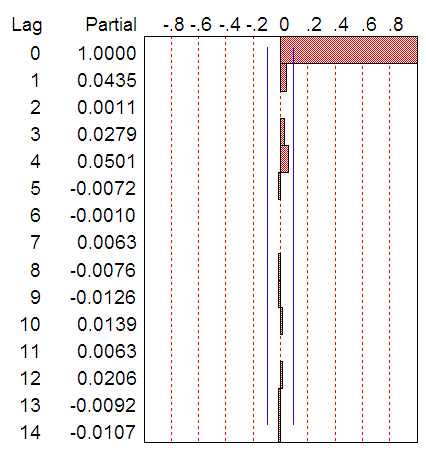

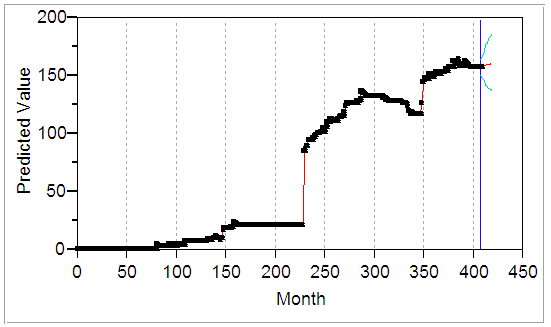

- Figure 1 shows plots of the Nigerian Naira to US Dollar exchange rates from 1980 to 2013. The plotted data display a non-stationary pattern with increasing and decreasing behaviour. The autocorrelation function (ACF) and partial autocorrelation function (PACF) decrease slowly, indicating non-stationarity of the process. The study adapts the three iterative steps of Box-Jenkins (1976) to select a suitable stochastic model. These steps are identification, estimation and diagnostic checking. The objective of identification is to select a subclass of the family of autoregressive moving average (ARMA) models appropriate to represent the data. A Box-Cox transformation was applied to the data to achieve stationarity in variance, while the first difference was used to achieve stationarity in mean. The transformed data are plotted in Figure 4. The transformed exchange rate data are both stable and invertible.
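The preprocessing and identification steps above can be sketched as follows: a Box-Cox transform, a first difference, and a comparison of two candidate models by AIC = 2k - 2 ln(L). The candidate models (a constant-mean model and an AR(1) fitted by least squares), the Gaussian likelihood and all function names are assumptions made for illustration; the paper does not state which models or software were used.

```python
import math


def box_cox(values, lam):
    """Box-Cox transform of positive data; lam = 0 is the log transform."""
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1.0) / lam for v in values]


def first_difference(values):
    """Apply (1 - B): y_t - y_{t-1}."""
    return [b - a for a, b in zip(values, values[1:])]


def gaussian_aic(residuals, k):
    """AIC = 2k - 2 ln(L), with the Gaussian likelihood maximized over the variance."""
    n = len(residuals)
    sigma2 = sum(e * e for e in residuals) / n
    log_lik = -0.5 * n * (math.log(2.0 * math.pi * sigma2) + 1.0)
    return 2 * k - 2 * log_lik


def aic_mean_only(y):
    """Constant mean plus white noise; k = 2 (mean, variance)."""
    target = y[1:]  # same sample as the AR(1) fit, so the two AICs are comparable
    mean = sum(target) / len(target)
    return gaussian_aic([v - mean for v in target], k=2)


def aic_ar1(y):
    """AR(1) with intercept, fitted by conditional least squares; k = 3."""
    x, target = y[:-1], y[1:]
    n = len(target)
    mx, my = sum(x) / n, sum(target) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, target))
    phi = sxy / sxx
    c = my - phi * mx
    return gaussian_aic([b - c - phi * a for a, b in zip(x, target)], k=3)
```

In use, one would compute `first_difference(box_cox(rates, lam))` on the exchange rate series, with `rates` and `lam` supplied by the analyst, and prefer the candidate with the smaller AIC.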

| Figure 1. Plots of Exchange Rate Data |

| Figure 2. ACF of Raw Data |

| Figure 3. PACF of Raw Data |

| Figure 4. Transformed data (Difference: (1-B)^1) |

| Figure 5. ACF of Transformed Data |

| Figure 6. PACF of Transformed Data |

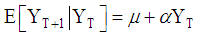

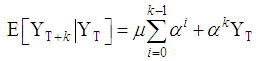

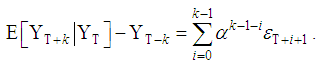

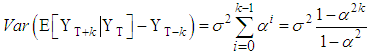

The 1-step forecast can be iterated to get the k-step forecast. As $k \to \infty$, the forecast approaches the stationary value. The forecast error is a linear combination of the unobservable future shocks entering the system after time T. As $k \to \infty$, the variance of the k-step forecast error increases to the stationary level.

| Figure 7. Forecast |
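The forecast behaviour described above can be illustrated with a stationary AR(1) model. The choice of AR(1) is an assumption made for this sketch, since the fitted model is not stated in the text; the parameter names `mu`, `phi` and `sigma2` are likewise illustrative.

```python
def ar1_forecast(y_T, mu, phi, sigma2, k):
    """k-step forecast and forecast error variance for a stationary AR(1).

    Assumed model: y_t - mu = phi * (y_{t-1} - mu) + e_t, with Var(e_t) = sigma2.
    """
    if not abs(phi) < 1:
        raise ValueError("stationarity requires |phi| < 1")
    # Iterating the 1-step forecast k times pulls the forecast toward mu
    forecast = mu + phi ** k * (y_T - mu)
    # Error = e_{T+k} + phi * e_{T+k-1} + ... + phi^(k-1) * e_{T+1}
    error_variance = sigma2 * (1 - phi ** (2 * k)) / (1 - phi ** 2)
    return forecast, error_variance
```

With `phi = 0.5`, `mu = 10`, `sigma2 = 2` and a last observation of 14, the forecasts move from 12 at one step toward the stationary value 10, while the error variance rises from 2 toward the stationary level `sigma2 / (1 - phi**2)`.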

5. Conclusions

- The results show that the pricing dynamic of the pricing model is heavily dependent on the dynamic of the underlying stochastic process, whether or not the parameters are time varying. Some stylized properties of high frequency exchange market returns, such as non-normal distribution, first order negative autocorrelation, increasing fat tails and seasonality, are observed from the analysis. The results establish that volatility forecasts based on high frequency data outperform the option implied volatility. The paper also establishes that the most accurate historical forecasts come from the use of high frequency returns and not from a long memory specification. The forecasted values seem to suggest that the Nigerian economy would be in a dynamical state in which every aspect of the economy would be adversely affected by the steady increase in demand for Dollars. The study therefore calls on stakeholders, and the Nigerian government in particular, to put in place adequate monetary policy to keep the dwindling purchasing power of the Naira from collapsing in the international monetary market.
