American Journal of Medicine and Medical Sciences

p-ISSN: 2165-901X e-ISSN: 2165-9036

2015; 5(4): 164-167

doi:10.5923/j.ajmms.20150504.04

Comparison of Two Standard Setting Methods in a Medical Students MCQs Exam in Internal Medicine

Omer Abdelgadir Elfaki1, Karimeldin M. A. Salih2

1A/Prof of Internal Medicine and Medical Educationist, Department of Internal Medicine and Department of Medical Education, College of Medicine, King Khalid University

2A/Prof of Pediatrics and Medical Educationist, Department of Pediatrics and Department of Medical Education, College of Medicine, King Khalid University

Correspondence to: Omer Abdelgadir Elfaki, A/Prof of Internal Medicine and Medical Educationist, Department of Internal Medicine and Department of Medical Education, College of Medicine, King Khalid University.


Copyright © 2015 Scientific & Academic Publishing. All Rights Reserved.

Background: There is no single recommended approach to standard setting, and many methods exist. These include norm-referenced and criterion-referenced methods. The Angoff method is the most widely used and researched criterion-referenced method of standard setting. Because the outcome of an assessment is determined by the standard-setting method used, and because different methods result in different standards, the choice of method and the process by which it is applied are of utmost importance. The aim of this study was to compare two standard-setting methods: the norm-reference method and the Angoff method. Methods: Two different methods of standard setting were applied to the raw scores of 106 final-year medical students on a multiple choice question (MCQ) examination in internal medicine. One was the modified Angoff method and the other was the norm-reference method (mean minus 1 SD). The pass rates derived from the two methods were compared. Results: The pass rate with the norm-reference method was 88% (93/106) and that with the Angoff method was 39% (41/106). The percentage agreement between the Angoff method and the norm-reference method was 36% (95% CI 36% to 81%). Conclusions: The two standard-setting methods produced significantly different outcomes, as demonstrated by the different pass rates.

Keywords: Standard, Setting, Angoff, Norm-reference, Assessment

Cite this paper: Omer Abdelgadir Elfaki, Karimeldin M. A. Salih, Comparison of Two Standard Setting Methods in a Medical Students MCQs Exam in Internal Medicine, American Journal of Medicine and Medical Sciences, Vol. 5 No. 4, 2015, pp. 164-167. doi: 10.5923/j.ajmms.20150504.04.

1. Background

A standard is a conceptual boundary on the true-score scale between acceptable and non-acceptable performance [1]. Generally, there are two types of standards: absolute (criterion-referenced) and relative (norm-referenced) [2-4]. With absolute standards, performance is measured against a predetermined criterion and is therefore independent of the performance of the group of examinees. With relative standards, the performance of the examinee is compared with that of others who took the test, and hence the pass/fail decision depends on the performance of that group.

The outcome of any assessment is determined by the standard-setting method used. Standard setting is defined as "the process of deciding what is good enough" [5]. There is no single method of choice for standard setting, and many methods exist [6]. These include norm-referenced and criterion-referenced methods. Each standard-setting method has its advantages and disadvantages, and there is no gold standard. A criterion-referenced standard is generally preferred to a norm-referenced model (fixed pass rate) or a holistic model (arbitrary pass mark at, say, 60%) [7]. The Angoff method is the most widely used and researched criterion-referenced method of standard setting and is well supported by evidence [2, 8, 9]. However, it has some disadvantages, which include the considerable time and number of personnel it requires [2, 10] and the difficulty inherent in applying the concept of the borderline candidate [2, 11-13]. In this method, a panel of judges examines each MCQ item and estimates the probability that the "minimally competent" or "borderline" candidate would answer the item correctly [8]. The estimates are then discussed in the group, and consensus is reached if possible. This discussion stage is omitted in the modified approach. Each judge's estimates for all items are added up and averaged, and the test standard is the average of these means across all the judges.

The norm-reference methods are easy to use and understand, but some examinees will always fail irrespective of their performance, the pass score is not known in advance, and it can be deliberately influenced by the students [9].

It has been found that different methods of setting standards result in different standards, and hence it is argued that the validity of a test is determined as much by the method used to set the standard as by the test content itself. Downing et al [14] argued that all standards are ultimately policy decisions and that "there is no gold standard for a passing score". What is important is the process of setting the standard. The four important criteria that underpin the process of standard setting are that it is systematic, reproducible, absolute and unbiased.

The objective of this study was to compare two standard-setting methods, the norm-reference method and the Angoff method, for an MCQ examination.
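As an illustration of the modified Angoff calculation described in this section, the following is a minimal sketch only. The ratings matrix is hypothetical (3 judges, 4 items) and is not data from this study, and the function name `angoff_standard` is an assumption made for the example.

```python
from statistics import mean

def angoff_standard(ratings):
    """Return the Angoff standard as a proportion of items correct.

    ratings[j][i] is judge j's estimate of the probability that a
    borderline candidate answers item i correctly.
    """
    # Average each judge's item estimates, then average across judges.
    judge_means = [mean(judge) for judge in ratings]
    return mean(judge_means)

# Hypothetical ratings (illustrative only): 3 judges x 4 items.
ratings = [
    [0.50, 0.75, 0.25, 0.50],
    [0.75, 0.75, 0.50, 0.50],
    [0.50, 0.50, 0.25, 0.25],
]

standard_proportion = angoff_standard(ratings)
n_items = len(ratings[0])
standard_raw_score = standard_proportion * n_items  # cut score in items correct

print(standard_proportion, standard_raw_score)
```

With equal numbers of items per judge, averaging the judges' means is equivalent to averaging their summed estimates and dividing by the number of items.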

2. Methods

This study was conducted to answer the research question: what is the agreement between the standards and the pass rates resulting from the application of these two standard-setting methods to the same MCQ exam? The scores of 106 final-year medical students on the MCQ paper of their internal medicine course at the College of Medicine of King Khalid University were collected after ethical approval from the research committee. The MCQ paper comprised 90 one-best-answer items, each with four options. The questions covered topics in general internal medicine and clinical medicine. Two standards were determined using the two standard-setting methods, the norm-reference method and the modified Angoff method. The two methods were compared by their pass rates. In the norm-reference method, the standard was determined by calculating the mean of the scores and the standard deviation (SD). The standard was set as the adjusted mean minus 1.0 SD.

In the modified Angoff method, a panel of seven judges participated in the standard-setting round. All of them were consultant physicians who participated in teaching the medical students and were thus familiar with the curriculum. A consensus was reached on the definition of a minimally acceptable, that is, borderline, candidate. Based on that definition, each rater judged each MCQ item and estimated the probability that a borderline candidate would answer the item correctly. As the modified Angoff method was used, no group discussion was held and no consensus was sought for each item. All ratings were collected, and the mean of each rater's total judgment scores on all 90 items was calculated. This mean score represents the score that a minimally competent candidate would obtain according to the rater's judgment.

Statistical analysis was done using SPSS version 20.0. The pass rates were calculated based on the pass scores set by each of the two methods.

The Angoff and norm-reference methods were compared by their percentage agreement, which was determined by calculating the percentage of students who received the same result (pass or fail) under the two methods.
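The norm-reference cut score and the percentage agreement described above can be sketched as follows. This is an illustrative sketch, not the SPSS procedure used in the study: the scores and the Angoff cut score are hypothetical, the sample SD is assumed, and a score equal to the cut score is assumed to be a pass.

```python
from statistics import mean, stdev

def norm_reference_cut(scores, k=1.0):
    """Cut score set at the mean minus k sample standard deviations."""
    return mean(scores) - k * stdev(scores)

def pass_flags(scores, cut):
    """True for each examinee scoring at or above the cut score (assumed rule)."""
    return [s >= cut for s in scores]

def percent_agreement(flags_a, flags_b):
    """Percentage of examinees with the same pass/fail result under both methods."""
    same = sum(a == b for a, b in zip(flags_a, flags_b))
    return 100 * same / len(flags_a)

# Hypothetical raw scores out of 90 (illustrative only, not study data).
scores = [40, 45, 50, 55, 60, 65, 70, 75, 80, 60]
angoff_cut = 62  # hypothetical Angoff cut score

norm_cut = norm_reference_cut(scores)
norm_pass = pass_flags(scores, norm_cut)
angoff_pass = pass_flags(scores, angoff_cut)

print(round(norm_cut, 2), sum(norm_pass), sum(angoff_pass))
print(percent_agreement(norm_pass, angoff_pass))
```

In this toy data set, examinees who pass under both methods or fail under both methods count towards agreement; those who pass under only one method count as disagreements.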

3. Results

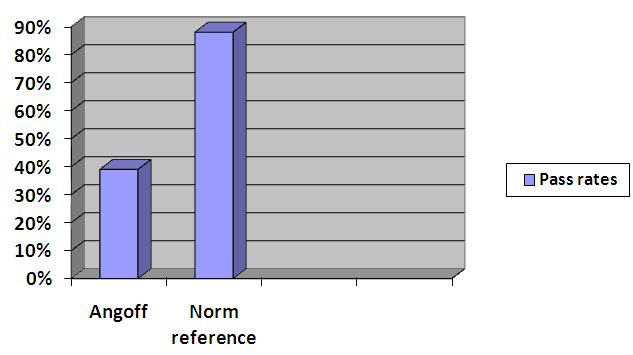

The mean of the scores and the standard deviation are shown in Table 1. The pass rate with the norm-reference method, i.e. mean minus 1.0 SD, was 88% (93/106) and that with the modified Angoff method was 39% (41/106) (Table 2). The choice of mean minus 1.0 SD as the pass/fail cut-off score was entirely arbitrary (although this is common practice among educationalists). The two methods of standard setting, i.e. norm-reference (mean minus 1.0 SD) and modified Angoff, were compared by looking at the percentage agreement between them (Figure 1). The percentage agreement between the Angoff method and the norm-reference method was 36% (95% confidence interval 36% to 81%).

Figure 1. The pass rates of the two methods
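The reported pass rates follow directly from the pass counts given in the text. A short check of the arithmetic, with a helper name (`pass_rate_percent`) chosen only for this example, might look like this:

```python
def pass_rate_percent(passed, total):
    """Pass rate as a whole-number percentage."""
    return round(100 * passed / total)

total_students = 106
norm_rate = pass_rate_percent(93, total_students)    # norm-reference method
angoff_rate = pass_rate_percent(41, total_students)  # modified Angoff method

print(norm_rate, angoff_rate)  # prints: 88 39
```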

4. Discussion

In this study, there was limited agreement between the modified Angoff method and the norm-reference method in determining the outcome of an MCQ paper for a batch of medical students. The pass rate was 88% with the norm-reference method, whereas with the Angoff method it was 39%. Thus, these two standard-setting methods yielded different standards, and the percentage agreement between them was only 36%. This finding is similar to that reported in previous studies [14-19]. Verhoven et al [20] compared the pass/fail rates derived from the modified Angoff method and the norm-reference method (mean minus 1 SD) and found them to be significantly different, with failure rates of 56.5% and 10.1% respectively. Standards were also found to be very different in other studies in which different standard-setting methods were applied to OSCEs in undergraduate medical examinations [21, 22]. Although it is now fairly well established that different standard-setting methods result in different outcomes or passing scores, they can be made credible, defensible and acceptable by ensuring the credibility of the judges and using a systematic approach to collect their judgments [14].

The number of judges who participated in the Angoff method of standard setting in this study was relatively small, though it might be acceptable. Some researchers have recommended that the number of judges should be between 5 and 10 [23], while others have suggested between 5 and 30 [24]. Although there is no clear consensus among researchers on the most appropriate number of judges, larger numbers might yield more valid findings.

5. Conclusions

The pass rates generated by the two methods proved to be significantly different. The percentage agreement between the pass/fail results of the two methods was very low.
