American Journal of Intelligent Systems

p-ISSN: 2165-8978 e-ISSN: 2165-8994

2016; 6(3): 74-77

doi:10.5923/j.ajis.20160603.03

Single Multiplicative Neuron Model Artificial Neuron Network Trained by Bat Algorithm for Time Series Forecasting

Eren Bas1, Ufuk Yolcu2, Erol Egrioglu1, Ozge Cagcag Yolcu3, Ali Zafer Dalar1

1Department of Statistics, Faculty of Arts and Science, Forecast Research Laboratory, Giresun University, Turkey

2Department of Statistics, Faculty of Science, Ankara University, Turkey

3Department of Industrial Engineering, Faculty of Engineering, Forecast Research Laboratory, Giresun University, Turkey

Correspondence to: Erol Egrioglu, Department of Statistics, Faculty of Arts and Science, Forecast Research Laboratory, Giresun University, Turkey.


Copyright © 2016 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

In recent years, artificial neural networks have been commonly used for time series forecasting. One popular type is the feed forward artificial neural network. Although feed forward artificial neural networks give successful forecasting results, they suffer from an architecture selection problem. To eliminate this problem, Yadav et al. (2007) proposed the single multiplicative neuron model artificial neural network (SMNM-ANN). Various learning algorithms for SMNM-ANN exist in the literature, such as particle swarm optimization and the differential evolution algorithm. In this study, unlike these learning algorithms, the bat algorithm is used to train SMNM-ANN for time series forecasting. The SMNM-ANN trained by the bat algorithm is applied to two well-known real-world time series data sets, and its superior forecasting performance is demonstrated by comparison with the results of other techniques suggested in the literature.

Keywords: Artificial neural networks, Single multiplicative neuron model, Bat algorithm, Forecasting

Cite this paper: Eren Bas, Ufuk Yolcu, Erol Egrioglu, Ozge Cagcag Yolcu, Ali Zafer Dalar, Single Multiplicative Neuron Model Artificial Neuron Network Trained by Bat Algorithm for Time Series Forecasting, American Journal of Intelligent Systems, Vol. 6 No. 3, 2016, pp. 74-77. doi: 10.5923/j.ajis.20160603.03.

1. Introduction

Many models have been presented in the time series literature for the forecasting problem. Traditional models may fail to analyze many data sets satisfactorily because they rely on various assumptions. For this reason, especially over the last decades, artificial neural networks (ANNs), which do not impose such strict assumptions, have been widely used as an alternative forecasting approach. The most important characteristic of an ANN is its ability to learn from a source of information (data). The learning process of an ANN is the process of finding the best values of the weights, and this process is called the training of the ANN. The training problem of an ANN can therefore be regarded as an optimization problem. Although the Levenberg-Marquardt and back propagation (BP) learning algorithms have been used to train ANNs, heuristic algorithms such as genetic algorithms, particle swarm optimization, the differential evolution algorithm, simulated annealing and the tabu search algorithm have also been used, especially in recent years.

The first ANN model was proposed by [1]. The multilayer perceptron (MLP) was presented by [2] in order to solve a non-linear problem. An MLP model is composed of an input layer, an output layer and one or more hidden layers. Various ANN models were put forward by [3-6]. Moreover, the generalized mean neuron (GMN) model and the geometric mean neuron (G-MN) model were introduced by [7, 8], respectively. [9] presented an approach for obtaining combined forecasts by using ANN. In the time series forecasting problem, multi-layer feed forward artificial neural network (ML-FF-ANN) models have been the most commonly used. The time series forecasting literature based on ANN was summarized by [10]. In addition, [11-14] addressed the issue of network structure in their studies on forecasting real-world time series.

Even though feed forward artificial neural networks can produce successful forecasting results, they have an architecture selection problem that can have a negative effect on the forecasting performance of the ANN. Therefore, determining the numbers of neurons in the layers of an ML-FF-ANN is a crucial problem. [15] proposed the single multiplicative neuron model artificial neural network (SMNM-ANN), which has a single neuron and does not have an architecture selection problem. While [15] used the back propagation learning algorithm, [16] used cooperative random particle swarm optimization (CRPSO) in the learning process of SMNM-ANN. In addition, the modified particle swarm optimization method and the differential evolution algorithm (DEA) were utilized to train SMNM-ANN by [17, 18].

In this study, the bat algorithm (BA), which has been used frequently in recent studies, is utilized for the first time to train SMNM-ANN for time series forecasting. BA is based on the echolocation features of micro-bats (Yang, 2010). Bats are very good at finding prey: they can distinguish very small prey and obstacles even in the dark. The algorithm mimics the way bats find and detect a food source with the help of echolocation. To support the idea that SMNM-ANN trained by BA (BA-SMNM-ANN) has satisfactory forecasting performance, two well-known real-life time series data sets were analyzed. Section 2 introduces BA-SMNM-ANN and gives the algorithm of the training process. The implementation and the obtained results are presented in Section 3. Finally, the last section provides some discussion.

2. The Proposed Method

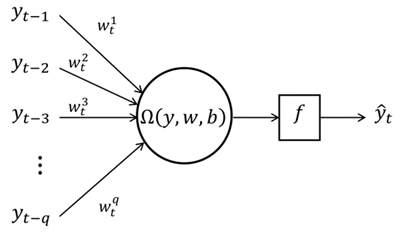

Various studies show that SMNM-ANN is used effectively in time series forecasting. Different learning algorithms have been used in the training process of SMNM-ANN to get better forecasting results. In this study, SMNM-ANN is trained by BA for the first time. Since the proposed BA-SMNM-ANN model is basically an SMNM-ANN, it has the same structure as SMNM-ANN, which is shown in Figure 1.

| Figure 1. The structure of a BA-SMNM-ANN |

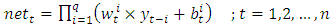

is the product of the weighted inputs and also

is the product of the weighted inputs and also  shows the activation function. Here,

shows the activation function. Here,  and

and  are inputs and outputs of BA-SMNM-ANN, respectively. Moreover, q can be called as model order, n is the number of observations in training set of time series. The SMNM-ANN with q inputs given in Figure 1 has

are inputs and outputs of BA-SMNM-ANN, respectively. Moreover, q can be called as model order, n is the number of observations in training set of time series. The SMNM-ANN with q inputs given in Figure 1 has  weights. Of these, q are the weights corresponding to the inputs

weights. Of these, q are the weights corresponding to the inputs  and q to the sides of the weights

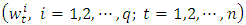

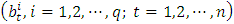

and q to the sides of the weights  . The optimization process can be also called as the training of BA-SMNM-ANN. The weights and biases are shown with

. The optimization process can be also called as the training of BA-SMNM-ANN. The weights and biases are shown with  and

and  . Thus, each member of bat population has

. Thus, each member of bat population has  positions. The structure of a member of bat population is illustrated in Figure 2.

positions. The structure of a member of bat population is illustrated in Figure 2. | Figure 2. The structure of a bat |
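The representation described above can be sketched in Python. This is a minimal illustration and not the authors' code: it assumes that a bat is stored as a flat vector whose first q entries are the weights and whose last q entries are the biases, and that the q inputs of the network are the q observations preceding each target. The ordering, the use of lagged observations, and the helper names `split_bat` and `make_lagged_inputs` are all assumptions made for illustration.

```python
import numpy as np

def split_bat(position, q):
    """Split a bat's 2q positions into q weights and q biases (assumed ordering)."""
    position = np.asarray(position, dtype=float)
    if position.shape != (2 * q,):
        raise ValueError("a bat must have exactly 2q positions")
    return position[:q], position[q:]

def make_lagged_inputs(series, q):
    """Build (inputs, targets); each input row holds the q observations before its target."""
    series = np.asarray(series, dtype=float)
    inputs = np.array([series[t - q:t] for t in range(q, len(series))])
    targets = series[q:]
    return inputs, targets

q = 3                                    # model order (number of inputs)
bat = np.arange(2 * q, dtype=float)      # one bat with 2q = 6 positions
weights, biases = split_bat(bat, q)
inputs, targets = make_lagged_inputs([0.1, 0.2, 0.3, 0.4, 0.5], q)
```

With q = 3 and five observations, this yields two training rows, which shows how the number of usable training samples shrinks as the model order grows.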

Step 1. The positions, rate and loudness of the bats, together with the other initial quantities of the algorithm, are generated. Some of these initial values are constant parameters.

Step 2. Calculate the fitness value of each bat in the population. To obtain the fitness function values, the outputs of the network are first calculated by using the following equations:

| (1) |

| (2) |

| (3) |
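The fitness calculation in Step 2 can be sketched as follows. The bodies of Eqs. (1)-(3) are not reproduced here, so this sketch makes its own assumptions: it uses the usual single multiplicative neuron form (the product of `w_j * x_j + b_j` over the q inputs), a logistic activation function, and RMSE as the fitness value. The function names are hypothetical.

```python
import numpy as np

def smnm_output(inputs, weights, biases):
    """Output of a single multiplicative neuron for each row of `inputs`."""
    net = np.prod(inputs * weights + biases, axis=1)   # product of the weighted inputs
    return 1.0 / (1.0 + np.exp(-net))                  # logistic activation (assumed)

def rmse_fitness(position, inputs, targets):
    """RMSE between the targets and the network outputs for one bat (2q positions)."""
    q = inputs.shape[1]
    weights, biases = position[:q], position[q:]       # assumed ordering of the positions
    outputs = smnm_output(inputs, weights, biases)
    return float(np.sqrt(np.mean((targets - outputs) ** 2)))

inputs = np.array([[0.2, 0.4], [0.4, 0.6]])
targets = np.array([0.6, 0.8])
position = np.array([1.0, 1.0, 0.0, 0.0])              # weights = 1, biases = 0
fitness = rmse_fitness(position, inputs, targets)
```

Because a logistic output lies strictly between 0 and 1, a sketch like this only makes sense if the time series has been scaled into that range beforehand.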

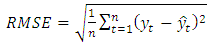

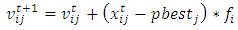

from uniform distribution with

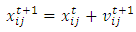

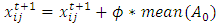

from uniform distribution with  parameters.Step 3.2. Calculate velocities and positions of bats by using following formula.

parameters.Step 3.2. Calculate velocities and positions of bats by using following formula. | (4) |

| (5) |
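For orientation, the velocity and position update of the standard bat algorithm (Yang, 2010) can be sketched as below. The bodies of Eqs. (4) and (5) are not reproduced here, so it is an assumption that the paper uses exactly this form: a frequency drawn uniformly between `f_min` and `f_max`, a velocity updated by the distance to the best bat scaled by that frequency, and a position moved by the new velocity. The name `update_bats` and all parameter values are illustrative.

```python
import numpy as np

def update_bats(positions, velocities, best, f_min, f_max, rng):
    """One frequency, velocity and position update of the standard bat algorithm."""
    n_bats = positions.shape[0]
    beta = rng.uniform(0.0, 1.0, size=(n_bats, 1))     # one uniform draw per bat
    freq = f_min + (f_max - f_min) * beta               # frequency in [f_min, f_max]
    new_velocities = velocities + (positions - best) * freq
    new_positions = positions + new_velocities
    return new_positions, new_velocities

rng = np.random.default_rng(0)
positions = rng.uniform(-1.0, 1.0, size=(5, 4))         # 5 bats, 2q = 4 positions each
velocities = np.zeros_like(positions)
best = positions[0].copy()                               # pretend bat 0 is the current best
new_positions, new_velocities = update_bats(positions, velocities, best, 0.0, 2.0, rng)
```

In this form the best bat itself does not move when its velocity is zero, since its distance to the best position is zero.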

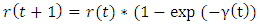

is generated from uniform distribution with the parameters

is generated from uniform distribution with the parameters  . If

. If  , positions of bats are updated by using following formula.

, positions of bats are updated by using following formula. | (6) |

is a random number and it is generated from uniform distribution with the

is a random number and it is generated from uniform distribution with the  parameters

parameters  . Besides,

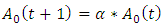

. Besides,  is a vector and its elements are constituted by average loudness of all bats.Step 3.4.

is a vector and its elements are constituted by average loudness of all bats.Step 3.4.  is generated from uniform distribution with the parameters

is generated from uniform distribution with the parameters  . If

. If  and new fitness value of the bat is smaller than the previous fitness value of the bat at the same time.

and new fitness value of the bat is smaller than the previous fitness value of the bat at the same time. | (7) |

| (8) |

.Step 4. Check the stopping criteria.If it is reached to the maximum number of iterations or the fitness value calculated from the bat population with the best fitness value. is less than a predetermined value

.Step 4. Check the stopping criteria.If it is reached to the maximum number of iterations or the fitness value calculated from the bat population with the best fitness value. is less than a predetermined value  the process is end, otherwise move to Step 3.
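Steps 1 to 4 can be put together in a compact sketch. This follows the standard bat algorithm of Yang (2010) and is not the authors' implementation: the local walk around the best bat scaled by the average loudness, the acceptance test against the loudness, the loudness decay `alpha`, the pulse rate growth `gamma`, the initial ranges, and every default value are assumptions chosen for illustration. Any function of a position vector can be passed as `fitness`, for example an RMSE fitness of an SMNM-ANN with 2q positions.

```python
import numpy as np

def bat_algorithm(fitness, dim, n_bats=20, max_iter=200, tol=1e-8,
                  f_min=0.0, f_max=2.0, alpha=0.9, gamma=0.9,
                  loudness0=1.0, rate0=0.5, seed=0):
    """Minimise `fitness` over `dim` positions with a standard bat algorithm."""
    rng = np.random.default_rng(seed)
    # Step 1: initial positions, velocities, loudness and pulse rate (assumed ranges).
    x = rng.uniform(-1.0, 1.0, size=(n_bats, dim))
    v = np.zeros((n_bats, dim))
    loud = np.full(n_bats, loudness0)
    rate = np.full(n_bats, rate0)
    # Step 2: fitness value of every bat.
    fit = np.array([fitness(p) for p in x])
    i_best = int(np.argmin(fit))
    best, best_fit = x[i_best].copy(), float(fit[i_best])
    for t in range(1, max_iter + 1):
        for i in range(n_bats):
            # Steps 3.1-3.2: frequency, velocity and candidate position.
            freq = f_min + (f_max - f_min) * rng.uniform()
            v[i] += (x[i] - best) * freq
            cand = x[i] + v[i]
            # Step 3.3: local random walk around the best bat.
            if rng.uniform() > rate[i]:
                cand = best + rng.uniform(-1.0, 1.0, size=dim) * loud.mean()
            cand_fit = float(fitness(cand))
            # Step 3.4: accept an improving candidate, then update loudness and rate.
            if rng.uniform() < loud[i] and cand_fit < fit[i]:
                x[i], fit[i] = cand, cand_fit
                loud[i] *= alpha
                rate[i] = rate0 * (1.0 - np.exp(-gamma * t))
                if cand_fit < best_fit:
                    best, best_fit = cand.copy(), cand_fit
        # Step 4: stop early once the best fitness is below the threshold.
        if best_fit < tol:
            break
    return best, best_fit

sphere = lambda p: float(np.sum(p ** 2))   # simple test objective, not a forecasting task
best, best_fit = bat_algorithm(sphere, dim=4)
```

The sphere function is used only to keep the example self-contained; the best fitness can never get worse during a run, because a candidate replaces the best bat only when it improves on it.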

3. Implementation

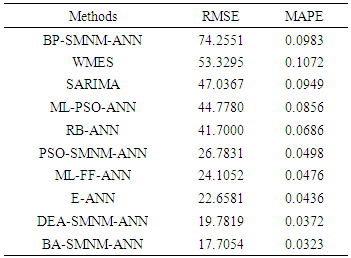

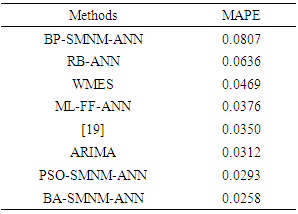

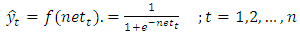

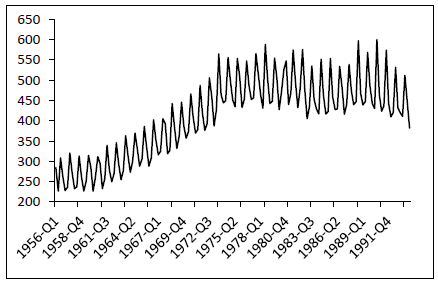

- To show the performance of the BA-SMNM-ANN, Australian Beer Consumption “(AUST)" data with 148 observations between years 1956 and 1994 was analyzed firstly.The results obtained from various methods have been compared in respect to RMSE and Mean Absolute Percentage Error (MAPE) criteria given in Eq. 7.

| (9) |

and

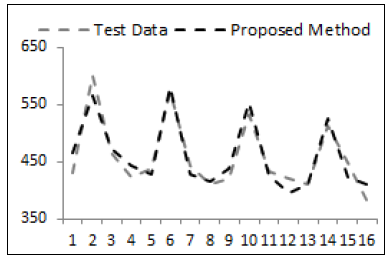

and  show the number of training samples, observed values and the forecasting values, respectively.The last 16 observations of the time series was taken as test data. In addition to the proposed method, AUST data, given in Figure 3, was analyzed by seasonal autoregressive integrated moving average (SARIMA), Winter's multiplicative exponential smoothing (WMES), Multi-layer feed-forward neural network (ML-FF-ANN), Multilayer neural network based on particle swarm optimization (ML-PSO-ANN), Back propagation learning algorithm based on single multiplicative neuron model neural network (BP-SMNM-ANN), single multiplicative neuron model artificial neural network based on particle swarm optimization (PSO-SMNM-ANN), single multiplicative neuron model artificial neural network based on differential evolution algorithm (DA-SMNM-ANN), Radial basis artificial neural network (RB-ANN), Elman neural network (E-ANN) methods.

show the number of training samples, observed values and the forecasting values, respectively.The last 16 observations of the time series was taken as test data. In addition to the proposed method, AUST data, given in Figure 3, was analyzed by seasonal autoregressive integrated moving average (SARIMA), Winter's multiplicative exponential smoothing (WMES), Multi-layer feed-forward neural network (ML-FF-ANN), Multilayer neural network based on particle swarm optimization (ML-PSO-ANN), Back propagation learning algorithm based on single multiplicative neuron model neural network (BP-SMNM-ANN), single multiplicative neuron model artificial neural network based on particle swarm optimization (PSO-SMNM-ANN), single multiplicative neuron model artificial neural network based on differential evolution algorithm (DA-SMNM-ANN), Radial basis artificial neural network (RB-ANN), Elman neural network (E-ANN) methods. | Figure 3. AUST data between the years of 1956-1994 |
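The evaluation setup described above can be sketched as follows. The body of Eq. (9) is not reproduced here, so the usual textbook definitions of RMSE and MAPE are assumed; MAPE is returned as a fraction and may need to be multiplied by 100 to match a percentage scale. The series used below is a placeholder with 148 values and is not the AUST data.

```python
import numpy as np

def rmse(observed, forecast):
    """Root mean squared error between observed values and forecasts."""
    observed = np.asarray(observed, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return float(np.sqrt(np.mean((observed - forecast) ** 2)))

def mape(observed, forecast):
    """Mean absolute percentage error as a fraction (observed values must be non-zero)."""
    observed = np.asarray(observed, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return float(np.mean(np.abs((observed - forecast) / observed)))

def holdout_split(series, n_test=16):
    """Keep the last `n_test` observations as test data and the rest as training data."""
    series = np.asarray(series, dtype=float)
    return series[:-n_test], series[-n_test:]

series = np.arange(1.0, 149.0)            # placeholder series with 148 observations
train, test = holdout_split(series)       # 132 training and 16 test observations
```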


| Figure 4. The graph of real observations and forecasts obtained from proposed method for AUST data |

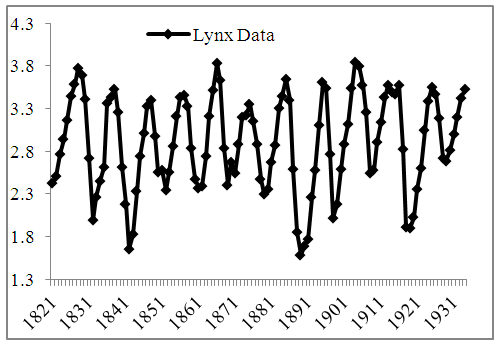

| Figure 5. Logarithmic Canadian lynx data series (1821-1934) |


4. Discussion and Conclusions

Several kinds of ANN models are used in the time series forecasting literature. Although ML-FF-ANN has been commonly used for time series forecasting, its architecture selection problem must be solved to obtain reasonable forecasts. SMNM-ANN does not have such a problem because it has only one neuron. Various learning algorithms for training SMNM-ANN exist in the literature. In this paper, SMNM-ANN is trained by BA for time series forecasting problems. The real-life time series implementations demonstrate the outstanding forecasting performance of BA-SMNM-ANN.
