Otar Verulava

Department of Artificial Intelligence, Georgian Technical University, Tbilisi, Georgia

Correspondence to: Otar Verulava, Department of Artificial Intelligence, Georgian Technical University, Tbilisi, Georgia.


Copyright © 2015 Scientific & Academic Publishing. All Rights Reserved.

Abstract

This paper considers the learning process of a formal neuron, in which recognition errors are corrected by adjusting the weight coefficients and the neuron threshold. In the first stage, initial descriptions (templates) of the patterns are chosen or received. In the next stage, the initial descriptions are corrected according to the results of recognition: if the recognition result is correct, the description is left unchanged; if it is wrong, the error is corrected by a previously developed algorithm (program module). The error-correction algorithm is an iterative procedure in which every step begins by presenting an unknown realization for recognition and ends, depending on the recognition result, with a rewarding or non-rewarding procedure. The learning algorithm consists of two stages: first, descriptions of the considered pattern are formed from the initial descriptions and the realizations of the learning set; second, the final descriptions are formed using the descriptions of the other patterns and their realizations. Realizations of the patterns to be recognized are represented by vectors of the feature (measurement) space whose elements are real numbers. These vectors (or possibly matrices) have the same dimension for every realization, equal to the number of features in the feature space. Where needed during learning, an individual feature or the whole feature space is evaluated according to the correct recognition criterion. The recognition procedure by formal neurons is used simultaneously for describing patterns, for error correction and for implementing the feature evaluation algorithms. A compact and original description of the recognition process by the formal neuron is offered.

Keywords:

Neuron, Learning, Error-Correction, Recognition

Cite this paper: Otar Verulava, Error-Correction of Recognition in the Process of Neuron Learning, American Journal of Intelligent Systems, Vol. 5 No. 3, 2015, pp. 92-96. doi: 10.5923/j.ajis.20150503.02.

1. Introduction

Most works on neural networks in the scientific literature describe methods for constructing the network structure, while comparatively few are devoted to error correction during the recognition process. Where error correction is discussed, e.g. in the error back-propagation algorithm, the causes of the error and their role (contribution) in the recognition error are not identified. This work discusses a method for detecting and correcting recognition errors based on neuron feedback, through which information about the error is propagated and then used to change the parameters of the neurons: the weight coefficients and, if necessary, the threshold value. The change algorithm is iterative and continues until the recognition error is corrected.

In addition, a method of feature evaluation is described, by which each feature or group of features is evaluated from the viewpoint of correct recognition.

2. Description of the Recognition Process by the Formal Neuron

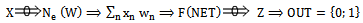

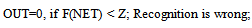

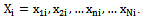

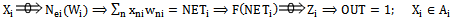

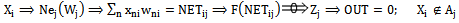

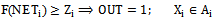

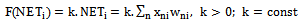

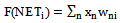

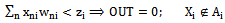

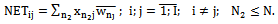

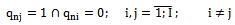

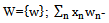

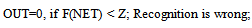

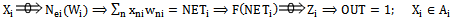

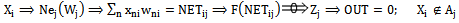

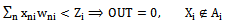

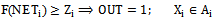

When a known or unknown set of patterns is to be recognized, for example the realizations of a learning set, vectors from the feature space arrive at the input of the recognition system, in our case a neuron or a neural network. The coordinates of each vector are multiplied by the corresponding weight coefficients of the neuron, and the products are added by the summing element. The result is the neuron's reaction to the presented vector, the number NET, from which the corresponding value of the activation function F(NET) is determined. The value of the activation function is compared with the neuron threshold Z. According to the result of the comparison, the neuron output OUT is formed; it equals zero if the recognition is wrong and one if the recognition is correct.

Let us define the predicate “presentation” as the appearance of the vector to be recognized at the input of the neuron. Its mathematical analogue is written with a graphical symbol that expresses the predicate and represents the result obtained through the mathematical operation. This allows us to express the recognition process compactly:

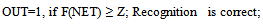

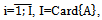

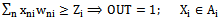

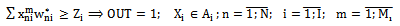

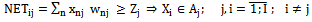

| (2.1) |

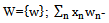

Expression (2.1) involves the realization to be recognized, the set of neurons Ne(W) with their weight coefficients, and the summing element.

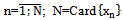

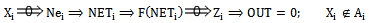

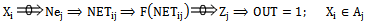

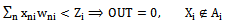

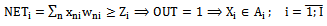

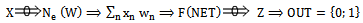

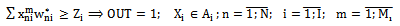

The value of the activation function F(NET) is determined from NET and compared with the neuron threshold Z, and the comparison determines the value of the neuron output signal OUT. In particular, we have:

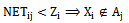

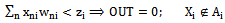

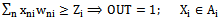

| (2.2) |

| (2.3) |

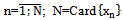

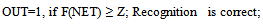

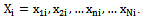
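The recognition step described by (2.1) to (2.3) can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the function name `neuron_out`, the identity default for the activation function and the choice of `>=` for the threshold comparison are assumptions made for the example.

```python
from typing import Callable, Sequence


def neuron_out(
    x: Sequence[float],
    w: Sequence[float],
    z: float,
    activation: Callable[[float], float] = lambda net: net,
) -> int:
    """Return OUT for realization x presented to a neuron with weights w.

    Assumption: OUT = 1 when F(NET) >= Z, otherwise OUT = 0.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same dimension")
    # Summing element: NET is the sum of coordinate-by-weight products.
    net = sum(wi * xi for wi, xi in zip(w, x))
    # Compare the activation value with the neuron threshold Z.
    return 1 if activation(net) >= z else 0


# Same realization and weights, two different thresholds.
print(neuron_out([1.0, 2.0], [0.5, 0.25], z=0.9))
print(neuron_out([1.0, 2.0], [0.5, 0.25], z=1.5))
```

Passing a different `activation` (for example a sigmoid) changes only the comparison step; the summing element stays the same.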

Expression (2.2) describes the decision-making process when an unknown realization is presented. Note that the experimenter's conclusion about whether recognition is correct or incorrect, especially during learning, may differ from the formal procedure; we discuss this below.

Let us denote the set of patterns to be recognized by A. Each element of this set is a pattern, and each pattern is represented by its realizations; the coordinates of a realization vector are real numbers obtained by measuring the features:

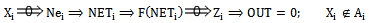

3. Description of Correct Recognition Process

The correct recognition process has two cases. In the first, a realization of a pattern is presented to the neuron that recognizes that same pattern; we say that the realization has appeared at the input of “Its” type neuron. In the second, a realization of one pattern is presented for recognition to the neuron of a different pattern, that is, to “The other” type neuron. Both cases are described in the terms of (2.1) and (2.2):

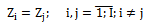

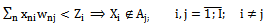

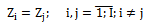

| (3.1) |

| (3.2) |

Note that OUT=1 in (3.1) but OUT=0 in (3.2). Nevertheless, recognition is correct in both cases, and in this respect (3.1) and (3.2) differ from (2.1) and (2.2).

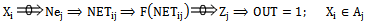

4. Description of Incorrect Recognition Process

Incorrect recognition also has two cases: in the first, a realization is presented to “Its” neuron and is rejected; in the second, a realization of one pattern is presented for recognition to “The other” type neuron and is accepted. To describe the first case, let us assume that a realization is presented to its own neuron as in (3.1), with the difference that the output is equal to zero:

| (4.1) |

| (4.2) |

Expressions (4.1) and (4.2) describe the incorrect recognition process. The aim of what follows is to correct this error. To that end, we examine in detail how the error arises and work out algorithms for its correction.

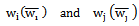
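The four outcomes described in Sections 3 and 4 can be summarized in code. The sketch below is illustrative only; the function name `classify_outcome` and the flag `is_own_neuron` are hypothetical, and the mapping to expression numbers follows the text above.

```python
def classify_outcome(out: int, is_own_neuron: bool) -> str:
    """Label a recognition result as correct or erroneous.

    out           -- neuron output OUT (0 or 1)
    is_own_neuron -- True if the realization was presented to "Its" neuron,
                     False if it was presented to "The other" type neuron
    """
    if out not in (0, 1):
        raise ValueError("OUT must be 0 or 1")
    if is_own_neuron:
        # "Its" neuron should accept the realization (OUT = 1).
        return "correct (3.1)" if out == 1 else "error (4.1)"
    # "The other" neuron should reject the realization (OUT = 0).
    return "correct (3.2)" if out == 0 else "error (4.2)"


for own in (True, False):
    for out in (1, 0):
        print(own, out, classify_outcome(out, own))
```

The point of the table is that OUT alone does not say whether recognition was correct; it has to be read together with the type of neuron that produced it.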

5. Error Correction Whilst Recognizing “Its” Realization

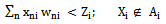

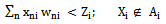

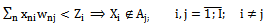

To examine the error described by (4.1), let us consider the second (last) part of that expression:

| (5.1) |

There is one way to correct the error: the weight coefficients of the neuron must be changed so that the sign of the inequality is reversed:

| (5.2) |

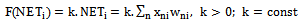

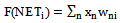

Let us consider how the value of the activation function is obtained. The function F(.) is called an activation function; it can be any of several nonlinear functions, and we choose one of them. Activation functions share common properties: they increase monotonically and have a nearly linear segment (the activation function of the perceptron is an exception). We can therefore assume that the parameters of F(NET), or the neuron weight coefficients, are chosen so that any change of the weight coefficients keeps us on the linear segment of the activation function, and the following relation holds:

| (5.3) |

When (5.3) holds, k is a scale coefficient that changes as the weight coefficients are learned. We may therefore take k=1, so that (5.3) becomes F(NET) = NET, and (5.1) is accordingly replaced by:

| (5.4) |

The error is corrected during recognition if, in place of (5.1), i.e. (5.4), the inequality expressed by (5.2) holds:

| (5.5) |
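Under the linear-segment assumption, the step from (5.1) to (5.5) can be summarized as below. This is a sketch of one consistent reading rather than a reproduction of the numbered formulas: the symbols $w_j$ and $x_j$ for the weight coefficients and realization coordinates, and the strictness of the inequalities, are assumptions.

```latex
\[
F(\mathrm{NET}) = k \cdot \mathrm{NET}, \quad k = 1
\;\Longrightarrow\;
F(\mathrm{NET}) = \mathrm{NET} = \sum_{j=1}^{n} w_j x_j
\]
\[
\text{error on ``its'' neuron:}\;\; \sum_{j=1}^{n} w_j x_j < Z
\qquad\longrightarrow\qquad
\text{corrected:}\;\; \sum_{j=1}^{n} w_j x_j \ge Z
\]
```

With non-negative coordinates $x_j$, increasing any $w_j$ can only increase the sum, which is why a procedure that only increases weights can move a realization from the first inequality to the second.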

Inequality (5.5) can be obtained from inequality (5.4) by increasing the weight coefficients. To do so, the weight coefficients must first be given initial values. Let us assume that the realization set contains a learning set of realizations of this type for which the following condition is fulfilled:

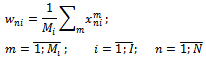

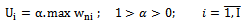

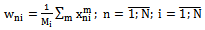

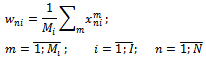

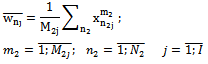

Let us compute the initial values of the weight coefficients from the coordinates of the learning-set realizations (vectors), through the so-called awarding procedure:

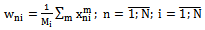

| (5.6) |

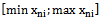

By conducting overlay procedure for any kind of neuron e.g.  we take

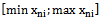

we take  weighing coefficients, the values of which are placed at

weighing coefficients, the values of which are placed at  range. It is possible to increase weighing coefficient step-by-step using recurrent iteration procedure and heuristically i.e. all of a sudden by adding any constant/unvarying number. We should carry out increasing i.e. awarding procedure for the weighing coefficients values of which is close or equals to

range. It is possible to increase weighing coefficient step-by-step using recurrent iteration procedure and heuristically i.e. all of a sudden by adding any constant/unvarying number. We should carry out increasing i.e. awarding procedure for the weighing coefficients values of which is close or equals to  it means these characteristics are more important for the given pattern than any other. Let us discuss recurrent procedure:

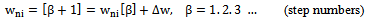

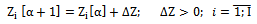

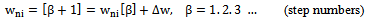

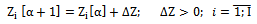

| (5.7) |

If iteration (5.7) is used, its parameter must be chosen with the initial weight coefficients in mind. We check the recognition result at every step of the iteration through (5.5), and we keep increasing the number of steps until inequality (5.5) becomes true. Each step corresponds to presenting the incorrectly recognized realization for recognition once more, with the weight coefficients obtained at the previous step carried over unchanged to the next step.

Let us give a separate designation to those values of the weight coefficients at which inequality (5.5) becomes true. In that case we have:

| (5.8) |

The truth of (5.8) means that the recognition error is corrected. Letting the indices i and m vary shows that errors can be corrected for the realizations of any learning set and for any pattern of the set A. The error-correction algorithm in (5.8) does not alter the correct recognition results obtained through changes of the neuron weight coefficients of other patterns.

In changing the weight coefficients we use only the “awarding” procedure, expression (5.7), and we choose equal quantities as the initial values of the neuron thresholds:

| (5.9) |

Then, using procedures (5.8) and (5.6), all learning-set realizations of the pattern set can be recognized without errors, while the correct recognition results obtained previously remain unchanged.
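The awarding iteration of this section can be sketched as follows. The exact form of (5.7) is assumed here to be an additive update by a constant step `delta`; that assumption, the non-negativity of the feature values, the `>=` comparison and all names are choices made for this example.

```python
from typing import List, Sequence, Tuple


def net_value(x: Sequence[float], w: Sequence[float]) -> float:
    """NET: sum of products of coordinates and weight coefficients."""
    return sum(wi * xi for wi, xi in zip(w, x))


def award_until_recognized(
    x: Sequence[float],
    w: Sequence[float],
    z: float,
    delta: float = 0.1,
    max_steps: int = 1000,
) -> Tuple[List[float], int]:
    """Increase the weights step by step until NET >= Z for realization x.

    Assumptions: coordinates of x are non-negative, and one awarding step
    adds the same constant delta to every weight coefficient.
    Returns the final weights and the number of awarding steps used.
    """
    if delta <= 0:
        raise ValueError("delta must be positive")
    weights = list(w)
    steps = 0
    # Re-present the realization after every step; stop once it is accepted.
    while net_value(x, weights) < z:
        if steps == max_steps:
            raise RuntimeError("error not corrected within max_steps")
        weights = [wi + delta for wi in weights]  # awarding step
        steps += 1
    return weights, steps


new_w, n_steps = award_until_recognized([1.0, 1.0], [0.2, 0.3], z=1.0)
print(new_w, n_steps)
```

Because weights are only ever increased, realizations of the same pattern that were already accepted stay accepted under the non-negativity assumption, which mirrors the claim that earlier correct results are preserved.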

6. Error Correction Whilst Recognizing Realizations of “Other’s” Pattern

The incorrect recognition process is described by (4.1) and (4.2). Let us consider expression (4.1), in which the corresponding quantity is replaced by its expression according to (5.1):

| (6.1) |

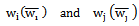

To correct the error, the inequality sign in (6.1) must be reversed, which can be done by increasing the weight coefficients, i.e. by the method described in the previous section.

Let us consider the right-hand part of (4.2):

| (6.2) |

To correct the error, either the threshold must be increased or the weight coefficients decreased. Since by assumption we use only the “awarding” procedure, we choose to increase the neuron threshold by a procedure of the same form as (5.7), with the threshold value in place of the weight coefficient:

| (6.3) |

Let us give a designation to the required value of the neuron threshold, at which the inequality sign in (6.2) is reversed:

| (6.4) |

Since a neuron has a single threshold, raising the threshold of a neuron of the given type to the required value may introduce errors in the recognition of “its” realizations; this is especially likely where the corresponding inequality is fulfilled. To find the features on which the “awarding” procedure can be carried out, a recognition experiment must be performed for exactly those pattern realizations for which this inequality holds. If an error occurs, the procedure described in Section 5 is repeated.

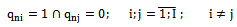
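The threshold-raising step and the follow-up check on “its” realizations can be sketched as below. The additive update by `delta_z` is an assumed form of (6.3), and the “at risk” test (accepted under the old threshold, rejected under the new one) is one reading of the condition mentioned in the text; all names are hypothetical.

```python
from typing import List, Sequence


def net_value(x: Sequence[float], w: Sequence[float]) -> float:
    """NET: sum of products of coordinates and weight coefficients."""
    return sum(wi * xi for wi, xi in zip(w, x))


def raise_threshold(
    x_other: Sequence[float],
    w: Sequence[float],
    z: float,
    delta_z: float = 0.1,
    max_steps: int = 1000,
) -> float:
    """Raise Z until the "other" realization is rejected (NET < Z)."""
    if delta_z <= 0:
        raise ValueError("delta_z must be positive")
    net = net_value(x_other, w)
    steps = 0
    while net >= z:
        if steps == max_steps:
            raise RuntimeError("error not corrected within max_steps")
        z += delta_z  # assumed additive form of the threshold update
        steps += 1
    return z


def own_realizations_at_risk(
    own_set: Sequence[Sequence[float]],
    w: Sequence[float],
    z_old: float,
    z_new: float,
) -> List[Sequence[float]]:
    """Own realizations accepted under z_old but rejected under z_new."""
    return [x for x in own_set if z_old <= net_value(x, w) < z_new]


w = [0.5, 0.5]
z_new = raise_threshold([1.0, 1.0], w, z=0.75)
print(z_new)
print(own_realizations_at_risk([[1.2, 1.0], [0.8, 1.0], [2.0, 2.0]], w, 0.75, z_new))
```

Each realization returned by `own_realizations_at_risk` would then be passed back through the awarding procedure of Section 5.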

7. Evaluation of the Features According to the Correct Recognition Criteria

Features are usually evaluated by clustering methods, for example the “Theory of Rank Links” [1], [2]; with their help the clusters of each pattern are established, along with their degree of compactness or non-compactness, etc. In our case we consider recognition by the formal neuron, and for feature evaluation we use only the correct recognition criterion. This criterion consists in determining those features whose changes provide correct recognition or, if the recognition is incorrect, correct the error.

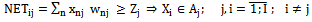

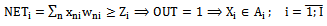

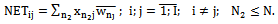

The recognition of a realization by the neuron of its own pattern is described by (3.1). Recognition essentially consists in obtaining the NET value, which by (5.3) may be used in place of the activation function value to obtain the final recognition result OUT. In what follows, changes of the neuron weight coefficients are assumed to keep F(.) on its linear segment (5.3).

Under the assumption of (5.3), the recognition result is correct if inequality (5.5) is fulfilled:

| (7.1) |

The value in (7.1) is a sum of n products. Let us examine each term of the sum, which is the product of a coordinate of the learning-set realization and the corresponding weight coefficient. For inequality (7.1) to be fulfilled, each product should be as large as possible. Since the coordinates of a realization are feature values obtained by measurement and cannot be changed, we can change only the weight coefficients, i.e. the elements of the weight vector, and we choose the initial values of the weight coefficients from the learning-set realizations. Denoting the elements of the set X of learning realizations and their coordinates accordingly, the initial values of the weight coefficients are:

| (7.2) |

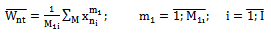

By fulfilling of 7.2 term we will get vectors for each  pattern and neuron initial weighing coefficients.

pattern and neuron initial weighing coefficients.  set, coordinates of which

set, coordinates of which  represent objective values, as they are received through the processing of the results of features.It is obvious that as high of

represent objective values, as they are received through the processing of the results of features.It is obvious that as high of  meaning/value in 7.1 term is as high its contribution in receiving

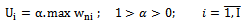

meaning/value in 7.1 term is as high its contribution in receiving  value. By this way the reliability of correct recognition will increase. In order to evaluate weighing coefficients we have to ascertain lower limit of their meanings/values, according to which we can evaluate meanings/values of the given features, i.e. their contribution in the formation of NET value. We should choose lower limit of

value. By this way the reliability of correct recognition will increase. In order to evaluate weighing coefficients we have to ascertain lower limit of their meanings/values, according to which we can evaluate meanings/values of the given features, i.e. their contribution in the formation of NET value. We should choose lower limit of  value according to maximum meaning of the weighing coefficients of features.

value according to maximum meaning of the weighing coefficients of features. | (7.3) |

Where  is a lower limit of the meanings/values of

is a lower limit of the meanings/values of  neuron weighing coefficients.Definition 7.1

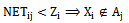

neuron weighing coefficients.Definition 7.1  for

for  feature is important for the given pattern, if the value received by measuring of which is more or equals to a lower limit of the weighing coefficient:

feature is important for the given pattern, if the value received by measuring of which is more or equals to a lower limit of the weighing coefficient:  Let us assume that the term of 7.1 definition is fulfilled by the number of feature

Let us assume that the term of 7.1 definition is fulfilled by the number of feature  Let us calculate new weighing coefficients according to 7.2 term.

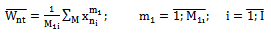

Let us calculate new weighing coefficients according to 7.2 term. | (7.4) |

Where  constitutes a number of those realizations, where inequality of 7.1 term was fulfilled. It is clear that

constitutes a number of those realizations, where inequality of 7.1 term was fulfilled. It is clear that  that’s why by using

that’s why by using  in 7.1 term, the inequality will strengthen, which means that the reliability of correct recognition will increase. In that case when the recognition is wrong, which means that

in 7.1 term, the inequality will strengthen, which means that the reliability of correct recognition will increase. In that case when the recognition is wrong, which means that  it can be corrected. In case the error is not corrected, we should use algorithm described in chapter 5. Where we will award features defined by 7.1 term. We will isolate subsets from the feature sets by (7.3) and (7.4) terms, which is important and necessary for the correct recognition of “its” realisation of the given pattern.Let us discuss the method and algorithm for the features definition, which gives us opportunity to correct the result of the incorrect recognition, which we might have of one type, for e.g. when presenting the

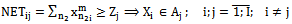

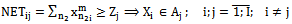

it can be corrected. In case the error is not corrected, we should use algorithm described in chapter 5. Where we will award features defined by 7.1 term. We will isolate subsets from the feature sets by (7.3) and (7.4) terms, which is important and necessary for the correct recognition of “its” realisation of the given pattern.Let us discuss the method and algorithm for the features definition, which gives us opportunity to correct the result of the incorrect recognition, which we might have of one type, for e.g. when presenting the  realization for the other type Nej neuron. According to terms 7.2 we will have:

realization for the other type Nej neuron. According to terms 7.2 we will have: | (7.5) |

To correct the error, the sign of inequality (7.5) must be reversed, so the right-hand side of the inequality must be reduced. The reduction can be carried out in two ways:

1. By decreasing the weight coefficients, which may cause errors in recognizing “Its” realizations; we cannot use this method because, by assumption, we use only the awarding procedure.

2. By reducing the sum over the weight coefficients, i.e. the NET value, as described in (7.3) and (7.4). Let us suppose that we have determined the lower limit Uj of the weight coefficients and, through it and Definition 7.1, the list of important features for the pattern and for the neuron weight coefficients. Let us calculate the weight coefficients according to (7.4):

| (7.6) |

The counts in (7.6) are, respectively, the number of those realizations for which (7.3) is fulfilled and the number of those features for which the inequality of Definition 7.1 is true.

To calculate the value in (7.5), we replace the weight coefficients by their expression and obtain:

| (7.7) |

The summation in (7.7) runs only over the indices of the important features, of which there are, as a rule, presumably fewer than n. The number of terms in the sum (7.7) is therefore reduced, which may reverse the sign of inequality (7.5):

| (7.8) |

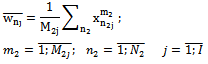
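The feature-evaluation steps of this section can be illustrated with a short sketch. Several details are assumed rather than taken from the numbered formulas: initial weights are computed as per-feature means over the learning set (an assumed form of (7.2)), the lower limit is a fraction `alpha` of the maximum weight (an assumed form of (7.3)), and all function names are hypothetical.

```python
from typing import List, Sequence


def initial_weights(learning_set: Sequence[Sequence[float]]) -> List[float]:
    """Assumed form of (7.2): per-feature mean over the learning set."""
    m = len(learning_set)
    n = len(learning_set[0])
    return [sum(x[j] for x in learning_set) / m for j in range(n)]


def important_features(w: Sequence[float], alpha: float = 0.5) -> List[int]:
    """Indices of features whose weight reaches the lower limit.

    Assumed form of (7.3): lower limit = alpha * max(w), 0 < alpha <= 1.
    """
    lower_limit = alpha * max(w)
    return [j for j, wj in enumerate(w) if wj >= lower_limit]


def restricted_net(
    x: Sequence[float], w: Sequence[float], features: Sequence[int]
) -> float:
    """NET computed only over the important features."""
    return sum(w[j] * x[j] for j in features)


own_learning_set = [[1.0, 0.2, 0.8], [0.8, 0.0, 1.0]]
w = initial_weights(own_learning_set)
features = important_features(w)
other_x = [0.1, 1.0, 0.2]  # a realization of another pattern

full_net = sum(wj * xj for wj, xj in zip(w, other_x))
print(features, full_net, restricted_net(other_x, w, features))
```

Dropping the unimportant features removes terms from the sum, so for non-negative data the restricted NET of an “other” realization cannot exceed the full NET; this is the mechanism by which the inequality sign may be reversed without decreasing any weight.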

İn that case if the sign of the inequality in 7.5, despite the attempt will not change, then we should use the procedure described in chapter 6, i.e increasing the threshold, for the compensation of which it is necessary to increase weighing coefficient. In order not to make new errors while increasing  coefficients while recognising

coefficients while recognising  realizations, when defining features for awarding we should use the procedure described below.Let us assume that

realizations, when defining features for awarding we should use the procedure described below.Let us assume that  and

and  for neuron patterns

for neuron patterns  and

and  We have the vectors of weighing coefficients

We have the vectors of weighing coefficients  calculated by the 7.2 and 7.3 terms. Let us make binary vectors

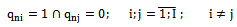

calculated by the 7.2 and 7.3 terms. Let us make binary vectors  by means of them, the elements of which fulfill the term:

by means of them, the elements of which fulfill the term: It is obvious that vectors

It is obvious that vectors  will be the same dimensional as

will be the same dimensional as  vectors. Let’s assume that for recognition of

vectors. Let’s assume that for recognition of  pattern

pattern  realization we get an error

realization we get an error  pattern on

pattern on  neuron. Let us choose corresponding

neuron. Let us choose corresponding  for

for  as means of awarding, for which the term is satisfied.

as means of awarding, for which the term is satisfied. | (7.9) |

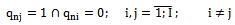

If, conversely, the error arises in the opposite direction, i.e. a realization of the second pattern is misrecognized on the neuron of the first pattern, then to correct the error we should choose the features and the corresponding weight coefficients for which the following condition is fulfilled:

| (7.10) |

In both cases inequality (7.8) is strengthened, so the recognition results obtained through inequality (7.1) remain unchanged.
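The selection of features for awarding through binary vectors can be illustrated as follows. The conditions (7.9) and (7.10) are not reproduced here; the sketch assumes one plausible reading, namely that a binary element is 1 when the weight reaches the lower limit, and that the features to award are those important for one pattern but not for the other. The names and the fraction `alpha` are hypothetical.

```python
from typing import List, Sequence


def binary_vector(w: Sequence[float], alpha: float = 0.5) -> List[int]:
    """1 where the weight reaches the assumed lower limit alpha * max(w)."""
    lower_limit = alpha * max(w)
    return [1 if wk >= lower_limit else 0 for wk in w]


def features_to_award(b_own: Sequence[int], b_other: Sequence[int]) -> List[int]:
    """Features important for the own pattern and not for the other one.

    Awarding only these weights raises NET for "its" realizations while
    leaving the features shared with the other pattern untouched.
    """
    if len(b_own) != len(b_other):
        raise ValueError("binary vectors must have the same dimension")
    return [k for k, (a, b) in enumerate(zip(b_own, b_other)) if a == 1 and b == 0]


w_i = [0.9, 0.1, 0.9]  # weights of the first pattern's neuron
w_j = [0.2, 0.8, 0.7]  # weights of the second pattern's neuron
b_i, b_j = binary_vector(w_i), binary_vector(w_j)

print(b_i, b_j)
print(features_to_award(b_i, b_j))  # candidates when awarding the first neuron
print(features_to_award(b_j, b_i))  # candidates when awarding the second neuron
```

The two calls at the end correspond to the two error directions discussed above; swapping the arguments swaps which neuron is being awarded.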

8. Conclusions

This work describes a method of error correction in the learning process of a neuron and of a neural network, and elaborates the theoretical and applied aspects of the learning method. Two ways in which an error can arise are identified and described algorithmically: first, an error made in recognizing realizations of “Its” pattern; second, an error made in recognizing realizations of “Other’s” pattern. The two cases require different theoretical and applied means. It is proved that an error can be corrected by changing the values of the neuron weight coefficients and thresholds. Only the awarding procedure is used; it provides error correction as long as the necessary measures are taken to avoid the neuron over-filling effect.

It has been proved theoretically that the correction of one error does not cause other errors.

References

| [1] | O. Verulava, R. Khurodze, Neural Networks modeling by rank of links, Proceedings of the symposium “The XIV international symposium large systems control”, Tbilisi, 2000. |

| [2] | O. Verulava, “Clustering analysis by ‘rank of links’”, Transactions N3(414), Georgian Technical University, Tbilisi, 1997. |

| [3] | Ramaz Khurodze, Development of the Learning Process for the Neuron and Neural Network for Pattern Recognition, American Journal of Intelligent Systems, Vol. 5 No. 1, 2015, pp. 34-41. doi: 10.5923/j.ajis.20150501.04. |

| [4] | R. Khurodze, O. Verulava, M. Chkhaidze, Determining the Number of Neurons Using the Cluster Identification Methods, Bulletin of the Georgian National academy of sciences, vol. 8, no 2, 2014. |

| [5] | O. Verulava, R. Khurodze “Theory of rank of links, modeling of recognition processes” Mathematic Research Developments. New York 2011. |

| [6] | Dr. Rama Kishore, Taranjit Kaur. “Back propagation Algorithm: An Artificial Neural Network Approach for Pattern Recognition”. |

| [7] | Soren Goyai, IIT Kanpur Paul Benjamin, “Object Recognition Using Deep Neural Networks: A Survey” Pace University. |

| [8] | Igor Aizenberg, Senior Member. A Modified Error-Correction Learning Rule for Multilayer Neural Network with Multi-Valued Neurons. |

| [9] | Bernard Widrow and Michael A. Lehr. Artificial Neural Networks of the Perceptron, Madaline, and Backpropagation Family. |

