American Journal of Intelligent Systems

p-ISSN: 2165-8978 e-ISSN: 2165-8994

2012; 2(7): 177-183

doi: 10.5923/j.ajis.20120207.04

Dynamic Mission Control for UAV Swarm via Task Stimulus Approach

Haoyang Cheng, John Page, John Olsen

School of Mechanical and Manufacturing Engineering, University of New South Wales, Sydney, 2052, Australia

Correspondence to: Haoyang Cheng, School of Mechanical and Manufacturing Engineering, University of New South Wales, Sydney, 2052, Australia.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Interest in self-organized (SO) multi-robotic systems is increasing because of their flexibility, robustness, and scalability in performing complex tasks. This paper describes a decentralized task allocation model based on both task stimulus intensity and a response threshold. The response threshold method was developed through observations of social insects. It allows a swarm of insects with a relatively low level of intelligence to perform complex tasks. In this work, an agent-based simulation environment is developed incorporating these ideas. The mission scenario simulated in this study is a wide area search and destroy mission in an initially unknown environment. The mission objectives are to allocate a UAV swarm effectively so as to both optimize coverage of the search space and attack targets. Rule-based behaviours were used to create UAV formations. Two sets of simulations with different swarm sizes and target numbers were performed. The simulation results show that, with task stimulus intensity and a response threshold, the UAV swarm demonstrates emergent behaviour and individual vehicles respond adaptively to the changing environment.

Keywords: UAV, Swarm Intelligence, Self-Organization, Simulation

Cite this paper: Haoyang Cheng, John Page, John Olsen, "Dynamic Mission Control for UAV Swarm via Task Stimulus Approach", American Journal of Intelligent Systems, Vol. 2 No. 7, 2012, pp. 177-183. doi: 10.5923/j.ajis.20120207.04.


Nomenclature

- a = acceleration vector
- Wi = waypoint i
- NBi = set of neighbours of UAV i
- Pi = position vector of agent i
- Vi = velocity vector of agent i
- r = interaction range between UAVs
- dc = distance below which the collision avoidance rule takes effect
- ds = separation distance from other UAVs
- dt = separation distance from target
- norm(V) = normalized vector V
- wfi = weight factor for each rule
- S = stimulus intensity
- θ = response threshold
- Pij = probability that Ui attacks Tj

1. Introduction

With recent technological advances in autonomous control and communication, multi-robotic systems are receiving a great deal of attention because they can carry out complex tasks more effectively than single-robot systems. Multiple autonomous agents working as a group can exceed the sum of the performance of the individuals. Currently, the human factor associated with the UAV (unmanned aerial vehicle) operators' workload is one of the key limitations to increasing future UAS (unmanned aerial system) effectiveness[1]. This reliance on operators not only increases the expense of UAV operations, but also makes coordination among UAVs more complex. For these reasons, research is required to investigate methods of increasing UAV autonomy and interoperability, while reducing global communication and reliance on human operators.

The cooperative control of UAVs is a complex problem that is dominated by uncertainty, limited information, and task coupling. Because of these inherent complexities, centralized decision and control algorithms are traditionally adopted to optimise timing and task constraints. One of the mission scenarios considered in the literature is cooperative moving target engagement. Kingston and Schumacher solved this problem with a mixed integer linear program that addressed task timing constraints and agent dynamic constraints to generate a flyable path[2]. A genetic algorithm (GA) has also been used to efficiently search the space of possible solutions and provide the cooperative assignment[3]. Schumacher and Chandler addressed the problem of task allocation for a wide area search munitions scenario[4]. A network flow optimization model is used to develop a linear program for the optimal allocation of powered munitions to perform several tasks, such as search, classification, attack and finally damage assessment of potential targets. Cooperative task assignment within an adversarial environment was addressed in[5][6].
In their work, a cooperative task assignment was computed with additional knowledge of the future implications of a UAV's actions. This was done to improve the expected performance of the other UAVs. In order to implement a centralized controller, consensus has to be reached under communication constraints. False information and communication delays strongly negate the benefits of cooperative control[7]. Alighanbari, in his thesis[8], developed the unbiased distributed Kalman consensus algorithm, which he proved converges to an unbiased estimate for both static and dynamic communication networks.

Despite its benefits, a centralized controller lacks robustness, is computationally complex and depends on a high degree of global information. Such requirements make the implementation of a centralized approach intractable in real-life missions. In contrast, decentralized decision and control algorithms trade optimality and predictability for robustness and adaptation to environmental changes. A self-organized (SO) system, or swarm, is typically a decentralized control system made up of autonomous agents that are distributed in the environment and follow stimulus-response behaviours[9]. Examples from social insects, such as foraging and the division of labour, show that SO systems can generate useful emergent behaviours at the system level. Self-organised, swarm-based systems do not require a centralised plan or deliberate action from individuals. They therefore have the potential to reduce mission planning and hence the amount of intelligence required in control system design. Moreover, self-organised, swarm-based systems demonstrate robustness and scalability. This means that adding agents to or removing agents from the system may not significantly affect its overall performance and emergent behaviours.

Significant research effort has been invested in recent years into the design and simulation of intelligent swarm systems[10].
Intelligent swarm systems can generally be defined as decentralized systems comprised of relatively simple agents which are equipped with the limited communication, computation and sensing abilities required to accomplish a given task[11]. Gaudiano et al. applied quantitative methodologies to evaluate the performance of UAV swarms under a variety of conditions[12]. In Price's research, ten self-organization rules were implemented whose weight factors were collected into a single fitness function. This function was further refined using a genetic algorithm within the simulation[13]. Another widely adopted mechanism is digital pheromone maps that imitate the foraging behaviour of ants. Digital pheromones are modelled on the pheromone fields of the individual vehicles. By synchronizing their maps, the UAVs coordinate to avoid redundant searches[14]. Hauert et al. investigated the potential of using a swarm of UAVs to establish a wireless communication network[15]. They applied artificial evolution to develop neuronal controllers for a swarm of homogeneous agents.

This study designs a swarm controller to enable a swarm of UAVs to search for and attack targets. The concept of employing swarms of weapons was explored in[16]. In this concept, the individual members of the swarm may be less capable than conventional weapons, but through cooperation the swarm exhibits behaviours and capabilities that can exceed those demonstrated by conventional systems that do not employ cooperation. To implement a swarm system, the authors of[16] designed a rule set of discrete behaviours, which was governed by interactive subsumptive logic. Building upon their research, we adopted an adaptive task controller based on task stimulus to optimize the performance of the swarm. In their design, once the targets were discovered, the weapons would home in on them.
Through communication, other weapons had knowledge of how many weapons had been committed to a given target. Simple rules prevented excessive numbers of weapons from being expended on a single target. Our approach also takes into consideration the value of the targets and the time left in the mission. Thus the weapons are not largely expended in the early stage of the mission, especially on low value targets, which would leave an inadequate number of UAVs to search for the remaining targets.

The remainder of this paper is structured as follows. Section 2 describes the mission in detail and the simulation tools used in this study. In Section 3, we present the task controller. The results of the simulation are given in Section 4, while Section 5 concludes the paper.

2. Simulation Environment

In this section, we present the simulation tool and an overview of the simulated mission scenario.

2.1. Simulation Tool

Agent-based simulations of complex adaptive systems are becoming an increasingly popular tool in the artificial life community. The application of agent-based simulations in combat modelling has been explored by Ilachinski[17]. He argued that agent-based models are most useful when they are applied to complex systems that can be neither wholly described nor built by conventional models based on differential equations. Agent-based models are designed to allow users to explore evolving patterns of system-level behaviour that derive collectively from low-level interactions among their agents.

In this study, the SWARM simulator, developed by the Santa Fe Institute, was used to construct the simulation environment[18]. It provides a conceptual framework for designing, describing, and conducting experiments on agent-based models. This framework allows independent agents to interact via a schedule of discrete events. Bharathy et al. provided an architectural frame for generic agents, which comprises three external domains through which an agent interacts with its environment: the perception domain, the action domain and the communication domain[19]. Within the SWARM framework, the agents communicate both with each other and with their environment via messages. SWARM supplies a basic system library that manages a dynamic list of objects and handles message passing between objects. In our design, two different objects were modelled, namely the UAVs and their targets. The internal functions of the objects, which describe the rule set of behaviours, handle the messages that are sent to the object and modify its internal states.
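The message-passing pattern described above can be illustrated with a minimal Python sketch. It is not the SWARM library API: the names `Message`, `Agent` and `run_schedule` are hypothetical, and the handler simply stores the latest payload per topic, which is an assumption made only to keep the example short.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    sender: str
    topic: str
    payload: dict


@dataclass
class Agent:
    """Hypothetical agent: queues incoming messages and updates its own state."""
    name: str
    inbox: deque = field(default_factory=deque)
    state: dict = field(default_factory=dict)

    def receive(self, msg: Message) -> None:
        self.inbox.append(msg)

    def step(self) -> None:
        # Internal function: handle every queued message and modify internal state.
        while self.inbox:
            msg = self.inbox.popleft()
            self.state[msg.topic] = msg.payload


def run_schedule(agents, events):
    """Deliver (time, recipient_name, Message) events in time order."""
    by_name = {agent.name: agent for agent in agents}
    for _, recipient, msg in sorted(events, key=lambda event: event[0]):
        by_name[recipient].receive(msg)
        by_name[recipient].step()
```

In this sketch a UAV and a target would both be `Agent` instances, and all interaction between them goes through scheduled messages rather than direct access to each other's state.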

2.2. Mission Description

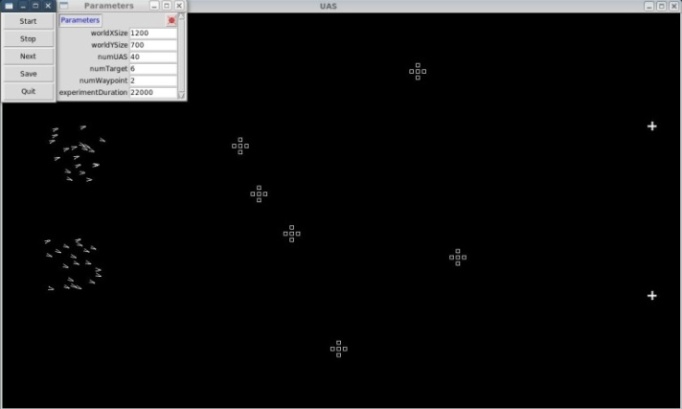

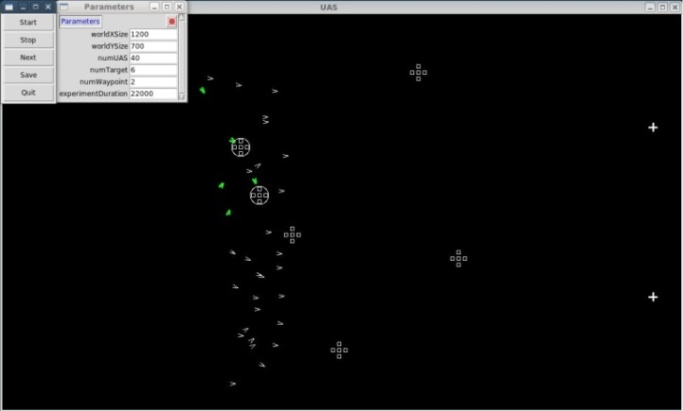

In applying these theoretical concepts to a UAV swarm application, we designed the following wide area search and attack scenario. The scenario aims to search for targets within a defined area and to destroy or degrade them. The following assumptions and functionalities are used in our simulation:

- The vehicles considered in this study are relatively simple platforms with the smallest effective payload and a minimal set of onboard detection devices to sense the environment and one another. One concept of such a platform is the Low Cost Autonomous Attack System (LOCAAS), which has a range of 100 nautical miles as well as laser radar with automatic target recognition to identify potential targets[20].
- The mission area is defined as a rectangular region. Each UAV is aware of the terrain boundaries and will turn as it approaches a boundary to remain within the target area. The UAV has only enough fuel to fly over the mission area once.
- The UAVs were modelled as identical agents in the simulator. Each UAV is equipped with a global positioning system (GPS) for autonomous waypoint navigation, a circular sensor to detect the position of other UAVs within a specified radius, and a limited-range communication system.
- The UAVs fly at constant altitude but at variable speed. Their vehicle dynamics are discussed in Section 3.
- The UAVs operate in either search mode or attack mode, and can change from search to attack only once. The UAVs are themselves weapons, which are destroyed in the attack process. Each attack has an 80 percent chance of being successful.
- The targets are stationary and randomly distributed within the target area. The potential targets could be relocatable air defence sites or vehicles in columns. The targets may be of high or low value. The stimulus of high value targets is set to be twice that of low value targets. The onboard target recognition software can recognize the target type. The location and nature of the targets are unknown to each UAV. Each target is assumed to be an array of ground vehicles or air defence units. In this simulation, each target array must be hit by 8 UAVs to be destroyed. The status of a target degrades as the number of UAV attacks increases, so that the stimulus attracting further attacks decreases.

The mission control strategy based on task stimulus is discussed in Section 3.

The mission started with two groups of UAVs entering the mission area from the left side (Figure 1). The UAVs were assumed to be deployed from a cargo aircraft, though this could have been a ship or ground transport. The feasibility of using cargo aircraft for the mass delivery of standoff munitions is investigated in[21]. The reason for deploying the UAVs in two groups was to encourage them to distribute themselves evenly across the space during the mission. The UAVs spread out immediately after release and formed a loose formation using a set of six rules (discussed in Section 3.1) to hunt for targets (Figure 2). In Figure 2, the circled targets are those that had been detected, and the UAVs shown in green had begun the attack. Through communication, other UAVs that met certain proximity requirements became aware of the identified targets. However, the onboard task controller of each UAV decided whether to attack the targets or proceed to search for other targets.
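The target assumptions listed above can be sketched as a small Python model. The 80 percent attack success rate, the 8 hits needed to destroy a target array and the 2:1 stimulus ratio between high and low value targets come from the scenario description. The baseline stimulus of 1.0 and the linear decay of stimulus with the number of hits are assumptions made for illustration only, since the text states only that the stimulus decreases; the class and constant names are hypothetical.

```python
import random
from dataclasses import dataclass

HITS_TO_DESTROY = 8       # from the scenario description
P_ATTACK_SUCCESS = 0.8    # from the scenario description
LOW_VALUE_STIMULUS = 1.0  # assumed baseline; high value targets use twice this


@dataclass
class Target:
    high_value: bool
    hits: int = 0

    @property
    def destroyed(self) -> bool:
        return self.hits >= HITS_TO_DESTROY

    @property
    def stimulus(self) -> float:
        base = LOW_VALUE_STIMULUS * (2.0 if self.high_value else 1.0)
        # Assumption: stimulus falls linearly as successful hits accumulate.
        remaining = max(HITS_TO_DESTROY - self.hits, 0) / HITS_TO_DESTROY
        return base * remaining

    def attack(self, rng: random.Random) -> bool:
        """One UAV attacks; the UAV is expended whether or not it hits."""
        if self.destroyed:
            return False
        hit = rng.random() < P_ATTACK_SUCCESS
        if hit:
            self.hits += 1
        return hit
```

Under these assumptions, a fresh high value target has stimulus 2.0, a fresh low value target has stimulus 1.0, and a destroyed target has stimulus 0.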

| Figure 1. Mission Start |

| Figure 2. Search and Attack |

3. Dynamic Mission Control

In this section, the rule sets of behaviours and the task controller are discussed.

3.1. Rule sets of Movement

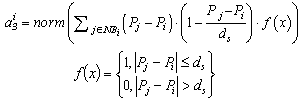

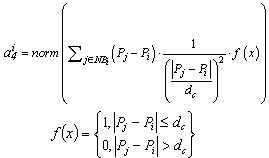

The behaviours of the UAVs are built upon rule sets describing formation maintenance and target interaction. The mathematical definition of the rules is inspired by previous research[13]. This study extends that research by introducing two behaviour logic modes on top of the rules: the search mode and the target engagement mode. The switch between behaviour modes is controlled by the task controller, which is discussed in detail in Section 3.2.

In this study, six rules govern the movement of each vehicle: cohesion, alignment, separation, collision avoidance, target avoidance and goal seeking. When the UAVs are in the search mode, every rule is active, causing the vehicles to spread out while preserving inter-vehicle communication. Once the target engagement mode is triggered, the UAVs are committed to the attack phase and only the collision avoidance and goal seeking rules are active. Each of these rules is mathematically defined below.

1) Rule 1: Cohesion

The cohesion rule makes UAVs attract each other by orienting their acceleration vectors in the direction of the local flock center, provided that the distance between them is greater than some set value.

| (1) |

| (2) |

The distance term in these equations is the distance between Uj and Ui, and Ni denotes the number of neighbours of UAV i.

2) Rule 2: Alignment

The alignment rule enables a UAV to align its heading and match its speed with its neighbours.

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

| (9) |

| (10) |
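As an illustration of how such movement rules combine, the following Python sketch implements the cohesion and alignment rules as described in the text, together with a weighted sum using the weight factors wfi. It is a sketch under stated assumptions and not the paper's equations: the 2D tuple representation, the function names, the hypothetical parameter `d_min` (the "set value" in the cohesion rule) and the exact form of each term are all assumptions.

```python
import math


def norm(v):
    """Return v scaled to unit length; the zero vector is returned unchanged."""
    length = math.hypot(v[0], v[1])
    return (0.0, 0.0) if length == 0.0 else (v[0] / length, v[1] / length)


def neighbours(i, positions, r):
    """NB_i: indices of the other UAVs within interaction range r of UAV i."""
    return [j for j in range(len(positions))
            if j != i and math.dist(positions[i], positions[j]) <= r]


def cohesion(i, positions, nb, d_min):
    """Unit acceleration towards the center of neighbours farther than d_min."""
    far = [j for j in nb if math.dist(positions[i], positions[j]) > d_min]
    if not far:
        return (0.0, 0.0)
    cx = sum(positions[j][0] for j in far) / len(far)
    cy = sum(positions[j][1] for j in far) / len(far)
    return norm((cx - positions[i][0], cy - positions[i][1]))


def alignment(i, velocities, nb):
    """Acceleration towards the mean velocity (heading and speed) of neighbours."""
    if not nb:
        return (0.0, 0.0)
    vx = sum(velocities[j][0] for j in nb) / len(nb)
    vy = sum(velocities[j][1] for j in nb) / len(nb)
    return (vx - velocities[i][0], vy - velocities[i][1])


def combine(accelerations, weights):
    """Weighted sum of the per-rule accelerations (weights play the role of wf_i)."""
    ax = sum(w * a[0] for a, w in zip(accelerations, weights))
    ay = sum(w * a[1] for a, w in zip(accelerations, weights))
    return (ax, ay)
```

In search mode all six rule outputs would be passed to `combine`; in target engagement mode only the collision avoidance and goal seeking outputs would be included, which can be expressed by setting the other weights to zero.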

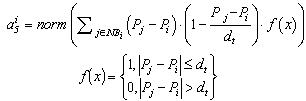

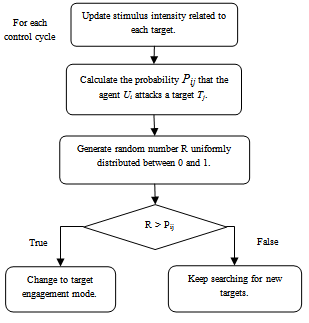

3.2. Task Controller

Bonabeau et al.[10] developed a task allocation model based on a response threshold through the observation of social insects. The response threshold refers to the likelihood of reacting to a task-associated stimulus. A response threshold θ, expressed in units of stimulus intensity, is an internal variable that determines the tendency of an individual to respond to a stimulus of intensity S and perform the task. The response function Tθ(S), which is the probability of performing the task as a function of the stimulus intensity S, is given by:

| (11) |

| (12) |

| (13) |
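For illustration, the fixed-threshold response function commonly cited from the social insect literature has the form Tθ(S) = S^n / (S^n + θ^n), where n > 1 controls the steepness of the threshold. The Python sketch below uses this form with n = 2. Whether the equations in this paper match it exactly is an assumption, and the sketch does not model the target value or remaining mission time that the task controller also considers. The function names are hypothetical.

```python
import random


def response_probability(s, theta, n=2):
    """Probability of performing the task for stimulus s and threshold theta.

    Assumed form: s**n / (s**n + theta**n). When s is much smaller than theta
    the probability is near 0; when s is much larger it approaches 1.
    """
    if s <= 0:
        return 0.0
    return s ** n / (s ** n + theta ** n)


def decide_attack(s, theta, rng, n=2):
    """Draw one stochastic decision: True means the UAV commits to the attack."""
    return rng.random() < response_probability(s, theta, n)
```

With this form, a stimulus equal to the threshold gives a probability of 0.5, so under the scenario's 2:1 stimulus ratio a UAV with θ equal to the low value stimulus would respond to an undamaged high value target with probability 0.8.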

| Figure 3. Task control flow chart |

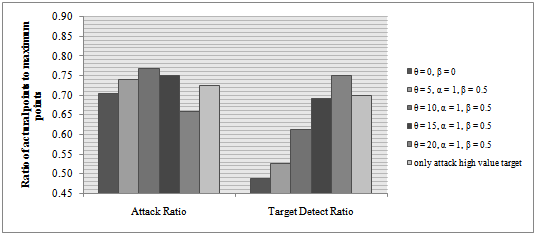

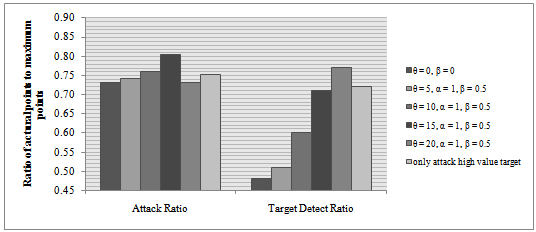

4. Simulation Results

| Figure 4. Simulation result with 40 UAVs and 8 targets |

| Figure 5. Simulation result with 50 UAVs and 10 targets |

5. Conclusions

We have presented a formulation for a swarm of UAVs engaged in a wide area search and destroy mission in an unknown environment. We have used flocking behaviour to control the movement of the UAVs while searching the target area, as the rule-based behaviour helps the vehicles to disperse quickly after deployment and readjust the formation as required. We have developed a decentralized mission control mechanism based on task stimulus intensity and a response threshold, which can optimize the performance of the swarm. The simulation results show that, by using this mechanism, a swarm of UAVs demonstrates emergent behaviour through self-organisation, and individual vehicles respond adaptively to the changing environment. The simulation scenario presented here is highly simplified in comparison to a real mission. One weakness is that the mission control mechanism only allocates UAVs to a single target. A more sophisticated cooperative control algorithm is needed to control UAVs attacking multiple targets, especially when possible adversary action by the target is taken into consideration.
