Mohammed J. Islam1, Saleh M. Basalamah2, Majid Ahmadi3, Maher A. Sid-Ahmed3

1Department of Computer Science and Engineering, Shahjalal University of Science and Technology, Sylhet, Bangladesh

2Department of Computer Science, Umm Al Qura University, Makkah, KSA

3Department of Electrical and Computer Engineering, University of Windsor, Windsor, ON, Canada

Correspondence to: Mohammed J. Islam, Department of Computer Science and Engineering, Shahjalal University of Science and Technology, Sylhet, Bangladesh.


Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

Abstract

Real-time quality inspection of gelatin capsules in pharmaceutical applications is an important issue from the point of view of industry productivity and competitiveness. Computer vision-based automatic quality inspection is one solution to this problem. Machine vision systems provide quality control and real-time feedback for industrial processes, overcoming the physical limitations and subjective judgment of human inspectors. In a computer vision-based system, the image obtained from a digital camera is usually a 24-bit color image. Analyzing an image with that many levels may require complicated image processing techniques. In a real-time application, however, where a part has to be inspected within a few milliseconds, the image has to be reduced to a more manageable number of gray levels, usually two (a binary image), while retaining all the necessary features of the original image. A binary image can be obtained by thresholding the original image into two levels. In this paper, we develop an image processing system based on edge-based image segmentation for quality inspection that satisfies the industrial requirements in pharmaceutical applications for accepting and rejecting capsules.

Keywords:

Gelatin Capsule, Image Segmentation, Border Tracing, Edge-detection, Neural Network

Cite this paper: Mohammed J. Islam, Saleh M. Basalamah, Majid Ahmadi, Maher A. Sid-Ahmed, Computer Vision-Based Quality Inspection System of Transparent Gelatin Capsules in Pharmaceutical Applications, American Journal of Intelligent Systems, Vol. 2 No. 1, 2012, pp. 14-22. doi: 10.5923/j.ajis.20120201.03.

1. Introduction

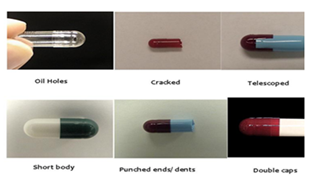

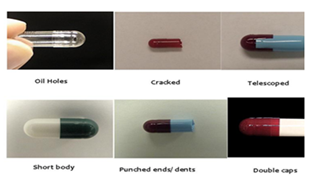

Two-part (body and cap) gelatin capsules are susceptible to several common flaws, caused by equipment problems and inconsistencies in the manufacturing process, that make them unacceptable for shipment and marketing. These flaws include incorrect size or color, dents, cracks, holes, bubbles, strings, dirt, double caps and missing caps. Typical defects are shown in Figure 1.

| Figure 1. Typical defects (Source: Pharmaphil Inc., Windsor, ON) |

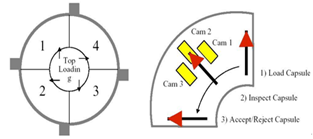

Some of these are critical defects. A hole or crack is a critical defect because, once the capsule is filled, the product can spill out, which can cause serious problems if the capsule reaches the end-user. The capsule wall in the area of a bubble is much thinner and can easily turn into a hole. Dents have an irregular shape and cause failures at the filling stage. Incorrect color, incorrect size and double caps can cause serious problems if they reach the end-user, possibly resulting in a product recall. Dirt and strings are cosmetic flaws that do not affect the capsule itself, but they imply poor quality control in production.

| Figure 2. Current capsule sorting system |

2. Existing Quality Control and Criticisms

Pharmaphil Inc. is a pharmaceutical company that manufactures two-part gelatin capsules. Currently, human inspectors evaluate the quality of the capsules manufactured at Pharmaphil Inc. A worker monitors a conveyor belt of capsules and removes any defective product, as shown in Figure 2. The capsules are then collected in bins, each of which contains 100,000 capsules. Manual inspection has some advantages: human inspectors are trained to look for a wide range of flaws in the product line and can provide an immediate response for quality control. The adaptability of a human inspector to changing environments and different products is unmatched by any automated system. However, the drawbacks of manual inspection outweigh these advantages. First, manual inspection is not consistent, and it is unreasonable to expect all human inspectors to produce the same inspection output. Second, human workers cannot maintain the same level of attention for the duration of a shift, which yields inaccurate inspection results. Consequently, when inspectors find a defective capsule in a bin, the company discards the entire bin. This results in lost revenue, wasted manufacturing time, increased manufacturing costs and uncertainty about the quality of the products the company sends to market. Therefore, a low-cost, high-throughput quality process controller is needed to improve the company's productivity, improve the quality of its capsules and enable it to compete better globally. To meet these objectives, a computationally efficient image processing technique was developed, together with a low-cost hardware system, to perform quality inspection of the capsules in collaboration with the Electrical and Computer Engineering Department at the University of Windsor.

The main aim was to develop a computer vision system capable of isolating and inspecting capsules at a rate of 1000 capsules per minute, with over 95% accuracy in detecting defects of size 0.2 mm and larger, and with the ability to detect holes, cracks, dents, bubbles, double caps and incorrect colour or size.

3. State-of-the-Art and Motivation

A computer vision-based system controller requires a one-time set-up cost and possible maintenance costs. A few capsule sorting machines with quality process control are available on the market at a cost of over $500,000 per machine[1]. DJA-PHARMA, a division of Daiichi Jitsugyo, offers the Capsule Visual Inspection System CVIS-SXX-E, which inspects uni-color and bi-color hard gelatin capsules of sizes 1 through 5, including those with printed marks, except when both body and cap are transparent and dark in color[2]. The inspection rate of this system is 1700 to 2500 capsules per minute. Viswill has a capsule video inspection system named CVIS-SXX that uses high-resolution CCD line sensor cameras and can detect flaws as small as 100 μm, while Eisai Machinery USA has similar systems named CES-50, 100 and 150 with inspection rates of 800, 1600 and 2500 respectively. ProdiTec has a pharmaceutical industrial vision system called InspeCaps-150 that inspects hard capsules of sizes 0 to 5 at a rate of 2000 capsules per minute. Therefore, although automated systems are available, they are very expensive, and very few of them deal with transparent capsules, which is the case in the application at Pharmaphil Inc.

The main objective of this project is to develop a working prototype that proves the concept of the capsule inspection system. A computationally efficient image processing technique is a required addition to the low-cost hardware system in order to develop the quality inspection system for the capsules.

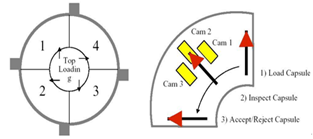

4. Capsule Inspection System- OptiSorter

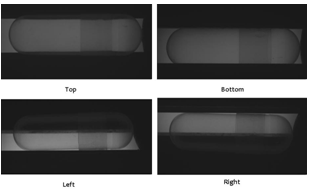

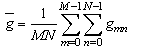

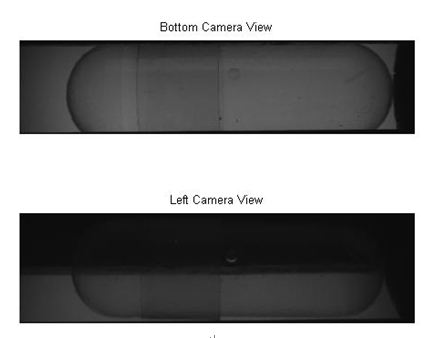

In this pharmaceutical application, every capsule must be fully inspected to detect defects of size 0.2 mm and larger. For a vision system to recognize the defects, full 360° imaging must be acquired from multiple image sensors. The system must be able to verify the capsule color and size, and detect other defects, while sustaining a throughput of 1000 fully inspected capsules per minute. The system must also be flexible enough to adjust to various sizes and colors of capsules and to accommodate future updates in image resolution and more thorough inspection algorithms[1].

The image acquisition and processing components are placed into an existing capsule sorter called OptiSorter, which holds the capsules individually in solid holders that allow full 360° visibility of the capsule. The system controller allows the vision system to interact with the mechanical system, coordinating the triggering of the cameras and controlling the mechanisms that accept and reject capsules.

To meet the system throughput, four identical inspection stations operate in parallel on a single mechanical system. Each quadrant has a set of three USB cameras and a host PC. Figure 3 shows the image acquisition system. Using four stations is highly advantageous because it safeguards against system failure: if one station fails, the remaining stations can continue to operate properly[3]. Most vision systems use three cameras, separated by 120°, to cover the full 360° view of a capsule. However, it is recommended to use one more camera on top; the rationale is discussed in subsection 6.1 of this paper. Figure 4 shows the acquired images of capsules from four different angles.

| Figure 3. Image acquisition system |

| Figure 4. Capsule image: Top, Bottom, Right and Left camera view |

5. Materials and Methods

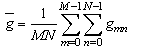

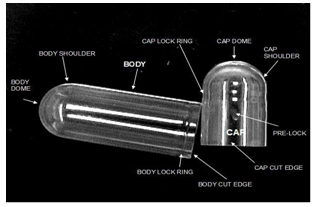

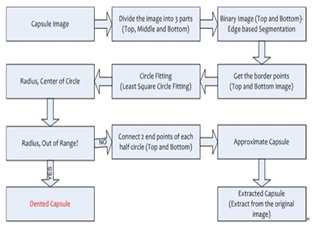

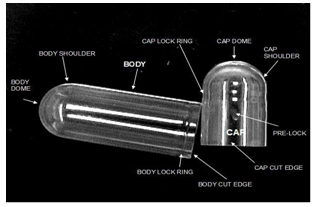

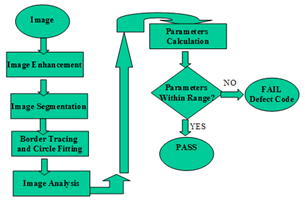

Where visual inspection is the main concern, automatic computer image analysis can support it[4]. It is therefore necessary to know the different parts of the capsule. A typical gelatin capsule image (size 0) is shown in Figure 5. In this pharmaceutical application, various capsule parameters need to be evaluated and analyzed. The capsule dimensions (length and width), the body and cap lengths, the body and cap diameters, and the intersection of body and cap (closed joined length) are the important parameters for quality inspection; they determine wrong size, missing cap, double cap and some dents. The block diagram of the proposed image processing system is shown in Figure 6.

5.1. Preprocessing

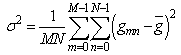

Background noise, unwanted reflections from poor illumination and geometric distortions are very common noise sources in the acquisition system when the digital cameras capture images of the capsules, and they make image segmentation more difficult. Image enhancement addresses these problems and restores the image to improve overall clarity by correcting geometric distortions from the acquisition system. Image enhancement techniques are used as preprocessing tools, that is, as a series of image-to-image transformations. Enhancement does not add information, but it may help to extract it. Visually, it is hard to judge the level of enhancement. We are mostly concerned with whether the enhancement has improved our ability to differentiate the object from the background. This difficulty justifies the need for quantitative measures of the performance of the enhancement techniques. Computational cost and global variance are proposed as quantitative measures for each method in this application, and the best method is selected in terms of both execution time and global variance. Global variance is defined as follows:

$$\sigma^2 = \frac{1}{MN} \sum_{i=0}^{M-1} \sum_{j=0}^{N-1} \left( f(i,j) - \mu \right)^2 \qquad (1)$$

for an image $f(i,j)$ of size $M \times N$ with mean pixel value $\mu$. The mean is defined as:

$$\mu = \frac{1}{MN} \sum_{i=0}^{M-1} \sum_{j=0}^{N-1} f(i,j) \qquad (2)$$
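As a rough illustration of the global variance measure, the following Python sketch computes the mean pixel value and then the average squared deviation from it. The function name `global_variance` is hypothetical, and the sketch assumes the image is a 2-D array of gray levels; it is not the authors' MATLAB implementation.

```python
import numpy as np

def global_variance(image):
    """Global variance of a gray scale image (population variance of all pixels)."""
    img = np.asarray(image, dtype=np.float64)
    mu = img.sum() / img.size                  # mean pixel value over the M x N image
    return float(((img - mu) ** 2).sum() / img.size)

# Tiny example: two pixels at 0 and two at 2 give mean 1 and variance 1.
example = [[0, 0], [2, 2]]
variance = global_variance(example)
```

Comparing this value before and after an enhancement step gives one number per method, which is how the measure is used in the comparison of enhancement techniques.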

| Figure 5. Typical Capsule image- (Source: Pharmaphil Inc., ON) |

| Figure 6. Block diagram of the proposed image processing system |

5.2. Image Segmentation

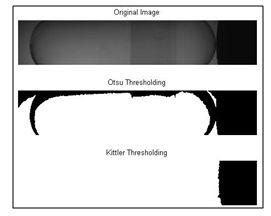

Image segmentation is an important first step for a variety of image analysis and visualization tasks[5]. Some of the issues that make image segmentation difficult are:

a) The image capturing process results in noisy images.

b) The image data can be highly sensitive for a given task; with transparent capsule imaging, the high-frequency information is often distorted, resulting in fuzzy, unreliable edges.

c) The shape of the same structure can vary from image to image, even in the same modality.

d) The gray scale values and their distributions vary from image to image, even in a single application. Pixels corresponding to a single class may exhibit different intensities between images or even within the same image.

The main issues related to segmentation involve choosing good algorithms, measuring their performance, and understanding their impact on the capsule inspection system. Segmentation does not perform well if the gray levels of different objects are quite similar, which is the case in this application: because the capsules are transparent, their gray levels overlap with those of the background. Image enhancement techniques seek to improve the visual appearance of an image. They emphasize the salient features of the original image and simplify the task of image segmentation. Selecting a threshold value that properly separates the foreground from the background is a complicated issue. A thresholding scheme suitable for industrial application should be fast and should give a reliable threshold value[6-9]. An exhaustive survey of image thresholding methods is available in the literature[7]. In this survey, 40 selected bi-level thresholding methods from various categories are compared in the context of nondestructive testing applications as well as document images, and the method of Kittler[9] was ranked first. Sahoo et al.[10] compared the performance of more than 20 global thresholding algorithms using uniformity and shape measures and concluded that Otsu[11] was the best. The Otsu method[11] is the most referenced thresholding method; it minimizes the within-class variance or, equivalently, maximizes the between-class variance. It performs well when the numbers of pixels in the background and foreground are similar.

With the recent development of artificial intelligence, researchers have also studied threshold selection using learning-based methods. Artificial Neural Network (ANN) based image segmentation calculates the threshold value from local statistics such as mean, standard deviation, smoothness and entropy. Koker and Sari[12] use an ANN to automatically select a global threshold value for an industrial vision system. In this method, the frequencies of each gray level are the input to the network and the threshold value is the output. However, for transparent capsules, as in this application, the pixel values of the capsule are very close to those of the background, and this similarity complicates segmentation. Alginahi[13] used an ANN to segment bank cheques from a complex background for use as input to OCR. However, segmentation based on features computed around every pixel in an image has a higher computational cost than other methods.

It can be observed that pixel values change abruptly around object boundaries, even with a complex background. In that case, a simple edge detector can easily distinguish the boundary of the objects from an inhomogeneous background[14,15]. In edge-based segmentation, the selection of the edge detector is an important issue. In terms of time, quality and the requirements of the segmentation, the Sobel edge detector is recommended in this application because of its superior noise-suppression characteristics. The vertical and horizontal masks of the Sobel edge detector are shown in Tables 1 and 2 respectively. Since Otsu[11] is the most referenced global thresholding method, it is used here to localize the edges after edge detection.

| Table 1. Sobel Edge Detector (Vertical) |


| Table 2. Sobel Edge Detector (Horizontal) |

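The edge-based segmentation described in this section (Sobel edge detection followed by Otsu thresholding of the edge response) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the masks are the standard 3x3 Sobel kernels from the general literature, whose sign and orientation naming vary between texts and may differ from Tables 1 and 2, and the helper names (`correlate3x3`, `sobel_magnitude`, `otsu_threshold`, `edge_segment`) are hypothetical.

```python
import numpy as np

# Standard 3x3 Sobel masks (assumed; naming conventions differ between texts).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)   # responds to vertical edges
SOBEL_Y = SOBEL_X.T                                   # responds to horizontal edges

def correlate3x3(img, mask):
    """Slide a 3x3 mask over the image, replicating border pixels."""
    padded = np.pad(img, 1, mode="edge")
    rows, cols = img.shape
    out = np.zeros((rows, cols), dtype=np.float64)
    for di in range(3):
        for dj in range(3):
            out += mask[di, dj] * padded[di:di + rows, dj:dj + cols]
    return out

def sobel_magnitude(image):
    """Gradient magnitude from the two Sobel responses."""
    img = np.asarray(image, dtype=np.float64)
    return np.hypot(correlate3x3(img, SOBEL_X), correlate3x3(img, SOBEL_Y))

def otsu_threshold(values, bins=256):
    """Threshold that maximizes the between-class variance of a histogram."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(np.float64)           # pixels in bins 0..k
    w1 = w0[-1] - w0                                   # pixels in bins k+1..end
    cum = np.cumsum(hist * centers)
    valid = (w0 > 0) & (w1 > 0)
    mu0 = cum / np.maximum(w0, 1.0)
    mu1 = (cum[-1] - cum) / np.maximum(w1, 1.0)
    between = np.where(valid, w0 * w1 * (mu0 - mu1) ** 2, 0.0)
    return edges[np.argmax(between) + 1]               # upper edge of the best bin

def edge_segment(image):
    """Binary edge map: Sobel magnitude thresholded with Otsu's method."""
    magnitude = sobel_magnitude(image)
    return magnitude > otsu_threshold(magnitude.ravel())

# Low-contrast synthetic example: a square only 10 gray levels above background.
demo = np.full((20, 20), 100.0)
demo[5:15, 5:15] = 110.0
demo_edges = edge_segment(demo)
```

Thresholding the edge response rather than the raw gray levels is what lets this approach cope with a transparent object whose interior is nearly as bright as the background: the interior and the background both give a near-zero gradient, while the boundary does not.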

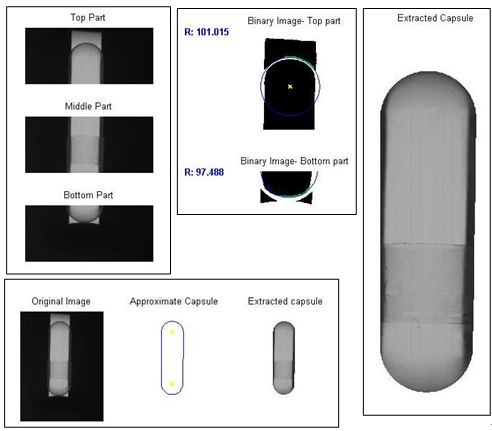

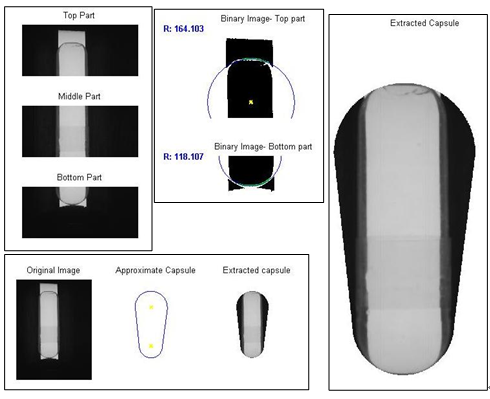

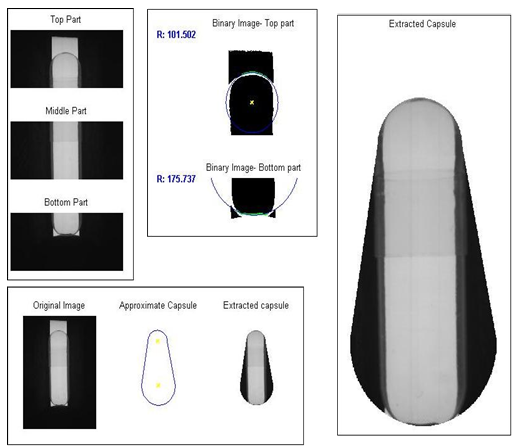

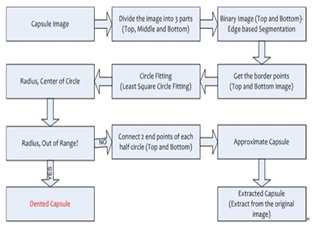

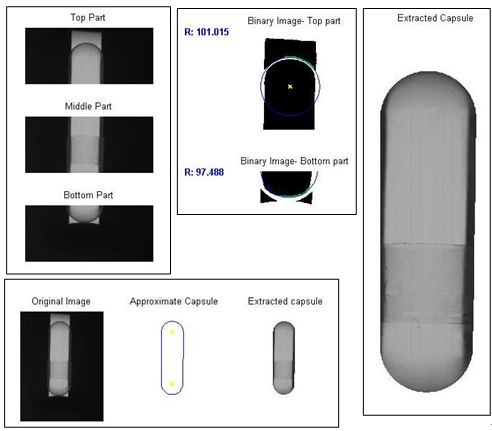

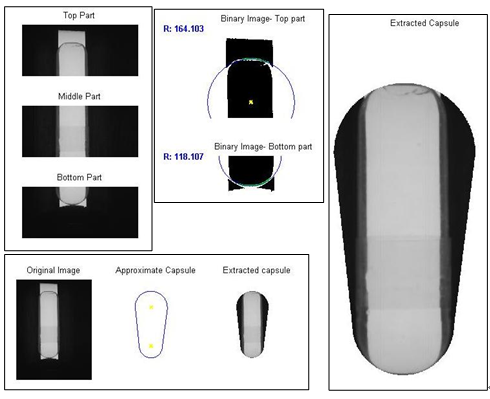

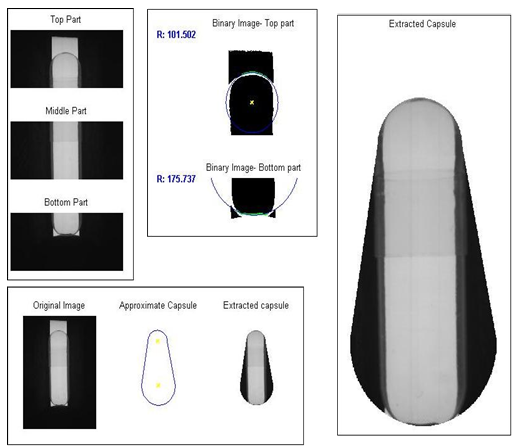

The dimensions of the capsule, as well as the cap and body lengths, are the important parameters for detecting wrong size, missing cap and double cap. Some dents can be detected from the size of the capsule. However, most dents are so small that they do not even change the shape of the two ends of the capsule, and these dents are very hard to detect. Among the dented capsules, almost 90% of the dents were found at either the cap end or the body end. Although such a dent sometimes makes no difference to the length or width, in most cases the radii of the two ends differ considerably. This observation motivated us to measure the radius of both ends and compare the measured radius with the expected radius. The proposed steps are given below:

a) Divide the capsule image into 3 parts. The top and bottom parts closely resemble a dome (half circle), and they are approximated by a half circle using least-squares circle fitting. To do that, edge-based segmentation is first used to locate the transition between the background and the edges of the capsule, and mathematical morphological operators are used to smooth the edges.

b) Using a suitable border-tracing algorithm[16,17], the border points of both the top and bottom parts are obtained. These border points are approximated by a half circle, and the radius of each part and the two end points of the half circle are identified. The first decision on whether the capsule is defect-free or defective comes from the radius: if the radius is outside the ideal range, the capsule is defective.

c) The half circles of the top and bottom parts give a total of 4 end points; the 2 points on each side are connected to form an approximated capsule image.

The flowchart of the proposed steps to extract the capsule image from the captured image is shown in Figure 7. Once the approximated capsule image is extracted, the next step is to analyze the capsule region to detect defects.
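The radius check in steps a) and b) can be sketched with an algebraic least-squares circle fit. The paper does not state which least-squares formulation was used, so the linearized fit below is an assumption, and the names `fit_circle` and `radius_in_range`, as well as the expected radius and tolerance values, are hypothetical.

```python
import numpy as np

def fit_circle(points):
    """Least-squares circle through 2-D border points; returns (cx, cy, radius).

    Uses the linear form x^2 + y^2 = 2*a*x + 2*b*y + c,
    where (a, b) is the center and c = r^2 - a^2 - b^2.
    """
    pts = np.asarray(points, dtype=np.float64)
    x, y = pts[:, 0], pts[:, 1]
    design = np.column_stack([2.0 * x, 2.0 * y, np.ones_like(x)])
    rhs = x ** 2 + y ** 2
    (a, b, c), *_ = np.linalg.lstsq(design, rhs, rcond=None)
    return float(a), float(b), float(np.sqrt(c + a ** 2 + b ** 2))

def radius_in_range(radius, expected, tolerance):
    """First accept/reject decision: is the fitted radius close to the expected one?"""
    return abs(radius - expected) <= tolerance

# Border points of an ideal half circle (center (50, 30), radius 20), standing in
# for the traced border of one capsule end.
theta = np.linspace(0.0, np.pi, 25)
border = np.column_stack([50 + 20 * np.cos(theta), 30 + 20 * np.sin(theta)])
cx, cy, r = fit_circle(border)
ok = radius_in_range(r, expected=20.0, tolerance=1.0)   # hypothetical limits
```

A dent at the cap or body end pulls the traced border points off the ideal dome, so the fitted radius drifts away from the expected value and the capsule fails the range check.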
At this stage, each part of the capsule, for example the top (left), bottom (right), left lock and right lock, is segmented using edge-based segmentation followed by morphological smoothing in order to calculate the different capsule parameters. The calculated parameters are compared with the standard parameters, and if any of them is out of range, the capsule is judged defective. If the parameters are within range, connected component labelling is applied and the number of pixels in each region is counted to check for holes, bubbles or cracks. The number of pixels allowed for a defect-free capsule can vary according to the requirements of the industry, and the proposed system has the flexibility to accept this value as a setting.

| Figure 7. Flow chart of the proposed capsule extraction method |
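The pixel-counting step can be illustrated with a small connected component labelling routine. The sketch below assumes 4-connectivity and assumes that a region larger than a configurable pixel limit indicates a hole, bubble or crack; the paper does not spell out either detail, and the names `component_sizes`, `has_surface_defect` and `max_allowed_pixels` are hypothetical.

```python
from collections import deque
import numpy as np

def component_sizes(binary):
    """Pixel counts of the 4-connected foreground regions in a binary image."""
    mask = np.asarray(binary, dtype=bool)
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill of one region.
            queue = deque([(r, c)])
            seen[r, c] = True
            count = 0
            while queue:
                i, j = queue.popleft()
                count += 1
                for ni, nj in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
                    if (0 <= ni < rows and 0 <= nj < cols
                            and mask[ni, nj] and not seen[ni, nj]):
                        seen[ni, nj] = True
                        queue.append((ni, nj))
            sizes.append(count)
    return sizes

def has_surface_defect(binary, max_allowed_pixels):
    """Flag the capsule region if any connected region exceeds the allowed size."""
    return any(size > max_allowed_pixels for size in component_sizes(binary))

# Example: one 3-pixel region and one isolated pixel.
region = np.array([[1, 1, 0, 0],
                   [1, 0, 0, 0],
                   [0, 0, 0, 1]])
sizes = sorted(component_sizes(region))
```

Keeping the pixel limit as a parameter mirrors the flexibility described above: the same routine can be tuned to the defect size a particular customer tolerates.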

6. Results and Performance Evaluation

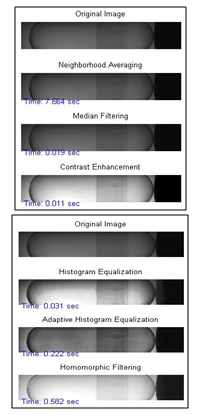

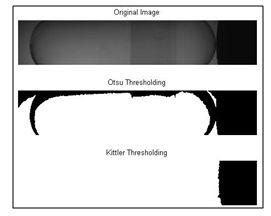

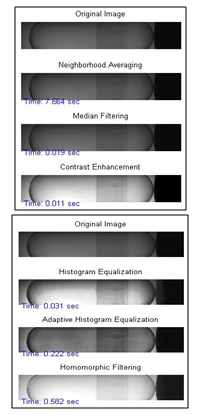

We tested several image enhancement techniques using Matlab 7.0 on a Pentium IV, Intel Core 2 Duo @1.60GHz system. An image database of more than 6000 samples was generated from images captured by the developed capsule inspection system. Capsule size 0 was used in this application.

The time requirement for inspecting one capsule from 4 different views is derived as follows. The industry requirement is to inspect 1000 capsules per minute. In the OptiSorter, 4 quadrants work in parallel, so each quadrant has to inspect 250 capsules per minute. Inspecting one capsule can therefore take at most 60 s / 250 = 240 ms. The hardware is designed so that around 50 ms is required for image acquisition, leaving around 190 ms for capsule inspection from 4 different views. It is observed that the bottom and top camera images carry more information than the left and right camera images. For that reason, the bottom and top camera images are used to measure almost all parameters, such as length, width, cap size, body size, closed joined length and the number of pixels in each area. Most of the decisions are taken from these two images, and if any parameter is out of range, the capsule is marked as defective. Considering the image sizes and the parameter calculations, it is estimated that the top and bottom camera images require 60 ms each and the right and left camera images require 35 ms each.

Our results show that the median filter provides the best results in terms of both computational time and global variance. The images enhanced using the different methods are shown in Figure 8. Comparing the enhancement quality in Figure 8 with the global variance and computational time in Table 3, the median filter shows the best performance in this application. The image enhanced by median filtering is then passed to the image segmentation phase. The success of the capsule inspection system depends on the quality of the binary image. Different segmentation techniques were tested in this application. Otsu[11] and Kittler[9] are the dominant techniques in most surveys. The binary images obtained using these two methods are shown in Figure 9.

| Table 3. Quantitative Measures- Image Enhancement |

| Techniques | Variance | Computational Time (s), MATLAB |
| --- | --- | --- |
| Original Image (1024x200) | 0.2215 | |
| Neighborhood Averaging | 0.1014 | 7.664 |
| Median Filtering | 0.2066 | 0.019 |
| Contrast Enhancement | 1.3274 | 0.011 |
| Histogram Equalization | 3.0077 | 0.031 |
| Adaptive Histogram Equalization | 2.1208 | 0.222 |
| Homomorphic Filtering | 3.5693 | 0.582 |
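The per-capsule time budget derived earlier in this section can be checked with a few lines of arithmetic. The function name `inspection_budget_ms` is hypothetical; the numbers are the ones quoted in the text (1000 capsules per minute, 4 quadrants, about 50 ms for acquisition, and estimated per-view processing times of 60 ms and 35 ms).

```python
def inspection_budget_ms(capsules_per_minute, quadrants, acquisition_ms):
    """Return (cycle time per capsule, time left for processing), both in ms."""
    per_quadrant_rate = capsules_per_minute / quadrants   # capsules per minute per quadrant
    cycle_ms = 60_000 / per_quadrant_rate                 # one minute is 60,000 ms
    return cycle_ms, cycle_ms - acquisition_ms

cycle_ms, processing_ms = inspection_budget_ms(1000, 4, 50)

# Estimated processing time: top and bottom views at 60 ms each,
# left and right views at 35 ms each.
estimated_ms = 2 * 60 + 2 * 35
fits_budget = estimated_ms <= processing_ms
```

With these figures the cycle time is 240 ms, the processing window is 190 ms, and the four-view estimate uses exactly that window, which is why any segmentation method taking seconds per image is ruled out.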

| Figure 8. Image enhancement using different techniques |

| Figure 9. Binary image using Otsu[11] and Kittler[9] |

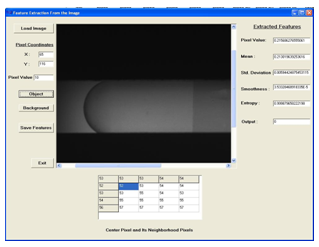

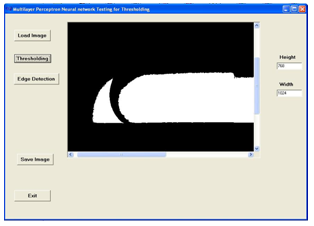

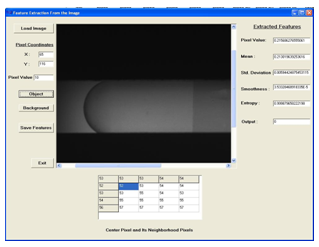

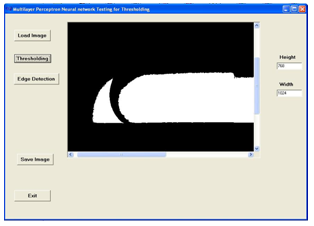

ANN-based thresholding was also investigated in this application and applied to binarize the capsule image using the five dominant features described in[18]. In[18], the pixel value, mean, standard deviation, smoothness and entropy are calculated from the 5x5 neighborhood of each pixel and used as input to the ANN to binarize a document image. In this application, the image features are likewise calculated from the 5x5 neighborhood pixels and saved to the training dataset, labelled as object or background based on visual inspection. The training data preparation is shown in Figure 10. The network is trained on the available training dataset, and the weights are calculated using the method described in[18]. The binary image obtained using the ANN-based thresholding method is shown in Figure 11. The ANN architecture and computational time are shown in Table 4.

| Figure 10. Training dataset generation using[18] |

| Table 4. Neural Network Architecture |

| Layer | No. of Nodes | Comments |
| --- | --- | --- |
| Input | 5 | Features |
| Hidden | 4 | 2/3*(Input + Output) |
| Output | 1 | 1- Object, 0- Background |
| Time | 2.54 Sec (Computational) | 80 ms (Expected) |
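To make the five-feature input concrete, the sketch below computes the pixel value, mean, standard deviation, smoothness and entropy of a 5x5 neighborhood. The exact feature definitions are not given in the text, so two are assumptions: smoothness is taken as 1 - 1/(1 + σ²), a common textbook definition, and entropy as the Shannon entropy of the gray levels in the window. The function name `window_features` is hypothetical.

```python
import numpy as np

def window_features(image, row, col, size=5):
    """Five local features of the size x size window centered on (row, col)."""
    img = np.asarray(image, dtype=np.float64)
    half = size // 2
    padded = np.pad(img, half, mode="edge")            # replicate borders
    window = padded[row:row + size, col:col + size]    # centered on (row, col)
    mean = window.mean()
    std = window.std()
    smoothness = 1.0 - 1.0 / (1.0 + std ** 2)          # assumed definition
    _, counts = np.unique(window, return_counts=True)
    p = counts / counts.sum()
    entropy = float(-(p * np.log2(p)).sum())           # assumed definition
    return np.array([img[row, col], mean, std, smoothness, entropy])

# A perfectly flat neighborhood has zero deviation, smoothness and entropy.
flat = np.full((7, 7), 10.0)
flat_features = window_features(flat, 3, 3)
```

Computing a feature vector like this for every pixel of a 1024x200 image is a large amount of work per frame, which is consistent with the long processing time reported for the ANN-based approach.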

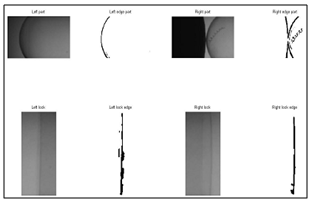

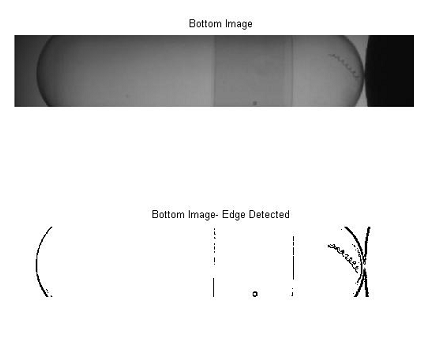

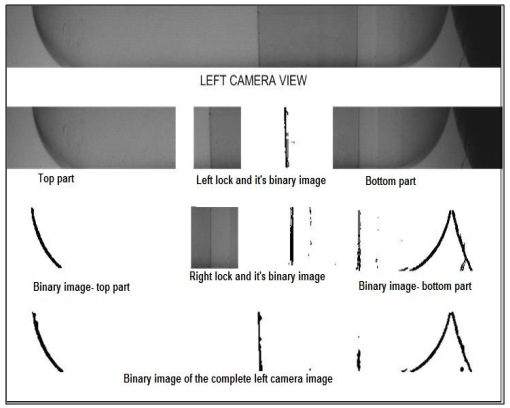

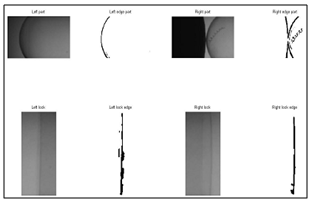

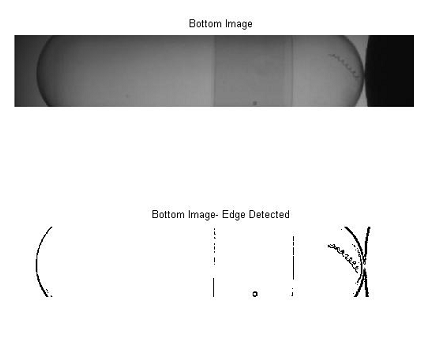

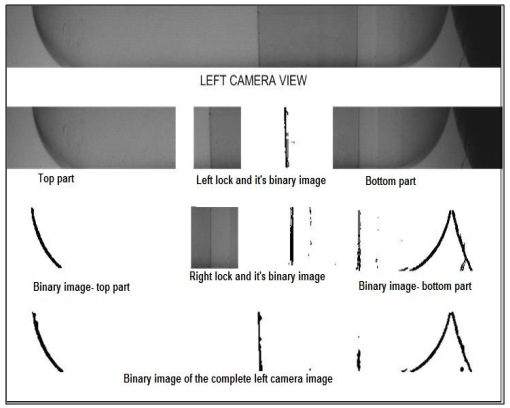

The ANN-based image segmentation was implemented in Borland C++. The processing time to obtain a binary image is around 2.54 s, whereas the time available for complete quality inspection, including image segmentation, is 60 ms. The quality of the binary image can be seen in Figure 11. Therefore, in terms of both computational time and binary image quality, ANN-based image segmentation is not a suitable approach for this application.

On the other hand, the binary images of different parts of the capsule obtained by edge-based segmentation with the Sobel edge detector are shown in Figures 12 and 13, where the quality of the binary image is evident. Sample good and dented capsules processed with the proposed method are shown in Figures 14 and 15 respectively. A capsule dented in the bottom part is shown in Figure 16.

For the left and right camera images, the same image processing techniques are applied: median filtering, Sobel edge detection and Otsu thresholding[11]. The left and right camera images are mainly used to detect surface flaws, because some flaws that are not detected in the bottom and top images can be detected very clearly in the right and left images. A similarity between the left and right images makes the image processing task easier: the left image is the vertically flipped version of the right image. Therefore, the techniques used to process the right image can also be used to analyze the left image, provided that the left image is vertically flipped before processing. The binary images of different parts of the left and right camera images are shown in Figures 17 and 18 respectively.

| Figure 11. Binary image using ANN[18] |

| Figure 12. Edge based segmentation |

| Figure 13. Closed view of edge based segmentation |

| Figure 14. Good capsule image using the proposed method |

| Figure 15. Dented capsule image using the proposed method- Example 1 |

| Figure 16. Dented capsule image using the proposed method- Example 2 |

The complete quality inspection result using the proposed edge-based method is shown in Table 5 for a sample of approximately 6000 capsules (size 0), of which 4800 are good and 1200 are bad. About 97.1% of the capsules are classified correctly and about 2.9% are misclassified. The estimated sensitivity and specificity of the proposed system are shown in Table 6: the sensitivity is 97.46% and the specificity is 95.67%, whereas the industry requirement was 95%. The time available for processing all four images is around 190 ms, and the proposed method requires approximately 160 ms.

| Figure 17. Binary image of left camera image using proposed method |

| Figure 18. Binary image of right camera image using proposed method |

| Table 5. Quality Inspection Result |

| Defects | False Positive | False Negative |
| --- | --- | --- |
| Incorrect Size | 8 | 10 |
| Dents | 9 | 38 |
| Cracks | 17 | 25 |
| Holes | 10 | 23 |
| Bubbles | 8 | 26 |
| Missing Cap | 0 | 0 |
| Closed Cap | 0 | 0 |
| Wrong Color | 0 | 0 |
| Overall | 52 | 122 |

| Measure | Value |
| --- | --- |
| Misclassification (%) | 2.9 |
| Correct Classification (%) | 97.1 |
| Time Requirement (ms) | 190 |
| Avg. Comp. Time (ms) | 160 |

| Table 6. Performance Evaluation |

| Measure | Value |
| --- | --- |
| True Positive (TP) | 4678 |
| False Positive (FP) | 52 |
| False Negative (FN) | 122 |
| True Negative (TN) | 1148 |
| Sensitivity (SN) = TP/(TP+FN) | 97.46% |
| Specificity (SP) = TN/(TN+FP) | 95.67% |
| Industry Requirement | 95% |
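The sensitivity and specificity formulas in Table 6 can be evaluated directly from the four counts reported there (TP = 4678, FP = 52, FN = 122, TN = 1148). The short sketch below does so; the function name `classification_rates` is hypothetical.

```python
def classification_rates(tp, fp, fn, tn):
    """Sensitivity, specificity and overall accuracy as percentages."""
    sensitivity = 100.0 * tp / (tp + fn)
    specificity = 100.0 * tn / (tn + fp)
    accuracy = 100.0 * (tp + tn) / (tp + fp + fn + tn)
    return sensitivity, specificity, accuracy

sn, sp, acc = classification_rates(tp=4678, fp=52, fn=122, tn=1148)
print(f"sensitivity={sn:.2f}%  specificity={sp:.2f}%  accuracy={acc:.2f}%")
# prints: sensitivity=97.46%  specificity=95.67%  accuracy=97.10%
```

Here the positive class is a good capsule, so sensitivity measures how many good capsules are accepted and specificity how many defective capsules are rejected.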

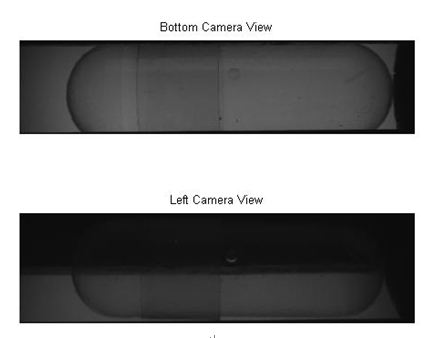

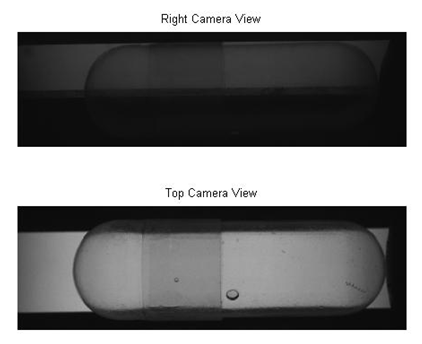

6.1. Rationale Using Four Cameras

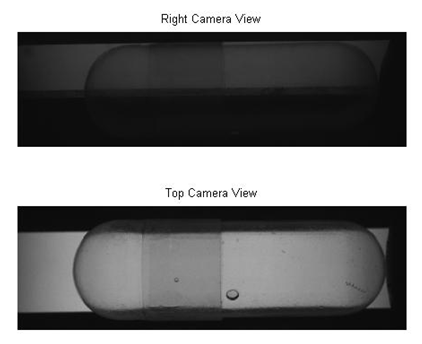

Three cameras placed 120° apart are enough to cover the full 360° view. However, it was found that four cameras per quadrant provide better results for thorough inspection. Some bubbles, cracks or holes in a capsule are missed when three cameras are used. This happens when the orientation of the capsule is such that the defect is not clearly visible from the bottom, left and right cameras, so it is missed in the image segmentation stage. With one more camera on top, the defect becomes clearly visible and is detected easily. An example of a defective capsule with a bubble that is detected using the top camera is shown in Figures 19 and 20.

| Figure 19. Bottom and left camera view of defective capsule |

| Figure 20. Top and right camera view of defective capsule |

7. Conclusions

In this paper, we have presented an edge-based binarization method to extract the capsule from a low-contrast gray scale image. With the developed low-cost hardware system, the image processing system meets the requirement of inspecting 1000 capsules per minute with the required accuracy of 95%. Dents are detected from the radius of the half circle fitted to the border points of the top and bottom parts of the capsule. The reference points for calculating the number of connected components are obtained from the left lock and right lock regions and the two radii of the top and bottom parts. The proposed system is flexible, and a threshold can be set to detect holes, bubbles, cracks, dirt and foreign bodies inside capsules of different sizes based on the industry requirement. Using the proposed system, a correct recognition rate of 97.1% is achieved. The sensitivity of the proposed system is 97.46% and the specificity is 95.67%, which is very promising. The proposed quality inspection system for two-part gelatin capsules not only maintains high throughput but also performs accurately and reliably. It also meets the cost and speed requirements and provides the flexibility to process capsules of different sizes through its reconfigurable hardware and software.

ACKNOWLEDGEMENTS

This research project has been financially supported by Pharmaphil Inc., Windsor, ON, the Ontario Centres of Excellence (OCE), the Natural Sciences and Engineering Research Council (NSERC) and the Research Center for Integrated Microsystems (RCIM) of the University of Windsor. The financial support of these organizations is gratefully acknowledged.

References

| [1] | A.C. Karloff, N.E. Scott, and R. Muscedere, A flexible design for a cost effective, high throughput inspection system for pharmaceutical capsules, in IEEE International Conference Industrial Technology (ICIT'2008), Chengdu, China, April 21-24, 2008 |

| [2] | Daiichi Jitsugyo Viswill Co. Ltd., Capsule Visual Inspection System, CVIS-SXX-E, http://www.viswill.jp/English/CVIS_E/cvis_index_e.html, 2005 |

| [3] | M.J. Islam, M. Ahmadi and M.A. Sid-Ahmed, Image processing techniques for quality inspection of gelatin capsules in pharmaceutical applications, in 10th International Conference on Control, Automation, Robotics and Vision (ICARCV 2008), Hanoi, Vietnam, December 17-22, 2008 |

| [4] | M.J. Islam, Q.M.J. Wu, M. Ahmadi and M.A. Sid-Ahmed, Grey scale image segmentation using Minimum Error Thresholding techniques, in 6th International Conference for Upcoming Engineers (ICUE' 2007), Ryerson University, Toronto, ON, Canada, pp. 1-5 (CD Version), May 28-29, 2007 |

| [5] | A. Chakraborty, L.H. Staib and J.S. Duncan, Deformable boundary finding influenced by region homogeneity, Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 624-627, Seattle, WA, June 1994 |

| [6] | R.C. Gonzalez and R.E. Woods, Digital image processing, 2nd ed., Pearson Education Asia, 2004 |

| [7] | M. Sezgin and B. Sankur, Survey over image thresholding techniques and quantitative performance evaluation, Journal of Electronic Imaging, Vol. 13, No. 1, 2004 |

| [8] | M.A. Sid-Ahmed, Image processing theory, algorithms and architectures, 1st ed., McGraw-Hill, New York, 1995 |

| [9] | J. Kittler, J. Illingworth, Minimum error thresholding, Pattern Recognition, Vol. 19, pp. 41-47, 1986 |

| [10] | P.K. Sahoo, S. Soltani, A.K.C. Wong, A Survey of thresholding techniques, Computer Vision, Graphics and Image Processing, Vol. 41, pp. 233-260, 1988 |

| [11] | N. Otsu, A threshold selection method from gray level histograms, IEEE Trans. On Systems, Man and Cybernetics, Vol. 9, pp. 62-66, 1979 |

| [12] | R. Koker and Y. Sari, Neural Network based automatic threshold selection for an industrial vision system, Proc. Int. Conf. on Signal Processing, pp. 523-525, 2003 |

| [13] | Y. Alginahi, Computer analysis of composite documents with non-uniform background, PhD Thesis, Electrical and Computer Engineering, University of Windsor, Windsor, ON, 2004 |

| [14] | M. Sharafi, M. Fathy and M.T. Mahmoudi, A classified and comparative study of edge detection algorithms, International Conference on Information Technology: Coding and Computing, pp. 117-120, April 8-10, 2002 |

| [15] | D.H. Ballard and C. Brown, Computer vision, Prentice Hall, 1982 |

| [16] | A. Chottera and M. Shridhar, Feature extraction of manufactured parts in the presence of spurious surface reflections, Canadian Journal of Electrical and Computer Engineering, Vol. 7, no. 4, pp. 29-33, 1982 |

| [17] | Border tracing, digital image processing lectures- The University of Iowa, http://www.icaen.uiowa.edu/~dip/LECTURE/Segmentation2.html#tracing |

| [18] | Y. Alginahi, M.A. Sid-Ahmed and M. Ahmadi, Local thresholding of composite documents using Multi-layer Perceptron Neural Network, The 47-th IEEE International Midwest Symposium on Circuits and Systems, pp. 209-212, 2004 |
