
American Journal of Computer Architecture

2024; 11(1): 10-13

doi:10.5923/j.ajca.20241101.02

Received: Apr. 18, 2024; Accepted: May 7, 2024; Published: May 13, 2024

Solution Architecture for Salesforce CRM to Publish and Subscribe Events to Kafka

Swaran Kumar Poladi

Architect, IT - CRM, Medical Devices Company, Raleigh, USA

Correspondence to: Swaran Kumar Poladi, Architect, IT - CRM, Medical Devices Company, Raleigh, USA.


Copyright © 2024 The Author(s). Published by Scientific & Academic Publishing.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Companies that use Salesforce as their CRM system, with Salesforce CPQ, Sales Cloud, or Service Cloud, and that transfer real-time events to ERP and other internal applications can face considerable integration complexity. This solution outlines how to process millions of real-time events on Salesforce while staying within its governor and processing limits and keeping the solution scalable. It describes the API and transformation approaches used and how events are published to and subscribed from Kafka.

Keywords: Salesforce, CRM, Solution Architecture, Kafka, Event-based framework

Cite this paper: Swaran Kumar Poladi, Solution Architecture for Salesforce CRM to Publish and Subscribe Events to Kafka, American Journal of Computer Architecture, Vol. 11 No. 1, 2024, pp. 10-13. doi: 10.5923/j.ajca.20241101.02.


1. Introduction

- In today's interconnected world, real-time events play a pivotal role in making the right decisions at the right time. Salesforce CRM, which many companies use as their Customer Relationship Management platform, often needs to connect to various applications to receive and send real-time data events. Apache Kafka is a distributed event streaming platform, and Salesforce offers a wide variety of ways to publish and subscribe to such real-time events. Performing the transformations needed to process these events on Salesforce is complex: because Salesforce is a multi-tenant application, it enforces many governor limits to ensure that shared resources are not monopolized by any single organization (tenant).

2. Overview of Salesforce Streaming APIs

- Salesforce offers a variety of APIs for publishing events to, and subscribing to events from, Salesforce.

2.1. Pub/Sub API

1. Pub/Sub API is based on gRPC and HTTP/2 and efficiently publishes and delivers event messages in Apache Avro or JSON format.
2. This API provides a single interface for both publishing and subscribing to Salesforce (SFDC) events.
3. Pub/Sub API's key benefits include better performance than REST, HTTP/2 support, and bidirectional streaming.
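Because Pub/Sub API delivers event payloads as Avro binary, a subscriber has to decode each payload against the event's schema, which Pub/Sub API serves separately and identifies by a schema ID. The stdlib-only sketch below illustrates what that decoding involves for a flat record of `long`, `string`, and nullable-string union fields, following the Avro binary encoding rules (zigzag varints, length-prefixed strings, union branch index). The `Order_Event__e` schema is hypothetical, and a production client would use an Avro library with the schema returned by the API rather than this hand-rolled codec.

```python
from io import BytesIO

# Hypothetical flat schema for a platform event named Order_Event__e.
# Order_Number__c is a nullable custom field, i.e. the Avro union ["null", "string"].
SCHEMA = [
    ("CreatedDate", "long"),
    ("CreatedById", "string"),
    ("Order_Number__c", ["null", "string"]),
]


def write_long(buf: BytesIO, n: int) -> None:
    """Avro long: zigzag-encode, then write as a base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    while z > 0x7F:
        buf.write(bytes([(z & 0x7F) | 0x80]))
        z >>= 7
    buf.write(bytes([z]))


def read_long(buf: BytesIO) -> int:
    """Inverse of write_long: read varint bytes, then undo the zigzag mapping."""
    z, shift = 0, 0
    while True:
        chunk = buf.read(1)
        if not chunk:
            raise EOFError("truncated Avro varint")
        b = chunk[0]
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            return (z >> 1) ^ -(z & 1)
        shift += 7


def write_string(buf: BytesIO, s: str) -> None:
    """Avro string: byte length as a long, followed by the UTF-8 bytes."""
    data = s.encode("utf-8")
    write_long(buf, len(data))
    buf.write(data)


def read_string(buf: BytesIO) -> str:
    n = read_long(buf)
    data = buf.read(n)
    if len(data) != n:
        raise EOFError("truncated Avro string")
    return data.decode("utf-8")


def encode_record(schema, record: dict) -> bytes:
    """Fields are written back to back, in schema order."""
    buf = BytesIO()
    for name, ftype in schema:
        value = record[name]
        if isinstance(ftype, list):  # union: write the chosen branch index first
            if value is None:
                branch = ftype.index("null")
            else:
                branch = next(i for i, t in enumerate(ftype) if t != "null")
            write_long(buf, branch)
            ftype = ftype[branch]
        if ftype == "long":
            write_long(buf, value)
        elif ftype == "string":
            write_string(buf, value)
        elif ftype != "null":  # the null branch carries no bytes
            raise ValueError(f"unsupported type {ftype!r}")
    return buf.getvalue()


def decode_record(schema, payload: bytes) -> dict:
    buf = BytesIO(payload)
    out = {}
    for name, ftype in schema:
        if isinstance(ftype, list):
            ftype = ftype[read_long(buf)]  # resolve the union branch
        if ftype == "null":
            out[name] = None
        elif ftype == "long":
            out[name] = read_long(buf)
        elif ftype == "string":
            out[name] = read_string(buf)
        else:
            raise ValueError(f"unsupported type {ftype!r}")
    return out
```

Avro payloads carry no field names, which is why the reader must hold the exact writer schema; this is the main reason a subscriber caches schemas by ID.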

2.2. Streaming API

1. Streaming API uses the Bayeux protocol and CometD and can be used to stream events out of Salesforce.
2. This API uses the HTTP/1.1 request-response model.
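To make the Bayeux/CometD flow concrete, the sketch below builds the JSON messages a Streaming API client exchanges: a handshake, a subscribe carrying Salesforce's replay extension, and the long-polling connect. It assumes the messages are POSTed to the org's `/cometd/<API version>` endpoint with an OAuth bearer token and that the `clientId` comes from the handshake response; the `Order_Event__e` channel is hypothetical. In practice a CometD client library handles the transport, reconnects, and extension negotiation.

```python
import json

# Replay presets understood by Salesforce's CometD replay extension.
REPLAY_NEW_ONLY = -1      # only events published after the subscription starts
REPLAY_ALL_RETAINED = -2  # all events still inside the retention window


def handshake() -> dict:
    """First Bayeux message; advertises long-polling and the replay extension."""
    return {
        "channel": "/meta/handshake",
        "version": "1.0",
        "supportedConnectionTypes": ["long-polling"],
        "ext": {"replay": True},
    }


def subscribe(client_id: str, channel: str, replay_id: int = REPLAY_NEW_ONLY) -> dict:
    """Subscribe to a platform event (/event/...) or change event (/data/...) channel."""
    if not channel.startswith(("/event/", "/data/", "/topic/")):
        raise ValueError(f"unexpected channel {channel!r}")
    return {
        "channel": "/meta/subscribe",
        "clientId": client_id,
        "subscription": channel,
        # Replay map: channel -> -1, -2, or the last replay ID the client processed.
        "ext": {"replay": {channel: replay_id}},
    }


def connect(client_id: str) -> dict:
    """Long-poll request; the server holds it open until events arrive or it times out."""
    return {"channel": "/meta/connect", "clientId": client_id, "connectionType": "long-polling"}


def batch(*messages: dict) -> str:
    """Bayeux messages are sent as a JSON array in the HTTP request body."""
    return json.dumps(list(messages))
```

Each `/meta/connect` response may carry event messages; the client processes them and immediately issues the next connect, which is how an HTTP/1.1 request-response model approximates a push stream.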

2.3. Outbound Notifications

1. Outbound messaging uses the notification call to send SOAP messages over HTTP(S) to a designated endpoint.
2. This API uses the HTTP/1.1 request-response model.
3. Messages are queued locally in Salesforce, and the application at the designated endpoint must process these messages.
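On the receiving side, an outbound-message endpoint parses the SOAP notification and replies with an acknowledgement; without a positive `Ack`, Salesforce keeps the message queued and retries delivery. The sketch below uses only the standard library to extract each notification's ID and sObject fields and to build the acknowledgement. The namespaces follow the outbound-messaging WSDL as we understand it; the sample body is trimmed and its IDs are placeholders, and a real endpoint would also validate the organization ID and run behind HTTPS.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
OM_NS = "http://soap.sforce.com/2005/09/outbound"


def parse_notifications(body: str) -> list:
    """Return one dict per <Notification>: its Id plus the sObject field values."""
    root = ET.fromstring(body)
    results = []
    for note in root.iter(f"{{{OM_NS}}}Notification"):
        fields = {}
        sobject = note.find(f"{{{OM_NS}}}sObject")
        if sobject is not None:
            for child in sobject:
                # Drop the namespace: "{urn:sobject.enterprise.soap.sforce.com}Name" -> "Name"
                fields[child.tag.rsplit("}", 1)[-1]] = child.text
        results.append({"id": note.findtext(f"{{{OM_NS}}}Id"), "fields": fields})
    return results


def ack_response(ack: bool = True) -> str:
    """SOAP reply; Ack=true tells Salesforce the messages were received."""
    return (
        f'<soapenv:Envelope xmlns:soapenv="{SOAP_NS}"><soapenv:Body>'
        f'<notificationsResponse xmlns="{OM_NS}">'
        f"<Ack>{'true' if ack else 'false'}</Ack>"
        "</notificationsResponse></soapenv:Body></soapenv:Envelope>"
    )


# Trimmed example of an outbound message body (IDs are placeholders).
SAMPLE = f"""<soapenv:Envelope xmlns:soapenv="{SOAP_NS}"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <soapenv:Body>
    <notifications xmlns="{OM_NS}">
      <OrganizationId>00Dxx0000000001</OrganizationId>
      <Notification>
        <Id>04lxx0000000001</Id>
        <sObject xsi:type="sf:Account" xmlns:sf="urn:sobject.enterprise.soap.sforce.com">
          <sf:Id>001xx0000000001</sf:Id>
          <sf:Name>Acme</sf:Name>
        </sObject>
      </Notification>
    </notifications>
  </soapenv:Body>
</soapenv:Envelope>"""
```

Acknowledging only after the payload has been durably handed off (for example, written to Kafka) keeps delivery at-least-once: a crash before the ack simply causes Salesforce to resend.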

3. Problem Statement

- Salesforce does not offer any standard Apex libraries for publishing events directly to Kafka or subscribing to events directly from it (Apex is the programming language used on the Salesforce platform). To overcome this, we can either adopt a middleware solution or use open-source connectors as intermediaries that publish and subscribe events between Salesforce and Kafka. When open-source or paid connectors are used, event transformation must be handled within the Salesforce platform, and the solution must be optimized to run within the platform without running into limit-related issues.
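Whichever side performs it, the transformation is essentially a validated field mapping between a Kafka message and Salesforce fields. The article runs this logic in Apex inside Salesforce; the Python sketch below only illustrates the shape of such a mapping. The ERP message keys, the `Order_Status_Event__e` event, and its field names are all hypothetical; the 255-character cap reflects the maximum length of a Salesforce Text field.

```python
import json

# Hypothetical mapping: ERP order message key -> field on a hypothetical
# platform event Order_Status_Event__e.
FIELD_MAP = {
    "orderNumber": "Order_Number__c",
    "status": "Status__c",
    "shipDate": "Ship_Date__c",
}
REQUIRED = {"orderNumber", "status"}
TEXT_MAX = 255  # Salesforce Text fields hold at most 255 characters


def to_platform_event(kafka_value) -> dict:
    """Translate one Kafka record value (JSON text or bytes) into event fields."""
    message = json.loads(kafka_value)
    if not isinstance(message, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED - set(message)
    if missing:
        raise ValueError(f"missing required keys: {sorted(missing)}")
    event = {}
    for src, dest in FIELD_MAP.items():
        value = message.get(src)
        if value is None:
            continue  # leave optional fields unset rather than sending nulls
        # Truncate so the value fits a Text(255) field instead of failing the publish.
        event[dest] = str(value)[:TEXT_MAX]
    return event
```

Rejecting malformed messages early (here with `ValueError`) lets the connector route them to a dead-letter topic instead of consuming platform resources on records that would fail later.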

4. Solution Architecture

4.1. Solution Overview

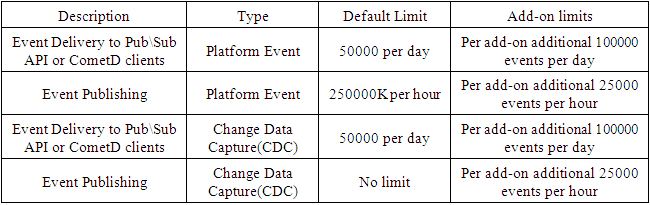

- This solution approach has three main components. Salesforce, Open-Source Connector hosted on Google Cloud, and Kafka managed services hosted on Google Cloud.

| Figure 1. Solution Overview of Salesforce, Connectors, and Kafka |

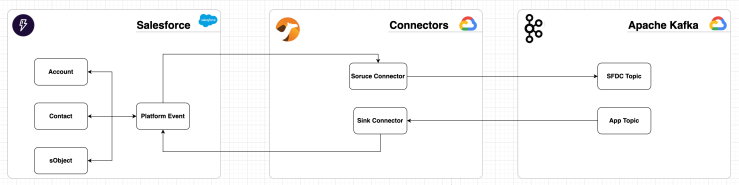

Figure 2. Sink Connector: Events into Salesforce

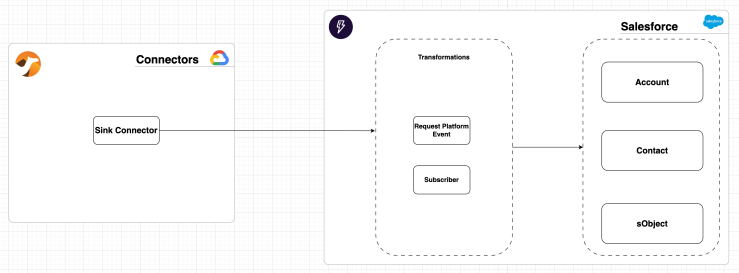

Figure 3. Source Connector: Events into Kafka from Salesforce
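For the source direction, one concern the connector has to handle is resuming after a restart without losing events. Salesforce events carry a replay ID, and a subscriber can resume from the last ID it processed as long as that ID is still within the retention window. The sketch below models this with in-memory stand-ins: `FakeEventBus` and the `produce` callback are hypothetical placeholders for the Salesforce subscription and a Kafka producer, and replay IDs are treated as opaque values compared only for equality.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Event:
    replay_id: str  # opaque: compared only for equality, never ordered
    record_id: str  # Salesforce record Id the event refers to
    payload: dict


class FakeEventBus:
    """Hypothetical stand-in for the Salesforce event bus: retained events in publish order."""

    def __init__(self, events):
        self.events = list(events)

    def events_after(self, replay_id: Optional[str]):
        if replay_id is None:
            return list(self.events)  # no checkpoint yet: start from the earliest retained event
        ids = [e.replay_id for e in self.events]
        # list.index raises ValueError if the checkpoint aged out of the retention window.
        return self.events[ids.index(replay_id) + 1:]


class SourceBridge:
    """Forwards events to Kafka and advances the checkpoint only after each send succeeds."""

    def __init__(self, produce: Callable[[str, dict], None]):
        self.produce = produce
        self.checkpoint: Optional[str] = None

    def run_once(self, bus: FakeEventBus) -> int:
        forwarded = 0
        for event in bus.events_after(self.checkpoint):
            # Keying by record Id keeps all events for one record on one partition, in order.
            self.produce(event.record_id, event.payload)
            self.checkpoint = event.replay_id
            forwarded += 1
        return forwarded
```

Because the checkpoint moves only after `produce` returns, a failure mid-batch leads to re-sending from the last confirmed event (at-least-once delivery); in a real deployment the checkpoint would be persisted durably, for example as Kafka Connect source offsets.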

5. Governor Limit Considerations and License Cost of Salesforce Platform

5.1. Governor Limits

- As stated earlier, the Salesforce multi-tenant platform enforces many governor limits that must be considered during solution design. The limits most frequently hit during code execution, and how this solution overcomes each of them, are summarized below. These limits cannot be raised, even at additional cost, so it is essential to design the implementation to stay within them and to keep the solution scalable.


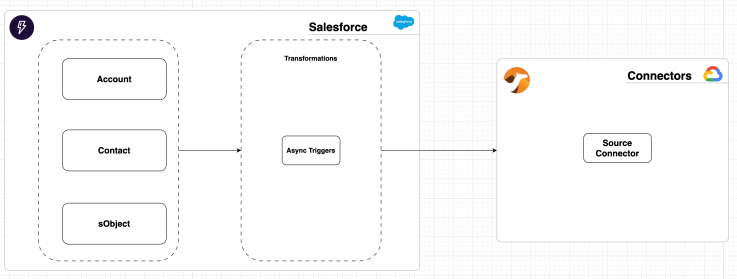
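A common way to stay within these limits is bulkification: instead of issuing one DML statement per event, the trigger collects target records and performs one DML statement per sObject type. The Python sketch below models that arithmetic. The constants are the Apex per-transaction limits of 150 DML statements and 10,000 DML rows, and the platform event trigger batch size of up to 2,000 events by default, as documented at the time of writing (check the current Salesforce documentation); the `{"object": ..., "record": ...}` event shape is hypothetical.

```python
from collections import defaultdict

MAX_DML_STATEMENTS = 150  # DML statements per Apex transaction
MAX_DML_ROWS = 10_000     # records processed by DML per transaction
TRIGGER_BATCH = 2_000     # default max events per platform event trigger invocation


class LimitExceeded(Exception):
    pass


def trigger_batches(events, size=TRIGGER_BATCH):
    """Split a backlog into chunks the way events are handed to a trigger."""
    for start in range(0, len(events), size):
        yield events[start:start + size]


def naive_dml_count(batch) -> int:
    """One insert/update per event: the pattern that exhausts the statement limit."""
    return len(batch)


def plan_bulk_dml(batch) -> dict:
    """Group target records by sObject type so each type costs one DML statement."""
    by_object = defaultdict(list)
    for event in batch:
        by_object[event["object"]].append(event["record"])
    rows = sum(len(records) for records in by_object.values())
    if len(by_object) > MAX_DML_STATEMENTS or rows > MAX_DML_ROWS:
        raise LimitExceeded(f"{len(by_object)} statements / {rows} rows")
    return dict(by_object)
```

For a 2,000-event batch touching two objects, the naive approach needs 2,000 DML statements (far beyond 150), whereas the grouped plan needs two.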

5.2. License Cost (Add-on for Salesforce Only)

- Platform Event and Change Data Capture events that are delivered to external subscribers (in this case, the Camel connector) count against a contractual allocation. Additional allocation can be purchased as needed, based on demand.
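Sizing that purchase is simple arithmetic: each event delivered to each external subscriber counts once against the allocation. The sketch below estimates how many add-on blocks a given volume would need. The base allocation and block size are hypothetical parameters, since actual figures depend on the Salesforce edition and contract; only deliveries to external subscribers are counted, as described above.

```python
def deliveries(events: int, external_subscribers: int) -> int:
    """Each event delivered to each external subscriber counts once."""
    return events * external_subscribers


def addon_blocks_needed(events: int, external_subscribers: int,
                        base_allocation: int, block_size: int) -> int:
    """Add-on blocks required to cover deliveries beyond the base allocation."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    shortfall = deliveries(events, external_subscribers) - base_allocation
    # Integer ceiling division; zero when the base allocation already suffices.
    return max(0, -(-shortfall // block_size))
```

For example, with one million events per allocation period, two external subscribers, a hypothetical base allocation of 50,000 deliveries, and hypothetical blocks of 100,000, the shortfall is 1,950,000 deliveries, which rounds up to 20 blocks.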


6. Conclusions

- As more systems get connected, the volume of real-time events grows, and handling millions of them on a packaged platform like Salesforce can become very complex. This article outlines how to handle complex transformation logic within the Salesforce platform and how to publish or consume events at a much faster pace using an open-source connector rather than a middleware solution. A middleware solution could simplify the design, but it carries additional license and maintenance costs. Built on Platform Events and/or Change Data Capture (CDC), this solution can be scaled to consume or publish millions of events per day if needed.
