American Journal of Computer Architecture

2017; 4(1): 1-4

doi:10.5923/j.ajca.20170401.01

Cloud Burst for Legacy Enterprise Applications

Subash Thota

Data Architect, National Science Foundation, Washington DC, USA

Correspondence to: Subash Thota, Data Architect, National Science Foundation, Washington DC, USA.

Copyright © 2017 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Cloud burst is an automatically scalable application model in which applications run in a data center and use the public cloud to augment capacity on demand. Critical legacy applications that have seen data and user growth over many years may need extra capacity during spikes. The period when extra capacity is needed is typically also a crucial one from a business perspective, which makes it difficult for enterprises to accept the risk of any capacity change in a critical application during that period, even when the change is technically feasible. In addition, standard processes and models for suitability assessment and implementation are not yet mature enough to harness the full potential of cloud burst. This document gives an overview of a strategy for using the cloud burst model for such applications while mitigating the risk involved. It further discusses alternative strategies for cases where cloud burst is not suitable for a given application landscape.

Keywords: Cloud Computing, On-Premise, Business Intelligence, Cloud Strategy

Cite this paper: Subash Thota, Cloud Burst for Legacy Enterprise Applications, American Journal of Computer Architecture, Vol. 4 No. 1, 2017, pp. 1-4. doi: 10.5923/j.ajca.20170401.01.

Article Outline

1. Understanding the Scenario

The document explains cloud burst using a scenario common to most enterprises today. Applications may need increased capacity for a short time for reasons such as month-end reporting or data analysis. The same period can also see heavier application usage due to increased business user activity, which adds to capacity demand. Sometimes this capacity need is known in advance, as with month end; at other times it is ad hoc. The model needs to cater to the scenarios identified for the application.

2. Overview of Designing the Cloud Burst Model

• The cloud burst model is based on identifying which applications, components, and environments can be moved to the public cloud temporarily when the data center is running short of capacity.
• Switching the identified applications, components, or environments over to the public cloud should be completely automated using DevOps tools and services. The switch can be scheduled (for example, on the last day of every month for month end) or triggered by resource shortage criteria. The DevOps model also needs to ensure that a seamless connection with on-premises common services is maintained.
• The model further needs to ensure that the released capacity is available to the applications remaining in the data center. This can be done by removing caps on resource usage. On a private cloud, standard processes may be followed for this. Otherwise, a one-time change to the physical servers in the data center may be needed to remove any resource caps for critical application production environments. This can include changes such as CPU realignment on production servers through the change management process.
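The two trigger styles described above (a schedule such as the last day of the month, or a resource shortage criterion) can be sketched as a single decision function. This is only an illustrative sketch: the `Utilization` type, the `should_burst` function, and the 85% and 90% limits are assumptions made for the example and do not come from any particular DevOps tool.

```python
import calendar
from dataclasses import dataclass
from datetime import date


@dataclass
class Utilization:
    """Hypothetical snapshot of data center usage, as fractions of capacity."""
    cpu: float
    memory: float


def is_last_day_of_month(day: date) -> bool:
    # calendar.monthrange returns (weekday of the 1st, number of days in month).
    return day.day == calendar.monthrange(day.year, day.month)[1]


def should_burst(day: date, usage: Utilization,
                 cpu_limit: float = 0.85, memory_limit: float = 0.90) -> bool:
    """Return True when the switch to the public cloud should start.

    Scheduled trigger: the last day of the month (month-end processing).
    Shortage trigger: CPU or memory utilization at or above an assumed limit.
    """
    scheduled = is_last_day_of_month(day)
    shortage = usage.cpu >= cpu_limit or usage.memory >= memory_limit
    return scheduled or shortage
```

In practice the function would be fed by the monitoring infrastructure, and a positive result would start the automated switch rather than simply return a flag.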

3. Different Scenarios for What Can and Cannot Move to the Public Cloud during Cloud Burst

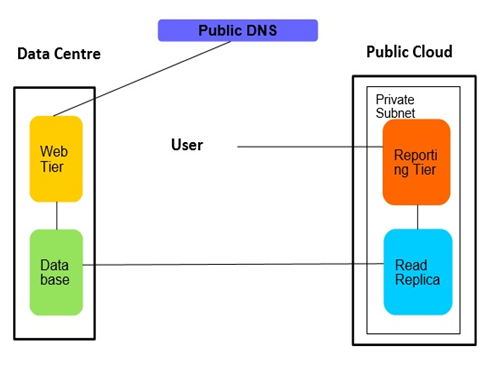

The application landscape needs to be considered when identifying what can and cannot be moved to the public cloud temporarily.
• Critical applications, applications with multiple interfaces, and integration environments with dependencies are not good candidates for movement.
• Non-production environments (other than the integration environment) may be moved to the public cloud temporarily.
• Non-critical applications and environments that have fewer interfaces or dependencies on the data center can be suitable candidates for movement.
• Many critical legacy applications need cloud burst because of reporting and data analysis needs during a specific scheduled period, for example month-end or quarter-end reporting. In such cases, the entire reporting framework can be offloaded to the public cloud. No application components move during the cloud burst: the DevOps model automatically creates the read replica database and the reporting processes in the public cloud, and they are operational only during the specified period. Once reporting is complete, they are automatically decommissioned. Similarly, analysis of large datasets can be completely offloaded to the public cloud.
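The suitability rules above can be written down as a small screening function. The sketch below is one possible reading of those rules, not a definitive assessment model: the `Workload` fields, the rule order, and the `max_interfaces` threshold of 2 are all assumptions introduced for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Environment(Enum):
    PRODUCTION = "production"
    INTEGRATION = "integration"
    NON_PRODUCTION = "non-production"


@dataclass
class Workload:
    """Hypothetical description of one application environment."""
    name: str
    critical: bool
    environment: Environment
    interface_count: int  # interfaces or dependencies on the data center


def can_move_during_burst(workload: Workload, max_interfaces: int = 2) -> bool:
    """Screen a workload for temporary movement to the public cloud."""
    # Integration environments with dependencies stay in the data center.
    if workload.environment is Environment.INTEGRATION:
        return False
    # Many interfaces or dependencies make a workload a poor candidate.
    if workload.interface_count > max_interfaces:
        return False
    # Other non-production environments may be moved temporarily.
    if workload.environment is Environment.NON_PRODUCTION:
        return True
    # Remaining case is production: only non-critical applications move.
    return not workload.critical
```

A screening function like this only narrows the list; bandwidth, compliance, and technology stack constraints still have to be assessed separately for each candidate.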

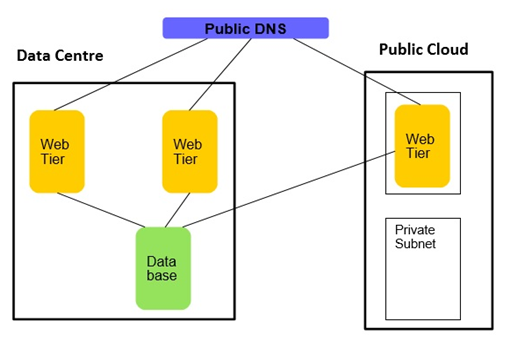

• It is also possible to add an instance of an application component (such as the web tier) in the cloud rather than moving the entire application to the public cloud, but connectivity bandwidth then needs to be considered. Latency can become a bottleneck, especially if the web tier and database communicate through an encrypted tunnel over the internet.

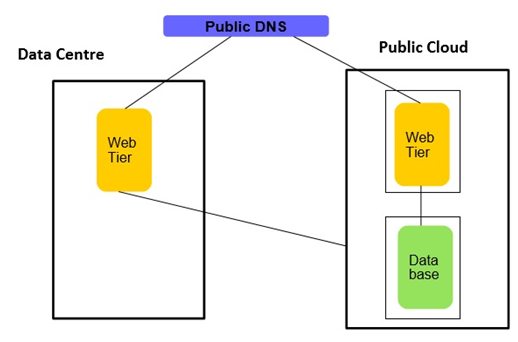

• If offloading reporting to the public cloud is not sufficient and the database needs further capacity, the database can be moved to the public cloud using various techniques with no or minimal downtime. Again, connectivity bandwidth needs to be considered. Latency can become a bottleneck, especially if the web tier and database communicate through an encrypted tunnel over the internet. This arrangement is recommended only for non-critical applications.

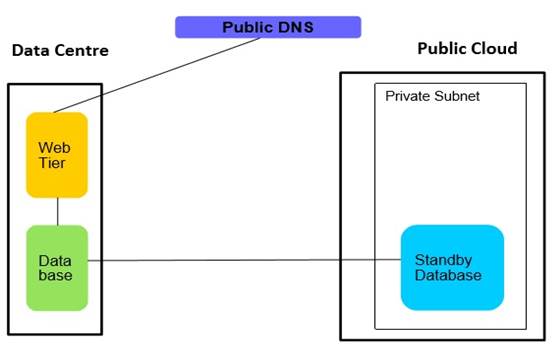

• Many critical legacy applications have a standby database meant for disaster recovery (DR). The database or the entire server is replicated in near real time. Sometimes this standby database is in the same data center as the primary, and sometimes it is in a separate one. Moving the standby database to the public cloud can release additional capacity in the on-premises data center. Moving a standby database that is colocated with the primary in the same facility can also benefit DR by eliminating a single point of failure, which increases protection during the crucial period when cloud burst is needed.

4. Other Considerations while Designing Cloud Burst

• The bandwidth and type of connectivity between the data center and the public cloud need to be considered while designing the model. Data transfer between an application in the cloud and common services in the data center needs to be factored in when deciding on bandwidth and cost. Data transfer can increase further if cloud burst deploys some application components in the cloud while others remain in the data center. An encrypted tunnel over the internet may not be suitable because of performance variations. Private direct connections can be suitable, but setup time and cost will increase because third-party partners will be involved in providing the connectivity, depending on the region of the data center.
• Regulatory compliance, privacy, and security need to be considered while building the cloud burst model. Applications with sensitive data may not be good candidates for bursting into a cloud.
• The supported technology stack of the public cloud and the DevOps tooling needs to be analyzed for compatibility constraints before building the model.
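A rough calculation can help when weighing bandwidth against cost. The sketch below estimates how long a given data volume takes to cross the link and what it costs to transfer. The function names, the 80% link efficiency default, and the per-gigabyte price are assumptions for illustration only; real throughput over an encrypted internet tunnel varies, and actual prices depend on the provider.

```python
def transfer_seconds(data_gb: float, link_mbps: float,
                     efficiency: float = 0.8) -> float:
    """Estimate the time to move data_gb gigabytes over a link.

    data_gb uses decimal gigabytes (10**9 bytes). efficiency is the assumed
    fraction of nominal bandwidth that is actually usable.
    """
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 8e9                       # gigabytes -> bits
    usable_bps = link_mbps * 1e6 * efficiency  # megabits/s -> usable bits/s
    return bits / usable_bps


def transfer_cost(data_gb: float, price_per_gb: float) -> float:
    """Estimate the transfer charge; price_per_gb is a hypothetical input."""
    return data_gb * price_per_gb


if __name__ == "__main__":
    seconds = transfer_seconds(data_gb=500, link_mbps=1000)
    print(f"Estimated transfer time: {seconds / 3600:.2f} hours")
```

Running the same estimate for each component split between the data center and the cloud gives a first indication of whether a private connection is worth its setup time and cost.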

5. Technical Details in Building the Model

• Create a logically isolated network with public and private subnets on the public cloud.
• Establish connectivity between the on-premises data centers and the public cloud regions using a secure encrypted internet tunnel or a private connection.
• Configure route tables so that supporting services and interfaces can connect to the public cloud network and subnets through the encrypted internet tunnel or private connection.
• Create network security groups, network ACLs, etc.
• Adopt the continuous deployment concept. Depending on the tool chosen for DevOps, create templates that automate infrastructure creation, including provisioning of servers and other resources.
• Configure IAM access for the templates.
• Installation and configuration of software applications and databases on virtual machines should also use continuous deployment. This can be automated by including it in the template.
• Some tools provide a "wait" condition and similar features to handle dependencies. Use these as required by the infrastructure to be created.
• The DevOps model should avoid the need for manual monitoring. An event (such as high CPU or memory utilization) can be generated by infrastructure monitoring tools, and an API can be called automatically to trigger resource creation through a template on the public cloud. Alternatively, creation can be triggered by a scheduled event or manually by a push button.
• The build and test strategy for the model should leverage continuous integration, with automated builds and soft releases from version control tools.
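The dependency handling mentioned above (for example through "wait" conditions) amounts to creating resources in an order where everything a resource needs already exists. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9 or later). The resource names and the dependency map are hypothetical and only loosely mirror the steps listed above; a real template tool resolves this ordering itself.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical resources. Each key maps to the resources that must exist first.
DEPENDENCIES = {
    "network": set(),
    "subnets": {"network"},
    "tunnel": {"network"},
    "route_tables": {"subnets", "tunnel"},
    "security_groups": {"network"},
    "database_vm": {"subnets", "security_groups"},
    "app_vm": {"database_vm", "route_tables"},
}


def creation_order(dependencies: dict) -> list:
    """Return resource names in an order that respects every dependency."""
    try:
        # static_order() yields each node only after all of its predecessors.
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        raise ValueError("circular dependency between resources") from exc


if __name__ == "__main__":
    for step, resource in enumerate(creation_order(DEPENDENCIES), start=1):
        print(step, resource)
```

Decommissioning after the burst can walk the same list in reverse so that dependent resources are removed before the ones they rely on.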

6. Best Practices while Designing Cloud Burst

• Templates should be designed so that they are reusable in different environments. If needed, nesting techniques should be used to avoid duplicating templates. Parameters can be passed to a nested template, and output variables returned to the calling template.
• Templates should be integrated with version control tools to maintain versions and backups.
• Divide the project into tasks and tackle the easy tasks first by automating them.
• Avoid hard-coding credentials in templates. They should be passed as parameters when needed, either entered manually or supplied using appropriate encryption techniques.
• Logging needs to be enabled when DevOps tooling creates resources so that any issues can be investigated through the logs.
• The total number of resources of a given type in a public cloud is often capped. For example, the maximum number of virtual machines in a particular region is often 20 by default. If resource creation through a template could breach the limit, this should be addressed in advance by getting the limit increased.
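The resource cap check in the last practice can be automated as a pre-flight step before a template runs. The sketch below compares what a template plans to create with current usage and known limits. The function name, the resource type labels, and the numbers in the usage example are hypothetical; actual limits have to be read from the cloud provider.

```python
from collections import Counter


def quota_shortfalls(planned: list, in_use: dict, limits: dict) -> dict:
    """Return {resource_type: units over the limit} for types that would breach.

    planned: resource types the template will create, one entry per resource.
    in_use:  current count per resource type in the target region.
    limits:  known cap per resource type; types without an entry are skipped.
    """
    shortfalls = {}
    for resource_type, count in Counter(planned).items():
        limit = limits.get(resource_type)
        if limit is None:
            continue  # no known cap for this type
        excess = in_use.get(resource_type, 0) + count - limit
        if excess > 0:
            shortfalls[resource_type] = excess
    return shortfalls


if __name__ == "__main__":
    planned = ["vm"] * 8 + ["volume"] * 4
    print(quota_shortfalls(planned, {"vm": 15}, {"vm": 20, "volume": 50}))
```

A non-empty result signals that a limit increase should be requested before the burst period, since such requests may take time to process.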

7. Tools

Appropriate tools need to be selected to build the model. These can include the following.
• Hybrid cloud solutions from a public cloud provider or third parties. The solution components are located both on-premises and in the public cloud, and they include DevOps tools and services to automate the entire process of switching applications or certain processes to the public cloud.
• DRaaS services and tools for switching applications or servers to the public cloud with minimal or no downtime.
• Public cloud database migration services and tools, which can help migrate a database to the public cloud with minimal or no downtime.
• DevOps tools, which need to be carefully evaluated and assessed because the success of a cloud burst depends on the amount of automation and scalability in the model.
• Proprietary database services or tools, which can be used to create the read replica reporting database on the public cloud.

8. Alternative Strategies

At times, building the fully automated DevOps architecture for a cloud burst model can take substantial effort and budget. Cloud burst may also not be recommended because of the other factors described above. In such cases, an alternative is to keep sufficient capacity permanently available in the data center. This extra capacity can be created by identifying certain applications and migrating them permanently from the data center to the public cloud.

9. Conclusions

We have entered an era of Big Data, and an operational data store (ODS) and a core data warehouse (DWH) will likely stay within the confines of the home enterprise for quite a while. Cloud promises significant benefits. It has been developed to enhance the efficiency, productivity, and performance of applications, and it helps with quick implementation and cost reduction. Cloud offers numerous advantages in flexibility, scalability, and management. However, without proper planning and execution of all the points above, it can have a negative impact. Cloud can be expected to grow into a next generation that is more promising than the current scenarios. The factors described above can be used to assess whether cloud burst is suitable for an application landscape. A suitable cloud burst model can be a combination of strategies, and the appropriate strategy needs to be designed for the use case depending on the scenario.
