American Journal of Biomedical Engineering

p-ISSN: 2163-1050 e-ISSN: 2163-1077

2016; 6(2): 53-58

doi:10.5923/j.ajbe.20160602.02

The Image Registration Techniques for Medical Imaging (MRI-CT)

Hiba A. Mohammed

Department of Medical Engineering, University of Science and Technology, Omdurman, Sudan

Correspondence to: Hiba A. Mohammed, Department of Medical Engineering, University of Science and Technology, Omdurman, Sudan.

Copyright © 2016 Scientific & Academic Publishing. All Rights Reserved.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Image registration is the process of aligning two or more images so that their information can be combined. Medical image fusion refers to the fusion of medical images obtained from different modalities, and it supports medical diagnosis by improving the quality of the images. In diagnosis, an image obtained from a single modality such as MRI or CT may not provide all the required information, so information from other modalities must be combined with it. For example, combining information from MRI and CT gives more information than either modality alone. The aim is to provide a method for fusing the images from the individual modalities such that the fused result gives more information, without any loss of the input information and without any redundancy or artefacts. Medical images obtained from different modalities may lie in different coordinate systems and have to be aligned properly for efficient fusion; this alignment of the input images before fusion is called image registration. Intensity-based registration is carried out before the images are decomposed. The two imaging modalities considered in this work are CT and MRI. The results on the CT and MRI images show the performance of the fusion algorithms alongside that of the registration.

Keywords: 2D/2D images, Wavelet, Image registration, Rigid images, Medical imaging

Cite this paper: Hiba A. Mohammed, The Image Registration Techniques for Medical Imaging (MRI-CT), American Journal of Biomedical Engineering, Vol. 6 No. 2, 2016, pp. 53-58. doi: 10.5923/j.ajbe.20160602.02.

1. Introduction

Medical images are increasingly used in treatment planning and diagnosis. Devices such as Computerized Tomography (CT) and Magnetic Resonance Imaging (MRI) provide information about the anatomical structure of the organs, while others such as Positron Emission Tomography (PET) and Single Photon Emission Computerized Tomography (SPECT) provide functional information. Each of these provides information that is useful in treatment planning. In medical diagnosis and treatment, there is wide interest in integrating information from multiple images acquired with different modalities. This process is known as image registration. Image registration, also known as image fusion, matching or warping, can be defined as the process of aligning two or more images. The goal of an image registration method is to find the optimal transformation that best aligns the structures of interest in the input images. Image registration is a crucial step in any image analysis in which valuable information is conveyed by more than one image; that is, images acquired at different times, from distinct viewpoints or by different sensors can be complementary. Accurate integration (or fusion) of the useful information from two or more images is therefore very important. Image fusion means combining two images into a single image that has the maximum information content without producing details that do not exist in the given images. With rapid advancements in technology, it is now possible to obtain information from multi-source images and produce a high-quality fused image with spatial and spectral information. Image fusion is a mechanism for improving the quality of information from a set of images. The objective of this paper is to develop a method for rigid image registration of CT and MRI with wavelet image fusion.

2. Methodology

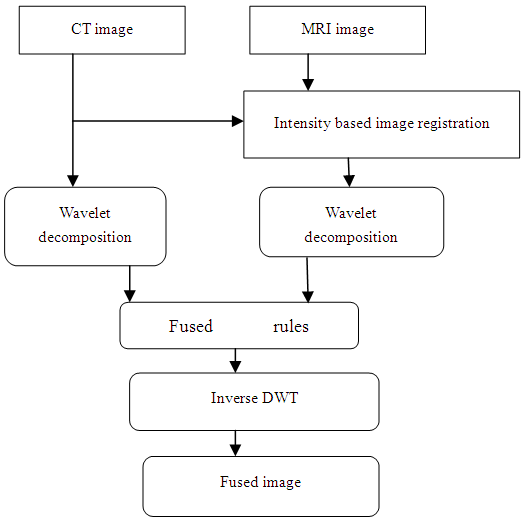

The proposed method was developed using MATLAB toolboxes to simulate the image registration and image fusion algorithms. The DICOM CT and MRI images used to implement the code were downloaded from the MathWorks website. These multimodal images were processed prior to the application of the fusion algorithm. The program ran in two stages. The first stage registered the DICOM images (CT-MRI) using intensity-based registration, chosen because it is the simplest and least complicated method. The second stage applied the fusion algorithm to the registered images using wavelet-based image fusion, chosen to achieve the best possible results by combining the registration and image fusion techniques.

2.1. Image Registration

Intensity-based automatic image registration is an iterative process. It requires a pair of images, a metric, an optimizer and a transformation type. In this case the pair of images is the MRI image (called the reference or fixed image), of size 512×512, and the CT image (called the moving or target image), of size 256×256. The metric defines the image similarity measure used to evaluate the accuracy of the registration: it takes the two images with all their intensity values and returns a scalar value that describes how similar the images are. The optimizer defines the methodology for minimizing or maximizing the similarity metric. The transformation type used is a two-dimensional rigid transformation, which applies translation and rotation to the target image and so brings the misaligned target image into alignment with the reference image. Before the registration process can begin, the two images (CT and MRI) need to be preprocessed to get the best alignment results. After the preprocessing phase the images are ready for alignment. The first step in the registration process is to specify the transform type together with an internally determined transformation matrix; together, they determine the specific image transformation that is applied to the moving image. Next, the metric compares the transformed moving image with the fixed image and a metric value is computed. Finally, the optimizer checks for a stop condition, which in this case is the specified maximum number of iterations. If the stop condition is not met, the optimizer adjusts the transformation matrix to begin the next iteration. The registration results are presented in Section 3.
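The iterative loop described above (transform, metric, optimizer, stop condition) can be illustrated with a minimal Python sketch. It is not the MATLAB implementation used in this work: the function names, the nearest-neighbour resampling, the greedy step search and the mean-squared-difference metric are all assumptions made for illustration. In particular, a mean-squared-difference metric is only meaningful when both images share an intensity scale; a multimodal CT-MRI registration would normally use a different similarity measure.

```python
import math

def rigid_transform(img, tx, ty, theta_deg):
    """Shift the image content by (tx, ty) and rotate it by theta about the centre."""
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    c = math.cos(math.radians(theta_deg))
    s = math.sin(math.radians(theta_deg))
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: locate the source pixel that lands on (x, y).
            dx, dy = x - cx - tx, y - cy - ty
            xs = int(math.floor(cx + c * dx + s * dy + 0.5))
            ys = int(math.floor(cy - s * dx + c * dy + 0.5))
            if 0 <= xs < w and 0 <= ys < h:
                out[y][x] = img[ys][xs]  # nearest-neighbour sample
    return out

def mean_squared_difference(a, b):
    """Illustrative metric: 0 means the two images are identical."""
    n = len(a) * len(a[0])
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)) / n

def register(fixed, moving, steps=(1.0, 1.0, 5.0), max_iterations=50):
    """Greedy search over (tx, ty, theta) that minimises the metric."""
    params = [0.0, 0.0, 0.0]
    best = mean_squared_difference(fixed, rigid_transform(moving, *params))
    for _ in range(max_iterations):          # stop condition: iteration limit
        candidate, candidate_score = None, best
        for i, step in enumerate(steps):
            for delta in (step, -step):
                trial = list(params)
                trial[i] += delta
                score = mean_squared_difference(fixed, rigid_transform(moving, *trial))
                if score < candidate_score:
                    candidate, candidate_score = trial, score
        if candidate is None:                # no neighbouring transform is better
            break
        params, best = candidate, candidate_score
    return tuple(params), best
```

Given a fixed image and a copy of it displaced by one pixel, `register` returns the translation that undoes the displacement together with the final metric value. The greedy optimizer is chosen here only because it is short; it can stall in local minima on real images.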

2.2. Image Fusion

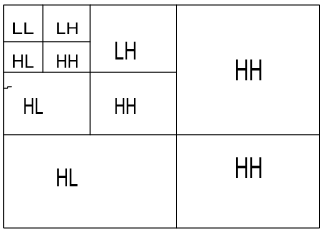

The next stage after the registration process is the wavelet-based image fusion. Wavelets are finite-duration oscillatory functions with a zero average value. They can be described by two functions: the scaling function (also known as the father wavelet) and the wavelet function (also known as the mother wavelet). A number of basis functions can be used as the mother wavelet for wavelet transformations. Through translation and scaling, the mother wavelet produces the various wavelet families that are used in the transformations. The wavelets are chosen based on their shape and their ability to analyze the signal in a particular application. The Discrete Wavelet Transform (DWT) has the property that the spatial resolution is small in low-frequency bands but large in high-frequency bands. This is because, in the DWT implementation, the scaling function is treated as a low-pass filter and the wavelet function as a high-pass filter. The wavelet transform decomposes the image into low-high, high-low and high-high spatial frequency bands at different scales, and the low-low band at the coarsest scale. The low-low image has the smallest spatial resolution and represents the approximation information of the original image. The other sub-images show the detailed information of the original image. Different levels of decomposition can be applied, as shown in Figure 1.

| Figure 1. Wavelet decomposition |

| Figure 2. Image fusing scheme using wavelet transforms |
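To make the four sub-bands concrete, the following Python sketch performs one level of a two-dimensional wavelet decomposition and its inverse. It uses the Haar wavelet because its filters fit in a few lines; this is a simplification, not the Daubechies wavelet used in this work. The function names and the LH/HL labelling are assumptions (conventions differ between toolboxes). Further levels would be obtained by applying the same function again to the LL band.

```python
def haar_dwt2(img):
    """One level of the orthonormal 2-D Haar transform (image sides must be even)."""
    h, w = len(img) // 2, len(img[0]) // 2
    bands = {name: [[0.0] * w for _ in range(h)] for name in ("LL", "LH", "HL", "HH")}
    for i in range(h):
        for j in range(w):
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            bands["LL"][i][j] = (a + b + c + d) / 2.0  # approximation
            bands["LH"][i][j] = (a + b - c - d) / 2.0  # difference between rows
            bands["HL"][i][j] = (a - b + c - d) / 2.0  # difference between columns
            bands["HH"][i][j] = (a - b - c + d) / 2.0  # diagonal detail
    return bands

def haar_idwt2(bands):
    """Inverse of haar_dwt2: rebuild the image from its four sub-bands."""
    h, w = len(bands["LL"]), len(bands["LL"][0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            ll, lh = bands["LL"][i][j], bands["LH"][i][j]
            hl, hh = bands["HL"][i][j], bands["HH"][i][j]
            img[2 * i][2 * j] = (ll + lh + hl + hh) / 2.0
            img[2 * i][2 * j + 1] = (ll + lh - hl - hh) / 2.0
            img[2 * i + 1][2 * j] = (ll - lh + hl - hh) / 2.0
            img[2 * i + 1][2 * j + 1] = (ll - lh - hl + hh) / 2.0
    return img
```

Each sub-band has half the width and half the height of the input, and applying `haar_idwt2` to the output of `haar_dwt2` returns the original image.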

2.2.1. Implementation of Image Fusion

After the registration process was complete, the second stage was implemented using the Wavelet Toolbox main menu. To open the main menu, type wavemenu in the MATLAB command window and the Wavelet Toolbox window appears directly. The window offers several selections; here the specialized 2-D tools were used, which are subdivided into four selections, and the one used for the implementation is image fusion. Selecting image fusion opens a separate window. The two images (registered image and reference image) from the image data sets were then imported into this window to be fused, one data set at a time. On the left side of the window are the wavelet family and decomposition level selections. In this work the eighth-order Daubechies (db) wavelet family was used and a third-level decomposition was applied. Once these selections were defined, the decompose button was pressed and the images were decomposed to obtain the approximation, horizontal detail, vertical detail and diagonal detail coefficients, which represent the four frequency bands LL, LH, HL and HH respectively.

The coefficients of both images are then fused using different fusion methods (rules). Below the decompose button on the image fusion window there is a fusion method selection, where the fusion rules applied to the images are chosen. The available rules include maximum value, minimum value, mean value and random value. These rules merge the two approximation or detail structures obtained from the images element-wise by taking the maximum, the minimum, the mean or a randomly chosen element, respectively. The rules applied to the images here are mean-mean, max-max and min-min. Finally, the inverse wavelet decomposition was applied, which produces the final fused image. The results of the fusion process are presented in Section 3.
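The element-wise fusion rules can be sketched in Python as follows. This is an illustration under assumptions, not the toolbox code: the coefficient sets are taken to be dictionaries of 2-D lists keyed by band name, the function names are hypothetical, and the max and min rules compare signed coefficient values (the text does not state whether the toolbox compares signed values or magnitudes). A rule pair such as max-max is read here as one rule for the approximation band and one for the detail bands.

```python
# Element-wise rules applied to a pair of coefficients.
RULES = {
    "max": max,
    "min": min,
    "mean": lambda a, b: (a + b) / 2.0,
}

def fuse_band(band_a, band_b, rule):
    """Merge two equally sized coefficient arrays element by element."""
    op = RULES[rule]
    return [[op(x, y) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(band_a, band_b)]

def fuse_coefficients(coeffs_a, coeffs_b, approx_rule="mean", detail_rule="mean"):
    """Fuse two decompositions: one rule for LL, another for the detail bands."""
    fused = {}
    for name in coeffs_a:
        rule = approx_rule if name == "LL" else detail_rule
        fused[name] = fuse_band(coeffs_a[name], coeffs_b[name], rule)
    return fused
```

The fused coefficient set would then be passed to the inverse wavelet transform to obtain the fused image.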

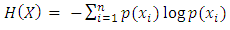

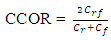

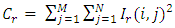

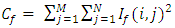

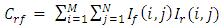

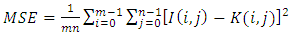

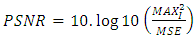

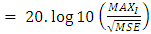

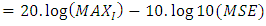

2.2.2. Performance Metrics to Evaluate Image Fusion Techniques

The image fusion process should preserve all valid and useful information from the input images, and it should not introduce undesired artefacts. Various metrics are used to evaluate the performance of image fusion techniques, for example entropy, mean square error (MSE) and peak signal-to-noise ratio (PSNR).

A) Entropy

The entropy (H) is a measure of the information content of an image. Entropy reaches its maximum value when every gray level in the whole range has the same frequency. If the entropy of the fused image is higher than that of the reference image, the fused image contains more information.

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

| (9) |
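As a reading aid, the three metrics named above can be computed with the short Python sketch below. It uses the standard textbook definitions, which are assumed (not confirmed) to match the numbered equations: entropy H = sum of p_i log2(1/p_i) over the gray levels, MSE as the mean of squared pixel differences, and PSNR = 10 log10(peak^2 / MSE). The function names and the default peak value of 255 (8-bit images) are assumptions.

```python
import math
from collections import Counter

def entropy(img):
    """Shannon entropy in bits of the gray-level histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return sum((c / n) * math.log2(n / c) for c in Counter(pixels).values())

def mse(a, b):
    """Mean of the squared differences between corresponding pixels."""
    n = len(a) * len(a[0])
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)) / n

def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(a, b)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)
```

An image with two equally frequent gray levels has an entropy of 1 bit, and a constant image has an entropy of 0.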

3. Results and Discussion

3.1. Result of the Registration Phase

The DICOM CT and MRI images used to implement the code were downloaded from the MathWorks website. In this work two image data sets of CT and MRI images were used for the implementation. These multimodal images were processed prior to the application of the fusion algorithm. The results of the preprocessing are shown below along with the original images before the preprocessing stage.

3.2. Preprocessing Phase

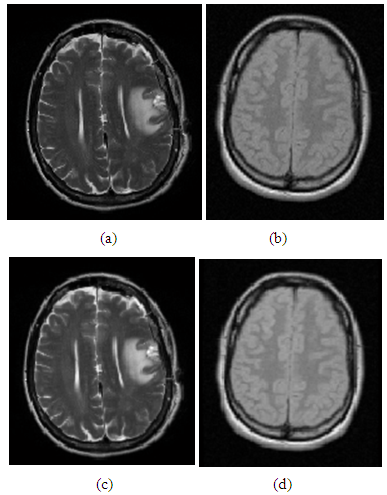

The preprocessing phase generally means resizing the images, removing noise and improving or altering the image quality to suit a certain purpose. The first step in the preprocessing phase, after reading the images into MATLAB and displaying them, was to resize the MRI (reference) image (a) to match the size of the CT (moving) image (b). Several further functions were then used to complete the preprocessing task. The results of the preprocessing are shown in Figure 3 along with the original images. The figure shows a visible difference between the original images (a), (b) and the images after preprocessing (c), (d).

| Figure 3. (a) Original MRI image, (b) Original CT image, (c) MRI image after preprocessing, (d) CT image after preprocessing |
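The resizing step can be illustrated with a nearest-neighbour sketch in Python. The interpolation method actually used in this work is not stated, so nearest-neighbour sampling and the function name are assumptions chosen for brevity.

```python
def resize_nearest(img, new_h, new_w):
    """Resize a 2-D list of pixels by sampling the nearest source pixel."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(new_h):
        # Map the centre of each output pixel back into the source grid.
        src_i = min(h - 1, int((i + 0.5) * h / new_h))
        row = []
        for j in range(new_w):
            src_j = min(w - 1, int((j + 0.5) * w / new_w))
            row.append(img[src_i][src_j])
        out.append(row)
    return out
```

Under these assumptions, a 512×512 reference image would be brought to the 256×256 size of the moving image with `resize_nearest(mri, 256, 256)`, where `mri` is a hypothetical variable holding the pixel rows.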

3.3. Implementation Result of the Image Registration

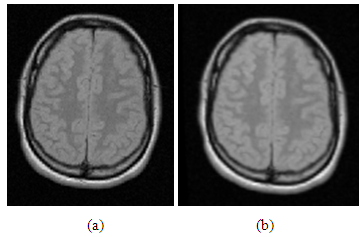

After the images were preprocessed they were ready for registration. The registration method applied was the automated intensity-based registration discussed in the methodology. The similarity between the images was found using the correlation function, and the value was displayed as a percentage along with the elapsed time. For the image data sets, the similarity was 71.7448% before registration and 82.7396% after registration, and the elapsed time was 6.3648 s. The images before and after registration are shown in Figure 4. Figure 4(a) shows the result before registration, where the image in green represents the reference image and the image in violet represents the moving image. Figure 4(b) shows the images after registration.

| Figure 4. (a) CT-MRI images before registration, (b) CT-MRI images after registration |
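The percentage similarity reported above can be illustrated with a two-dimensional correlation coefficient, sketched here in Python. The exact function used in this work is not stated, so the Pearson form, the function names and the multiplication by 100 are assumptions.

```python
import math

def correlation_coefficient(a, b):
    """Pearson correlation between two equally sized 2-D images."""
    xs = [p for row in a for p in row]
    ys = [p for row in b for p in row]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant image")
    return covariance / math.sqrt(spread_x * spread_y)

def similarity_percent(a, b):
    """Correlation expressed as a percentage."""
    return 100.0 * correlation_coefficient(a, b)
```

The coefficient is 1 (100%) when one image is a positive linear rescaling of the other and falls toward 0 as the intensities become unrelated, so a higher value after registration indicates better alignment.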

3.4. Result of the Image Fusion

After the registration phase was completed successfully, the image fusion phase was implemented using the Wavelet Toolbox main menu.

3.4.1. Implementation Results of the Fusion

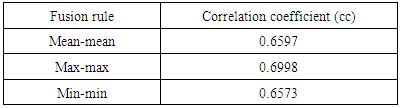

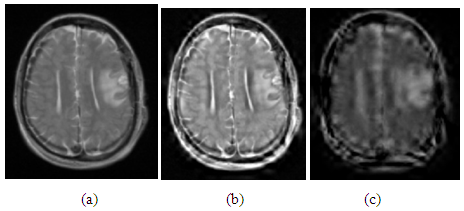

After the registration stage was complete, the registered image and the reference image for both image data sets were imported into the 2-D image fusion window to be fused. The images were decomposed using the eighth-order Daubechies wavelet transform with a third-level decomposition. The decomposed images were then fused using different fusion rules, and finally the inverse wavelet decomposition was applied to obtain the final fused image. The results of the different fusion rules for the image data sets are shown in Figure 5(a), (b) and (c). The performance of the fusion rules applied to the image data sets was evaluated using the Correlation Coefficient (CC), and the values of this evaluation are listed in Table 1 for each fusion rule. The evaluation using the CC showed very good performance.

| Figure 5. (a) Fused image CT-MRI using mean-mean fusion rule, (b) Fused image CT-MRI using max-max fusion rule, (c) Fused image CT-MRI using min-min fusion rule |

4. Conclusions

Rigid image registration with wavelet-based image fusion was performed on CT and MRI images. The quality of the images obtained by this technique was verified using the Correlation Coefficient. The original input images and their corresponding registration and fusion results are presented in Section 3. The intensity-based registration method was applied to the two input images. The quality of the registration was assessed with the correlation function, which measured the similarity between the images before and after registration; the correlation values were displayed as percentages together with the elapsed time of the registration process. The wavelet-based image fusion algorithm was then applied to the registered and reference images: the images were decomposed, selected fusion rules were applied, and the inverse decomposition produced the final fused images, which contain information from both inputs. The quality of the fused images was evaluated by measuring the Correlation Coefficient for each fusion rule, and the resulting images are shown in Section 3.
