American Journal of Biomedical Engineering

p-ISSN: 2163-1050 e-ISSN: 2163-1077

2012; 2(4): 155-162

doi: 10.5923/j.ajbe.20120204.02

Non-Invasive BCI for the Decoding of Intended Arm Reaching Movement in Prosthetic Limb Control

Ching-Chang Kuo 1, Jessica L. Knight 2, Chelsea A. Dressel 1, Alan W. L. Chiu 1, 3

1Biomedical Engineering, Louisiana Tech University, Ruston, LA, 71270, United States

2Biological Sciences, Louisiana Tech University, Ruston, LA, 71270, United States

3Applied Biology and Biomedical Engineering, Rose-Hulman Institute of Technology, Terre Haute, IN, 47803, United States

Correspondence to: Alan W. L. Chiu, Biomedical Engineering, Louisiana Tech University, Ruston, LA, 71270, United States.

Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

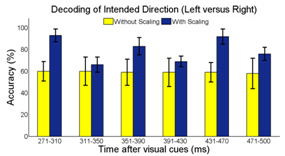

Non-invasive electroencephalography (EEG)-based brain-computer interfaces (BCIs) can provide an alternative means of communication with, and control over, external assistive devices. In general, however, EEG provides insufficient information to decode arm movements with many degrees of freedom (DOF). The main objectives of this study were to design a non-invasive BCI and to create a signal-decoding strategy that gives people with limited motor control more command over prosthetic devices. Eight healthy subjects were recruited to perform visually cued reaching tasks. Eye and motion artifacts were identified and removed so that the subjects’ visual fixation on the target locations would have little or no impact on the final result. We applied a Fisher Linear Discriminant (FLD) classifier to perform single-trial classification of the EEG and decode the intended arm movement in the left, right, and forward directions before the onset of actual movement. The mean EEG signal amplitude near the posterior parietal cortex (PPC) 271–310 ms after visual stimulation was the dominant feature for the best classification results. A signal scaling factor that we developed improved the classification accuracy from 60.11% to 93.91% in the binary (left versus right) scenario. This result shows great promise for BCI neuroprosthetic applications, as motor intention decoding can serve as a prelude to the classification of imagined movement, assisting the rehabilitation of people with motor disabilities through, for example, prosthetic limb or wheelchair control.

Keywords: Brain Computer Interface (BCI), Classification, Electroencephalogram (EEG), Movement Intention, Posterior Parietal Cortex (PPC)

1. Introduction

- Brain-computer interface (BCI) research is a frontier area of neural engineering that has attracted a great deal of attention from scientists and the general public. BCI technology allows communication between the brain and an external machine[1], with applications ranging from entertainment to assistive devices[2]. In a typical BCI system, brain activity is recorded and processed by a computer, which in turn deciphers the mental or physical activity and generates commands to control external devices[3, 4]. One goal of BCI and neural engineering research is to create assistive devices for people with limited motor control. A successful BCI system is valuable in the rehabilitation of motor disabilities because it allows subjects to perform physical practice[5]. Such technology could drastically improve patients’ quality of life by giving them better communication and more independent control[6, 7]. Traditional electromyogram (EMG)-controlled prostheses operate by decoding the myoelectric signals of residual muscles[8, 9]. While these devices provide basic control over the prosthesis, certain limitations restrict their acceptance: users with severely limited motor ability must expend considerable effort learning to contract specific muscle groups to control the device. There is therefore a clear need for a more intuitive control strategy based on the user’s naturally occurring brain signals.
Current BCI technology encompasses invasive electrocorticography (ECoG), implanted electrodes, and non-invasive electroencephalography (EEG)[10]. Current literature suggests that EEG is adequate to extract detailed information about precise movements of the upper limb[11].
For our study, non-invasive techniques based on EEG surface potentials appear to be a more sensible method for collecting and processing data[12-14] for neuroprosthetic applications with relatively few DoF. Real-time classification of features extracted from the activity of particular brain regions can allow control of assistive devices. In BCI applications, signal intensity or signal power features have commonly been used to decode user movement intent. The posterior parietal cortex (PPC) is responsible for converting visual stimuli into motor movements[15] and is a vital location for decoding intended motor movement[12]. While most prior research has focused on discriminating EEG signals between imagined left-hand, right-hand, toe, and tongue movements[16, 17], our study aims to decode and classify EEG signals for the intended movement direction of the same limb, leading to more realistic control of a single upper-limb prosthetic arm[18]. Similar to previous studies[19-21], we developed a single-trial signal classification strategy to evaluate the temporal, spatial, and spectral EEG features during the planning stage of motor movements in the left, right, and forward directions. Ultimately, such a classification algorithm will be part of a two-stage neuroprosthetic control strategy. In the first stage, the intended movement direction is decoded from EEG signal features; the second stage is envisioned as a motor imagery classifier. In this paper, we focus on the classification of motor intention only. Since the form of the visual cue has been reported not to influence performance[22], we used “realistic” rather than “abstract” visual cues to avoid a tedious calibration procedure. Furthermore, a realistic visual environment may enhance learning[23].
Ensemble empirical mode decomposition (EEMD), which decomposes signals into intrinsic mode functions (IMFs)[24], was used to isolate the frequency information in the training set. We developed and validated the use of scalp EEG data and current density localization for intended movement direction analysis. Subsequently, we evaluated feature classification strategies suitable for distinguishing the brain activity associated with intended hand movement. Variations in electrode impedance across recording locations and recording times may drastically affect the amplitude and signal-to-noise ratio (SNR) of the EEG signals. To address this, we proposed and evaluated a scaling factor based on the “signature” EEG signal that follows the presentation of the visual cues. We hypothesized that such a scaling factor can compensate for electrode impedance differences between trials and across recording locations. Our preliminary results indicate that including this scaling step significantly improves the overall single-trial classification accuracy: in a two-class decoding scheme, accuracy improved from approximately 60% to over 90%. This work could directly improve the acceptance of BCI neuroprosthetic applications, because the device would operate on the user’s intent, providing a more intuitive control paradigm for simple devices with few DoF.

2. Methods

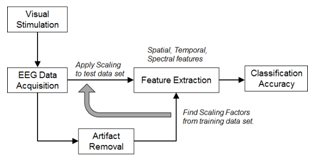

- The experimental procedure for investigating motor intention with the targeted BCI is shown in Figure 1. It comprises a visual stimulation system, an EEG data acquisition system, a signal pre-processing unit, an artifact removal algorithm, a feature extraction method, and a signal classifier. Details of each step are given in the following subsections. The method for computing the visual-cue-based scaling factor is described in the feature extraction subsection (Section 2.5).

2.1. Visual Stimulation and EEG Data Acquisition

2.1.1. Participants

- Eight able-bodied participants with normal or corrected-to-normal vision (6 males and 2 females, aged 19–29, all right-handed) were recruited for this study. None of the subjects had prior experience with BCIs or a history of neurological disorders. The protocol was approved by the Institutional Review Board for Human Use (IRB) at Louisiana Tech University. All participants read and signed an informed consent form.

2.1.2. Task and Stimuli

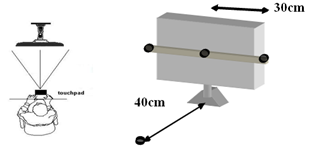

2.1.3. Apparatus

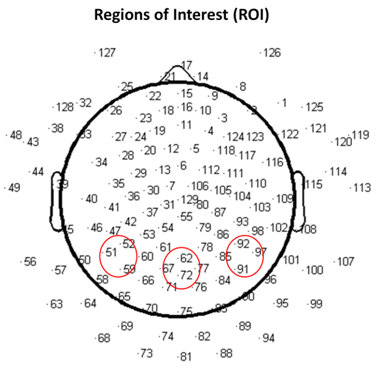

- The event-related potential (ERP) signals were recorded using a 128-channel HydroCel Geodesic Sensor Net (Electrical Geodesics Inc., Eugene, OR) with Net Station 5.3 software. Figure 4 shows the electrode placement viewed from the top of the head and the regions of interest around the PPC. All signals were low-pass filtered at 100 Hz for anti-aliasing and digitized at a sampling rate of 256 Hz.

2.2. Signal Pre-processing and Artifact Removal

- The EEG data were digitally band-pass filtered between 0.1 and 30 Hz. Since this project focused only on motor intention prior to any actual movement, trials for all three effectors were pooled in the analysis. The data were separated into three groups (left, right, or forward) based on the “Direction cues”. Bad channels resulting from poor skin contact, eye blinks, eye movements, or muscle activity were detected from their characteristic signal features and abnormal amplitudes, and were replaced by the average of neighboring channels using built-in Net Station functions. Only artifact-free epochs (amplitude < 50 μV) were used for further analysis. The data were then re-referenced to the average of all 128 electrodes, and the 100 ms preceding the onset of each trial was used for baseline correction.
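The pre-processing chain above can be sketched in Python with NumPy and SciPy. This is a minimal illustration, not the Net Station pipeline used in the study. The fourth-order zero-phase Butterworth band-pass, the 600 ms post-cue epoch length, and the function name `preprocess` are assumptions, and bad-channel interpolation is omitted.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 256.0  # sampling rate reported in Section 2.1.3 (Hz)


def preprocess(raw, onsets, fs=FS, pre_ms=100.0, post_ms=600.0,
               band=(0.1, 30.0), reject_uv=50.0):
    """Band-pass, epoch, reject, re-reference, and baseline-correct EEG.

    raw: continuous EEG, shape (n_channels, n_samples), in microvolts.
    onsets: sample indices of cue onsets; each must leave room for the window.
    Returns (epochs, kept): epochs has shape (n_kept, n_channels, n_pre + n_post)
    and kept is a boolean mask over the input onsets.
    """
    # Zero-phase Butterworth band-pass on the continuous recording
    # (filter family and order are assumptions; only the band is given).
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, raw, axis=-1)

    # Cut epochs from pre_ms before to post_ms after each onset.
    n_pre = int(round(pre_ms * fs / 1000.0))
    n_post = int(round(post_ms * fs / 1000.0))
    epochs = np.stack([x[:, t - n_pre:t + n_post] for t in onsets])

    # Keep only epochs whose absolute amplitude stays below the threshold.
    kept = np.abs(epochs).max(axis=(1, 2)) < reject_uv
    epochs = epochs[kept]

    # Re-reference every sample to the average over all channels.
    epochs = epochs - epochs.mean(axis=1, keepdims=True)

    # Baseline correction: subtract each channel's mean over the pre-onset interval.
    epochs = epochs - epochs[..., :n_pre].mean(axis=-1, keepdims=True)
    return epochs, kept
```

Filtering the continuous recording before epoching avoids running a 0.1 Hz high-pass over sub-second segments, where its long transient would dominate.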

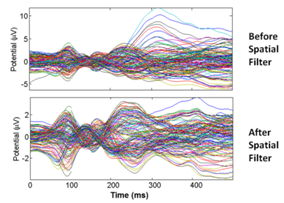

2.3. Offline Source Localization Validation

- Source localization was performed offline to validate that the brain regions activated in our recorded data are consistent with the literature. The process described in this subsection is not needed in a real-time implementation of the motor intention decoder. Independent component analysis (ICA)[25] was first performed using the extended Infomax-ICA algorithm in the EEGLAB toolbox[26] to find maximally temporally independent signals[27]. Figure 5 shows the signals averaged across all recording trials before and after the removal of eye-motion artifacts. The DIPFIT 2.0 algorithm was then used to estimate the dipole sources of the remaining independent components (ICs) after spatial filtering[26]. The dipoles were projected onto the boundary element model in EEGLAB and then plotted on the average MNI (Montreal Neurological Institute) brain images[28]. The source locations were then specified in the Talairach coordinate system. Because source localization is time-consuming, the dipole locations were not used in the single-trial classification of arm movement direction.
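Removing artifactual ICs amounts to zeroing selected component activations and back-projecting the remaining components to the scalp through the mixing matrix. The sketch below assumes that an unmixing matrix `W` has already been estimated by some ICA routine. The study used extended Infomax in EEGLAB, and that estimation step is not reproduced here. The function name `remove_components` is hypothetical.

```python
import numpy as np


def remove_components(x, unmixing, reject):
    """Back-project all ICs except the rejected ones.

    x: EEG, shape (n_channels, n_samples).
    unmixing: ICA unmixing matrix W, shape (n_components, n_channels).
    reject: indices of artifactual components (e.g. eye blinks).
    """
    sources = unmixing @ x                 # IC activations, one row per component
    mixing = np.linalg.pinv(unmixing)      # columns are the IC scalp maps
    keep = np.setdiff1d(np.arange(unmixing.shape[0]), reject)
    return mixing[:, keep] @ sources[keep]  # scalp data without rejected ICs
```

Passing all but one component in `reject` gives that single IC's scalp projection, which is the kind of back-projection shown for the parietal ICs in the Results.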

2.4. Ensemble Empirical Mode Decomposition

- Ensemble empirical mode decomposition (EEMD) is a data-driven analysis method that separates a signal into a collection of intrinsic mode functions (IMFs). It is a powerful approach for analyzing nonlinear, non-stationary EEG signals because it relies only on the local characteristic time scales of the data[29-31]. Unlike traditional band-pass filters, EEMD decomposes the signal empirically, based strictly on its own characteristics, without specifying any frequency bands[32]. The mode-mixing problem of the original empirical mode decomposition (EMD) can be resolved in EEMD by adding white noise, which provides a uniformly distributed reference frame[33]. The EEMD procedure is described in detail in[29] and is not repeated here.
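For readers who want a concrete picture of EEMD, here is a simplified sketch. It is not the reference implementation of Wu and Huang[29]. It uses a fixed number of sifting passes instead of a formal stopping criterion and pins both spline envelopes to the end samples as crude boundary handling. The ensemble size (50) and noise amplitude (0.2 × the signal standard deviation) are assumed defaults.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def _envelope_mean(h, t):
    """Mean of the cubic-spline envelopes through the local maxima and minima,
    or None when h has too few extrema to be sifted further."""
    imax = argrelextrema(h, np.greater)[0]
    imin = argrelextrema(h, np.less)[0]
    if imax.size < 2 or imin.size < 2:
        return None
    # Crude boundary handling: pin both envelopes to the first and last samples.
    imax = np.r_[0, imax, h.size - 1]
    imin = np.r_[0, imin, h.size - 1]
    upper = CubicSpline(t[imax], h[imax])(t)
    lower = CubicSpline(t[imin], h[imin])(t)
    return 0.5 * (upper + lower)


def emd(x, max_imfs=6, n_sift=10):
    """Plain EMD with a fixed number of sifting passes per IMF.
    Returns (imfs, residue); unused IMF rows stay zero."""
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size, dtype=float)
    residue = x.copy()
    imfs = np.zeros((max_imfs, x.size))
    for k in range(max_imfs):
        if _envelope_mean(residue, t) is None:  # residue is close to a trend
            break
        h = residue.copy()
        for _ in range(n_sift):
            m = _envelope_mean(h, t)
            if m is None:
                break
            h -= m  # remove the local mean to approach a zero-mean oscillation
        imfs[k] = h
        residue -= h
    return imfs, residue


def eemd(x, n_ensemble=50, noise_ratio=0.2, max_imfs=6, seed=0):
    """Ensemble EMD: average the IMFs of white-noise-perturbed copies of x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    sigma = noise_ratio * x.std()
    acc = np.zeros((max_imfs, x.size))
    for _ in range(n_ensemble):
        imfs, _ = emd(x + sigma * rng.standard_normal(x.size), max_imfs)
        acc += imfs
    return acc / n_ensemble
```

Because each IMF is subtracted from the residue, `imfs.sum(axis=0) + residue` reconstructs the input of `emd` exactly. The ensemble average in `eemd` reconstructs it only approximately, since the added noise cancels only as the ensemble grows.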

2.5. Feature Extraction and Signal Classification

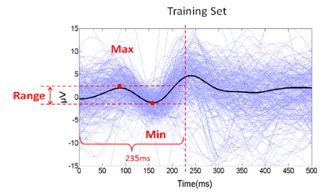

- Signal classifiers were created using the Statistical Pattern Recognition Toolbox for Matlab[34] to decode the EEG signal features. A two-class analysis (left versus right) was performed using a Fisher Linear Discriminant (FLD) binary classifier in a 5×5-fold cross-validation procedure. Eighty percent (80%) of the data for each direction was randomly chosen as the training set, and the remaining 20% was assigned to the testing set. The “signature” signal was computed for each region of interest (ROI) near the PPC (see Figure 4) from the training set of each cross-validation run. In this study, the averaged ERP signal within 235 ms after the presentation of the “Direction cue” is considered the “signature” at each ROI. We observed that, regardless of the intended reaching direction or the effector requested of the subject, the averaged EEG signal within the first 235 ms after the “Direction cue” has a similar profile. Each “signature” consists of a dominant high-delta (0–4 Hz) and a low-theta (4–8 Hz) component. The difference between the local maximum and local minimum of the “signature” at each ROI was used as the scaling factor, and the signal amplitude at each recording site was scaled according to the following equation:

| (1) |
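The exact form of Eq. (1) is not given in the text above, so the sketch below assumes that each ROI's signal is divided by its scaling factor. The factor itself follows the description: the peak-to-peak amplitude (maximum minus minimum) of the training-set average during the first 235 ms after the “Direction cue”. The helper names, the (trials, ROIs, samples) array layout, and the onset index are illustrative assumptions.

```python
import numpy as np

FS = 256.0      # sampling rate from Section 2.1.3 (Hz)
SIG_MS = 235.0  # length of the "signature" window after the Direction cue (ms)


def signature_factor(train_epochs, onset_idx, fs=FS, sig_ms=SIG_MS):
    """Peak-to-peak amplitude of the trial-averaged ERP in the first sig_ms
    after the cue, one value per ROI.

    train_epochs: training epochs, shape (n_trials, n_rois, n_samples).
    onset_idx: sample index of the Direction cue within each epoch.
    """
    n_sig = int(round(sig_ms * fs / 1000.0))
    signature = train_epochs[:, :, onset_idx:onset_idx + n_sig].mean(axis=0)
    return signature.max(axis=1) - signature.min(axis=1)


def apply_scaling(epochs, factor):
    """ASSUMPTION: Eq. (1) is taken to divide each ROI's signal by its factor."""
    return epochs / factor[np.newaxis, :, np.newaxis]
```

Under this assumption, a uniform gain on one ROI (for example, from higher electrode impedance) cancels after scaling. That cancellation is the compensation hypothesized in the Introduction.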

3. Results

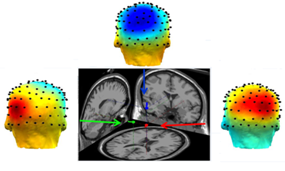

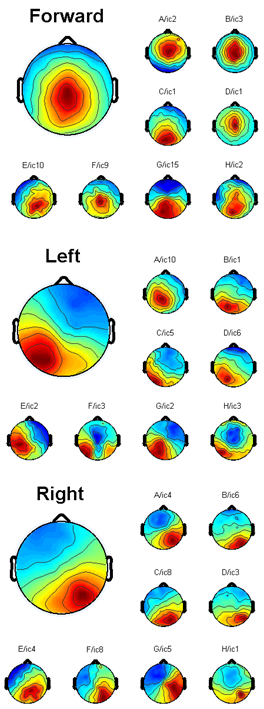

- In Talairach coordinates, the dominant equivalent dipole source for each intended arm-movement direction was located near the PPC in all subjects. Figure 7 shows the output of the EEGLAB plug-in DIPFIT 2.0 for one subject, with the left component at [-20, -40, 24], the forward component at [0, -33, 40], and the right component at [28, -40, 23]. This is consistent with results reported in the literature[12]. The parietal ICs were then back-projected onto the scalp for each subject after artifact removal (Figure 8).

4. Discussion

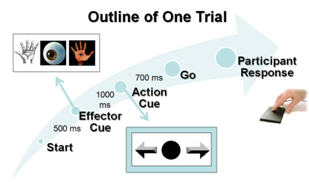

- BCI technology enables people to interact with external devices in new and intuitive ways. As a prosthetic application, it can help people with limited muscle control (such as those with spinal cord injury, stroke, or cerebral palsy) regain some lost motor function. Although the best classification method for BCI is still debated, we developed and validated the use of surface EEG to distinguish brain activity during the planning of intended arm movements. EEG data were recorded from untrained subjects without feedback, and each subject was analyzed independently. Subjects were only instructed to perform the indicated reaching tasks (see Figure 3). In the framework of an upper-limb neuroprosthesis, this paradigm could be implemented directly as part of the prosthetic arm control strategy for activities of daily living (ADL). The spatial, temporal, and spectral features were chosen based on the literature, and we used spatial information near the PPC, as previously reported[15]. The mean EEG signal amplitude 271–310 ms after the “Direction cue” showed the most significant difference between intended reaching directions and yielded the highest classification accuracy. We also proposed a scaling strategy based on the EEG response to the cue: the difference between the maximum and minimum of the “signature” signal 0–235 ms after the “Direction cue” was used as a scaling factor for subsequent single-trial analysis. The early synchronization in the delta (0–4 Hz) and low-theta (4–8 Hz) bands is related to the “Direction cue”. This supports the idea that the early component reflects the processing of visual intention, whereas the alpha band (9–12 Hz) is associated with visual attention[38].
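The decoding step can be sketched in two parts. First, each ROI is averaged over 271–310 ms after the cue. Second, a two-class Fisher linear discriminant is evaluated under 5×5-fold cross-validation, taken here as five repeats of a stratified 5-fold split, so each fold trains on 80% and tests on 20% of each direction. This NumPy re-implementation stands in for the Statistical Pattern Recognition Toolbox classifier used in the study. The small ridge term, the function names, and the stratification scheme are assumptions, and the per-fold recomputation of the “signature” scaling factor is left out for brevity.

```python
import numpy as np

FS = 256.0  # sampling rate (Hz), Section 2.1.3


def window_mean(epochs, onset_idx, fs=FS, win_ms=(271.0, 310.0)):
    """Mean amplitude per ROI between win_ms[0] and win_ms[1] after the cue.
    epochs: (n_trials, n_rois, n_samples) -> features: (n_trials, n_rois)."""
    a = onset_idx + int(round(win_ms[0] * fs / 1000.0))
    b = onset_idx + int(round(win_ms[1] * fs / 1000.0))
    return epochs[:, :, a:b + 1].mean(axis=2)


def fld_fit(X, y):
    """Two-class Fisher discriminant: w = Sw^-1 (mu1 - mu0), with the decision
    threshold midway between the projected class means."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    D0, D1 = X0 - mu0, X1 - mu1
    sw = D0.T @ D0 + D1.T @ D1  # within-class scatter matrix
    # Tiny ridge (assumption) keeps the solve stable with few trials.
    sw += 1e-6 * np.trace(sw) / sw.shape[0] * np.eye(sw.shape[0])
    w = np.linalg.solve(sw, mu1 - mu0)
    b = -0.5 * w @ (mu0 + mu1)
    return w, b


def fld_predict(X, w, b):
    """Label 1 when the projection lies on the class-1 side of the threshold."""
    return (X @ w + b > 0).astype(int)


def repeated_kfold(X, y, n_repeats=5, n_folds=5, seed=0):
    """Repeated stratified k-fold CV; returns mean and std of fold accuracies."""
    rng = np.random.default_rng(seed)
    accs = []
    for _ in range(n_repeats):
        # Deal each class's shuffled trials round-robin into the folds.
        fold = np.empty(y.size, dtype=int)
        for c in np.unique(y):
            idx = rng.permutation(np.flatnonzero(y == c))
            fold[idx] = np.arange(idx.size) % n_folds
        for k in range(n_folds):
            test = fold == k
            w, b = fld_fit(X[~test], y[~test])
            accs.append(np.mean(fld_predict(X[test], w, b) == y[test]))
    return float(np.mean(accs)), float(np.std(accs))
```

Called on features of shape (trials, ROIs) with labels 0 (left) and 1 (right), `repeated_kfold` returns the mean and standard deviation of the 25 fold accuracies.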
The “signature” signal in this frequency range can be found at several recording electrodes near the ROIs and over the visual cortex during the delayed “Direction cue” period. Our current study did not attempt to distinguish the three effectors. Recently, many studies have reported the classification of saccade motor imagery versus motor execution tasks[21, 39-40]. For amputee subjects, combining motor planning with motor imagery may be a more viable technique for controlling neuroprosthetic devices. The proposed cue-based “signature” scaling factor gave promising results, improving the classification accuracy of intended movement directions. To test this scaling strategy in more realistic situations, it could be extended in the future to a setup without visual cues (voluntary movement), in which subjects choose the reaching destination without target-specific stimulation. The “signatures” in such situations would be internally triggered and possibly dominated by a slightly different frequency component.
A non-invasive mobile prosthetic platform using wireless dry electrodes and wearable EEG systems would be beneficial in real-world operating environments[41-42]. Before a real-time BCI system can be implemented, dedicated hardware platforms and software need to be developed. The three main advantages of an EEG recording system coupled with a real-time signal-processing platform are low cost, easy customization, and intuitive operation. Communication software between the EEG recording devices and the signal-processing platform must be designed to operate close to real time. A further requirement is a simple training protocol for rehabilitation purposes. More work is needed to understand how changes in attention and intention may affect EEG signals. Future studies may also need to decode continuous angular direction rather than the current discrete directions.
Participants with motor disabilities will be recruited to provide more conclusive evidence of the advantages of the proposed “signature” scaling and classification algorithms.

5. Conclusions

- Although surface EEG signals carry limited information about complex arm movements, we demonstrated that EEG signals can be used to decode the direction of reaching tasks during the planning stage. Experiments were designed to provide visual cues to guide the users’ motor imagery or arm movements. ICA and EEMD were effective for artifact removal. A cue-based scaling strategy was developed to adjust the EEG signal amplitude near the PPC regions. Temporal information 271–310 ms after the presentation of the visual cues was found to hold the most discriminative features. This work has direct applications in devices driven by EEG signals of user intent. In addition, combining motor intention with motor imagery paradigms could provide more commands for BCI control. The overall single-trial classification accuracy of 93.91 ± 6.09% makes this paradigm promising for non-invasive BCI design in neuromotor prostheses or wheelchair applications.

ACKNOWLEDGEMENTS

- The project described was supported by Grant Number P20RR016456 from the National Institutes of Health (NIH) National Center for Research Resources (NCRR).

References

| [1] | J.R. Wolpaw, N. Birbaumer, D.J. McFarland, G. Pfurtscheller, T.M. Vaughan, “Brain-computer interfaces for communication and control”, Clinical Neurophysiology, vol. 113, pp. 767-791, 2002. |

| [2] | P.S. Hammon, S. Makeig, H. Poizner, E. Todorov, V.R. de Sa, “Predicting Reaching Targets from Human EEG”, IEEE Signal Processing Magazine, vol. 69, pp. 69-77, 2008. |

| [3] | A. Nijholt, D. Tan, “Brain-Computer Interfacing for Intelligent Systems”, IEEE Intelligent Systems, vol. 23, pp. 72-79, 2008. |

| [4] | S. Makeig, K. Gramann, T.P. Jung, T.J. Sejnowski, H. Poizner, “Linking brain, mind and behavior”, International Journal of Psychophysiology, vol. 79, pp. 95-100, 2009. |

| [5] | G. Prasad, P. Herman, D. Coyle, S. McDonough, J. Crosbie, “Applying a brain-computer interface to support motor imagery practice in people with stroke for upper limb recovery: a feasibility study”, Journal of Neuroengineering and Rehabilitation, vol. 7, pp. 60, 2010. |

| [6] | A. Bashashati, M. Fatourechi, R.K. Ward, G.E. Birch, “A survey of signal processing algorithms in brain-computer interfaces based on electrical brain signals”, Journal of Neural Engineering, vol. 4, pp. 32-57, 2007. |

| [7] | Y.T. Wang, Y. Wang, T.P. Jung, “A cell-phone-based brain-computer interface for communication in daily life”, Journal of Neural Engineering, vol. 8, pp. 025018, 2011. |

| [8] | F.C. Sebelius, B.N. Rosen, G.N. Lundborg, “Refined myoelectric control in below-elbow amputees using artificial neural networks and a data glove”, The Journal of Hand Surgery, vol. 30, pp. 780-789, 2005. |

| [9] | J.M. Fontana, A.W.L. Chiu, “Control of Prosthetic Device Using Support Vector Machine Signal Classification Technique”, American Journal of Biomedical Sciences, vol. 1, pp. 336-343, 2009. |

| [10] | M. van Gerven, J. Farquhar, R. Schaefer, R. Vlek, J. Geuze, A. Nijholt, N. Ramsey, P. Haselager, L. Vuurpijl, S. Gielen, P. Desain, “The brain-computer interface cycle”, Journal of Neural Engineering, vol. 6, pp. 041001, 2009. |

| [11] | T.J. Bradberry, R.J. Gentili, J.L. Contreras-Vidal, “Reconstructing three-dimensional hand movements from noninvasive electroencephalographic signals”, Journal of Neuroscience, vol. 30, pp. 3432-3437, 2010. |

| [12] | Y. Wang, S. Makeig, “Predicting Intended Movement Direction Using EEG from Human Posterior Parietal Cortex”, in D.D. Schmorrow et al. (Eds.): Augmented Cognition, HCII 2009, LNAI 5638, pp. 437-446, 2009. |

| [13] | J.N. Mak, Y. Arbel, J.W. Minett, L.M. McCane, B. Yuksel, D. Ryan, D. Thompson, L. Bianchi, D. Erdogmus, “Optimizing the P300-based brain-computer interface: current status, limitations and future directions”, Journal of Neural Engineering, vol. 8, pp. 025003, 2011. |

| [14] | P. Brunner, L. Bianchi, C. Guger, F. Cincotti, G. Schalk, “Current trends in hardware and software for brain-computer interfaces (BCIs)”, Journal of Neural Engineering, vol. 8, pp. 025001, 2011. |

| [15] | R. Quian Quiroga, L.H. Snyder, A.P. Batista, H. Cui, R.A. Andersen, “Movement intention is better predicted than attention in the posterior parietal cortex”, Journal of Neuroscience, vol. 26, pp. 3615-3620, 2006. |

| [16] | H.H. Ehrsson, S. Geyer, E. Naito, “Imagery of voluntary movement of fingers, toes, and tongue activates corresponding body-part-specific motor representations”, Journal of Neurophysiology, vol. 90, pp. 3304-3316, 2003. |

| [17] | M. Naeem, C. Brunner, R. Leeb, B. Graimann, G. Pfurtscheller, “Seperability of four-class motor imagery data using independent components analysis”, Journal of Neural Engineering, vol. 3, pp. 208-216, 2006. |

| [18] | C.F. Pasluosta, A.W.L. Chiu, “Slippage Sensory Feedback and Nonlinear Force Control System for a Low-Cost Prosthetic Hand”, American Journal of Biomedical Sciences, vol. 1, pp. 295-302, 2009. |

| [19] | S. Waldert, H. Preissl, E. Demandt, C. Braun, N. Birbaumer, A. Aertsen, C. Mehring, “Hand movement direction decoded from MEG and EEG”, Journal of Neuroscience, vol. 28, pp. 1000-1008, 2008. |

| [20] | S. Waldert, T. Pistohl, C. Braun, T. Ball, A. Aertsen, C. Mehring, “A review on directional information in neural signals for brain-machine interfaces”, Journal of Physiology - Paris, vol. 103, pp. 244-254, 2009. |

| [21] | L. Holper, M. Wolf, “Single-trial classification of motor imagery differing in task complexity: a functional near-infrared spectroscopy study”, Journal of Neuroengineering and Rehabilitation, vol. 8, pp. 34, 2011. |

| [22] | C. Neuper, R. Scherer, S. Wriessnegger, G. Pfurtscheller, “Motor imagery and action observation: modulation of sensorimotor brain rhythms during mental control of a brain-computer interface”, Clinical Neurophysiology, vol. 120, pp. 239-247, 2009. |

| [23] | G. Pfurtscheller, R. Leeb, C. Keinrath, D. Friedman, C. Neuper, C. Guger, M. Slater, “Walking from thought”, Brain Research, vol. 1071, pp. 145-152, 2006. |

| [24] | Z.X. Zhou, B.K. Wan, D. Ming, H.Z. Qi, “A novel technique for phase synchrony measurement from the complex motor imaginary potential of combined body and limb action”, Journal of Neural Engineering, vol. 7, pp. 046008, 2010. |

| [25] | T.P. Jung, S. Makeig, T-W. Lee, M.J. McKeown, G. Brown, A.J. Bell, T.J. Sejnowski, “Independent component analysis of biomedical signals”, Proc 2nd Int Workshop on Independent Component Analysis and Signal Separation, pp. 633-644, 2000. |

| [26] | A. Delorme, S. Makeig, “EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis”, Journal of Neuroscience Methods, vol. 134, pp. 9-21, 2004. |

| [27] | C.T. Lin, S.A. Chen, T.T. Chiu, H.Z. Lin, L.W. Ko, “Spatial and temporal EEG dynamics of dual-task driving performance”, Journal of Neuroengineering and Rehabilitation, vol. 8, pp. 11, 2011. |

| [28] | R. Oostenveld, T.F. Oostendorp, “Validating the boundary element method for forward and inverse EEG computations in the presence of a hole in the skull”, Human Brain Mapping, vol. 17, pp. 179-192, 2002. |

| [29] | Z. Wu, N.E. Huang, “Ensemble empirical mode decomposition: A noise-assisted data analysis method”, Advances in Adaptive Data Analysis, vol. 1, pp. 1-41, 2009. |

| [30] | N.E. Huang, Z. Shen, S.R. Long, M.C. Wu, H.H. Liu, “The empirical mode decomposition and the Hilbert spectrum for nonlinear and nonstationary time series analysis”, Proceedings of the Royal Society London A, vol. 454, pp. 903-995, 1998. |

| [31] | C.L. Yeh, H.C. Chang, C.H. Wu, P.L. Lee, “Extraction of single-trial cortical beta oscillatory activities in EEG signals using empirical mode decomposition”, Biomedical Engineering Online, vol. 9, pp. 25, 2010. |

| [32] | W.Y. Hsu, “EEG-based motor imagery classification using neuro-fuzzy prediction and wavelet fractal features”, Journal of Neuroscience Methods, vol. 189, pp. 295-302, 2010. |

| [33] | T.Y. Wu, Y.L. Chung, “Misalignment diagnosis of rotating machinery through vibration analysis via the hybrid EEMD and EMD approach”, Smart Materials and Structures, vol. 18, pp. 095004, 2009. |

| [34] | V. Franc, V. Hlavac, “Statistical Pattern Recognition Toolbox for Matlab”, Center for Machine Perception, Czech Technical University, 2004. |

| [35] | M. Congedo, F. Lotte, A. Lecuyer, “Classification of movement intention by spatially filtered electromagnetic inverse solutions”, Physics in Medicine and Biology, vol. 51, pp. 1971-1989, 2006. |

| [36] | P. Flandrin, G. Rilling, P. Goncalves, “Empirical Mode Decomposition as a Filter Bank”, IEEE Signal Processing Letters, vol. 11, pp. 112-114, 2004. |

| [37] | Y. Wang, T.P. Jung, “A collaborative brain-computer interface for improving human performance”, PLoS One, vol. 6, pp. e20422, 2011. |

| [38] | M.S. Treder, A. Bahramisharif, N.M. Schmidt, M.A. van Gerven, B. Blankertz, “Brain-computer interfacing using modulations of alpha activity induced by covert shifts of attention”, Journal of Neuroengineering and Rehabilitation, vol. 8, pp. 24, 2011. |

| [39] | K.J. Miller, G. Schalk, E.E. Fetz, M. den Nijs, J.G. Ojemann, R.P. Rao, “Cortical activity during motor execution, motor imagery, and imagery-based online feedback”, Proceedings of the National Academy of Sciences USA, vol. 107, pp. 4430-4435, 2010. |

| [40] | E. Raffin, J. Mattout, K.T. Reilly, P. Giraux, “Disentangling motor execution from motor imagery with the phantom limb”, Brain, vol. 135, pp. 582-595, 2012. |

| [41] | C.T. Lin, L.W. Ko, J.C. Chiou, J.R. Duann, R.S. Huang, S.F. Liang, T.W. Chiu, T.P. Jung, “Noninvasive Neural Prostheses Using Mobile and Wireless EEG”, Proceedings of the IEEE, vol. 96, pp. 1167-1183, 2008. |

| [42] | C.T. Lin, L.W. Ko, M.H. Chang, J.R. Duann, J.Y. Chen, T.P. Su, T.P. Jung, “Review of wireless and wearable electroencephalogram systems and brain-computer interfaces--a mini-review”, Gerontology, vol. 56, pp. 112-119, 2009. |
