Advances in Computing

p-ISSN: 2163-2944 e-ISSN: 2163-2979

2012; 2(3): 35-41

doi: 10.5923/j.ac.20120203.02

Experiment on Tele-immersive Communication with Tiled Display Wall over Wide Area Network

Yasuo Ebara¹, Yoshitaka Shibata²

¹Central Office for Information Infrastructure, Osaka University, Osaka, 567-0047, Japan

²Faculty of Software and Information Science, Iwate Prefectural University, Iwate, 020-0193, Japan

Correspondence to: Yasuo Ebara, Central Office for Information Infrastructure, Osaka University, Osaka, 567-0047, Japan.

Copyright © 2012 Scientific & Academic Publishing. All Rights Reserved.

In order to support advanced remote collaborative work via WAN, it is important to share various visual contents between remote sites and to convey the presence of remote participants by transmitting high-quality video streams of the real scene. However, large-scale screen systems such as commercial projectors and wide-area monitors have low display resolution, which makes it difficult to display real video streams with sufficient quality. In this paper, we have constructed a tele-immersive environment with a tiled display wall to display real video streams and visual contents at high resolution. In addition, we have constructed an environment that displays higher-quality video on the tiled display wall by transmitting multiple video streams captured by two cameras at the remote site. Using this environment, we have conducted experiments on tele-immersive communication over JGN2plus, a nationwide WAN. The experimental results show that these video streams display a wide area of the remote site at higher quality than a magnified view of video from a single small camera. Moreover, we have carried out realistic display processing of ultra-resolution astronomical observation images and movie data, in order to display in full view visualization contents whose resolution is too high to display on an ordinary PC. As a result, the entire observation image can be viewed across the whole tiled display wall.

Keywords: Tele-immersion, Tiled Display Wall, Remote Communication, High-resolution Visual Contents

1. Introduction

- Remote communication over WANs (Wide Area Networks) has become part of daily life thanks to the rapid development of telecommunication systems and high-speed network technology. To support advanced remote collaborative work via WAN, it is important to share various visual contents, such as photographs and visualization images, and to convey the presence of remote participants by transmitting high-quality video streams of the real scene.

Recently, various PC software for remote communication, such as video-phone applications, has become available to many users, and demand for it tends to increase each year. However, the video window in these applications is small and of low quality, so it is difficult to convey the presence of a participant at a remote site with sufficient image quality.

On the other hand, many high-performance video-conference systems have become popular and are used in a wide range of fields. To convey realistic information about a remote site through video conferencing, a large-scale screen system is effective, and display equipment such as projectors and wide-area monitors can be considered. However, it is difficult to display video streams with sufficient quality because commercial projectors and wide-area monitors have low display resolution. To solve these issues, we consider a large-scale display system combined with tele-immersion technology [1-4] to be effective. In particular, we focus on the tiled display wall, which forms a wide-area screen from two or more LCD panels, as an effective large-scale system for displaying high-resolution visualization contents. In this paper, we construct a tele-immersive environment with a tiled display wall to realize remote collaborative work with real video streams and high-resolution visual contents. In addition, using this environment, we conduct experiments on tele-immersive communication between Osaka University, Kyoto University and Iwate Prefectural University over JGN2plus, a nationwide WAN in Japan.

2. Related Works

2.1. Tele-immersion Technique

- There has been growing interest in tele-immersion because it is expected to contribute to more realistic remote communication on the Internet [1-4]. Tele-immersion was originally defined as the integration of audio and video conferencing, via image-based modelling, with collaborative virtual reality in the context of data mining and significant computation [5]. It enables users at different places to share a single virtual environment. In practical tele-immersion, it is considered necessary that every participant in the communication be able to see any desired part of the image transmitted from a remote site.

Various communication systems using tele-immersion have been reported, including 3-D (three-dimensional) video-conference systems based on 3-D modelling and display techniques [6-13]. Video avatar technology has also been studied to realize real-world-oriented 3-D human images as high-presence tools for communication between remote places [14-15]. However, these systems cannot fully support the face-to-face remote communication we have been working on, because they require an HMD (Head-Mounted Display) to view 3-D images. In addition, 3-D video conferencing is difficult to use in practical environments because these technologies are still at the research and development stage.

Therefore, we consider it important to develop a new technique that presents higher-quality information to participants at remote sites in a simple way, in order to realize highly realistic remote communication. We construct a tele-immersive environment using a tiled display wall, which can display high-resolution and realistic visual contents, to realize advanced remote communication via WAN.

2.2. Tiled Display Wall

- A tiled display wall is a technology for displaying high-resolution contents on a large-scale display built from two or more LCD panels, in order to construct an effective wide-area screen system [16-17]. Many studies have developed tiled display walls and remote displays using distributed rendering techniques. For example, WireGL provides the familiar OpenGL API to each node in a cluster, virtualizing multiple graphics accelerators into a sort-first parallel renderer with a parallel interface [18-19]. It can drive a variety of output devices, from stand-alone displays to tiled display walls. However, it has poor data scalability due to its single-source limitation.

On the other hand, Chromium is a system that provides a generic mechanism for manipulating streams of graphics API commands [20]. It can serve as the underlying mechanism for any cluster-graphics algorithm by having the algorithm use OpenGL to move geometry and imagery across a network as required. In addition, Chromium's DMX extension allows multiple applications to be executed and their windows to be controlled. However, these frameworks have a single source, and their design is not suitable for streaming large amounts of data over WAN.

In this research, we apply SAGE (Scalable Adaptive Graphics Environment) [21-22], developed by the Electronic Visualization Laboratory (EVL), to deliver streaming pixel data from a number of graphical sources to a virtual high-resolution frame buffer on the tiled display wall.

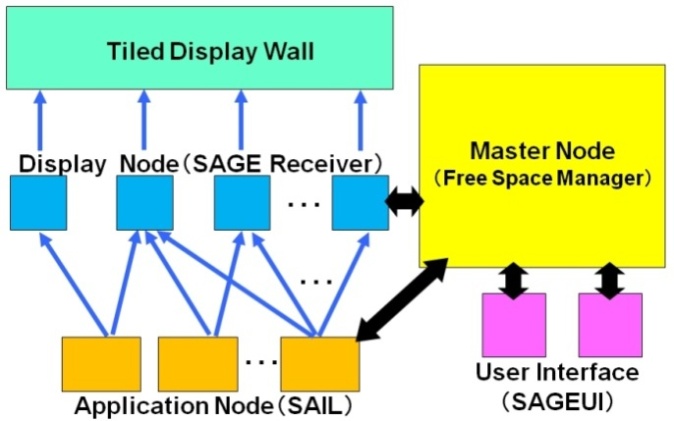

2.3. SAGE

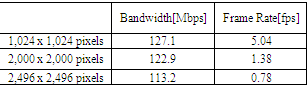

- SAGE is a graphics streaming architecture for supporting collaborative scientific visualization environments with potentially hundreds of megapixels of contiguous display resolution. In collaborative scientific visualization, it is crucial to share high-resolution visualization images as well as high-quality video streams between participants at local or remote sites. The network-centered architecture of SAGE allows collaborators to simultaneously run various applications (such as 3D rendering, remote desktop and video streaming) on local or remote clusters and to share them by streaming each application's pixels over WAN to a large-scale tiled display wall.

SAGE consists of the Free Space Manager (master node), SAIL (SAGE Application Interface Library; application node), SAGE Receivers (display nodes) and SAGEUI (user interface), as shown in Figure 1. The Free Space Manager receives user commands from SAGEUI over WAN and controls pixel streams between SAIL and the SAGE Receivers. SAIL captures an application's output pixels and streams them to the appropriate SAGE Receivers. A SAGE Receiver can receive multiple pixel streams from different applications and displays the streamed pixels on the tiled display wall. SAGEUI sends user commands to control the Free Space Manager and receives messages that inform users of the current status of SAGE.

| Figure 1. SAGE components. [22] |
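The routing of pixel streams from SAIL to SAGE Receivers rests on a geometric step: given where an application window sits on the virtual frame buffer, decide which display tiles receive which part of its pixels. The Python sketch below illustrates that step under simplifying assumptions (equal-size tiles, no bezel compensation). The names `Rect`, `tile_rects` and `route_window` are hypothetical and are not SAGE's actual interface.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in virtual frame-buffer pixels (origin at top-left)."""
    x: int
    y: int
    w: int
    h: int

    def intersect(self, other: "Rect") -> Optional["Rect"]:
        # Overlap of two rectangles, or None if they do not overlap.
        x0, y0 = max(self.x, other.x), max(self.y, other.y)
        x1 = min(self.x + self.w, other.x + other.w)
        y1 = min(self.y + self.h, other.y + other.h)
        if x1 <= x0 or y1 <= y0:
            return None
        return Rect(x0, y0, x1 - x0, y1 - y0)


def tile_rects(cols: int, rows: int, tile_w: int, tile_h: int) -> Dict[Tuple[int, int], Rect]:
    """Region of the virtual frame buffer covered by each (col, row) tile; bezels ignored."""
    return {(c, r): Rect(c * tile_w, r * tile_h, tile_w, tile_h)
            for r in range(rows) for c in range(cols)}


def route_window(window: Rect, tiles: Dict[Tuple[int, int], Rect]) -> Dict[Tuple[int, int], Rect]:
    """For each tile the window overlaps, return the part of the window's pixels
    (in window-local coordinates) that would be streamed to that tile's display node."""
    routes = {}
    for key, tile in tiles.items():
        part = window.intersect(tile)
        if part is not None:
            routes[key] = Rect(part.x - window.x, part.y - window.y, part.w, part.h)
    return routes
```

When a user moves or resizes a window through SAGEUI, only the window rectangle changes, and re-running this intersection gives the new split of the pixel stream among display nodes.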

3. Experimental Environment

3.1. Tiled Display Wall Environment

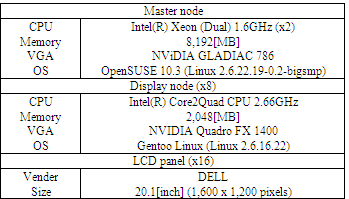

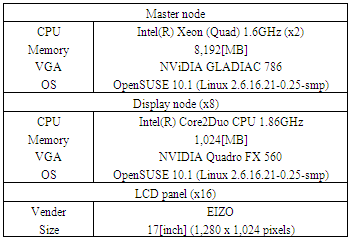

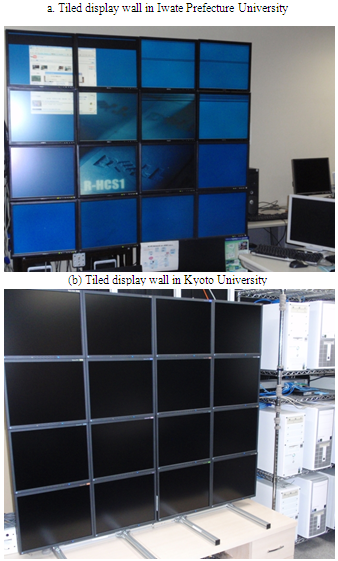

- We constructed two tiled display wall environments, each consisting of 1 master node, 8 display nodes and 16 LCD panels, at Iwate Prefectural University (referred to as Iwate) and Kyoto University (referred to as Kyoto). In each tiled display wall, the LCD panels are arranged in a 4 x 4 array, as shown in Figure 2. The equipment specifications are shown in Tables 1 and 2. The master node and all display nodes are connected by a Gigabit Ethernet network, and 2 LCD panels are connected to each display node with DVI cables. In our environment, we apply SAGE [21-22] as the tiled display wall middleware to deliver streaming pixel data from a number of graphical sources to a virtual high-resolution frame buffer on the tiled display wall.

| Figure 2. Appearance of tiled display wall in each site |
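The node and panel counts above (16 panels in a 4 x 4 array, 2 panels per display node, hence 8 display nodes) can be made concrete with a small layout sketch. It assumes, hypothetically, that each display node drives two horizontally adjacent panels, since the pairing is not stated. The panel resolution is a parameter because the panel specification is not reproduced here; the 1,920 x 1,200 value in the test is purely illustrative. Bezel widths are ignored.

```python
from typing import Dict, List, Tuple


def assign_panels(cols: int = 4, rows: int = 4,
                  panels_per_node: int = 2) -> Dict[int, List[Tuple[int, int]]]:
    """Map display-node index -> (col, row) of the panels it drives.

    Assumption (hypothetical): each node drives horizontally adjacent panels,
    and nodes are numbered row by row from the top-left of the wall.
    """
    if cols % panels_per_node:
        raise ValueError("cols must be a multiple of panels_per_node")
    layout: Dict[int, List[Tuple[int, int]]] = {}
    node = 0
    for r in range(rows):
        for c0 in range(0, cols, panels_per_node):
            layout[node] = [(c, r) for c in range(c0, c0 + panels_per_node)]
            node += 1
    return layout


def wall_resolution(cols: int, rows: int, panel_w: int, panel_h: int) -> Tuple[int, int]:
    """Total pixel resolution of the wall, ignoring bezels."""
    return cols * panel_w, rows * panel_h
```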

| Figure 3. Experimental environment over JGN2plus network |

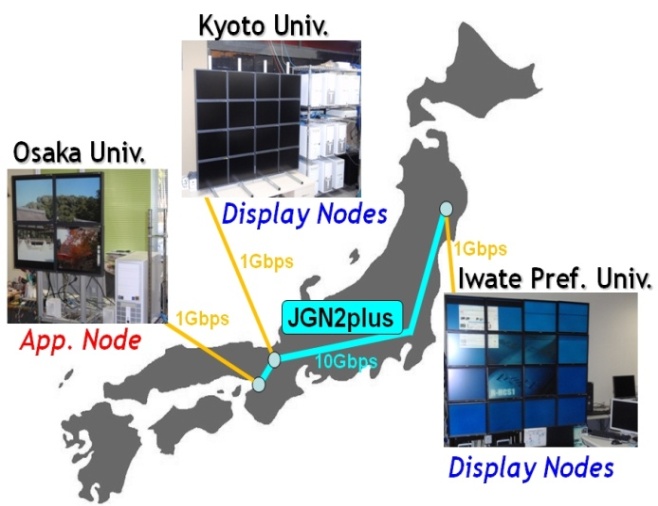

3.2. JGN2plus Network

- In this experimental network, Osaka University (referred to as Osaka), Kyoto and Iwate are connected to the JGN2plus network (10 Gbps) as a WAN. At each site, the tiled display wall environment is connected to the LAN over Gigabit Ethernet (1 Gbps). Figure 3 shows the configuration of the network environment.

JGN2plus is a three-year project, started in April 2008, consisting of the activities and operation of an open testbed network for research and development [23]. JGN2plus has supported the driving activities of NICT (National Institute of Information and Communications Technology) for the development of the New Generation Network. It is also an open testbed network on which researchers can carry out work, field tests and experiments for various applications.
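Since SAGE streams pixel data, the bandwidth of an uncompressed stream follows directly from resolution, frame rate and pixel depth, which helps relate the 1 Gbps site LANs and the 10 Gbps JGN2plus backbone to the camera streams used later. The sketch below computes it. The pixel depth is an assumption, because the pixel format used for streaming is not stated; protocol overhead and any compression are ignored, and the function names are hypothetical.

```python
def stream_mbps(width: int, height: int, fps: float, bytes_per_pixel: int) -> float:
    """Bandwidth in Mbps of an uncompressed pixel stream (protocol overhead ignored)."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1e6


def streams_that_fit(link_mbps: float, per_stream_mbps: float) -> int:
    """How many identical streams fit on a link, ignoring overhead and other traffic."""
    return int(link_mbps // per_stream_mbps)
```

For the 1,024 x 768, 30 fps camera described in Section 4.1, 3 bytes per pixel (24-bit RGB) gives about 566 Mbps per stream and 2 bytes per pixel gives about 377 Mbps. The arithmetic shows why the pixel format matters when several streams share a 1 Gbps site LAN, while the 10 Gbps backbone leaves ample headroom.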


4. Experiments on JGN2plus Network

4.1. Display of Multi-Video Streaming on Tiled Display Wall

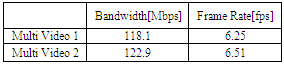

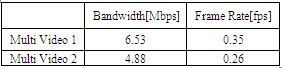

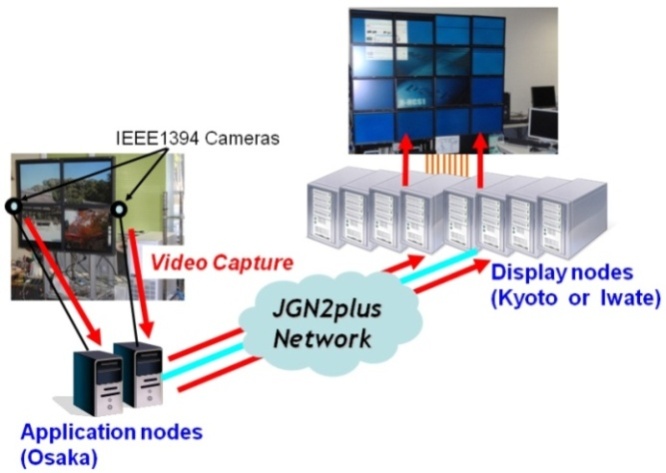

- We have implemented a high-resolution real video streaming application by adding SAIL API code to the application's source program. In the application, pixel data obtained from a small IEEE1394 camera (Pointgrey Flea: 1,024 x 768 pixels, 30 fps) are rendered as a video image with glDrawPixels on the tiled display wall. The video image, captured by a small IEEE1394 camera mounted on the LCD frame of the tiled display wall environment, is transmitted from an application node in Osaka to each display node at the remote sites and displayed on the tiled display wall.

Figure 4 shows an example in which a video image captured by a small IEEE1394 camera is transmitted from Osaka to Iwate via the JGN2plus network and displayed on the tiled display wall in Iwate. The display resolution of the video image is expanded from 1,024 x 768 to 3,072 x 2,304 pixels. The experimental results show that high-resolution video streaming on a tiled display wall is effective for conveying the presence of participants and the ambiance of a remote place.

| Figure 4. Example of display results of transmission video image on tiled display wall (Resolution 3,072 x 2,304 pixels) |

| Figure 5. Configuration of multi-video transmission environment |

| Figure 6. Example of display results of multi-transmission video images on tiled display wall (Each resolution 2,560 x 1,024 pixels). |
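The enlargement from 1,024 x 768 to 3,072 x 2,304 pixels is an exact factor of 3 in each dimension, so the displayed image covers nine times as many pixels as the camera delivers. How the enlargement is implemented is not stated (in OpenGL, `glPixelZoom` is one way to scale `glDrawPixels` output). The Python sketch below only illustrates integer nearest-neighbour scaling, i.e. pixel replication; its function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]  # rows of pixel values (a stand-in for RGB triples)


def scale_factors(src: Tuple[int, int], dst: Tuple[int, int]) -> Tuple[float, float]:
    """Horizontal and vertical enlargement from src=(w, h) to dst=(w, h)."""
    return dst[0] / src[0], dst[1] / src[1]


def upscale_nearest(frame: Sequence[Sequence[int]], factor: int) -> Frame:
    """Integer nearest-neighbour enlargement: each pixel becomes a factor x factor block."""
    out: Frame = []
    for row in frame:
        wide = [px for px in row for _ in range(factor)]  # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(factor))     # repeat each row vertically
    return out
```

Replication enlarges the picture without adding detail, which is consistent with the motivation given in the abstract and conclusions for transmitting two camera streams rather than magnifying the view of a single camera.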


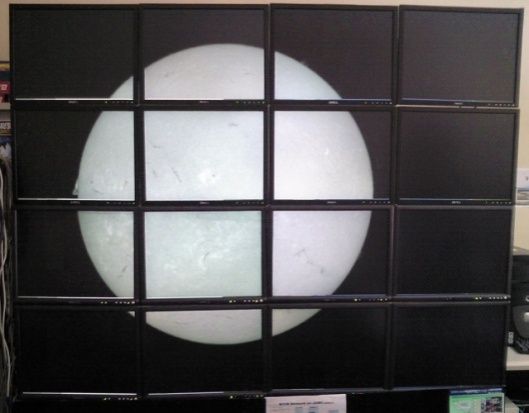

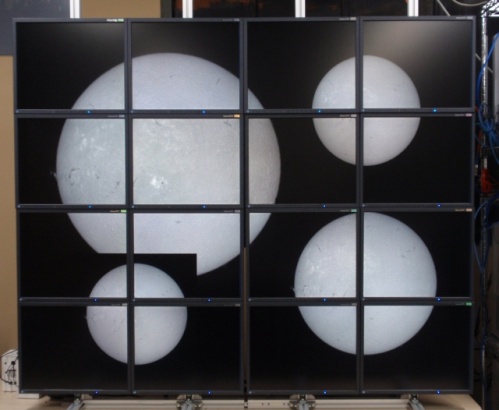

4.2. Realistic Display for Ultra Resolution Astronomical Observation Data

- A tiled display wall has the advantage of being able to display, in full view, ultra-resolution visual contents that are difficult to display on an ordinary PC. As an effective application, we have applied the tiled display wall environment in this research to astronomy, to realize a realistic display of ultra-resolution observation image data. Recently, research on Space Weather Forecast [24] has started at home and abroad to predict the explosive phenomena called flares that occur on the sun's surface and their influence on the global environment. To establish a high-precision forecast model, it is necessary to analyze a large number of such phenomena using ultra-resolution observation image data. We tried realistic display processing of ultra-resolution astronomical observation images and movie data.

In this research, observation data (FITS format, resolution 4,096 x 4,096 pixels) from the Solar Magnetic Activity Research Telescope (SMART), located at the Kwasan and Hida Observatories, Kyoto University, are used as image and movie data. In this experiment, observation data stored in Osaka are transmitted to Iwate and Kyoto via JGN2plus and displayed on the tiled display wall at each site. FITS (Flexible Image Transport System) is generally used as the standard image format for astronomical observation images [25]. It is now used as a universal format for handling ordinary data in astronomy, such as astronomical spectra and X-ray observation event data. In this paper, we have tried a realistic display of FITS image data on the tiled display wall. SAGE includes an image viewer application called ImageViewer, but ImageViewer does not support the FITS format, so in this research we have improved ImageViewer to display FITS images.

An example of astronomical observation data displayed at its native resolution on the tiled display wall is shown in Figure 7. Moreover, Figure 8 shows an example of multiple astronomical observation images displayed on the tiled display wall. These results show that the entire observation image can be viewed across the whole tiled display wall. Since multiple users can observe these data at the same time in this environment, we consider that collaborative work on the observation data may promote new intellectual discoveries. In addition, users can easily change the display position and size of large amounts of image data in this environment. We need to study the possibility of useful analysis of these observation images using various techniques. Therefore, constructing a new environment that fulfils the requirements of researchers in astronomy will be examined in future work.

| Figure 7. Realistic display of astronomical observation image (4,096 x 4,096 pixels). |

| Figure 8. Multi-display of astronomical observation image. |
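The FITS support added to ImageViewer is not described in detail. To illustrate what reading a FITS image for display involves, the following minimal sketch parses the primary header (80-character cards in 2,880-byte blocks, terminated by an END card), decodes the big-endian 2-D image according to BITPIX, applies BZERO and BSCALE, and linearly stretches the result to 8-bit grey levels. It is a hypothetical simplification, not the authors' modification: it handles only a 2-D primary image and simple keyword values, the min-max stretch is an assumed choice, and in practice a library such as `astropy.io.fits` would normally be used.

```python
import struct
from typing import Dict, List, Tuple, Union

BLOCK = 2880  # FITS files are organised in 2,880-byte blocks
CARD = 80     # each header card is 80 ASCII characters
# BITPIX -> struct type code (FITS data are big-endian)
FORMATS = {8: "B", 16: "h", 32: "i", 64: "q", -32: "f", -64: "d"}

Value = Union[bool, int, float, str]


def parse_header(buf: bytes) -> Tuple[Dict[str, Value], int]:
    """Parse the primary header; return (keywords, offset where the data start)."""
    header: Dict[str, Value] = {}
    offset = 0
    while True:
        block = buf[offset:offset + BLOCK]
        if len(block) < BLOCK:
            raise ValueError("truncated FITS header")
        offset += BLOCK
        for i in range(0, BLOCK, CARD):
            card = block[i:i + CARD].decode("ascii")
            key = card[:8].strip()
            if key == "END":
                return header, offset  # data begin at the next block boundary
            if card[8:10] != "= ":
                continue  # COMMENT, HISTORY and blank cards carry no value
            raw = card[10:].strip()
            if raw.startswith("'"):
                header[key] = raw.split("'")[1].rstrip()  # simplified string handling
                continue
            value = raw.split("/")[0].strip()  # drop the trailing comment
            if value in ("T", "F"):
                header[key] = value == "T"
            elif value:
                try:
                    header[key] = int(value)
                except ValueError:
                    try:
                        header[key] = float(value.replace("D", "E"))
                    except ValueError:
                        header[key] = value  # e.g. complex values, kept as text


def read_fits_image(buf: bytes) -> Tuple[Dict[str, Value], List[List[float]]]:
    """Decode a 2-D primary image into rows of physical values."""
    header, offset = parse_header(buf)
    if header.get("NAXIS") != 2:
        raise ValueError("only 2-D primary images are handled in this sketch")
    width, height = int(header["NAXIS1"]), int(header["NAXIS2"])
    code = FORMATS[int(header["BITPIX"])]
    raw = struct.unpack_from(f">{width * height}{code}", buf, offset)
    bzero, bscale = header.get("BZERO", 0), header.get("BSCALE", 1)
    values = [bzero + bscale * v for v in raw]  # physical = BZERO + BSCALE * stored
    # NAXIS1 varies fastest, so consecutive runs of `width` values form rows.
    # By common convention row 0 is the bottom of the image, so a viewer may flip it.
    return header, [values[r * width:(r + 1) * width] for r in range(height)]


def to_8bit(rows: List[List[float]]) -> List[List[int]]:
    """Linear min-max stretch to 0..255 grey levels (one of many possible stretches)."""
    lo = min(min(row) for row in rows)
    hi = max(max(row) for row in rows)
    span = (hi - lo) or 1
    return [[round(255 * (v - lo) / span) for v in row] for row in rows]
```

Pure-Python lists keep the sketch dependency-free but are slow for a 4,096 x 4,096 image; an array library would be the natural choice for real use.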


5. Conclusions

- In this paper, we have constructed a tele-immersive environment with a tiled display wall to realize remote collaborative work with real video streams and high-resolution visual contents, in order to support advanced remote collaborative work via WAN. In addition, we have conducted experiments on tele-immersive communication over the JGN2plus network as a WAN.

In this environment, we have constructed a setup for displaying higher-quality real video on the tiled display wall by transmitting multiple video streams captured by two cameras at the remote site. Using this application, video images captured by two small IEEE1394 cameras are transmitted and displayed side by side on the tiled display wall. The experimental results show that these video streams display a wide area of the remote site at higher quality than a magnified view of video from a single small camera.

Moreover, as an effective application of the tiled display wall environment to other fields, we have tried realistic display processing of ultra-resolution astronomical observation images and movie data for analyzing a large number of phenomena. As a result, the entire observation image can be viewed across the whole tiled display wall.
